
| comments | description | keywords |
|---|---|---|
| true | Learn how to use Roboflow for organizing, labelling, preparing, and hosting your datasets for YOLOv5 models. Enhance your model deployments with our platform. | Ultralytics, YOLOv5, Roboflow, data organization, data labelling, data preparation, model deployment, active learning, machine learning pipeline |

Roboflow Datasets

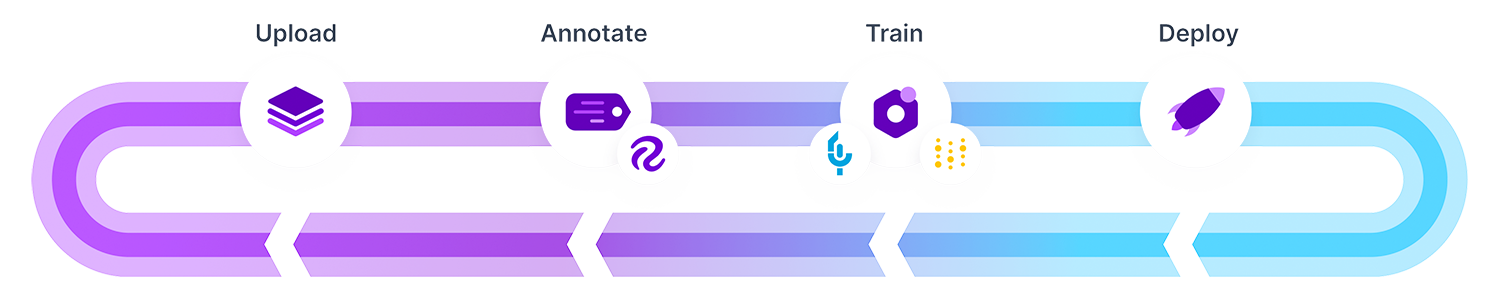

You can now use Roboflow to organize, label, prepare, version, and host your datasets for training YOLOv5 🚀 models. Roboflow is free to use with YOLOv5 if you make your workspace public. UPDATED 7 June 2023.

!!! Warning

Roboflow users can use Ultralytics under the [AGPL license](https://github.com/ultralytics/ultralytics/blob/main/LICENSE) or procure an [Enterprise license](https://ultralytics.com/license) directly from Ultralytics. Be aware that Roboflow does **not** provide Ultralytics licenses, and it is the responsibility of the user to ensure appropriate licensing.

Upload

You can upload your data to Roboflow via the web UI, the REST API, or the Python package.
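For the Python route, a folder of images can be pushed in a simple loop. The sketch below assumes `project` is a Roboflow project object exposing an `upload(image_path=...)` method, as in the `roboflow` package; the helper names `list_images` and `upload_folder` and the extension list are illustrative and not part of any library.

```python
from pathlib import Path

# Image extensions to pick up; adjust to your data (an assumption, not a Roboflow rule).
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def list_images(folder):
    """Return image files found directly inside `folder`, sorted by path."""
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )


def upload_folder(project, folder):
    """Upload every image in `folder` through `project.upload` and return the paths sent.

    `project` is assumed to be the object returned by
    `Roboflow(api_key=...).workspace().project(...)`.
    """
    uploaded = []
    for image_path in list_images(folder):
        project.upload(image_path=str(image_path))  # one call per image
        uploaded.append(image_path)
    return uploaded
```

Because the helper only relies on an `upload` method, it can be exercised with a stand-in object before pointing it at a real workspace.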

Labeling

After uploading data to Roboflow, you can label your data and review previous labels.

Versioning

You can create versions of your dataset with different preprocessing and offline augmentation options. YOLOv5 applies online augmentations natively during training, so be intentional when layering Roboflow's offline augmentations on top.
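One way to be intentional is to list which online augmentations are active before choosing offline ones. The sketch below uses hyperparameter key names as they appear in YOLOv5 hyperparameter YAML files, with illustrative values; the offline option labels and the overlap mapping are hypothetical names chosen for this example, not Roboflow identifiers.

```python
# Illustrative online-augmentation settings using YOLOv5 hyperparameter key names.
# The values are examples only; read the real ones from the hyp YAML you train with.
ONLINE_HYP = {
    "hsv_h": 0.015,   # hue jitter
    "hsv_s": 0.7,     # saturation jitter
    "hsv_v": 0.4,     # brightness (value) jitter
    "degrees": 0.0,   # rotation
    "shear": 0.0,
    "flipud": 0.0,    # vertical flip probability
    "fliplr": 0.5,    # horizontal flip probability
    "mosaic": 1.0,    # mosaic probability
}

# Hypothetical mapping: offline augmentation label -> YOLOv5 keys with a similar effect.
OVERLAPS = {
    "horizontal_flip": ["fliplr"],
    "vertical_flip": ["flipud"],
    "rotation": ["degrees"],
    "shear": ["shear"],
    "hue": ["hsv_h"],
    "saturation": ["hsv_s"],
    "brightness": ["hsv_v"],
    "mosaic": ["mosaic"],
}


def active_online(hyp):
    """Return the hyperparameter keys whose augmentation is switched on (value > 0)."""
    return sorted(key for key, value in hyp.items() if value > 0)


def doubled_up(offline_choices, hyp):
    """Return the offline choices that repeat an augmentation already applied online."""
    active = set(active_online(hyp))
    return [c for c in offline_choices if active.intersection(OVERLAPS.get(c, []))]
```

With the example values, `doubled_up(["horizontal_flip", "rotation", "blur"], ONLINE_HYP)` flags only `horizontal_flip`, since rotation is off online and blur has no online counterpart in the mapping.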

Exporting Data

You can download your data in YOLOv5 format to quickly begin training.

from roboflow import Roboflow

rf = Roboflow(api_key="YOUR API KEY HERE")

project = rf.workspace().project("YOUR PROJECT")

dataset = project.version("YOUR VERSION").download("yolov5")
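Before training, it can help to sanity-check the download. The sketch below assumes the export follows the usual YOLOv5 detection layout, where each split directory holds `images/` and `labels/` subfolders and every label line reads `class x_center y_center width height` with coordinates normalized to the range 0 to 1; the helper names are illustrative.

```python
from pathlib import Path


def missing_labels(split_dir):
    """Return names of images in `split_dir/images` with no matching .txt in `split_dir/labels`."""
    split = Path(split_dir)
    label_stems = {p.stem for p in (split / "labels").glob("*.txt")}
    return sorted(
        p.name for p in (split / "images").iterdir()
        if p.is_file() and p.stem not in label_stems
    )


def is_valid_label_line(line):
    """Check one detection label line: a non-negative integer class id plus four floats in [0, 1]."""
    parts = line.split()
    if len(parts) != 5:
        return False
    try:
        class_id = int(parts[0])
        coords = [float(x) for x in parts[1:]]
    except ValueError:
        return False
    return class_id >= 0 and all(0.0 <= c <= 1.0 for c in coords)
```

With the `dataset` object from the download call, `missing_labels(Path(dataset.location) / "train")` would check the training split, assuming `dataset.location` points at the extracted folder. An image without a label file is not necessarily an error, since YOLOv5 treats such images as background.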

Custom Training

We have released a custom training tutorial demonstrating all of the above capabilities. You can access the code here:
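Independently of the tutorial, a training run on an exported dataset can be launched with YOLOv5's `train.py`. The sketch below only assembles the command. It assumes the command is run from a clone of the YOLOv5 repository and that the export contains a `data.yaml` at its root; the function name and default values are illustrative.

```python
import sys
from pathlib import Path


def build_train_command(dataset_dir, weights="yolov5s.pt", img_size=640,
                        batch_size=16, epochs=100):
    """Build the argument list for a YOLOv5 training run on an exported dataset."""
    data_yaml = Path(dataset_dir) / "data.yaml"  # assumed location of the dataset config
    return [
        sys.executable, "train.py",
        "--img", str(img_size),
        "--batch-size", str(batch_size),
        "--epochs", str(epochs),
        "--data", str(data_yaml),
        "--weights", weights,
    ]
```

Passing the returned list to `subprocess.run(cmd, check=True)` from the YOLOv5 repository root would start training.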

Active Learning

The real world is messy, and your model will invariably encounter situations your dataset didn't anticipate. Active learning is an important strategy for iteratively improving your dataset and model. With the Roboflow and YOLOv5 integration, you can quickly improve your model deployments by using a battle-tested machine learning pipeline.
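One common active learning heuristic is to send back the images the deployed model is least sure about. The sketch below assumes predictions arrive as a mapping from image name to a list of detection confidences; that data shape, the thresholds, and the function name are all assumptions made for illustration, not part of the Roboflow or YOLOv5 APIs.

```python
def select_for_relabeling(predictions, low=0.2, high=0.6):
    """Return image names that have at least one detection with confidence in [low, high].

    `predictions` maps an image name to the confidences of its detections.
    Detections in this middle band are neither clear hits nor clear noise,
    which makes their images good candidates for human review.
    """
    selected = []
    for image_name, confidences in predictions.items():
        if any(low <= c <= high for c in confidences):
            selected.append(image_name)
    return sorted(selected)
```

The selected images could then be uploaded back to the project, labeled, and included in the next dataset version.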