commit

fea03cf22d

501 changed files with 25367 additions and 12463 deletions

@ -0,0 +1,49 @@ |

||||

# Ultralytics YOLO 🚀, AGPL-3.0 license |

||||

# Builds ultralytics/ultralytics:latest-cpu image on DockerHub https://hub.docker.com/r/ultralytics/ultralytics |

||||

# Image is CPU-optimized for ONNX, OpenVINO and PyTorch YOLOv8 deployments |

||||

|

||||

# Use the official Python 3.10 slim-bookworm as base image |

||||

FROM python:3.10-slim-bookworm |

||||

|

||||

# Downloads to user config dir |

||||

ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/ |

||||

|

||||

# Install linux packages |

||||

# g++ required to build 'tflite_support' and 'lap' packages, libusb-1.0-0 required for 'tflite_support' package |

||||

RUN apt update \ |

||||

&& apt install --no-install-recommends -y python3-pip git zip curl htop libgl1-mesa-glx libglib2.0-0 libpython3-dev gnupg g++ libusb-1.0-0 |

||||

# RUN alias python=python3 |

||||

|

||||

# Create working directory |

||||

WORKDIR /usr/src/ultralytics |

||||

|

||||

# Copy contents |

||||

# COPY . /usr/src/app (issues as not a .git directory) |

||||

RUN git clone https://github.com/ultralytics/ultralytics /usr/src/ultralytics |

||||

ADD https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt /usr/src/ultralytics/ |

||||

|

||||

# Remove python3.11/EXTERNALLY-MANAGED or use 'pip install --break-system-packages' avoid 'externally-managed-environment' Ubuntu nightly error |

||||

# RUN rm -rf /usr/lib/python3.11/EXTERNALLY-MANAGED |

||||

|

||||

# Install pip packages |

||||

RUN python3 -m pip install --upgrade pip wheel |

||||

RUN pip install --no-cache -e ".[export]" thop --extra-index-url https://download.pytorch.org/whl/cpu |

||||

|

||||

# Run exports to AutoInstall packages |

||||

RUN yolo export model=tmp/yolov8n.pt format=edgetpu imgsz=32 |

||||

RUN yolo export model=tmp/yolov8n.pt format=ncnn imgsz=32 |

||||

# Requires <= Python 3.10, bug with paddlepaddle==2.5.0 |

||||

RUN pip install --no-cache paddlepaddle==2.4.2 x2paddle |

||||

# Remove exported models |

||||

RUN rm -rf tmp |

||||

|

||||

# Usage Examples ------------------------------------------------------------------------------------------------------- |

||||

|

||||

# Build and Push |

||||

# t=ultralytics/ultralytics:latest-python && sudo docker build -f docker/Dockerfile-python -t $t . && sudo docker push $t |

||||

|

||||

# Run |

||||

# t=ultralytics/ultralytics:latest-python && sudo docker run -it --ipc=host $t |

||||

|

||||

# Pull and Run with local volume mounted |

||||

# t=ultralytics/ultralytics:latest-python && sudo docker pull $t && sudo docker run -it --ipc=host -v "$(pwd)"/datasets:/usr/src/datasets $t |

||||

@ -1 +1 @@ |

||||

docs.ultralytics.com |

||||

docs.ultralytics.com |

||||

|

||||

@ -1,28 +1,26 @@ |

||||

# Security Policy |

||||

--- |

||||

description: Discover how Ultralytics ensures the safety of user data and systems. Check out the measures we have implemented, including Snyk and GitHub CodeQL Scanning. |

||||

keywords: Ultralytics, Security Policy, data security, open-source projects, Snyk scanning, CodeQL scanning, vulnerability detection, threat prevention |

||||

--- |

||||

|

||||

At [Ultralytics](https://ultralytics.com), the security of our users' data and systems is of utmost importance. To |

||||

ensure the safety and security of our [open-source projects](https://github.com/ultralytics), we have implemented |

||||

several measures to detect and prevent security vulnerabilities. |

||||

# Security Policy |

||||

|

||||

[](https://snyk.io/advisor/python/ultralytics) |

||||

At [Ultralytics](https://ultralytics.com), the security of our users' data and systems is of utmost importance. To ensure the safety and security of our [open-source projects](https://github.com/ultralytics), we have implemented several measures to detect and prevent security vulnerabilities. |

||||

|

||||

## Snyk Scanning |

||||

|

||||

We use [Snyk](https://snyk.io/advisor/python/ultralytics) to regularly scan the YOLOv8 repository for vulnerabilities |

||||

and security issues. Our goal is to identify and remediate any potential threats as soon as possible, to minimize any |

||||

risks to our users. |

||||

We use [Snyk](https://snyk.io/advisor/python/ultralytics) to regularly scan all Ultralytics repositories for vulnerabilities and security issues. Our goal is to identify and remediate any potential threats as soon as possible, to minimize any risks to our users. |

||||

|

||||

[](https://snyk.io/advisor/python/ultralytics) |

||||

|

||||

## GitHub CodeQL Scanning |

||||

|

||||

In addition to our Snyk scans, we also use |

||||

GitHub's [CodeQL](https://docs.github.com/en/code-security/code-scanning/automatically-scanning-your-code-for-vulnerabilities-and-errors/about-code-scanning-with-codeql) |

||||

scans to proactively identify and address security vulnerabilities. |

||||

In addition to our Snyk scans, we also use GitHub's [CodeQL](https://docs.github.com/en/code-security/code-scanning/automatically-scanning-your-code-for-vulnerabilities-and-errors/about-code-scanning-with-codeql) scans to proactively identify and address security vulnerabilities across all Ultralytics repositories. |

||||

|

||||

[](https://github.com/ultralytics/ultralytics/actions/workflows/codeql.yaml) |

||||

|

||||

## Reporting Security Issues |

||||

|

||||

If you suspect or discover a security vulnerability in the YOLOv8 repository, please let us know immediately. You can |

||||

reach out to us directly via our [contact form](https://ultralytics.com/contact) or |

||||

via [security@ultralytics.com](mailto:security@ultralytics.com). Our security team will investigate and respond as soon |

||||

as possible. |

||||

If you suspect or discover a security vulnerability in any of our repositories, please let us know immediately. You can reach out to us directly via our [contact form](https://ultralytics.com/contact) or via [security@ultralytics.com](mailto:security@ultralytics.com). Our security team will investigate and respond as soon as possible. |

||||

|

||||

We appreciate your help in keeping the YOLOv8 repository secure and safe for everyone. |

||||

We appreciate your help in keeping all Ultralytics open-source projects secure and safe for everyone. |

||||

|

||||

@ -0,0 +1,81 @@ |

||||

--- |

||||

comments: true |

||||

description: Learn about the Caltech-101 dataset, its structure and uses in machine learning. Includes instructions to train a YOLO model using this dataset. |

||||

keywords: Caltech-101, dataset, YOLO training, machine learning, object recognition, ultralytics |

||||

--- |

||||

|

||||

# Caltech-101 Dataset |

||||

|

||||

The [Caltech-101](https://data.caltech.edu/records/mzrjq-6wc02) dataset is a widely used dataset for object recognition tasks, containing around 9,000 images from 101 object categories. The categories were chosen to reflect a variety of real-world objects, and the images themselves were carefully selected and annotated to provide a challenging benchmark for object recognition algorithms. |

||||

|

||||

## Key Features |

||||

|

||||

- The Caltech-101 dataset comprises around 9,000 color images divided into 101 categories. |

||||

- The categories encompass a wide variety of objects, including animals, vehicles, household items, and people. |

||||

- The number of images per category varies, with about 40 to 800 images in each category. |

||||

- Images are of variable sizes, with most images being medium resolution. |

||||

- Caltech-101 is widely used for training and testing in the field of machine learning, particularly for object recognition tasks. |

||||

|

||||

## Dataset Structure |

||||

|

||||

Unlike many other datasets, the Caltech-101 dataset is not formally split into training and testing sets. Users typically create their own splits based on their specific needs. However, a common practice is to use a random subset of images for training (e.g., 30 images per category) and the remaining images for testing. |

||||

|

||||

## Applications |

||||

|

||||

The Caltech-101 dataset is extensively used for training and evaluating deep learning models in object recognition tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. Its wide variety of categories and high-quality images make it an excellent dataset for research and development in the field of machine learning and computer vision. |

||||

|

||||

## Usage |

||||

|

||||

To train a YOLO model on the Caltech-101 dataset for 100 epochs, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page. |

||||

|

||||

!!! example "Train Example" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='caltech101', epochs=100, imgsz=416) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

yolo detect train data=caltech101 model=yolov8n-cls.pt epochs=100 imgsz=416 |

||||

``` |

||||

|

||||

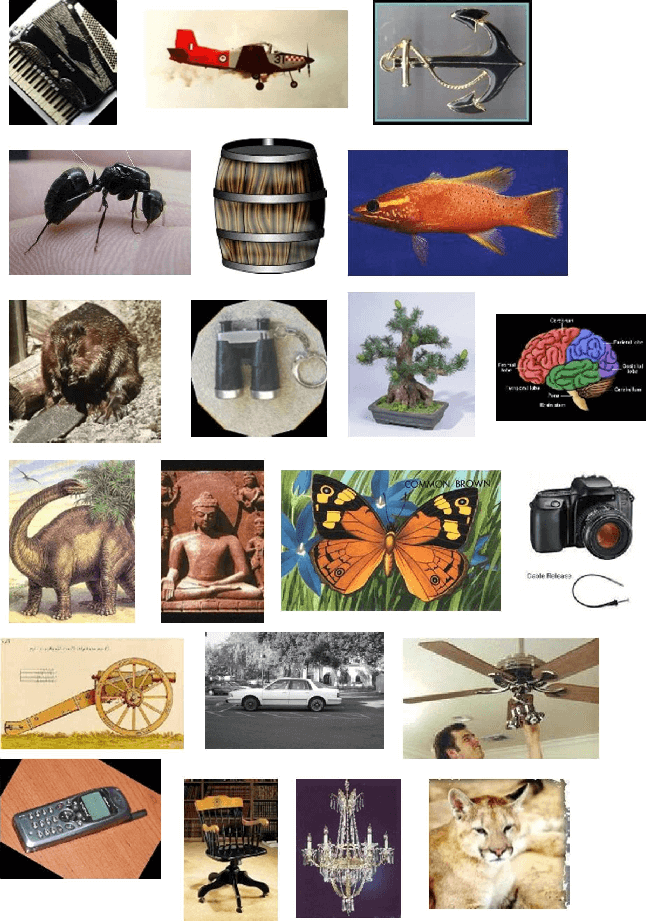

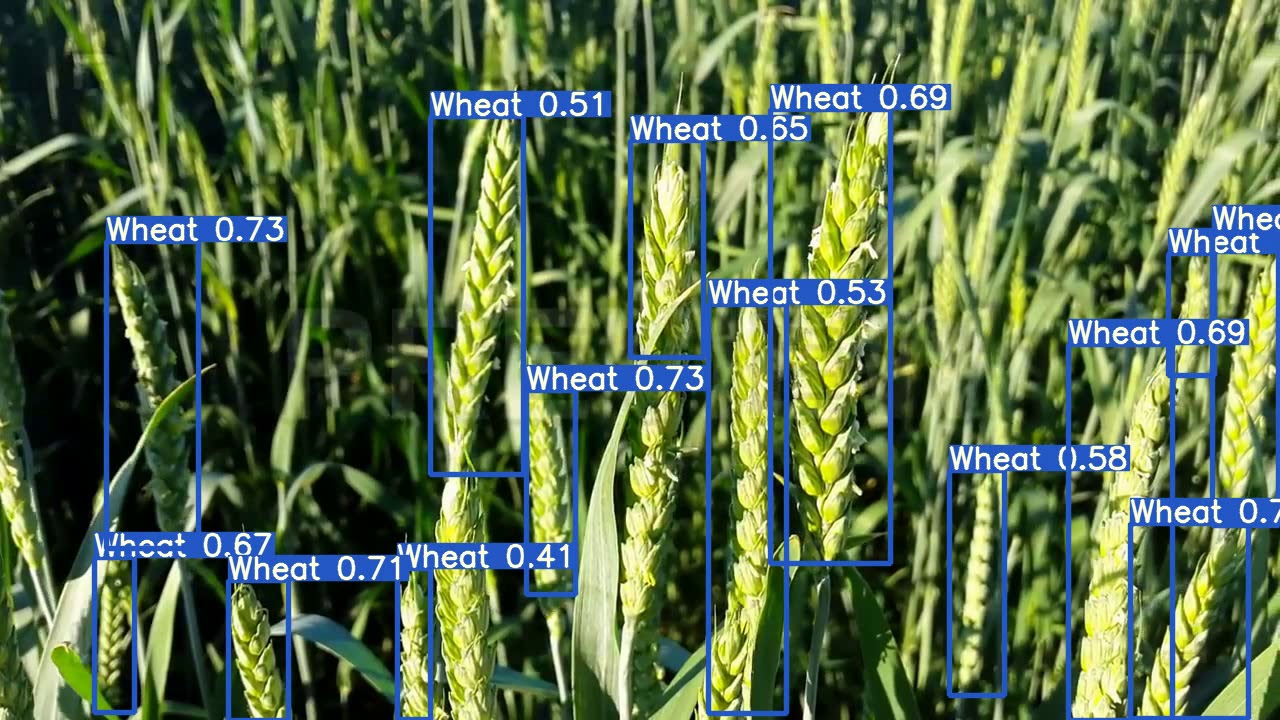

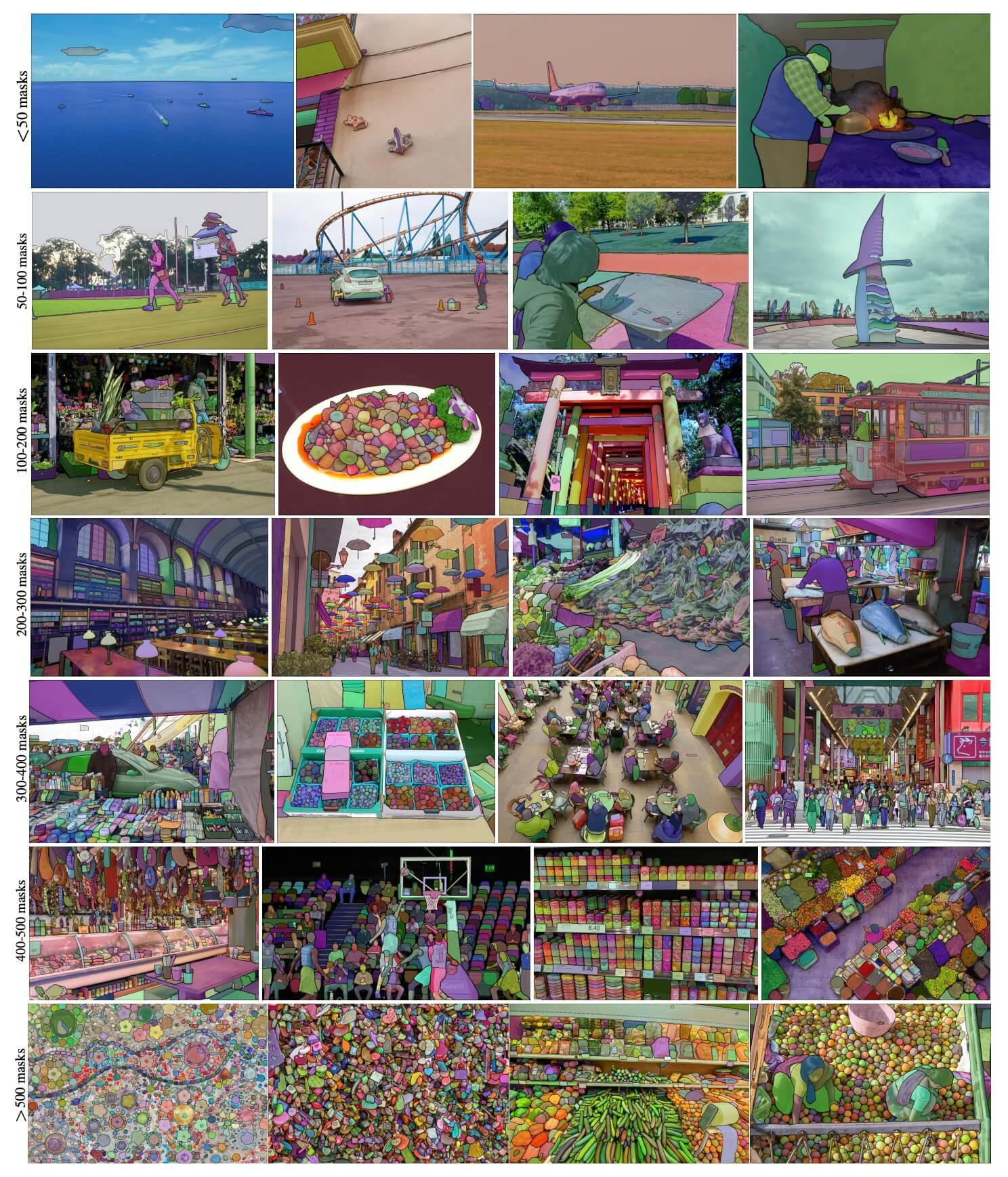

## Sample Images and Annotations |

||||

|

||||

The Caltech-101 dataset contains high-quality color images of various objects, providing a well-structured dataset for object recognition tasks. Here are some examples of images from the dataset: |

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the objects in the Caltech-101 dataset, emphasizing the significance of a diverse dataset for training robust object recognition models. |

||||

|

||||

## Citations and Acknowledgments |

||||

|

||||

If you use the Caltech-101 dataset in your research or development work, please cite the following paper: |

||||

|

||||

!!! note "" |

||||

|

||||

=== "BibTeX" |

||||

|

||||

```bibtex |

||||

@article{fei2007learning, |

||||

title={Learning generative visual models from few training examples: An incremental Bayesian approach tested on 101 object categories}, |

||||

author={Fei-Fei, Li and Fergus, Rob and Perona, Pietro}, |

||||

journal={Computer vision and Image understanding}, |

||||

volume={106}, |

||||

number={1}, |

||||

pages={59--70}, |

||||

year={2007}, |

||||

publisher={Elsevier} |

||||

} |

||||

``` |

||||

|

||||

We would like to acknowledge Li Fei-Fei, Rob Fergus, and Pietro Perona for creating and maintaining the Caltech-101 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the Caltech-101 dataset and its creators, visit the [Caltech-101 dataset website](https://data.caltech.edu/records/mzrjq-6wc02). |

||||

@ -0,0 +1,78 @@ |

||||

--- |

||||

comments: true |

||||

description: Explore the Caltech-256 dataset, a diverse collection of images used for object recognition tasks in machine learning. Learn to train a YOLO model on the dataset. |

||||

keywords: Ultralytics, YOLO, Caltech-256, dataset, object recognition, machine learning, computer vision, deep learning |

||||

--- |

||||

|

||||

# Caltech-256 Dataset |

||||

|

||||

The [Caltech-256](https://data.caltech.edu/records/nyy15-4j048) dataset is an extensive collection of images used for object classification tasks. It contains around 30,000 images divided into 257 categories (256 object categories and 1 background category). The images are carefully curated and annotated to provide a challenging and diverse benchmark for object recognition algorithms. |

||||

|

||||

## Key Features |

||||

|

||||

- The Caltech-256 dataset comprises around 30,000 color images divided into 257 categories. |

||||

- Each category contains a minimum of 80 images. |

||||

- The categories encompass a wide variety of real-world objects, including animals, vehicles, household items, and people. |

||||

- Images are of variable sizes and resolutions. |

||||

- Caltech-256 is widely used for training and testing in the field of machine learning, particularly for object recognition tasks. |

||||

|

||||

## Dataset Structure |

||||

|

||||

Like Caltech-101, the Caltech-256 dataset does not have a formal split between training and testing sets. Users typically create their own splits according to their specific needs. A common practice is to use a random subset of images for training and the remaining images for testing. |

||||

|

||||

## Applications |

||||

|

||||

The Caltech-256 dataset is extensively used for training and evaluating deep learning models in object recognition tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. Its diverse set of categories and high-quality images make it an invaluable dataset for research and development in the field of machine learning and computer vision. |

||||

|

||||

## Usage |

||||

|

||||

To train a YOLO model on the Caltech-256 dataset for 100 epochs, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page. |

||||

|

||||

!!! example "Train Example" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='caltech256', epochs=100, imgsz=416) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

yolo detect train data=caltech256 model=yolov8n-cls.pt epochs=100 imgsz=416 |

||||

``` |

||||

|

||||

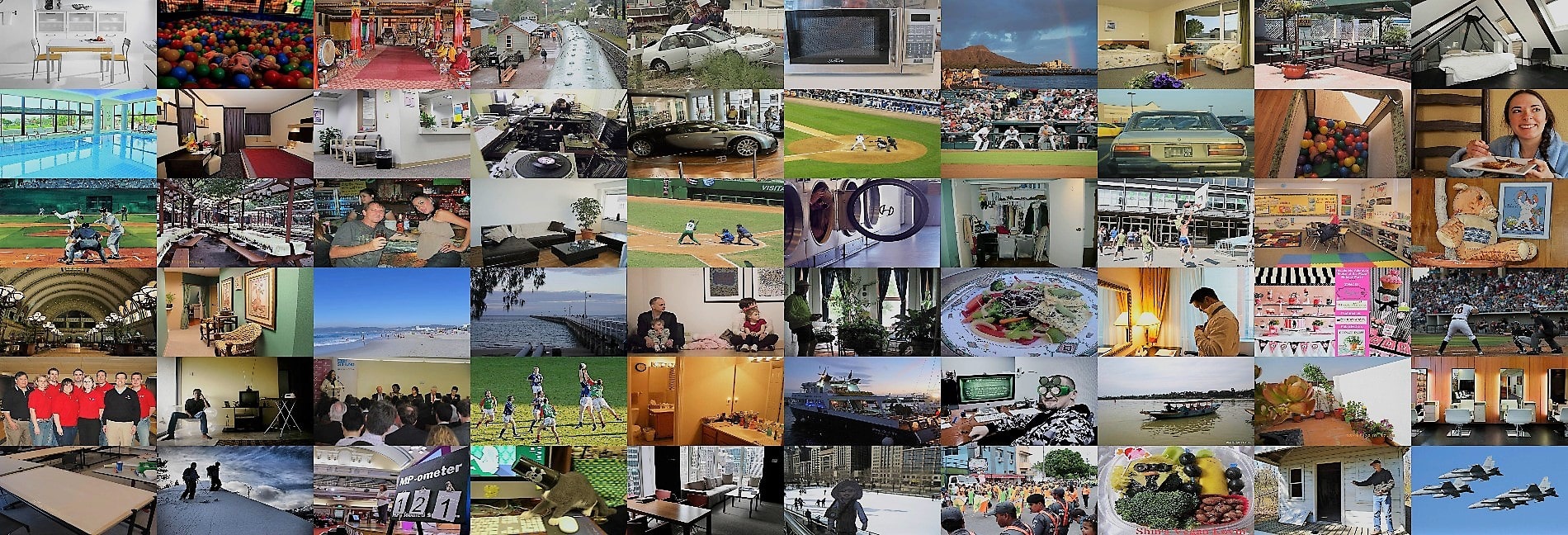

## Sample Images and Annotations |

||||

|

||||

The Caltech-256 dataset contains high-quality color images of various objects, providing a comprehensive dataset for object recognition tasks. Here are some examples of images from the dataset ([credit](https://ml4a.github.io/demos/tsne_viewer.html)): |

||||

|

||||

|

||||

|

||||

The example showcases the diversity and complexity of the objects in the Caltech-256 dataset, emphasizing the importance of a varied dataset for training robust object recognition models. |

||||

|

||||

## Citations and Acknowledgments |

||||

|

||||

If you use the Caltech-256 dataset in your research or development work, please cite the following paper: |

||||

|

||||

!!! note "" |

||||

|

||||

=== "BibTeX" |

||||

|

||||

```bibtex |

||||

@article{griffin2007caltech, |

||||

title={Caltech-256 object category dataset}, |

||||

author={Griffin, Gregory and Holub, Alex and Perona, Pietro}, |

||||

year={2007} |

||||

} |

||||

``` |

||||

|

||||

We would like to acknowledge Gregory Griffin, Alex Holub, and Pietro Perona for creating and maintaining the Caltech-256 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the |

||||

|

||||

Caltech-256 dataset and its creators, visit the [Caltech-256 dataset website](https://data.caltech.edu/records/nyy15-4j048). |

||||

@ -0,0 +1,80 @@ |

||||

--- |

||||

comments: true |

||||

description: Explore the CIFAR-10 dataset, widely used for training in machine learning and computer vision, and learn how to use it with Ultralytics YOLO. |

||||

keywords: CIFAR-10, dataset, machine learning, image classification, computer vision, YOLO, Ultralytics, training, testing, deep learning, Convolutional Neural Networks, Support Vector Machines |

||||

--- |

||||

|

||||

# CIFAR-10 Dataset |

||||

|

||||

The [CIFAR-10](https://www.cs.toronto.edu/~kriz/cifar.html) (Canadian Institute For Advanced Research) dataset is a collection of images used widely for machine learning and computer vision algorithms. It was developed by researchers at the CIFAR institute and consists of 60,000 32x32 color images in 10 different classes. |

||||

|

||||

## Key Features |

||||

|

||||

- The CIFAR-10 dataset consists of 60,000 images, divided into 10 classes. |

||||

- Each class contains 6,000 images, split into 5,000 for training and 1,000 for testing. |

||||

- The images are colored and of size 32x32 pixels. |

||||

- The 10 different classes represent airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks. |

||||

- CIFAR-10 is commonly used for training and testing in the field of machine learning and computer vision. |

||||

|

||||

## Dataset Structure |

||||

|

||||

The CIFAR-10 dataset is split into two subsets: |

||||

|

||||

1. **Training Set**: This subset contains 50,000 images used for training machine learning models. |

||||

2. **Testing Set**: This subset consists of 10,000 images used for testing and benchmarking the trained models. |

||||

|

||||

## Applications |

||||

|

||||

The CIFAR-10 dataset is widely used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. The diversity of the dataset in terms of classes and the presence of color images make it a well-rounded dataset for research and development in the field of machine learning and computer vision. |

||||

|

||||

## Usage |

||||

|

||||

To train a YOLO model on the CIFAR-10 dataset for 100 epochs with an image size of 32x32, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page. |

||||

|

||||

!!! example "Train Example" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='cifar10', epochs=100, imgsz=32) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

yolo detect train data=cifar10 model=yolov8n-cls.pt epochs=100 imgsz=32 |

||||

``` |

||||

|

||||

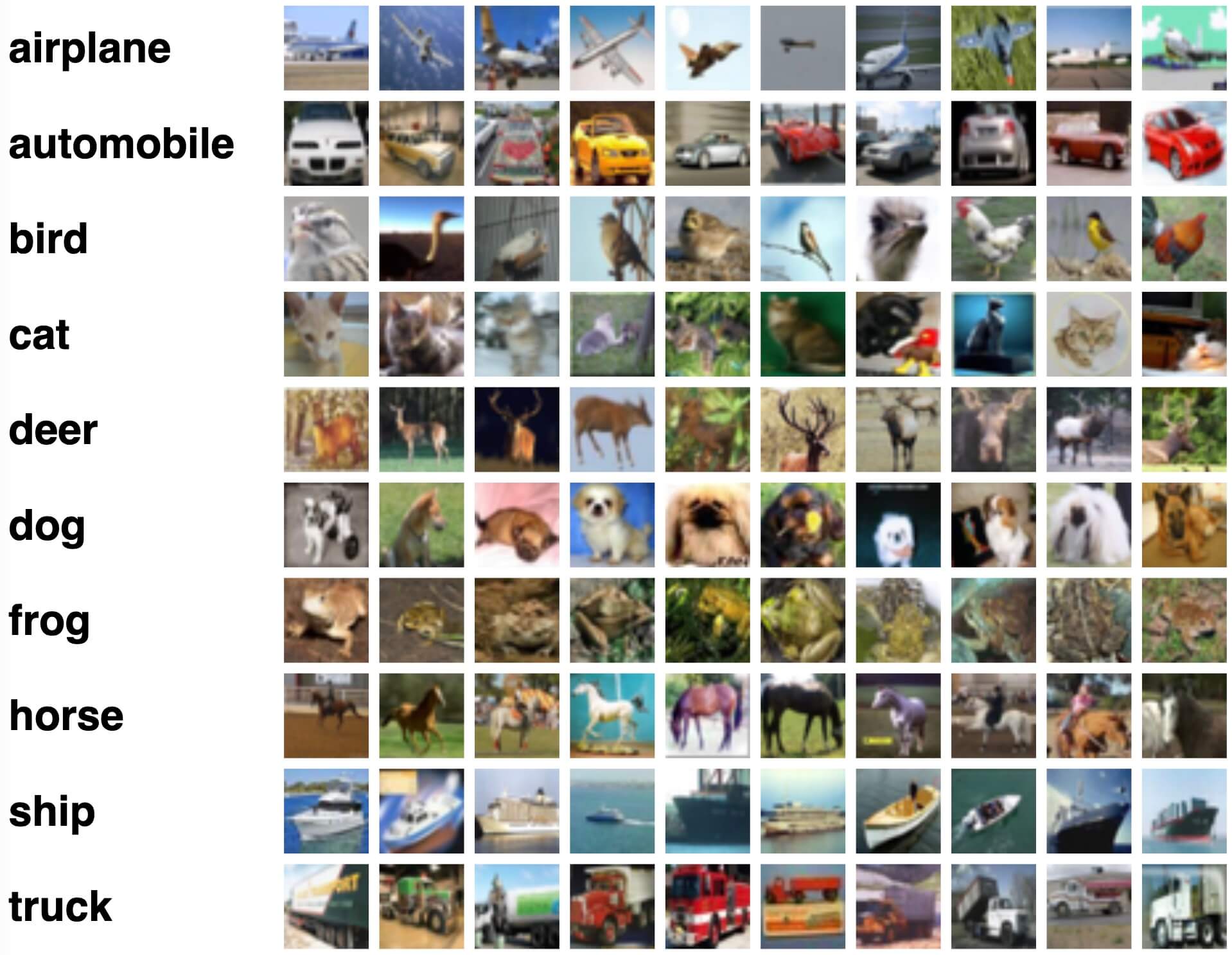

## Sample Images and Annotations |

||||

|

||||

The CIFAR-10 dataset contains color images of various objects, providing a well-structured dataset for image classification tasks. Here are some examples of images from the dataset: |

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the objects in the CIFAR-10 dataset, highlighting the importance of a diverse dataset for training robust image classification models. |

||||

|

||||

## Citations and Acknowledgments |

||||

|

||||

If you use the CIFAR-10 dataset in your research or development work, please cite the following paper: |

||||

|

||||

!!! note "" |

||||

|

||||

=== "BibTeX" |

||||

|

||||

```bibtex |

||||

@TECHREPORT{Krizhevsky09learningmultiple, |

||||

author={Alex Krizhevsky}, |

||||

title={Learning multiple layers of features from tiny images}, |

||||

institution={}, |

||||

year={2009} |

||||

} |

||||

``` |

||||

|

||||

We would like to acknowledge Alex Krizhevsky for creating and maintaining the CIFAR-10 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the CIFAR-10 dataset and its creator, visit the [CIFAR-10 dataset website](https://www.cs.toronto.edu/~kriz/cifar.html). |

||||

@ -0,0 +1,80 @@ |

||||

--- |

||||

comments: true |

||||

description: Discover how to leverage the CIFAR-100 dataset for machine learning and computer vision tasks with YOLO. Gain insights on its structure, use, and utilization for model training. |

||||

keywords: Ultralytics, YOLO, CIFAR-100 dataset, image classification, machine learning, computer vision, YOLO model training |

||||

--- |

||||

|

||||

# CIFAR-100 Dataset |

||||

|

||||

The [CIFAR-100](https://www.cs.toronto.edu/~kriz/cifar.html) (Canadian Institute For Advanced Research) dataset is a significant extension of the CIFAR-10 dataset, composed of 60,000 32x32 color images in 100 different classes. It was developed by researchers at the CIFAR institute, offering a more challenging dataset for more complex machine learning and computer vision tasks. |

||||

|

||||

## Key Features |

||||

|

||||

- The CIFAR-100 dataset consists of 60,000 images, divided into 100 classes. |

||||

- Each class contains 600 images, split into 500 for training and 100 for testing. |

||||

- The images are colored and of size 32x32 pixels. |

||||

- The 100 different classes are grouped into 20 coarse categories for higher level classification. |

||||

- CIFAR-100 is commonly used for training and testing in the field of machine learning and computer vision. |

||||

|

||||

## Dataset Structure |

||||

|

||||

The CIFAR-100 dataset is split into two subsets: |

||||

|

||||

1. **Training Set**: This subset contains 50,000 images used for training machine learning models. |

||||

2. **Testing Set**: This subset consists of 10,000 images used for testing and benchmarking the trained models. |

||||

|

||||

## Applications |

||||

|

||||

The CIFAR-100 dataset is extensively used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. The diversity of the dataset in terms of classes and the presence of color images make it a more challenging and comprehensive dataset for research and development in the field of machine learning and computer vision. |

||||

|

||||

## Usage |

||||

|

||||

To train a YOLO model on the CIFAR-100 dataset for 100 epochs with an image size of 32x32, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page. |

||||

|

||||

!!! example "Train Example" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='cifar100', epochs=100, imgsz=32) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

yolo detect train data=cifar100 model=yolov8n-cls.pt epochs=100 imgsz=32 |

||||

``` |

||||

|

||||

## Sample Images and Annotations |

||||

|

||||

The CIFAR-100 dataset contains color images of various objects, providing a well-structured dataset for image classification tasks. Here are some examples of images from the dataset: |

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the objects in the CIFAR-100 dataset, highlighting the importance of a diverse dataset for training robust image classification models. |

||||

|

||||

## Citations and Acknowledgments |

||||

|

||||

If you use the CIFAR-100 dataset in your research or development work, please cite the following paper: |

||||

|

||||

!!! note "" |

||||

|

||||

=== "BibTeX" |

||||

|

||||

```bibtex |

||||

@TECHREPORT{Krizhevsky09learningmultiple, |

||||

author={Alex Krizhevsky}, |

||||

title={Learning multiple layers of features from tiny images}, |

||||

institution={}, |

||||

year={2009} |

||||

} |

||||

``` |

||||

|

||||

We would like to acknowledge Alex Krizhevsky for creating and maintaining the CIFAR-100 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the CIFAR-100 dataset and its creator, visit the [CIFAR-100 dataset website](https://www.cs.toronto.edu/~kriz/cifar.html). |

||||

@ -0,0 +1,79 @@ |

||||

--- |

||||

comments: true |

||||

description: Learn how to use the Fashion-MNIST dataset for image classification with the Ultralytics YOLO model. Covers dataset structure, labels, applications, and usage. |

||||

keywords: Ultralytics, YOLO, Fashion-MNIST, dataset, image classification, machine learning, deep learning, neural networks, training, testing |

||||

--- |

||||

|

||||

# Fashion-MNIST Dataset |

||||

|

||||

The [Fashion-MNIST](https://github.com/zalandoresearch/fashion-mnist) dataset is a database of Zalando's article images—consisting of a training set of 60,000 examples and a test set of 10,000 examples. Each example is a 28x28 grayscale image, associated with a label from 10 classes. Fashion-MNIST is intended to serve as a direct drop-in replacement for the original MNIST dataset for benchmarking machine learning algorithms. |

||||

|

||||

## Key Features |

||||

|

||||

- Fashion-MNIST contains 60,000 training images and 10,000 testing images of Zalando's article images. |

||||

- The dataset comprises grayscale images of size 28x28 pixels. |

||||

- Each pixel has a single pixel-value associated with it, indicating the lightness or darkness of that pixel, with higher numbers meaning darker. This pixel-value is an integer between 0 and 255. |

||||

- Fashion-MNIST is widely used for training and testing in the field of machine learning, especially for image classification tasks. |

||||

|

||||

## Dataset Structure |

||||

|

||||

The Fashion-MNIST dataset is split into two subsets: |

||||

|

||||

1. **Training Set**: This subset contains 60,000 images used for training machine learning models. |

||||

2. **Testing Set**: This subset consists of 10,000 images used for testing and benchmarking the trained models. |

||||

|

||||

## Labels |

||||

|

||||

Each training and test example is assigned to one of the following labels: |

||||

|

||||

0. T-shirt/top |

||||

1. Trouser |

||||

2. Pullover |

||||

3. Dress |

||||

4. Coat |

||||

5. Sandal |

||||

6. Shirt |

||||

7. Sneaker |

||||

8. Bag |

||||

9. Ankle boot |

||||

|

||||

## Applications |

||||

|

||||

The Fashion-MNIST dataset is widely used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. The dataset's simple and well-structured format makes it an essential resource for researchers and practitioners in the field of machine learning and computer vision. |

||||

|

||||

## Usage |

||||

|

||||

To train a CNN model on the Fashion-MNIST dataset for 100 epochs with an image size of 28x28, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page. |

||||

|

||||

!!! example "Train Example" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='fashion-mnist', epochs=100, imgsz=28) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

yolo detect train data=fashion-mnist model=yolov8n-cls.pt epochs=100 imgsz=28 |

||||

``` |

||||

|

||||

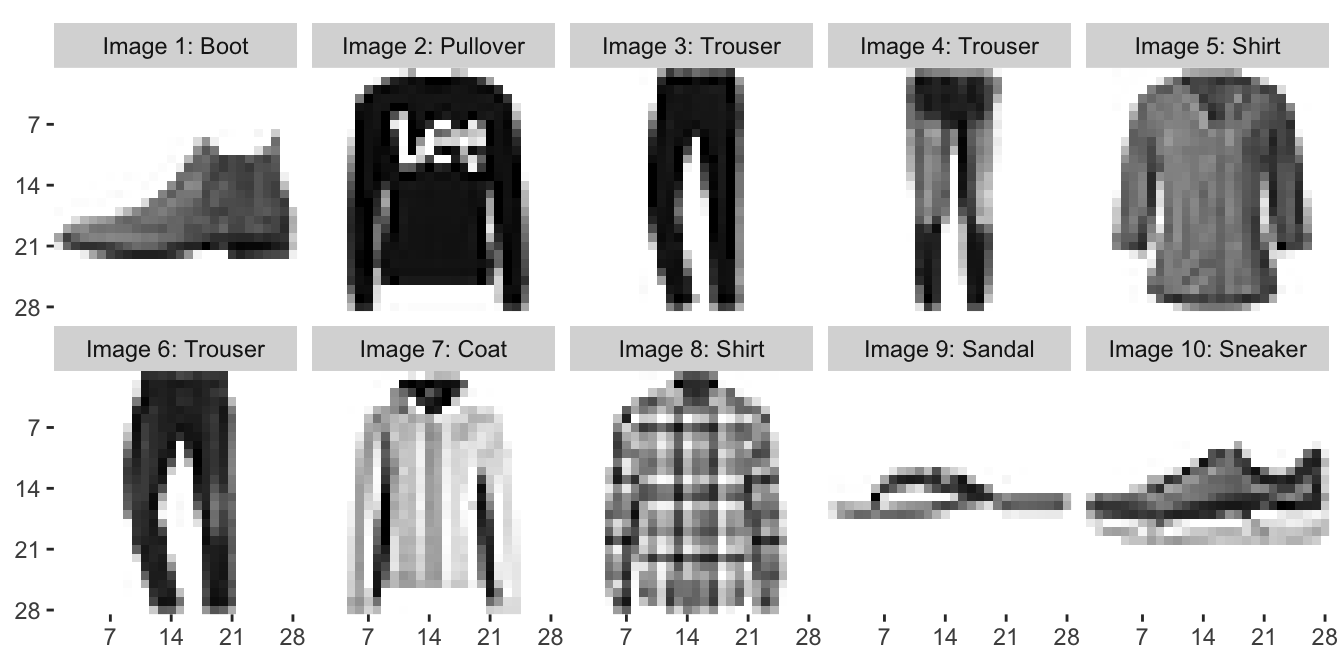

## Sample Images and Annotations |

||||

|

||||

The Fashion-MNIST dataset contains grayscale images of Zalando's article images, providing a well-structured dataset for image classification tasks. Here are some examples of images from the dataset: |

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the images in the Fashion-MNIST dataset, highlighting the importance of a diverse dataset for training robust image classification models. |

||||

|

||||

## Acknowledgments |

||||

|

||||

If you use the Fashion-MNIST dataset in your research or development work, please acknowledge the dataset by linking to the [GitHub repository](https://github.com/zalandoresearch/fashion-mnist). This dataset was made available by Zalando Research. |

||||

@ -0,0 +1,83 @@ |

||||

--- |

||||

comments: true |

||||

description: Understand how to use ImageNet, an extensive annotated image dataset for object recognition research, with Ultralytics YOLO models. Learn about its structure, usage, and significance in computer vision. |

||||

keywords: Ultralytics, YOLO, ImageNet, dataset, object recognition, deep learning, computer vision, machine learning, dataset training, model training, image classification, object detection |

||||

--- |

||||

|

||||

# ImageNet Dataset |

||||

|

||||

[ImageNet](https://www.image-net.org/) is a large-scale database of annotated images designed for use in visual object recognition research. It contains over 14 million images, with each image annotated using WordNet synsets, making it one of the most extensive resources available for training deep learning models in computer vision tasks. |

||||

|

||||

## Key Features |

||||

|

||||

- ImageNet contains over 14 million high-resolution images spanning thousands of object categories. |

||||

- The dataset is organized according to the WordNet hierarchy, with each synset representing a category. |

||||

- ImageNet is widely used for training and benchmarking in the field of computer vision, particularly for image classification and object detection tasks. |

||||

- The annual ImageNet Large Scale Visual Recognition Challenge (ILSVRC) has been instrumental in advancing computer vision research. |

||||

|

||||

## Dataset Structure |

||||

|

||||

The ImageNet dataset is organized using the WordNet hierarchy. Each node in the hierarchy represents a category, and each category is described by a synset (a collection of synonymous terms). The images in ImageNet are annotated with one or more synsets, providing a rich resource for training models to recognize various objects and their relationships. |

||||

|

||||

## ImageNet Large Scale Visual Recognition Challenge (ILSVRC) |

||||

|

||||

The annual [ImageNet Large Scale Visual Recognition Challenge (ILSVRC)](http://image-net.org/challenges/LSVRC/) has been an important event in the field of computer vision. It has provided a platform for researchers and developers to evaluate their algorithms and models on a large-scale dataset with standardized evaluation metrics. The ILSVRC has led to significant advancements in the development of deep learning models for image classification, object detection, and other computer vision tasks. |

||||

|

||||

## Applications |

||||

|

||||

The ImageNet dataset is widely used for training and evaluating deep learning models in various computer vision tasks, such as image classification, object detection, and object localization. Some popular deep learning architectures, such as AlexNet, VGG, and ResNet, were developed and benchmarked using the ImageNet dataset. |

||||

|

||||

## Usage |

||||

|

||||

To train a deep learning model on the ImageNet dataset for 100 epochs with an image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page. |

||||

|

||||

!!! example "Train Example" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='imagenet', epochs=100, imgsz=224) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

yolo train data=imagenet model=yolov8n-cls.pt epochs=100 imgsz=224 |

||||

``` |

||||

|

||||

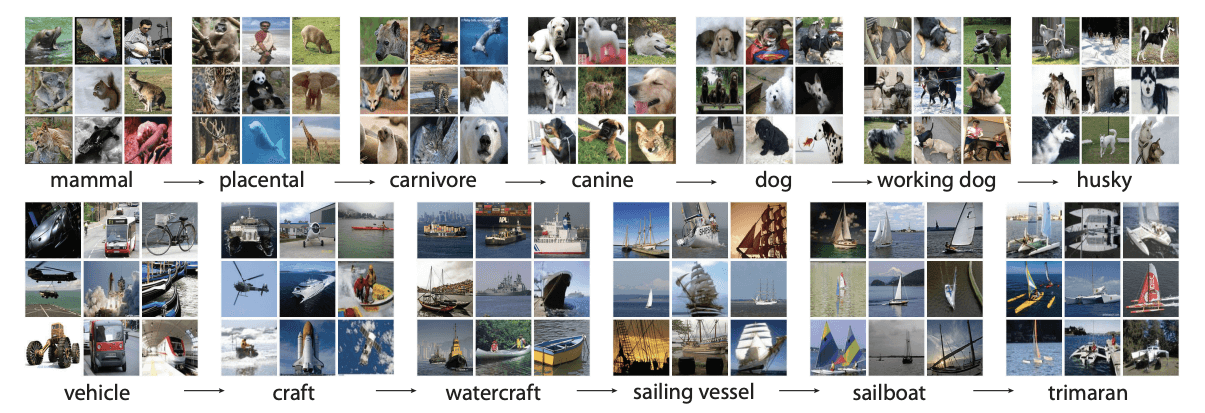

## Sample Images and Annotations |

||||

|

||||

The ImageNet dataset contains high-resolution images spanning thousands of object categories, providing a diverse and extensive dataset for training and evaluating computer vision models. Here are some examples of images from the dataset: |

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the images in the ImageNet dataset, highlighting the importance of a diverse dataset for training robust computer vision models. |

||||

|

||||

## Citations and Acknowledgments |

||||

|

||||

If you use the ImageNet dataset in your research or development work, please cite the following paper: |

||||

|

||||

!!! note "" |

||||

|

||||

=== "BibTeX" |

||||

|

||||

```bibtex |

||||

@article{ILSVRC15, |

||||

author = {Olga Russakovsky and Jia Deng and Hao Su and Jonathan Krause and Sanjeev Satheesh and Sean Ma and Zhiheng Huang and Andrej Karpathy and Aditya Khosla and Michael Bernstein and Alexander C. Berg and Li Fei-Fei}, |

||||

title={ImageNet Large Scale Visual Recognition Challenge}, |

||||

year={2015}, |

||||

journal={International Journal of Computer Vision (IJCV)}, |

||||

volume={115}, |

||||

number={3}, |

||||

pages={211-252} |

||||

} |

||||

``` |

||||

|

||||

We would like to acknowledge the ImageNet team, led by Olga Russakovsky, Jia Deng, and Li Fei-Fei, for creating and maintaining the ImageNet dataset as a valuable resource for the machine learning and computer vision research community. For more information about the ImageNet dataset and its creators, visit the [ImageNet website](https://www.image-net.org/). |

||||

@ -0,0 +1,78 @@ |

||||

--- |

||||

comments: true |

||||

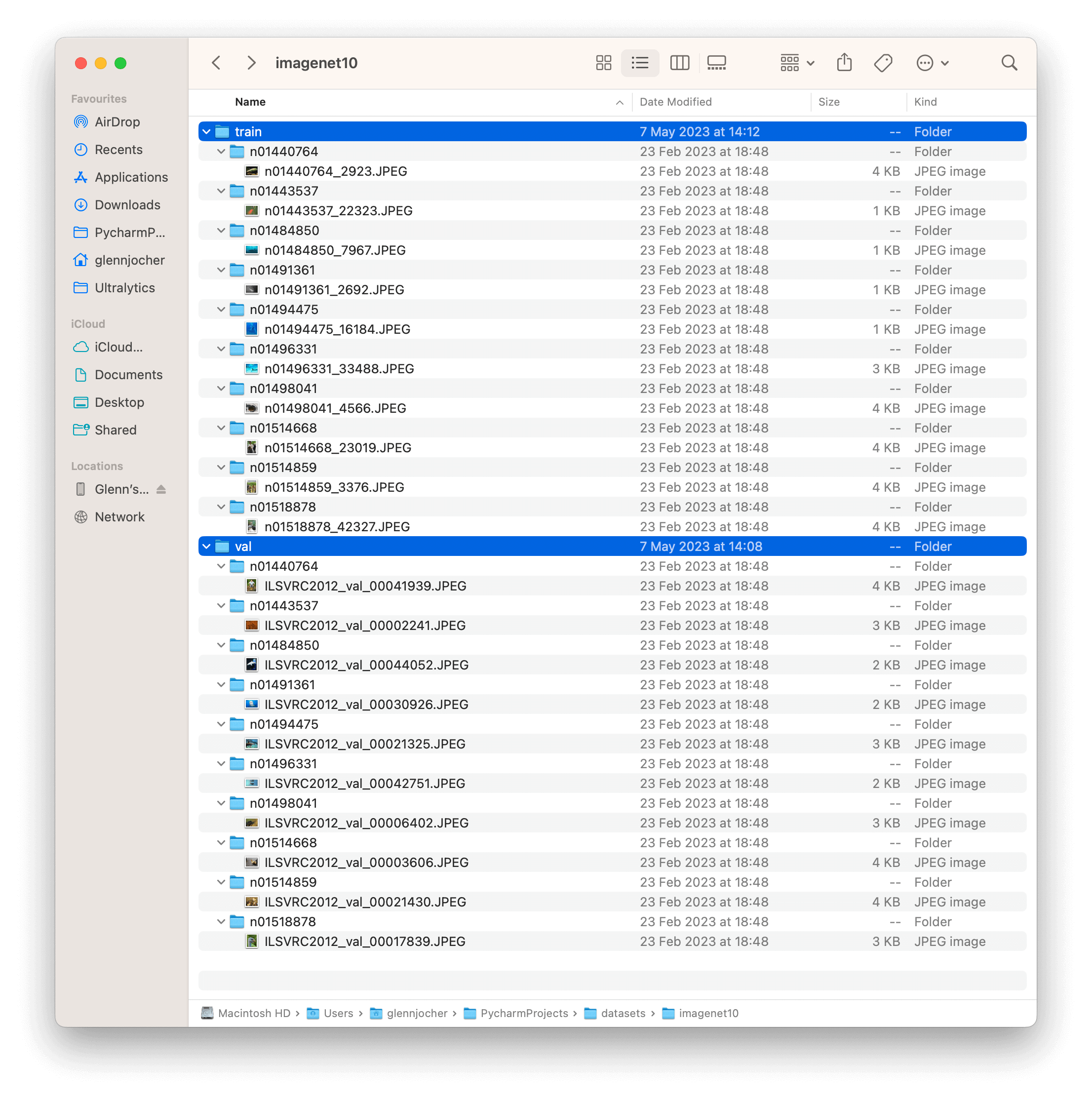

description: Explore the compact ImageNet10 Dataset developed by Ultralytics. Ideal for fast testing of computer vision training pipelines and CV model sanity checks. |

||||

keywords: Ultralytics, YOLO, ImageNet10 Dataset, Image detection, Deep Learning, ImageNet, AI model testing, Computer vision, Machine learning |

||||

--- |

||||

|

||||

# ImageNet10 Dataset |

||||

|

||||

The [ImageNet10](https://github.com/ultralytics/yolov5/releases/download/v1.0/imagenet10.zip) dataset is a small-scale subset of the [ImageNet](https://www.image-net.org/) database, developed by [Ultralytics](https://ultralytics.com) and designed for CI tests, sanity checks, and fast testing of training pipelines. This dataset is composed of the first image in the training set and the first image from the validation set of the first 10 classes in ImageNet. Although significantly smaller, it retains the structure and diversity of the original ImageNet dataset. |

||||

|

||||

## Key Features |

||||

|

||||

- ImageNet10 is a compact version of ImageNet, with 20 images representing the first 10 classes of the original dataset. |

||||

- The dataset is organized according to the WordNet hierarchy, mirroring the structure of the full ImageNet dataset. |

||||

- It is ideally suited for CI tests, sanity checks, and rapid testing of training pipelines in computer vision tasks. |

||||

- Although not designed for model benchmarking, it can provide a quick indication of a model's basic functionality and correctness. |

||||

|

||||

## Dataset Structure |

||||

|

||||

The ImageNet10 dataset, like the original ImageNet, is organized using the WordNet hierarchy. Each of the 10 classes in ImageNet10 is described by a synset (a collection of synonymous terms). The images in ImageNet10 are annotated with one or more synsets, providing a compact resource for testing models to recognize various objects and their relationships. |

||||

|

||||

## Applications |

||||

|

||||

The ImageNet10 dataset is useful for quickly testing and debugging computer vision models and pipelines. Its small size allows for rapid iteration, making it ideal for continuous integration tests and sanity checks. It can also be used for fast preliminary testing of new models or changes to existing models before moving on to full-scale testing with the complete ImageNet dataset. |

||||

|

||||

## Usage |

||||

|

||||

To test a deep learning model on the ImageNet10 dataset with an image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page. |

||||

|

||||

!!! example "Test Example" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='imagenet10', epochs=5, imgsz=224) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

yolo train data=imagenet10 model=yolov8n-cls.pt epochs=5 imgsz=224 |

||||

``` |

||||

|

||||

## Sample Images and Annotations |

||||

|

||||

The ImageNet10 dataset contains a subset of images from the original ImageNet dataset. These images are chosen to represent the first 10 classes in the dataset, providing a diverse yet compact dataset for quick testing and evaluation. |

||||

|

||||

|

||||

The example showcases the variety and complexity of the images in the ImageNet10 dataset, highlighting its usefulness for sanity checks and quick testing of computer vision models. |

||||

|

||||

## Citations and Acknowledgments |

||||

|

||||

If you use the ImageNet10 dataset in your research or development work, please cite the original ImageNet paper: |

||||

|

||||

!!! note "" |

||||

|

||||

=== "BibTeX" |

||||

|

||||

```bibtex |

||||

@article{ILSVRC15, |

||||

author = {Olga Russakovsky and Jia Deng and Hao Su and Jonathan Krause and Sanjeev Satheesh and Sean Ma and Zhiheng Huang and Andrej Karpathy and Aditya Khosla and Michael Bernstein and Alexander C. Berg and Li Fei-Fei}, |

||||

title={ImageNet Large Scale Visual Recognition Challenge}, |

||||

year={2015}, |

||||

journal={International Journal of Computer Vision (IJCV)}, |

||||

volume={115}, |

||||

number={3}, |

||||

pages={211-252} |

||||

} |

||||

``` |

||||

|

||||

We would like to acknowledge the ImageNet team, led by Olga Russakovsky, Jia Deng, and Li Fei-Fei, for creating and maintaining the ImageNet dataset. The ImageNet10 dataset, while a compact subset, is a valuable resource for quick testing and debugging in the machine learning and computer vision research community. For more information about the ImageNet dataset and its creators, visit the [ImageNet website](https://www.image-net.org/). |

||||

@ -0,0 +1,113 @@ |

||||

--- |

||||

comments: true |

||||

description: Learn about the ImageNette dataset and its usage in deep learning model training. Find code snippets for model training and explore ImageNette datatypes. |

||||

keywords: ImageNette dataset, Ultralytics, YOLO, Image classification, Machine Learning, Deep learning, Training code snippets, CNN, ImageNette160, ImageNette320 |

||||

--- |

||||

|

||||

# ImageNette Dataset |

||||

|

||||

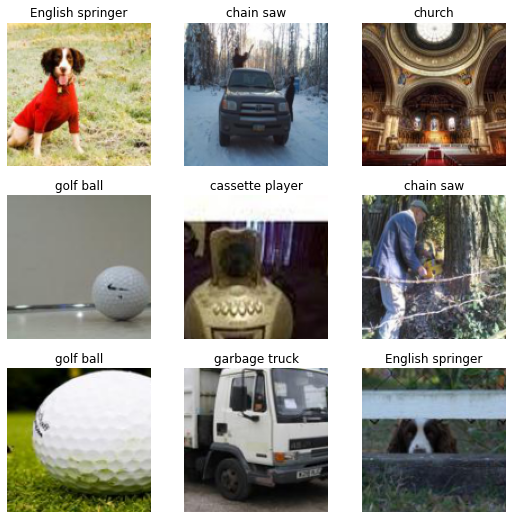

The [ImageNette](https://github.com/fastai/imagenette) dataset is a subset of the larger [Imagenet](http://www.image-net.org/) dataset, but it only includes 10 easily distinguishable classes. It was created to provide a quicker, easier-to-use version of Imagenet for software development and education. |

||||

|

||||

## Key Features |

||||

|

||||

- ImageNette contains images from 10 different classes such as tench, English springer, cassette player, chain saw, church, French horn, garbage truck, gas pump, golf ball, parachute. |

||||

- The dataset comprises colored images of varying dimensions. |

||||

- ImageNette is widely used for training and testing in the field of machine learning, especially for image classification tasks. |

||||

|

||||

## Dataset Structure |

||||

|

||||

The ImageNette dataset is split into two subsets: |

||||

|

||||

1. **Training Set**: This subset contains several thousands of images used for training machine learning models. The exact number varies per class. |

||||

2. **Validation Set**: This subset consists of several hundreds of images used for validating and benchmarking the trained models. Again, the exact number varies per class. |

||||

|

||||

## Applications |

||||

|

||||

The ImageNette dataset is widely used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), and various other machine learning algorithms. The dataset's straightforward format and well-chosen classes make it a handy resource for both beginner and experienced practitioners in the field of machine learning and computer vision. |

||||

|

||||

## Usage |

||||

|

||||

To train a model on the ImageNette dataset for 100 epochs with a standard image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page. |

||||

|

||||

!!! example "Train Example" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='imagenette', epochs=100, imgsz=224) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

yolo detect train data=imagenette model=yolov8n-cls.pt epochs=100 imgsz=224 |

||||

``` |

||||

|

||||

## Sample Images and Annotations |

||||

|

||||

The ImageNette dataset contains colored images of various objects and scenes, providing a diverse dataset for image classification tasks. Here are some examples of images from the dataset: |

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the images in the ImageNette dataset, highlighting the importance of a diverse dataset for training robust image classification models. |

||||

|

||||

## ImageNette160 and ImageNette320 |

||||

|

||||

For faster prototyping and training, the ImageNette dataset is also available in two reduced sizes: ImageNette160 and ImageNette320. These datasets maintain the same classes and structure as the full ImageNette dataset, but the images are resized to a smaller dimension. As such, these versions of the dataset are particularly useful for preliminary model testing, or when computational resources are limited. |

||||

|

||||

To use these datasets, simply replace 'imagenette' with 'imagenette160' or 'imagenette320' in the training command. The following code snippets illustrate this: |

||||

|

||||

!!! example "Train Example with ImageNette160" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model with ImageNette160 |

||||

results = model.train(data='imagenette160', epochs=100, imgsz=160) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model with ImageNette160 |

||||

yolo detect train data=imagenette160 model=yolov8n-cls.pt epochs=100 imgsz=160 |

||||

``` |

||||

|

||||

!!! example "Train Example with ImageNette320" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model with ImageNette320 |

||||

results = model.train(data='imagenette320', epochs=100, imgsz=320) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model with ImageNette320 |

||||

yolo detect train data=imagenette320 model=yolov8n-cls.pt epochs=100 imgsz=320 |

||||

``` |

||||

|

||||

These smaller versions of the dataset allow for rapid iterations during the development process while still providing valuable and realistic image classification tasks. |

||||

|

||||

## Citations and Acknowledgments |

||||

|

||||

If you use the ImageNette dataset in your research or development work, please acknowledge it appropriately. For more information about the ImageNette dataset, visit the [ImageNette dataset GitHub page](https://github.com/fastai/imagenette). |

||||

@ -0,0 +1,84 @@ |

||||

--- |

||||

comments: true |

||||

description: Explore the ImageWoof dataset, designed for challenging dog breed classification. Train AI models with Ultralytics YOLO using this dataset. |

||||

keywords: ImageWoof, image classification, dog breeds, machine learning, deep learning, Ultralytics, YOLO, dataset |

||||

--- |

||||

|

||||

# ImageWoof Dataset |

||||

|

||||

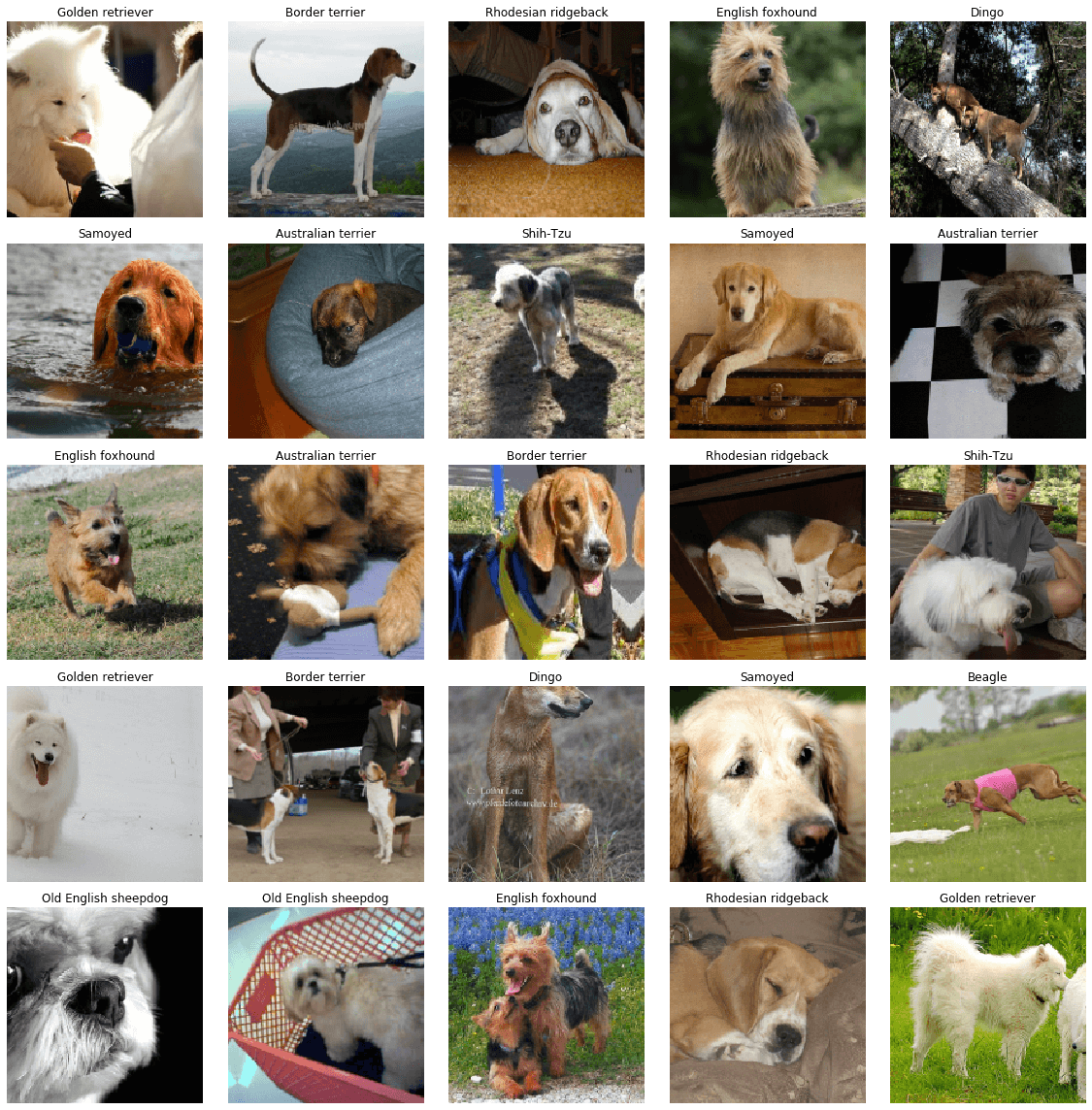

The [ImageWoof](https://github.com/fastai/imagenette) dataset is a subset of the ImageNet consisting of 10 classes that are challenging to classify, since they're all dog breeds. It was created as a more difficult task for image classification algorithms to solve, aiming at encouraging development of more advanced models. |

||||

|

||||

## Key Features |

||||

|

||||

- ImageWoof contains images of 10 different dog breeds: Australian terrier, Border terrier, Samoyed, Beagle, Shih-Tzu, English foxhound, Rhodesian ridgeback, Dingo, Golden retriever, and Old English sheepdog. |

||||

- The dataset provides images at various resolutions (full size, 320px, 160px), accommodating for different computational capabilities and research needs. |

||||

- It also includes a version with noisy labels, providing a more realistic scenario where labels might not always be reliable. |

||||

|

||||

## Dataset Structure |

||||

|

||||

The ImageWoof dataset structure is based on the dog breed classes, with each breed having its own directory of images. |

||||

|

||||

## Applications |

||||

|

||||

The ImageWoof dataset is widely used for training and evaluating deep learning models in image classification tasks, especially when it comes to more complex and similar classes. The dataset's challenge lies in the subtle differences between the dog breeds, pushing the limits of model's performance and generalization. |

||||

|

||||

## Usage |

||||

|

||||

To train a CNN model on the ImageWoof dataset for 100 epochs with an image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page. |

||||

|

||||

!!! example "Train Example" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='imagewoof', epochs=100, imgsz=224) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

yolo detect train data=imagewoof model=yolov8n-cls.pt epochs=100 imgsz=224 |

||||

``` |

||||

|

||||

## Dataset Variants |

||||

|

||||

ImageWoof dataset comes in three different sizes to accommodate various research needs and computational capabilities: |

||||

|

||||

1. **Full Size (imagewoof)**: This is the original version of the ImageWoof dataset. It contains full-sized images and is ideal for final training and performance benchmarking. |

||||

|

||||

2. **Medium Size (imagewoof320)**: This version contains images resized to have a maximum edge length of 320 pixels. It's suitable for faster training without significantly sacrificing model performance. |

||||

|

||||

3. **Small Size (imagewoof160)**: This version contains images resized to have a maximum edge length of 160 pixels. It's designed for rapid prototyping and experimentation where training speed is a priority. |

||||

|

||||

To use these variants in your training, simply replace 'imagewoof' in the dataset argument with 'imagewoof320' or 'imagewoof160'. For example: |

||||

|

||||

```python |

||||

# For medium-sized dataset |

||||

model.train(data='imagewoof320', epochs=100, imgsz=224) |

||||

|

||||

# For small-sized dataset |

||||

model.train(data='imagewoof160', epochs=100, imgsz=224) |

||||

``` |

||||

|

||||

It's important to note that using smaller images will likely yield lower performance in terms of classification accuracy. However, it's an excellent way to iterate quickly in the early stages of model development and prototyping. |

||||

|

||||

## Sample Images and Annotations |

||||

|

||||

The ImageWoof dataset contains colorful images of various dog breeds, providing a challenging dataset for image classification tasks. Here are some examples of images from the dataset: |

||||

|

||||

|

||||

|

||||

The example showcases the subtle differences and similarities among the different dog breeds in the ImageWoof dataset, highlighting the complexity and difficulty of the classification task. |

||||

|

||||

## Citations and Acknowledgments |

||||

|

||||

If you use the ImageWoof dataset in your research or development work, please make sure to acknowledge the creators of the dataset by linking to the [official dataset repository](https://github.com/fastai/imagenette). |

||||

|

||||

We would like to acknowledge the FastAI team for creating and maintaining the ImageWoof dataset as a valuable resource for the machine learning and computer vision research community. For more information about the ImageWoof dataset, visit the [ImageWoof dataset repository](https://github.com/fastai/imagenette). |

||||

@ -0,0 +1,120 @@ |

||||

--- |

||||

comments: true |

||||

description: Explore image classification datasets supported by Ultralytics, learn the standard dataset format, and set up your own dataset for training models. |

||||

keywords: Ultralytics, image classification, dataset, machine learning, CIFAR-10, ImageNet, MNIST, torchvision |

||||

--- |

||||

|

||||

# Image Classification Datasets Overview |

||||

|

||||

## Dataset format |

||||

|

||||

The folder structure for classification datasets in torchvision typically follows a standard format: |

||||

|

||||

``` |

||||

root/ |

||||

|-- class1/ |

||||

| |-- img1.jpg |

||||

| |-- img2.jpg |

||||

| |-- ... |

||||

| |

||||

|-- class2/ |

||||

| |-- img1.jpg |

||||

| |-- img2.jpg |

||||

| |-- ... |

||||

| |

||||

|-- class3/ |

||||

| |-- img1.jpg |

||||

| |-- img2.jpg |

||||

| |-- ... |

||||

| |

||||

|-- ... |

||||

``` |

||||

|

||||

In this folder structure, the `root` directory contains one subdirectory for each class in the dataset. Each subdirectory is named after the corresponding class and contains all the images for that class. Each image file is named uniquely and is typically in a common image file format such as JPEG or PNG. |

||||

|

||||

** Example ** |

||||

|

||||

For example, in the CIFAR10 dataset, the folder structure would look like this: |

||||

|

||||

``` |

||||

cifar-10-/ |

||||

| |

||||

|-- train/ |

||||

| |-- airplane/ |

||||

| | |-- 10008_airplane.png |

||||

| | |-- 10009_airplane.png |

||||

| | |-- ... |

||||

| | |

||||

| |-- automobile/ |

||||

| | |-- 1000_automobile.png |

||||

| | |-- 1001_automobile.png |

||||

| | |-- ... |

||||

| | |

||||

| |-- bird/ |

||||

| | |-- 10014_bird.png |

||||

| | |-- 10015_bird.png |

||||

| | |-- ... |

||||

| | |

||||

| |-- ... |

||||

| |

||||

|-- test/ |

||||

| |-- airplane/ |

||||

| | |-- 10_airplane.png |

||||

| | |-- 11_airplane.png |

||||

| | |-- ... |

||||

| | |

||||

| |-- automobile/ |

||||

| | |-- 100_automobile.png |

||||

| | |-- 101_automobile.png |

||||

| | |-- ... |

||||

| | |

||||

| |-- bird/ |

||||

| | |-- 1000_bird.png |

||||

| | |-- 1001_bird.png |

||||

| | |-- ... |

||||

| | |

||||

| |-- ... |

||||

``` |

||||

|

||||

In this example, the `train` directory contains subdirectories for each class in the dataset, and each class subdirectory contains all the images for that class. The `test` directory has a similar structure. The `root` directory also contains other files that are part of the CIFAR10 dataset. |

||||

|

||||

## Usage |

||||

|

||||

!!! example "" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='path/to/dataset', epochs=100, imgsz=640) |

||||

``` |

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

yolo detect train data=path/to/data model=yolov8n-cls.pt epochs=100 imgsz=640 |

||||

``` |

||||

|

||||

## Supported Datasets |

||||

|

||||

Ultralytics supports the following datasets with automatic download: |

||||

|

||||

* [Caltech 101](caltech101.md): A dataset containing images of 101 object categories for image classification tasks. |

||||

* [Caltech 256](caltech256.md): An extended version of Caltech 101 with 256 object categories and more challenging images. |

||||

* [CIFAR-10](cifar10.md): A dataset of 60K 32x32 color images in 10 classes, with 6K images per class. |

||||

* [CIFAR-100](cifar100.md): An extended version of CIFAR-10 with 100 object categories and 600 images per class. |

||||

* [Fashion-MNIST](fashion-mnist.md): A dataset consisting of 70,000 grayscale images of 10 fashion categories for image classification tasks. |

||||

* [ImageNet](imagenet.md): A large-scale dataset for object detection and image classification with over 14 million images and 20,000 categories. |

||||

* [ImageNet-10](imagenet10.md): A smaller subset of ImageNet with 10 categories for faster experimentation and testing. |

||||

* [Imagenette](imagenette.md): A smaller subset of ImageNet that contains 10 easily distinguishable classes for quicker training and testing. |

||||

* [Imagewoof](imagewoof.md): A more challenging subset of ImageNet containing 10 dog breed categories for image classification tasks. |

||||

* [MNIST](mnist.md): A dataset of 70,000 grayscale images of handwritten digits for image classification tasks. |

||||

|

||||

### Adding your own dataset |

||||

|

||||

If you have your own dataset and would like to use it for training classification models with Ultralytics, ensure that it follows the format specified above under "Dataset format" and then point your `data` argument to the dataset directory. |

||||

@ -0,0 +1,86 @@ |

||||

--- |

||||

comments: true |

||||

description: Detailed guide on the MNIST Dataset, a benchmark in the machine learning community for image classification tasks. Learn about its structure, usage and application. |

||||

keywords: MNIST dataset, Ultralytics, image classification, machine learning, computer vision, deep learning, AI, dataset guide |

||||

--- |

||||

|

||||

# MNIST Dataset |

||||

|

||||

The [MNIST](http://yann.lecun.com/exdb/mnist/) (Modified National Institute of Standards and Technology) dataset is a large database of handwritten digits that is commonly used for training various image processing systems and machine learning models. It was created by "re-mixing" the samples from NIST's original datasets and has become a benchmark for evaluating the performance of image classification algorithms. |

||||

|

||||

## Key Features |

||||

|

||||

- MNIST contains 60,000 training images and 10,000 testing images of handwritten digits. |

||||

- The dataset comprises grayscale images of size 28x28 pixels. |

||||

- The images are normalized to fit into a 28x28 pixel bounding box and anti-aliased, introducing grayscale levels. |

||||

- MNIST is widely used for training and testing in the field of machine learning, especially for image classification tasks. |

||||

|

||||

## Dataset Structure |

||||

|

||||

The MNIST dataset is split into two subsets: |

||||

|

||||

1. **Training Set**: This subset contains 60,000 images of handwritten digits used for training machine learning models. |

||||

2. **Testing Set**: This subset consists of 10,000 images used for testing and benchmarking the trained models. |

||||

|

||||

## Extended MNIST (EMNIST) |

||||

|

||||

Extended MNIST (EMNIST) is a newer dataset developed and released by NIST to be the successor to MNIST. While MNIST included images only of handwritten digits, EMNIST includes all the images from NIST Special Database 19, which is a large database of handwritten uppercase and lowercase letters as well as digits. The images in EMNIST were converted into the same 28x28 pixel format, by the same process, as were the MNIST images. Accordingly, tools that work with the older, smaller MNIST dataset will likely work unmodified with EMNIST. |

||||

|

||||

## Applications |

||||

|

||||

The MNIST dataset is widely used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. The dataset's simple and well-structured format makes it an essential resource for researchers and practitioners in the field of machine learning and computer vision. |

||||

|

||||

## Usage |

||||

|

||||

To train a CNN model on the MNIST dataset for 100 epochs with an image size of 32x32, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page. |

||||

|

||||

!!! example "Train Example" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='mnist', epochs=100, imgsz=32) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

cnn detect train data=mnist model=yolov8n-cls.pt epochs=100 imgsz=28 |

||||

``` |

||||

|

||||

## Sample Images and Annotations |

||||

|

||||

The MNIST dataset contains grayscale images of handwritten digits, providing a well-structured dataset for image classification tasks. Here are some examples of images from the dataset: |

||||

|

||||

|

||||

|

||||

The example showcases the variety and complexity of the handwritten digits in the MNIST dataset, highlighting the importance of a diverse dataset for training robust image classification models. |

||||

|

||||

## Citations and Acknowledgments |

||||

|

||||

If you use the MNIST dataset in your |

||||

|

||||

research or development work, please cite the following paper: |

||||

|

||||

!!! note "" |

||||

|

||||

=== "BibTeX" |

||||

|

||||

```bibtex |

||||

@article{lecun2010mnist, |

||||

title={MNIST handwritten digit database}, |

||||

author={LeCun, Yann and Cortes, Corinna and Burges, CJ}, |

||||

journal={ATT Labs [Online]. Available: http://yann.lecun.com/exdb/mnist}, |

||||

volume={2}, |

||||

year={2010} |

||||

} |

||||

``` |

||||

|

||||

We would like to acknowledge Yann LeCun, Corinna Cortes, and Christopher J.C. Burges for creating and maintaining the MNIST dataset as a valuable resource for the machine learning and computer vision research community. For more information about the MNIST dataset and its creators, visit the [MNIST dataset website](http://yann.lecun.com/exdb/mnist/). |

||||

@ -0,0 +1,97 @@ |

||||

--- |

||||

comments: true |

||||

description: Explore Argoverse, a comprehensive dataset for autonomous driving tasks including 3D tracking, motion forecasting and depth estimation used in YOLO. |

||||

keywords: Argoverse dataset, autonomous driving, YOLO, 3D tracking, motion forecasting, LiDAR data, HD maps, ultralytics documentation |

||||

--- |

||||

|

||||

# Argoverse Dataset |

||||

|

||||

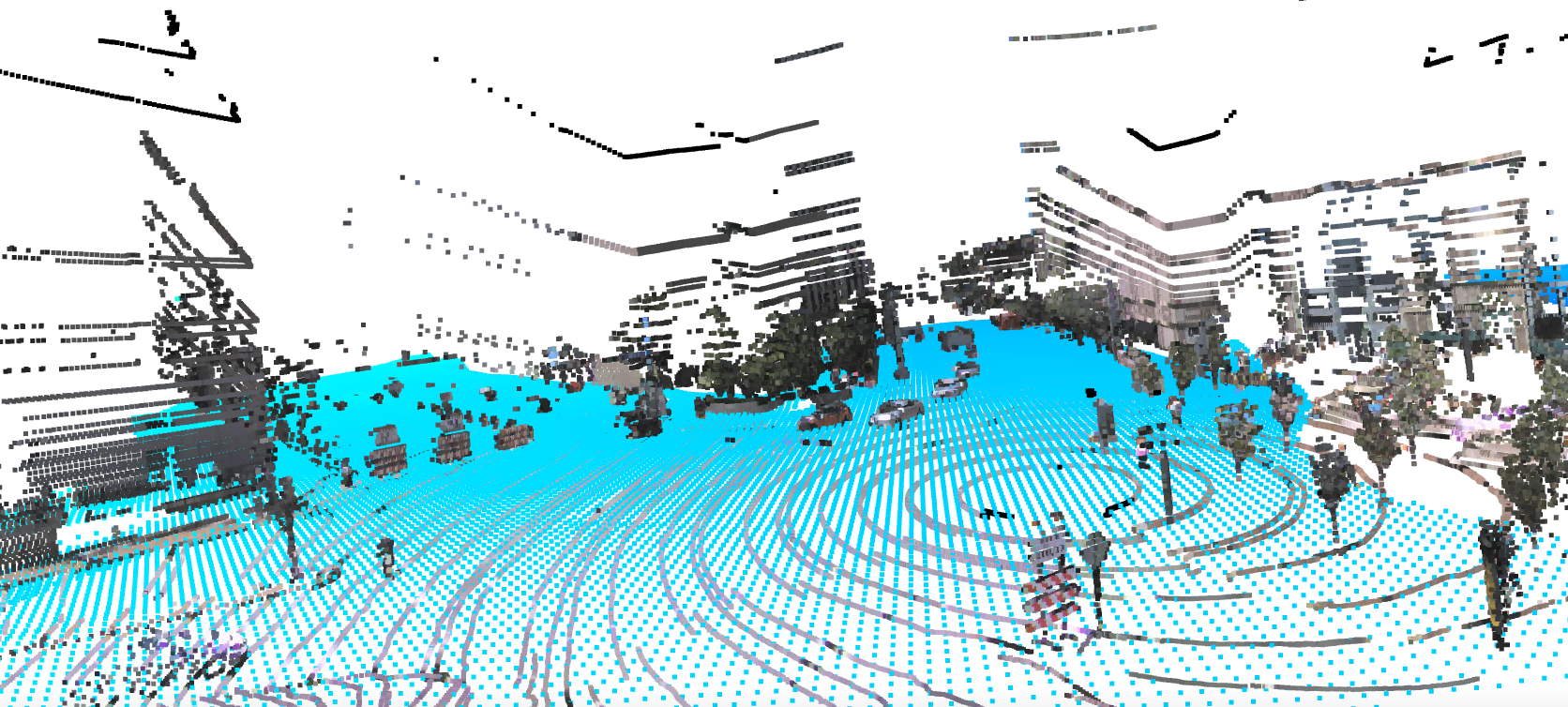

The [Argoverse](https://www.argoverse.org/) dataset is a collection of data designed to support research in autonomous driving tasks, such as 3D tracking, motion forecasting, and stereo depth estimation. Developed by Argo AI, the dataset provides a wide range of high-quality sensor data, including high-resolution images, LiDAR point clouds, and map data. |

||||

|

||||

!!! note |

||||

|

||||

The Argoverse dataset *.zip file required for training was removed from Amazon S3 after the shutdown of Argo AI by Ford, but we have made it available for manual download on [Google Drive](https://drive.google.com/file/d/1st9qW3BeIwQsnR0t8mRpvbsSWIo16ACi/view?usp=drive_link). |

||||

|

||||

## Key Features |

||||

|

||||

- Argoverse contains over 290K labeled 3D object tracks and 5 million object instances across 1,263 distinct scenes. |

||||

- The dataset includes high-resolution camera images, LiDAR point clouds, and richly annotated HD maps. |

||||

- Annotations include 3D bounding boxes for objects, object tracks, and trajectory information. |

||||

- Argoverse provides multiple subsets for different tasks, such as 3D tracking, motion forecasting, and stereo depth estimation. |

||||

|

||||

## Dataset Structure |

||||

|

||||

The Argoverse dataset is organized into three main subsets: |

||||

|

||||

1. **Argoverse 3D Tracking**: This subset contains 113 scenes with over 290K labeled 3D object tracks, focusing on 3D object tracking tasks. It includes LiDAR point clouds, camera images, and sensor calibration information. |

||||

2. **Argoverse Motion Forecasting**: This subset consists of 324K vehicle trajectories collected from 60 hours of driving data, suitable for motion forecasting tasks. |

||||

3. **Argoverse Stereo Depth Estimation**: This subset is designed for stereo depth estimation tasks and includes over 10K stereo image pairs with corresponding LiDAR point clouds for ground truth depth estimation. |

||||

|

||||

## Applications |

||||

|

||||

The Argoverse dataset is widely used for training and evaluating deep learning models in autonomous driving tasks such as 3D object tracking, motion forecasting, and stereo depth estimation. The dataset's diverse set of sensor data, object annotations, and map information make it a valuable resource for researchers and practitioners in the field of autonomous driving. |

||||

|

||||

## Dataset YAML |

||||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. For the case of the Argoverse dataset, the `Argoverse.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/Argoverse.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/Argoverse.yaml). |

||||

|

||||

!!! example "ultralytics/cfg/datasets/Argoverse.yaml" |

||||

|

||||

```yaml |

||||

--8<-- "ultralytics/cfg/datasets/Argoverse.yaml" |

||||

``` |

||||

|

||||

## Usage |

||||

|

||||

To train a YOLOv8n model on the Argoverse dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page. |

||||

|

||||

!!! example "Train Example" |

||||

|

||||

=== "Python" |

||||

|

||||

```python |

||||

from ultralytics import YOLO |

||||

|

||||

# Load a model |

||||

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training) |

||||

|

||||

# Train the model |

||||

results = model.train(data='Argoverse.yaml', epochs=100, imgsz=640) |

||||

``` |

||||

|

||||

=== "CLI" |

||||

|

||||

```bash |

||||

# Start training from a pretrained *.pt model |

||||

yolo detect train data=Argoverse.yaml model=yolov8n.pt epochs=100 imgsz=640 |

||||

``` |

||||

|

||||

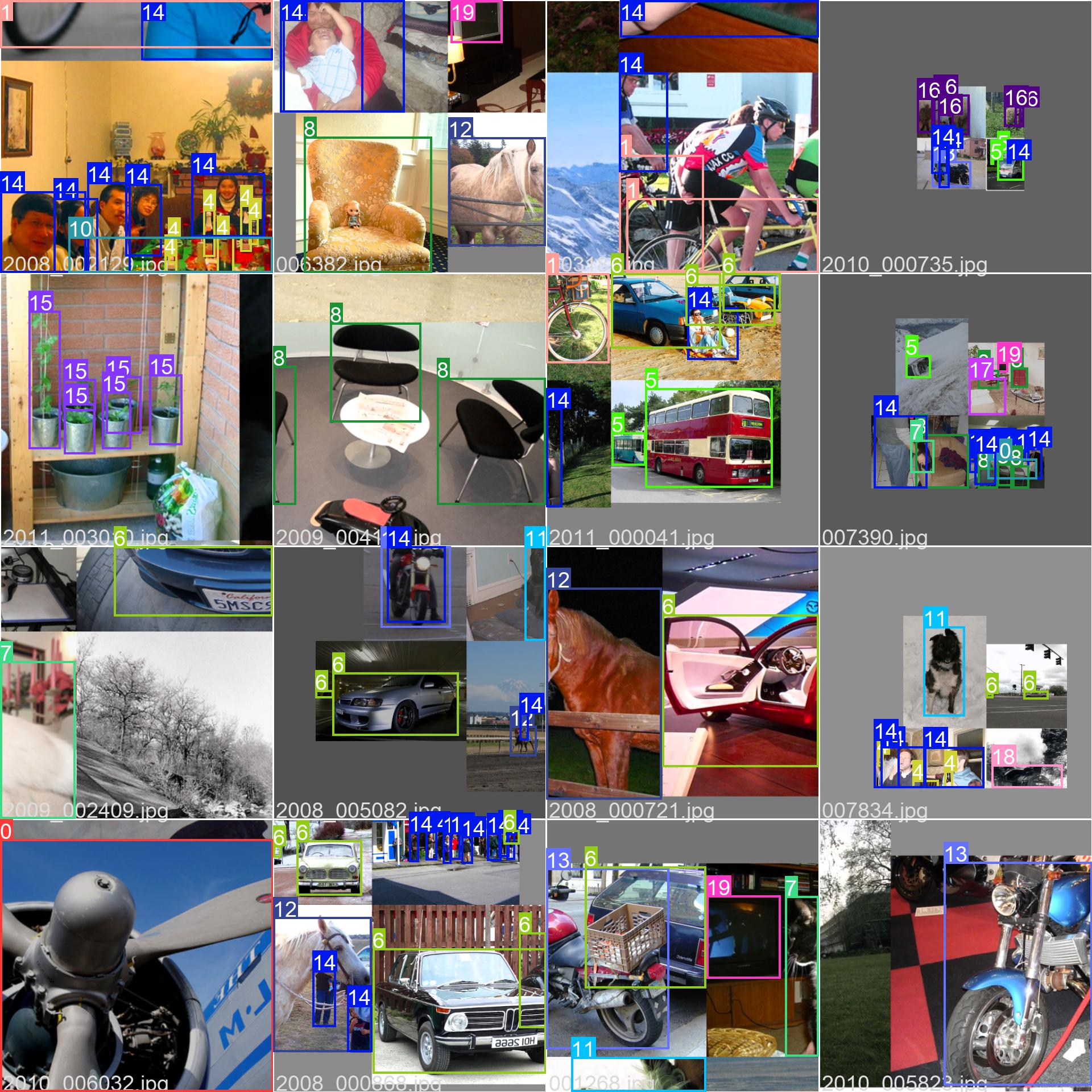

## Sample Data and Annotations |

||||

|

||||

The Argoverse dataset contains a diverse set of sensor data, including camera images, LiDAR point clouds, and HD map information, providing rich context for autonomous driving tasks. Here are some examples of data from the dataset, along with their corresponding annotations: |

||||

|

||||

|

||||

|

||||

- **Argoverse 3D Tracking**: This image demonstrates an example of 3D object tracking, where objects are annotated with 3D bounding boxes. The dataset provides LiDAR point clouds and camera images to facilitate the development of models for this task. |

||||

|

||||

The example showcases the variety and complexity of the data in the Argoverse dataset and highlights the importance of high-quality sensor data for autonomous driving tasks. |

||||

|

||||

## Citations and Acknowledgments |

||||

|

||||

If you use the Argoverse dataset in your research or development work, please cite the following paper: |

||||

|

||||

!!! note "" |

||||

|

||||

=== "BibTeX" |

||||

|

||||

```bibtex |

||||

@inproceedings{chang2019argoverse, |

||||

title={Argoverse: 3D Tracking and Forecasting with Rich Maps}, |

||||

author={Chang, Ming-Fang and Lambert, John and Sangkloy, Patsorn and Singh, Jagjeet and Bak, Slawomir and Hartnett, Andrew and Wang, Dequan and Carr, Peter and Lucey, Simon and Ramanan, Deva and others}, |

||||

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition}, |

||||

pages={8748--8757}, |

||||

year={2019} |

||||

} |

||||

``` |

||||

|

||||

We would like to acknowledge Argo AI for creating and maintaining the Argoverse dataset as a valuable resource for the autonomous driving research community. For more information about the Argoverse dataset and its creators, visit the [Argoverse dataset website](https://www.argoverse.org/). |

||||

@ -0,0 +1,94 @@ |

||||

--- |

||||

comments: true |

||||