Ultralytics Docs are the gateway to understanding and utilizing our cutting-edge machine learning tools. These documents are deployed to [https://docs.ultralytics.com](https://docs.ultralytics.com) for your convenience.

[Ultralytics](https://ultralytics.com) Docs are the gateway to understanding and utilizing our cutting-edge machine learning tools. These documents are deployed to [https://docs.ultralytics.com](https://docs.ultralytics.com) for your convenience.

2. Navigate to the cloned repository's root directory:

```bash

cd ultralytics

```

```bash

cd ultralytics

```

3. Install the package in developer mode using pip (or pip3 for Python 3):

```bash

pip install -e '.[dev]'

```

```bash

pip install -e '.[dev]'

```

- This command installs the ultralytics package along with all development dependencies, allowing you to modify the package code and have the changes immediately reflected in your Python environment.

@ -63,31 +63,31 @@ Supporting multi-language documentation? Follow these steps:

1. Stage all new language \*.md files with Git:

```bash

git add docs/**/*.md -f

```

```bash

git add docs/**/*.md -f

```

2. Build all languages to the `/site` folder, ensuring relevant root-level files are present:

```bash

# Clear existing /site directory

rm -rf site

```bash

# Clear existing /site directory

rm -rf site

# Loop through each language config file and build

mkdocs build -f docs/mkdocs.yml

for file in docs/mkdocs_*.yml; do

echo "Building MkDocs site with $file"

mkdocs build -f "$file"

done

```

# Loop through each language config file and build

mkdocs build -f docs/mkdocs.yml

for file in docs/mkdocs_*.yml; do

echo "Building MkDocs site with $file"

mkdocs build -f "$file"

done

```

3. To preview your site, initiate a simple HTTP server:

```bash

cd site

python -m http.server

# Open in your preferred browser

```

```bash

cd site

python -m http.server

# Open in your preferred browser

```

- 🖥️ Access the live site at `http://localhost:8000`.

@ -99,9 +99,10 @@ Choose a hosting provider and deployment method for your MkDocs documentation:

- Use `mkdocs deploy` to build and deploy your site.

* ### GitHub Pages Deployment Example:

```bash

mkdocs gh-deploy

```

```bash

mkdocs gh-deploy

```

- Update the "Custom domain" in your repository's settings for a personalized URL.

@ -113,8 +114,6 @@ Choose a hosting provider and deployment method for your MkDocs documentation:

We cherish the community's input as it drives Ultralytics open-source initiatives. Dive into the [Contributing Guide](https://docs.ultralytics.com/help/contributing) and share your thoughts via our [Survey](https://ultralytics.com/survey?utm_source=github&utm_medium=social&utm_campaign=Survey). A heartfelt thank you 🙏 to each contributor!

<!-- Pictorial representation of our dedicated contributor community -->

@ -38,7 +38,7 @@ This guide assumes that you already have a working Raspberry Pi OS install and h

First, we need to install the Edge TPU runtime. There are many different versions available, so you need to choose the right version for your operating system.

| Raspberry Pi OS | High frequency mode | Version to download |

@ -14,7 +14,7 @@ Every consideration regarding the data should closely align with [your project's

## Setting Up Classes and Collecting Data

Collecting images and video for a computer vision project involves defining the number of classes, sourcing data, and considering ethical implications. Before you start gathering your data, you need to be clear about:

Collecting images and video for a computer vision project involves defining the number of classes, sourcing data, and considering ethical implications. Before you start gathering your data, you need to be clear about:

### Choosing the Right Classes for Your Project

@ -31,13 +31,13 @@ Something to note is that starting with more specific classes can be very helpfu

### Sources of Data

You can use public datasets or gather your own custom data. Public datasets like those on [Kaggle](https://www.kaggle.com/datasets) and [Google Dataset Search Engine](https://datasetsearch.research.google.com/) offer well-annotated, standardized data, making them great starting points for training and validating models.

You can use public datasets or gather your own custom data. Public datasets like those on [Kaggle](https://www.kaggle.com/datasets) and [Google Dataset Search Engine](https://datasetsearch.research.google.com/) offer well-annotated, standardized data, making them great starting points for training and validating models.

Custom data collection, on the other hand, allows you to customize your dataset to your specific needs. You might capture images and videos with cameras or drones, scrape the web for images, or use existing internal data from your organization. Custom data gives you more control over its quality and relevance. Combining both public and custom data sources helps create a diverse and comprehensive dataset.

### Avoiding Bias in Data Collection

Bias occurs when certain groups or scenarios are underrepresented or overrepresented in your dataset. It leads to a model that performs well on some data but poorly on others. It's crucial to avoid bias so that your computer vision model can perform well in a variety of scenarios.

Bias occurs when certain groups or scenarios are underrepresented or overrepresented in your dataset. It leads to a model that performs well on some data but poorly on others. It's crucial to avoid bias so that your computer vision model can perform well in a variety of scenarios.

Here is how you can avoid bias while collecting data:

@ -67,7 +67,7 @@ Depending on the specific requirements of a [computer vision task](../tasks/inde

### Common Annotation Formats

After selecting a type of annotation, it's important to choose the appropriate format for storing and sharing annotations.

After selecting a type of annotation, it's important to choose the appropriate format for storing and sharing annotations.

Commonly used formats include [COCO](../datasets/detect/coco.md), which supports various annotation types like object detection, keypoint detection, stuff segmentation, panoptic segmentation, and image captioning, stored in JSON. [Pascal VOC](../datasets/detect/voc.md) uses XML files and is popular for object detection tasks. YOLO, on the other hand, creates a .txt file for each image, containing annotations like object class, coordinates, height, and width, making it suitable for object detection.

@ -84,7 +84,7 @@ Regularly reviewing and updating your labeling rules will help keep your annotat

### Popular Annotation Tools

Let's say you are ready to annotate now. There are several open-source tools available to help streamline the data annotation process. Here are some useful open annotation tools:

Let's say you are ready to annotate now. There are several open-source tools available to help streamline the data annotation process. Here are some useful open annotation tools:

- **[Label Studio](https://github.com/HumanSignal/label-studio)**: A flexible tool that supports a wide range of annotation tasks and includes features for managing projects and quality control.

- **[CVAT](https://github.com/cvat-ai/cvat)**: A powerful tool that supports various annotation formats and customizable workflows, making it suitable for complex projects.

@ -98,7 +98,7 @@ These open-source tools are budget-friendly and provide a range of features to m

### Some More Things to Consider Before Annotating Data

Before you dive into annotating your data, there are a few more things to keep in mind. You should be aware of accuracy, precision, outliers, and quality control to avoid labeling your data in a counterproductive manner.

Before you dive into annotating your data, there are a few more things to keep in mind. You should be aware of accuracy, precision, outliers, and quality control to avoid labeling your data in a counterproductive manner.

#### Understanding Accuracy and Precision

@ -135,6 +135,7 @@ While reviewing, if you find errors, correct them and update the guidelines to a

Here are some questions that might encounter while collecting and annotating data:

- **Q1:** What is active learning in the context of data annotation?

- **A1:** Active learning in data annotation is a technique where a machine learning model iteratively selects the most informative data points for labeling. This improves the model's performance with fewer labeled examples. By focusing on the most valuable data, active learning accelerates the training process and improves the model's ability to generalize from limited data.

<palign="center">

@ -142,9 +143,11 @@ Here are some questions that might encounter while collecting and annotating dat

</p>

- **Q2:** How does automated annotation work?

- **A2:** Automated annotation uses pre-trained models and algorithms to label data without needing human effort. These models, which have been trained on large datasets, can identify patterns and features in new data. Techniques like transfer learning adjust these models for specific tasks, and active learning helps by selecting the most useful data points for labeling. However, this approach is only possible in certain cases where the model has been trained on sufficiently similar data and tasks.

- **Q3:** How many images do I need to collect for [YOLOv8 custom training](../modes/train.md)?

- **A3:** For transfer learning and object detection, a good general rule of thumb is to have a minimum of a few hundred annotated objects per class. However, when training a model to detect just one class, it is advisable to start with at least 100 annotated images and train for around 100 epochs. For complex tasks, you may need thousands of images per class to achieve reliable model performance.

@ -63,9 +63,11 @@ Other tasks, like [object detection](../tasks/detect.md), are not suitable as th

The order of model selection, dataset preparation, and training approach depends on the specifics of your project. Here are a few tips to help you decide:

- **Clear Understanding of the Problem**: If your problem and objectives are well-defined, start with model selection. Then, prepare your dataset and decide on the training approach based on the model's requirements.

- **Example**: Start by selecting a model for a traffic monitoring system that estimates vehicle speeds. Choose an object tracking model, gather and annotate highway videos, and then train the model with techniques for real-time video processing.

- **Unique or Limited Data**: If your project is constrained by unique or limited data, begin with dataset preparation. For instance, if you have a rare dataset of medical images, annotate and prepare the data first. Then, select a model that performs well on such data, followed by choosing a suitable training approach.

- **Example**: Prepare the data first for a facial recognition system with a small dataset. Annotate it, then select a model that works well with limited data, such as a pre-trained model for transfer learning. Finally, decide on a training approach, including data augmentation, to expand the dataset.

- **Need for Experimentation**: In projects where experimentation is crucial, start with the training approach. This is common in research projects where you might initially test different training techniques. Refine your model selection after identifying a promising method and prepare the dataset based on your findings.

@ -118,6 +120,7 @@ Here are some questions that might encounter while defining your computer vision

</p>

- **Q2:** Can the scope of a computer vision project change after the problem statement is defined?

- **A2:** Yes, the scope of a computer vision project can change as new information becomes available or as project requirements evolve. It's important to regularly review and adjust the problem statement and objectives to reflect any new insights or changes in project direction.

- **Q3:** What are some common challenges in defining the problem for a computer vision project?

@ -130,7 +133,7 @@ Connecting with other computer vision enthusiasts can be incredibly helpful for

### Community Support Channels

- **GitHub Issues:** Head over to the YOLOv8 GitHub repository. You can use the [Issues tab](https://github.com/ultralytics/ultralytics/issues) to raise questions, report bugs, and suggest features. The community and maintainers can assist with specific problems you encounter.

- **Ultralytics Discord Server:** Become part of the [Ultralytics Discord server](https://ultralytics.com/discord/). Connect with fellow users and developers, seek support, exchange knowledge, and discuss ideas.

- **Ultralytics Discord Server:** Become part of the [Ultralytics Discord server](https://ultralytics.com/discord/). Connect with fellow users and developers, seek support, exchange knowledge, and discuss ideas.

@ -44,7 +44,7 @@ Here's a compilation of in-depth guides to help you master different aspects of

- [OpenVINO Latency vs Throughput Modes](optimizing-openvino-latency-vs-throughput-modes.md) - Learn latency and throughput optimization techniques for peak YOLO inference performance.

- [Steps of a Computer Vision Project ](steps-of-a-cv-project.md) 🚀 NEW: Learn about the key steps involved in a computer vision project, including defining goals, selecting models, preparing data, and evaluating results.

- [Defining A Computer Vision Project's Goals](defining-project-goals.md) 🚀 NEW: Walk through how to effectively define clear and measurable goals for your computer vision project. Learn the importance of a well-defined problem statement and how it creates a roadmap for your project.

- - [Data Collection and Annotation](data-collection-and-annotation.md)🚀 NEW: Explore the tools, techniques, and best practices for collecting and annotating data to create high-quality inputs for your computer vision models.

- [Data Collection and Annotation](data-collection-and-annotation.md)🚀 NEW: Explore the tools, techniques, and best practices for collecting and annotating data to create high-quality inputs for your computer vision models.

- [Preprocessing Annotated Data](preprocessing_annotated_data.md)🚀 NEW: Learn about preprocessing and augmenting image data in computer vision projects using YOLOv8, including normalization, dataset augmentation, splitting, and exploratory data analysis (EDA).

@ -14,7 +14,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

## Recipe Walk Through

1. Begin with the necessary imports

1. Begin with the necessary imports

```python

from pathlib import Path

@ -31,7 +31,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

***

2. Load a model and run `predict()` method on a source.

2. Load a model and run `predict()` method on a source.

```python

from ultralytics import YOLO

@ -58,7 +58,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

***

3. Now iterate over the results and the contours. For workflows that want to save an image to file, the source image `base-name` and the detection `class-label` are retrieved for later use (optional).

3. Now iterate over the results and the contours. For workflows that want to save an image to file, the source image `base-name` and the detection `class-label` are retrieved for later use (optional).

```{ .py .annotate }

# (2) Iterate detection results (helpful for multiple images)

@ -81,7 +81,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

***

4. Start with generating a binary mask from the source image and then draw a filled contour onto the mask. This will allow the object to be isolated from the other parts of the image. An example from `bus.jpg` for one of the detected `person` class objects is shown on the right.

4. Start with generating a binary mask from the source image and then draw a filled contour onto the mask. This will allow the object to be isolated from the other parts of the image. An example from `bus.jpg` for one of the detected `person` class objects is shown on the right.

@ -140,7 +140,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

***

5. Next there are 2 options for how to move forward with the image from this point and a subsequent option for each.

5. Next there are 2 options for how to move forward with the image from this point and a subsequent option for each.

### Object Isolation Options

@ -251,7 +251,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

***

6. <u>What to do next is entirely left to you as the developer.</u> A basic example of one possible next step (saving the image to file for future use) is shown.

6. <u>What to do next is entirely left to you as the developer.</u> A basic example of one possible next step (saving the image to file for future use) is shown.

- **NOTE:** this step is optional and can be skipped if not required for your specific use case.

@ -29,7 +29,7 @@ Without further ado, let's dive in!

- It includes 6 class labels, each with its total instance counts listed below.

| Class Label | Instance Count |

|:------------|:--------------:|

| :---------- | :------------: |

| Apple | 7049 |

| Grapes | 7202 |

| Pineapple | 1613 |

@ -173,7 +173,7 @@ The rows index the label files, each corresponding to an image in your dataset,

fold_lbl_distrb.loc[f"split_{n}"] = ratio

```

The ideal scenario is for all class ratios to be reasonably similar for each split and across classes. This, however, will be subject to the specifics of your dataset.

The ideal scenario is for all class ratios to be reasonably similar for each split and across classes. This, however, will be subject to the specifics of your dataset.

4. Next, we create the directories and dataset YAML files for each split.

@ -219,7 +219,7 @@ The rows index the label files, each corresponding to an image in your dataset,

5. Lastly, copy images and labels into the respective directory ('train' or 'val') for each split.

- __NOTE:__ The time required for this portion of the code will vary based on the size of your dataset and your system hardware.

- **NOTE:** The time required for this portion of the code will vary based on the size of your dataset and your system hardware.

@ -263,7 +263,7 @@ NCNN is a high-performance neural network inference framework optimized for the

The following table provides a snapshot of the various deployment options available for YOLOv8 models, helping you to assess which may best fit your project needs based on several critical criteria. For an in-depth look at each deployment option's format, please see the [Ultralytics documentation page on export formats](../modes/export.md#export-formats).

| Deployment Option | Performance Benchmarks | Compatibility and Integration | Community Support and Ecosystem | Case Studies | Maintenance and Updates | Security Considerations | Hardware Acceleration |

| PyTorch | Good flexibility; may trade off raw performance | Excellent with Python libraries | Extensive resources and community | Research and prototypes | Regular, active development | Dependent on deployment environment | CUDA support for GPU acceleration |

| TorchScript | Better for production than PyTorch | Smooth transition from PyTorch to C++ | Specialized but narrower than PyTorch | Industry where Python is a bottleneck | Consistent updates with PyTorch | Improved security without full Python | Inherits CUDA support from PyTorch |

| ONNX | Variable depending on runtime | High across different frameworks | Broad ecosystem, supported by many orgs | Flexibility across ML frameworks | Regular updates for new operations | Ensure secure conversion and deployment practices | Various hardware optimizations |

@ -22,14 +22,14 @@ NVIDIA Jetson is a series of embedded computing boards designed to bring acceler

[Jetson Orin](https://www.nvidia.com/en-us/autonomous-machines/embedded-systems/jetson-orin/) is the latest iteration of the NVIDIA Jetson family based on NVIDIA Ampere architecture which brings drastically improved AI performance when compared to the previous generations. Below table compared few of the Jetson devices in the ecosystem.

| | Jetson AGX Orin 64GB | Jetson Orin NX 16GB | Jetson Orin Nano 8GB | Jetson AGX Xavier | Jetson Xavier NX | Jetson Nano |

For a more detailed comparison table, please visit the **Technical Specifications** section of [official NVIDIA Jetson page](https://developer.nvidia.com/embedded/jetson-modules).

|  |  |

| Conveyor Belt Packets Counting Using Ultralytics YOLOv8 | Fish Counting in Sea using Ultralytics YOLOv8 |

@ -89,7 +89,7 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

video_writer.release()

cv2.destroyAllWindows()

```

=== "Count in Polygon"

```python

@ -131,7 +131,7 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

video_writer.release()

cv2.destroyAllWindows()

```

=== "Count in Line"

```python

@ -225,7 +225,7 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

Here's a table with the `ObjectCounter` arguments:

|  |

| Suitcases Cropping at airport conveyor belt using Ultralytics YOLOv8 |

| Suitcases Cropping at airport conveyor belt using Ultralytics YOLOv8 |

!!! Example "Object Cropping using YOLOv8 Example"

@ -84,7 +84,7 @@ Object cropping with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

| `source` | `str` | `'ultralytics/assets'` | Specifies the data source for inference. Can be an image path, video file, directory, URL, or device ID for live feeds. Supports a wide range of formats and sources, enabling flexible application across different types of input. |

| `conf` | `float` | `0.25` | Sets the minimum confidence threshold for detections. Objects detected with confidence below this threshold will be disregarded. Adjusting this value can help reduce false positives. |

| `iou` | `float` | `0.7` | Intersection Over Union (IoU) threshold for Non-Maximum Suppression (NMS). Lower values result in fewer detections by eliminating overlapping boxes, useful for reducing duplicates. |

@ -8,7 +8,7 @@ keywords: data preprocessing, computer vision, image resizing, normalization, da

## Introduction

After you've defined your computer vision [project's goals](./defining-project-goals.md) and [collected and annotated data](./data-collection-and-annotation.md), the next step is to preprocess annotated data and prepare it for model training. Clean and consistent data are vital to creating a model that performs well.

After you've defined your computer vision [project's goals](./defining-project-goals.md) and [collected and annotated data](./data-collection-and-annotation.md), the next step is to preprocess annotated data and prepare it for model training. Clean and consistent data are vital to creating a model that performs well.

Preprocessing is a step in the [computer vision project workflow](./steps-of-a-cv-project.md) that includes resizing images, normalizing pixel values, augmenting the dataset, and splitting the data into training, validation, and test sets. Let's explore the essential techniques and best practices for cleaning your data!

@ -22,7 +22,7 @@ We are already collecting and annotating our data carefully with multiple consid

## Data Preprocessing Techniques

One of the first and foremost steps in data preprocessing is resizing. Some models are designed to handle variable input sizes, but many models require a consistent input size. Resizing images makes them uniform and reduces computational complexity.

One of the first and foremost steps in data preprocessing is resizing. Some models are designed to handle variable input sizes, but many models require a consistent input size. Resizing images makes them uniform and reduces computational complexity.

### Resizing Images

@ -31,12 +31,12 @@ You can resize your images using the following methods:

- **Bilinear Interpolation**: Smooths pixel values by taking a weighted average of the four nearest pixel values.

- **Nearest Neighbor**: Assigns the nearest pixel value without averaging, leading to a blocky image but faster computation.

To make resizing a simpler task, you can use the following tools:

To make resizing a simpler task, you can use the following tools:

- **OpenCV**: A popular computer vision library with extensive functions for image processing.

- **PIL (Pillow)**: A Python Imaging Library for opening, manipulating, and saving image files.

With respect to YOLOv8, the 'imgsz' parameter during [model training](../modes/train.md) allows for flexible input sizes. When set to a specific size, such as 640, the model will resize input images so their largest dimension is 640 pixels while maintaining the original aspect ratio.

With respect to YOLOv8, the 'imgsz' parameter during [model training](../modes/train.md) allows for flexible input sizes. When set to a specific size, such as 640, the model will resize input images so their largest dimension is 640 pixels while maintaining the original aspect ratio.

By evaluating your model's and dataset's specific needs, you can determine whether resizing is a necessary preprocessing step or if your model can efficiently handle images of varying sizes.

@ -47,16 +47,16 @@ Another preprocessing technique is normalization. Normalization scales the pixel

- **Min-Max Scaling**: Scales pixel values to a range of 0 to 1.

- **Z-Score Normalization**: Scales pixel values based on their mean and standard deviation.

With respect to YOLOv8, normalization is seamlessly handled as part of its preprocessing pipeline during model training. YOLOv8 automatically performs several preprocessing steps, including conversion to RGB, scaling pixel values to the range [0, 1], and normalization using predefined mean and standard deviation values.

With respect to YOLOv8, normalization is seamlessly handled as part of its preprocessing pipeline during model training. YOLOv8 automatically performs several preprocessing steps, including conversion to RGB, scaling pixel values to the range [0, 1], and normalization using predefined mean and standard deviation values.

### Splitting the Dataset

Once you've cleaned the data, you are ready to split the dataset. Splitting the data into training, validation, and test sets is done to ensure that the model can be evaluated on unseen data to assess its generalization performance. A common split is 70% for training, 20% for validation, and 10% for testing. There are various tools and libraries that you can use to split your data like scikit-learn or TensorFlow.

Once you've cleaned the data, you are ready to split the dataset. Splitting the data into training, validation, and test sets is done to ensure that the model can be evaluated on unseen data to assess its generalization performance. A common split is 70% for training, 20% for validation, and 10% for testing. There are various tools and libraries that you can use to split your data like scikit-learn or TensorFlow.

Consider the following when splitting your dataset:

- **Maintaining Data Distribution**: Ensure that the data distribution of classes is maintained across training, validation, and test sets.

- **Avoiding Data Leakage**: Typically, data augmentation is done after the dataset is split. Data augmentation and any other preprocessing should only be applied to the training set to prevent information from the validation or test sets from influencing the model training.

-**Balancing Classes**: For imbalanced datasets, consider techniques such as oversampling the minority class or under-sampling the majority class within the training set.

- **Avoiding Data Leakage**: Typically, data augmentation is done after the dataset is split. Data augmentation and any other preprocessing should only be applied to the training set to prevent information from the validation or test sets from influencing the model training. -**Balancing Classes**: For imbalanced datasets, consider techniques such as oversampling the minority class or under-sampling the majority class within the training set.

### What is Data Augmentation?

@ -89,13 +89,13 @@ Also, you can adjust the intensity of these augmentation techniques through spec

## A Case Study of Preprocessing

Consider a project aimed at developing a model to detect and classify different types of vehicles in traffic images using YOLOv8. We've collected traffic images and annotated them with bounding boxes and labels.

Consider a project aimed at developing a model to detect and classify different types of vehicles in traffic images using YOLOv8. We've collected traffic images and annotated them with bounding boxes and labels.

Here's what each step of preprocessing would look like for this project:

- Resizing Images: Since YOLOv8 handles flexible input sizes and performs resizing automatically, manual resizing is not required. The model will adjust the image size according to the specified 'imgsz' parameter during training.

- Normalizing Pixel Values: YOLOv8 automatically normalizes pixel values to a range of 0 to 1 during preprocessing, so it's not required.

- Splitting the Dataset: Divide the dataset into training (70%), validation (20%), and test (10%) sets using tools like scikit-learn.

- Splitting the Dataset: Divide the dataset into training (70%), validation (20%), and test (10%) sets using tools like scikit-learn.

- Data Augmentation: Modify the dataset configuration file (.yaml) to include data augmentation techniques such as random crops, horizontal flips, and brightness adjustments.

These steps make sure the dataset is prepared without any potential issues and is ready for Exploratory Data Analysis (EDA).

@ -104,11 +104,11 @@ These steps make sure the dataset is prepared without any potential issues and i

After preprocessing and augmenting your dataset, the next step is to gain insights through Exploratory Data Analysis. EDA uses statistical techniques and visualization tools to understand the patterns and distributions in your data. You can identify issues like class imbalances or outliers and make informed decisions about further data preprocessing or model training adjustments.

### Statistical EDA Techniques

### Statistical EDA Techniques

Statistical techniques often begin with calculating basic metrics such as mean, median, standard deviation, and range. These metrics provide a quick overview of your image dataset's properties, such as pixel intensity distributions. Understanding these basic statistics helps you grasp the overall quality and characteristics of your data, allowing you to spot any irregularities early on.

### Visual EDA Techniques

### Visual EDA Techniques

Visualizations are key in EDA for image datasets. For example, class imbalance analysis is another vital aspect of EDA. It helps determine if certain classes are underrepresented in your dataset, Visualizing the distribution of different image classes or categories using bar charts can quickly reveal any imbalances. Similarly, outliers can be identified using visualization tools like box plots, which highlight anomalies in pixel intensity or feature distributions. Outlier detection prevents unusual data points from skewing your results.

@ -131,9 +131,11 @@ For a more advanced approach to EDA, you can use the Ultralytics Explorer tool.

Here are some questions that might come up while you prepare your dataset:

- **Q1:** How much preprocessing is too much?

- **A1:** Preprocessing is essential but should be balanced. Overdoing it can lead to loss of critical information, overfitting, increased complexity, and higher computational costs. Focus on necessary steps like resizing, normalization, and basic augmentation, adjusting based on model performance.

- **Q2:** What are the common pitfalls in EDA?

- **A2:** Common pitfalls in Exploratory Data Analysis (EDA) include ignoring data quality issues like missing values and outliers, confirmation bias, overfitting visualizations, neglecting data distribution, and overlooking correlations. A systematic approach helps gain accurate and valuable insights.

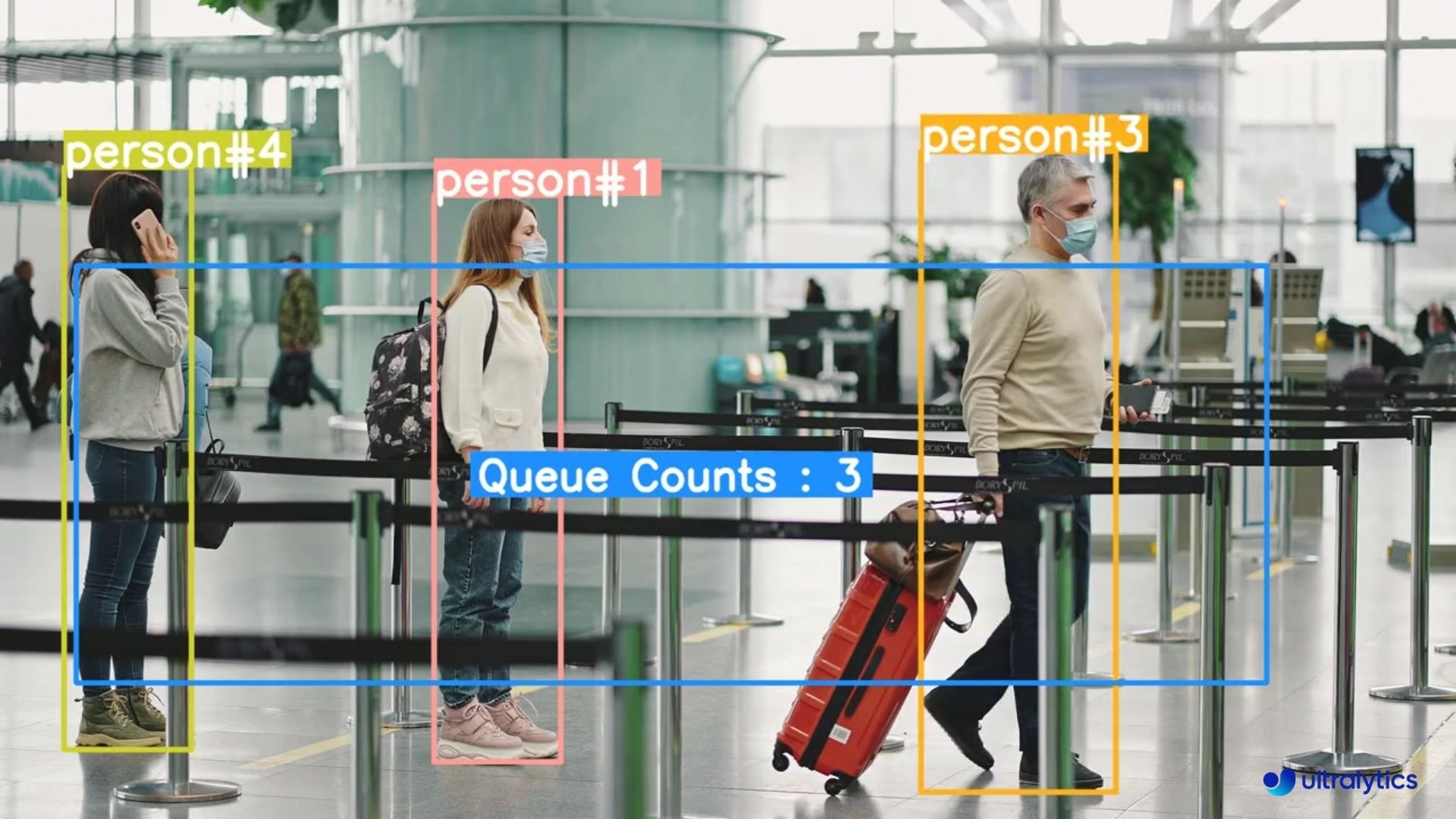

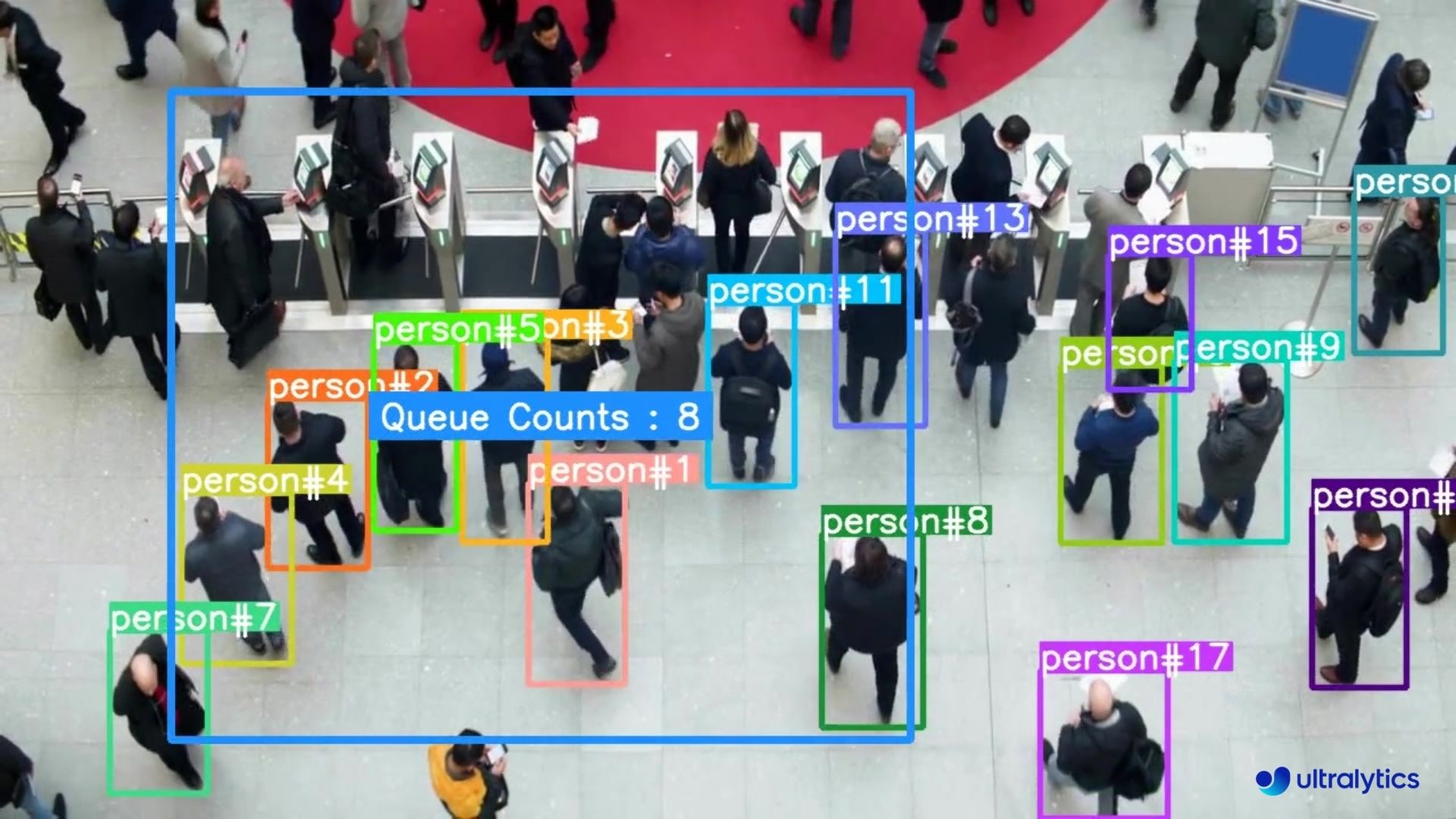

|  |  |

| Queue management at airport ticket counter Using Ultralytics YOLOv8 | Queue monitoring in crowd Ultralytics YOLOv8 |

@ -115,7 +115,7 @@ Queue management using [Ultralytics YOLOv8](https://github.com/ultralytics/ultra

| Max Power Draw | 2.5A@5V | 3A@5V | 5A@5V (PD enabled) |

## What is Raspberry Pi OS?

@ -190,7 +190,7 @@ The below table represents the benchmark results for two different models (YOLOv

| TF Lite | ✅ | 42.8 | 0.7136 | 1013.27 |

| PaddlePaddle | ✅ | 85.5 | 0.7136 | 1560.23 |

| NCNN | ✅ | 42.7 | 0.7204 | 211.26 |

=== "YOLOv8n on RPi4"

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

@ -267,7 +267,7 @@ rpicam-hello

!!! Tip

Learn more about [`rpicam-hello` usage on official Raspberry Pi documentation](https://www.raspberrypi.com/documentation/computers/camera_software.html#rpicam-hello)

Learn more about [`rpicam-hello` usage on official Raspberry Pi documentation](https://www.raspberrypi.com/documentation/computers/camera_software.html#rpicam-hello)

### Inference with Camera

@ -329,7 +329,7 @@ There are 2 methods of using the Raspberry Pi Camera to inference YOLOv8 models.

Learn more about [`rpicam-vid` usage on official Raspberry Pi documentation](https://www.raspberrypi.com/documentation/computers/camera_software.html#rpicam-vid)

Learn more about [`rpicam-vid` usage on official Raspberry Pi documentation](https://www.raspberrypi.com/documentation/computers/camera_software.html#rpicam-vid)

|  |  |

| People Counting in Different Region using Ultralytics YOLOv8 | Crowd Counting in Different Region using Ultralytics YOLOv8 |

|  |  |

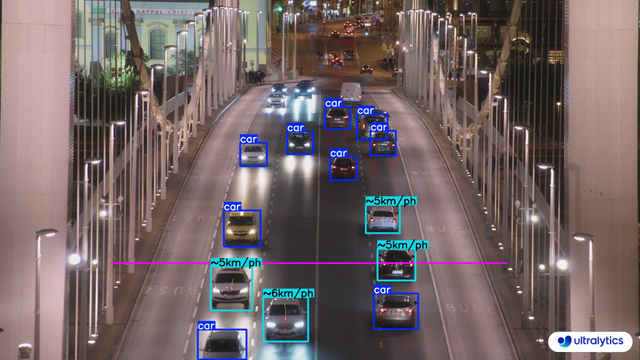

| Speed Estimation on Road using Ultralytics YOLOv8 | Speed Estimation on Bridge using Ultralytics YOLOv8 |

@ -41,9 +41,11 @@ The first step in any computer vision project is clearly defining the problem yo

Here are some examples of project objectives and the computer vision tasks that can be used to reach these objectives:

- **Objective:** To develop a system that can monitor and manage the flow of different vehicle types on highways, improving traffic management and safety.

- **Computer Vision Task:** Object detection is ideal for traffic monitoring because it efficiently locates and identifies multiple vehicles. It is less computationally demanding than image segmentation, which provides unnecessary detail for this task, ensuring faster, real-time analysis.

- **Objective:** To develop a tool that assists radiologists by providing precise, pixel-level outlines of tumors in medical imaging scans.

- **Computer Vision Task:** Image segmentation is suitable for medical imaging because it provides accurate and detailed boundaries of tumors that are crucial for assessing size, shape, and treatment planning.

- **Objective:** To create a digital system that categorizes various documents (e.g., invoices, receipts, legal paperwork) to improve organizational efficiency and document retrieval.

@ -103,7 +105,7 @@ After splitting your data, you can perform data augmentation by applying transfo

<palign="center">

<imgwidth="100%"src="https://www.labellerr.com/blog/content/images/size/w2000/2022/11/banner-data-augmentation--1-.webp"alt="Examples of Data Augmentations">

</p>

</p>

Libraries like OpenCV, Albumentations, and TensorFlow offer flexible augmentation functions that you can use. Additionally, some libraries, such as Ultralytics, have [built-in augmentation settings](../modes/train.md) directly within its model training function, simplifying the process.

@ -111,7 +113,7 @@ To understand your data better, you can use tools like [Matplotlib](https://matp

<palign="center">

<imgwidth="100%"src="https://github.com/ultralytics/ultralytics/assets/15766192/feb1fe05-58c5-4173-a9ff-e611e3bba3d0"alt="The Ultralytics Explorer Tool">

</p>

</p>

By properly [understanding, splitting, and augmenting your data](./preprocessing_annotated_data.md), you can develop a well-trained, validated, and tested model that performs well in real-world applications.

@ -165,7 +167,7 @@ Monitoring tools can help you track key performance indicators (KPIs) and detect

In addition to monitoring and maintenance, documentation is also key. Thoroughly document the entire process, including model architecture, training procedures, hyperparameters, data preprocessing steps, and any changes made during deployment and maintenance. Good documentation ensures reproducibility and makes future updates or troubleshooting easier. By effectively monitoring, maintaining, and documenting your model, you can ensure it remains accurate, reliable, and easy to manage over its lifecycle.

@ -174,15 +176,19 @@ In addition to monitoring and maintenance, documentation is also key. Thoroughly

Here are some common questions that might arise during a computer vision project:

- **Q1:** How do the steps change if I already have a dataset or data when starting a computer vision project?

- **A1:** Starting with a pre-existing dataset or data affects the initial steps of your project. In Step 1, along with deciding the computer vision task and model, you'll also need to explore your dataset thoroughly. Understanding its quality, variety, and limitations will guide your choice of model and training approach. Your approach should align closely with the data's characteristics for more effective outcomes. Depending on your data or dataset, you may be able to skip Step 2 as well.

- **Q2:** I'm not sure what computer vision project to start my AI learning journey with.

- **A2:** Check out our [guides on Real-World Projects](./index.md) for inspiration and guidance.

- **Q2:** I'm not sure what computer vision project to start my AI learning journey with.

- **A2:** Check out our [guides on Real-World Projects](./index.md) for inspiration and guidance.

- **Q3:** I don't want to train a model. I just want to try running a model on an image. How can I do that?

- **A3:** You can use a pre-trained model to run predictions on an image without training a new model. Check out the [YOLOv8 predict docs page](../modes/predict.md) for instructions on how to use a pre-trained YOLOv8 model to make predictions on your images.

- **Q4:** Where can I find more detailed articles and updates about computer vision applications and YOLOv8?

- **A4:** For more detailed articles, updates, and insights about computer vision applications and YOLOv8, visit the [Ultralytics blog page](https://www.ultralytics.com/blog). The blog covers a wide range of topics and provides valuable information to help you stay updated and improve your projects.

@ -51,7 +51,7 @@ By integrating with Codecov, we aim to maintain and improve the quality of our c

To quickly get a glimpse of the code coverage status of the `ultralytics` python package, we have included a badge and sunburst visual of the `ultralytics` coverage results. These images show the percentage of code covered by our tests, offering an at-a-glance metric of our testing efforts. For full details please see https://codecov.io/github/ultralytics/ultralytics.

In the sunburst graphic below, the innermost circle is the entire project, moving away from the center are folders then, finally, a single file. The size and color of each slice is representing the number of statements and the coverage, respectively.

@ -61,7 +61,7 @@ The Apple Neural Engine (ANE) is a dedicated hardware component integrated into

By combining quantized YOLO models with the Apple Neural Engine, the Ultralytics iOS App achieves real-time object detection on your iOS device without compromising on accuracy or performance.

| Release Year | iPhone Name | Chipset Name | Node Size | ANE TOPs |

<ahref="https://github.com/ultralytics/hub/actions/workflows/ci.yaml"><imgsrc="https://github.com/ultralytics/hub/actions/workflows/ci.yaml/badge.svg"alt="CI CPU"></a><ahref="https://colab.research.google.com/github/ultralytics/hub/blob/main/hub.ipynb"><imgsrc="https://colab.research.google.com/assets/colab-badge.svg"alt="Open In Colab"></a><ahref="https://ultralytics.com/discord"><imgalt="Discord"src="https://img.shields.io/discord/1089800235347353640?logo=discord&logoColor=white&label=Discord&color=blue"></a>

</div>

👋 Hello from the [Ultralytics](https://ultralytics.com/) Team! We've been working hard these last few months to launch [Ultralytics HUB](https://bit.ly/ultralytics_hub), a new web tool for training and deploying all your YOLOv5 and YOLOv8 🚀 models from one spot!

@ -47,7 +48,6 @@ We hope that the resources here will help you get the most out of HUB. Please br

[Ultralytics HUB](https://bit.ly/ultralytics_hub) is designed to be user-friendly and intuitive, allowing users to quickly upload their datasets and train new YOLO models. It also offers a range of pre-trained models to choose from, making it extremely easy for users to get started. Once a model is trained, it can be effortlessly previewed in the [Ultralytics HUB App](app/index.md) before being deployed for real-time classification, object detection, and instance segmentation tasks.

@ -23,9 +23,9 @@ This Gradio interface provides an easy and interactive way to perform object det

## Why Use Gradio for Object Detection?

***User-Friendly Interface:** Gradio offers a straightforward platform for users to upload images and visualize detection results without any coding requirement.

***Real-Time Adjustments:** Parameters such as confidence and IoU thresholds can be adjusted on the fly, allowing for immediate feedback and optimization of detection results.

***Broad Accessibility:** The Gradio web interface can be accessed by anyone, making it an excellent tool for demonstrations, educational purposes, and quick experiments.

-**User-Friendly Interface:** Gradio offers a straightforward platform for users to upload images and visualize detection results without any coding requirement.

-**Real-Time Adjustments:** Parameters such as confidence and IoU thresholds can be adjusted on the fly, allowing for immediate feedback and optimization of detection results.

-**Broad Accessibility:** The Gradio web interface can be accessed by anyone, making it an excellent tool for demonstrations, educational purposes, and quick experiments.

<palign="center">

<imgwidth="800"alt="Gradio example screenshot"src="https://github.com/RizwanMunawar/ultralytics/assets/26833433/52ee3cd2-ac59-4c27-9084-0fd05c6c33be">

@ -41,14 +41,14 @@ pip install gradio

1. **Upload Image:** Click on 'Upload Image' to choose an image file for object detection.

2. **Adjust Parameters:**

* **Confidence Threshold:** Slider to set the minimum confidence level for detecting objects.

* **IoU Threshold:** Slider to set the IoU threshold for distinguishing different objects.

- **Confidence Threshold:** Slider to set the minimum confidence level for detecting objects.

- **IoU Threshold:** Slider to set the IoU threshold for distinguishing different objects.

3. **View Results:** The processed image with detected objects and their labels will be displayed.

## Example Use Cases

***Sample Image 1:** Bus detection with default thresholds.

***Sample Image 2:** Detection on a sports image with default thresholds.

-**Sample Image 1:** Bus detection with default thresholds.

-**Sample Image 2:** Detection on a sports image with default thresholds.

Explore the links to learn more about each integration and how to get the most out of them with Ultralytics. See full `export` details in the [Export](../modes/export.md) page.

Or use the `project=<project>` argument when training a YOLO model, i.e. `yolo train project=my_project`.

Or use the `project=<project>` argument when training a YOLO model, i.e. `yolo train project=my_project`.

2. **Set a Run Name**: Similar to setting a project name, you can set the run name via an environment variable:

@ -76,7 +76,7 @@ Make sure that MLflow logging is enabled in Ultralytics settings. Usually, this

export MLFLOW_RUN=<your_run_name>

```

Or use the `name=<name>` argument when training a YOLO model, i.e. `yolo train project=my_project name=my_name`.

Or use the `name=<name>` argument when training a YOLO model, i.e. `yolo train project=my_project name=my_name`.

3. **Start Local MLflow Server**: To start tracking, use:

@ -84,7 +84,7 @@ Make sure that MLflow logging is enabled in Ultralytics settings. Usually, this

mlflow server --backend-store-uri runs/mlflow'

```

This will start a local server at http://127.0.0.1:5000 by default and save all mlflow logs to the 'runs/mlflow' directory. To specify a different URI, set the `MLFLOW_TRACKING_URI` environment variable.

This will start a local server at http://127.0.0.1:5000 by default and save all mlflow logs to the 'runs/mlflow' directory. To specify a different URI, set the `MLFLOW_TRACKING_URI` environment variable.

4. **Kill MLflow Server Instances**: To stop all running MLflow instances, run:

This table represents the benchmark results for five different models (YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, YOLOv8x) across four different formats (PyTorch, TorchScript, ONNX, OpenVINO), giving us the status, size, mAP50-95(B) metric, and inference time for each combination.

@ -157,27 +157,27 @@ Benchmarks below run on Intel® Arc 770 GPU at FP32 precision.

</div>

| Model | Format | Status | Size (MB) | metrics/mAP50-95(B) | Inference time (ms/im) |

Developed by Baidu, [PaddlePaddle](https://www.paddlepaddle.org.cn/en) (**PArallel **D**istributed **D**eep **LE**arning) is China's first open-source deep learning platform. Unlike some frameworks built mainly for research, PaddlePaddle prioritizes ease of use and smooth integration across industries.

Developed by Baidu, [PaddlePaddle](https://www.paddlepaddle.org.cn/en) (**PA**rallel **D**istributed **D**eep **LE**arning) is China's first open-source deep learning platform. Unlike some frameworks built mainly for research, PaddlePaddle prioritizes ease of use and smooth integration across industries.

It offers tools and resources similar to popular frameworks like TensorFlow and PyTorch, making it accessible for developers of all experience levels. From farming and factories to service businesses, PaddlePaddle's large developer community of over 4.77 million is helping create and deploy AI applications.

@ -44,7 +44,7 @@ PaddlePaddle provides a range of options, each offering a distinct balance of ea

- **Paddle Lite**: Paddle Lite is designed for deployment on mobile and embedded devices where resources are limited. It optimizes models for smaller sizes and faster inference on ARM CPUs, GPUs, and other specialized hardware.

- **Paddle.js**: Paddle.js enables you to deploy PaddlePaddle models directly within web browsers. Paddle.js can either load a pre-trained model or transform a model from [paddle-hub](https://github.com/PaddlePaddle/PaddleHub) with model transforming tools provided by Paddle.js. It can run in browsers that support WebGL/WebGPU/WebAssembly.

- **Paddle.js**: Paddle.js enables you to deploy PaddlePaddle models directly within web browsers. Paddle.js can either load a pre-trained model or transform a model from [paddle-hub](https://github.com/PaddlePaddle/PaddleHub) with model transforming tools provided by Paddle.js. It can run in browsers that support WebGL/WebGPU/WebAssembly.

## Export to PaddlePaddle: Converting Your YOLOv8 Model

@ -57,7 +57,7 @@ To install the required package, run:

@ -61,7 +61,7 @@ To install the required packages, run:

The `tune()` method in YOLOv8 provides an easy-to-use interface for hyperparameter tuning with Ray Tune. It accepts several arguments that allow you to customize the tuning process. Below is a detailed explanation of each parameter:

| Parameter | Type | Description | Default Value |

| `data` | `str` | The dataset configuration file (in YAML format) to run the tuner on. This file should specify the training and validation data paths, as well as other dataset-specific settings. | |

| `space` | `dict, optional` | A dictionary defining the hyperparameter search space for Ray Tune. Each key corresponds to a hyperparameter name, and the value specifies the range of values to explore during tuning. If not provided, YOLOv8 uses a default search space with various hyperparameters. | |

| `grace_period` | `int, optional` | The grace period in epochs for the [ASHA scheduler](https://docs.ray.io/en/latest/tune/api/schedulers.html) in Ray Tune. The scheduler will not terminate any trial before this number of epochs, allowing the model to have some minimum training before making a decision on early stopping. | 10 |

@ -76,7 +76,7 @@ By customizing these parameters, you can fine-tune the hyperparameter optimizati

The following table lists the default search space parameters for hyperparameter tuning in YOLOv8 with Ray Tune. Each parameter has a specific value range defined by `tune.uniform()`.

@ -65,7 +65,7 @@ To install the required package, run:

!!! Tip "Installation"

=== "CLI"

```bash

# Install the required package for YOLOv8

pip install ultralytics

@ -139,7 +139,7 @@ The arguments provided when using [export](../modes/export.md) for an Ultralytic

!!! note

During calibration, twice the `batch` size provided will be used. Using small batches can lead to inaccurate scaling during calibration. This is because the process adjusts based on the data it sees. Small batches might not capture the full range of values, leading to issues with the final calibration, so the `batch` size is doubled automatically. If no batch size is specified `batch=1`, calibration will be run at `batch=1 * 2` to reduce calibration scaling errors.

During calibration, twice the `batch` size provided will be used. Using small batches can lead to inaccurate scaling during calibration. This is because the process adjusts based on the data it sees. Small batches might not capture the full range of values, leading to issues with the final calibration, so the `batch` size is doubled automatically. If no batch size is specified `batch=1`, calibration will be run at `batch=1 * 2` to reduce calibration scaling errors.

Experimentation by NVIDIA led them to recommend using at least 500 calibration images that are representative of the data for your model, with INT8 quantization calibration. This is a guideline and not a _hard_ requirement, and <u>**you will need to experiment with what is required to perform well for your dataset**.</u> Since the calibration data is required for INT8 calibration with TensorRT, make certain to use the `data` argument when `int8=True` for TensorRT and use `data="my_dataset.yaml"`, which will use the images from [validation](../modes/val.md) to calibrate with. When no value is passed for `data` with export to TensorRT with INT8 quantization, the default will be to use one of the ["small" example datasets based on the model task](../datasets/index.md) instead of throwing an error.

@ -166,13 +166,13 @@ Experimentation by NVIDIA led them to recommend using at least 500 calibration i

# Run inference

result = model.predict("https://ultralytics.com/images/bus.jpg")

```

1. Exports with dynamic axes, this will be enabled by default when exporting with `int8=True` even when not explicitly set. See [export arguments](../modes/export.md#arguments) for additional information.

2. Sets max batch size of 8 for exported model, which calibrates with `batch = 2 * 8` to avoid scaling errors during calibration.

3. Allocates 4 GiB of memory instead of allocating the entire device for conversion process.

4. Uses [COCO dataset](../datasets/detect/coco.md) for calibration, specifically the images used for [validation](../modes/val.md) (5,000 total).

=== "CLI"

```bash

@ -219,7 +219,7 @@ Experimentation by NVIDIA led them to recommend using at least 500 calibration i

See [Detection Docs](../tasks/detect.md) for usage examples with these models trained on [COCO](../datasets/detect/coco.md), which include 80 pre-trained classes.

!!! note

!!! note

Inference times shown for `mean`, `min` (fastest), and `max` (slowest) for each test using pre-trained weights `yolov8n.engine`

| Precision | Eval test | mean<br>(ms) | min \| max<br>(ms) | mAP<sup>val<br>50(B) | mAP<sup>val<br>50-95(B) | `batch` | size<br><sup>(pixels) |

@ -234,8 +234,8 @@ Experimentation by NVIDIA led them to recommend using at least 500 calibration i

=== "Segmentation (COCO)"

See [Segmentation Docs](../tasks/segment.md) for usage examples with these models trained on [COCO](../datasets/segment/coco.md), which include 80 pre-trained classes.

!!! note

!!! note

Inference times shown for `mean`, `min` (fastest), and `max` (slowest) for each test using pre-trained weights `yolov8n-seg.engine`

@ -251,7 +251,7 @@ Experimentation by NVIDIA led them to recommend using at least 500 calibration i

See [Classification Docs](../tasks/classify.md) for usage examples with these models trained on [ImageNet](../datasets/classify/imagenet.md), which include 1000 pre-trained classes.

!!! note

!!! note

Inference times shown for `mean`, `min` (fastest), and `max` (slowest) for each test using pre-trained weights `yolov8n-cls.engine`

| Precision | Eval test | mean<br>(ms) | min \| max<br>(ms) | top-1 | top-5 | `batch` | size<br><sup>(pixels) |

@ -267,7 +267,7 @@ Experimentation by NVIDIA led them to recommend using at least 500 calibration i

See [Pose Estimation Docs](../tasks/pose.md) for usage examples with these models trained on [COCO](../datasets/pose/coco.md), which include 1 pre-trained class, "person".

!!! note

!!! note

Inference times shown for `mean`, `min` (fastest), and `max` (slowest) for each test using pre-trained weights `yolov8n-pose.engine`

@ -283,7 +283,7 @@ Experimentation by NVIDIA led them to recommend using at least 500 calibration i

See [Oriented Detection Docs](../tasks/obb.md) for usage examples with these models trained on [DOTAv1](../datasets/obb/dota-v2.md#dota-v10), which include 15 pre-trained classes.

!!! note

!!! note

Inference times shown for `mean`, `min` (fastest), and `max` (slowest) for each test using pre-trained weights `yolov8n-obb.engine`

| Precision | Eval test | mean<br>(ms) | min \| max<br>(ms) | mAP<sup>val<br>50(B) | mAP<sup>val<br>50-95(B) | `batch` | size<br><sup>(pixels) |

@ -303,7 +303,7 @@ Experimentation by NVIDIA led them to recommend using at least 500 calibration i

Tested with Windows 10.0.19045, `python 3.10.9`, `ultralytics==8.2.4`, `tensorrt==10.0.0b6`

!!! note

!!! note

Inference times shown for `mean`, `min` (fastest), and `max` (slowest) for each test using pre-trained weights `yolov8n.engine`

| Precision | Eval test | mean<br>(ms) | min \| max<br>(ms) | mAP<sup>val<br>50(B) | mAP<sup>val<br>50-95(B) | `batch` | size<br><sup>(pixels) |

@ -318,8 +318,8 @@ Experimentation by NVIDIA led them to recommend using at least 500 calibration i

=== "RTX 3060 12 GB"

Tested with Windows 10.0.22631, `python 3.11.9`, `ultralytics==8.2.4`, `tensorrt==10.0.1`

!!! note

!!! note

Inference times shown for `mean`, `min` (fastest), and `max` (slowest) for each test using pre-trained weights `yolov8n.engine`

@ -336,7 +336,7 @@ Experimentation by NVIDIA led them to recommend using at least 500 calibration i

Tested with Pop!_OS 22.04 LTS, `python 3.10.12`, `ultralytics==8.2.4`, `tensorrt==8.6.1.post1`

!!! note

!!! note

Inference times shown for `mean`, `min` (fastest), and `max` (slowest) for each test using pre-trained weights `yolov8n.engine`

| Precision | Eval test | mean<br>(ms) | min \| max<br>(ms) | mAP<sup>val<br>50(B) | mAP<sup>val<br>50-95(B) | `batch` | size<br><sup>(pixels) |

@ -356,7 +356,7 @@ Experimentation by NVIDIA led them to recommend using at least 500 calibration i

@ -128,7 +128,7 @@ After running the usage code snippet, you can access the Weights & Biases (W&B)

- **Real-Time Metrics Tracking**: Observe metrics like loss, accuracy, and validation scores as they evolve during the training, offering immediate insights for model tuning.

<divstyle="text-align:center;"><blockquoteclass="imgur-embed-pub"lang="en"data-id="a/TB76U9O"><ahref="//imgur.com/D6NVnmN">Take a look at how the experiments are tracked using Weights & Biases.</a></blockquote></div><scriptasyncsrc="//s.imgur.com/min/embed.js"charset="utf-8"></script>

<divstyle="text-align:center;"><blockquoteclass="imgur-embed-pub"lang="en"data-id="a/TB76U9O"><ahref="//imgur.com/D6NVnmN">Take a look at how the experiments are tracked using Weights & Biases.</a></blockquote></div><scriptasyncsrc="//s.imgur.com/min/embed.js"charset="utf-8"></script>

- **Hyperparameter Optimization**: Weights & Biases aids in fine-tuning critical parameters such as learning rate, batch size, and more, enhancing the performance of YOLOv8.

@ -136,7 +136,7 @@ After running the usage code snippet, you can access the Weights & Biases (W&B)

- **Visualization of Training Progress**: Graphical representations of key metrics provide an intuitive understanding of the model's performance across epochs.

<divstyle="text-align:center;"><blockquoteclass="imgur-embed-pub"lang="en"data-id="a/kU5h7W4"data-context="false"><ahref="//imgur.com/a/kU5h7W4">Take a look at how Weights & Biases helps you visualize validation results.</a></blockquote></div><scriptasyncsrc="//s.imgur.com/min/embed.js"charset="utf-8"></script>

<divstyle="text-align:center;"><blockquoteclass="imgur-embed-pub"lang="en"data-id="a/kU5h7W4"data-context="false"><ahref="//imgur.com/a/kU5h7W4">Take a look at how Weights & Biases helps you visualize validation results.</a></blockquote></div><scriptasyncsrc="//s.imgur.com/min/embed.js"charset="utf-8"></script>

- **Resource Monitoring**: Keep track of CPU, GPU, and memory usage to optimize the efficiency of the training process.

@ -144,7 +144,7 @@ After running the usage code snippet, you can access the Weights & Biases (W&B)

- **Viewing Inference Results with Image Overlay**: Visualize the prediction results on images using interactive overlays in Weights & Biases, providing a clear and detailed view of model performance on real-world data. For more detailed information on Weights & Biases' image overlay capabilities, check out this [link](https://docs.wandb.ai/guides/track/log/media#image-overlays).

<divstyle="text-align:center;"><blockquoteclass="imgur-embed-pub"lang="en"data-id="a/UTSiufs"data-context="false"><ahref="//imgur.com/a/UTSiufs">Take a look at how Weights & Biases' image overlays helps visualize model inferences.</a></blockquote></div><scriptasyncsrc="//s.imgur.com/min/embed.js"charset="utf-8"></script>

<divstyle="text-align:center;"><blockquoteclass="imgur-embed-pub"lang="en"data-id="a/UTSiufs"data-context="false"><ahref="//imgur.com/a/UTSiufs">Take a look at how Weights & Biases' image overlays helps visualize model inferences.</a></blockquote></div><scriptasyncsrc="//s.imgur.com/min/embed.js"charset="utf-8"></script>

By using these features, you can effectively track, analyze, and optimize your YOLOv8 model's training, ensuring the best possible performance and efficiency.

@ -48,9 +48,9 @@ FastSAM is designed to address the limitations of the [Segment Anything Model (S

This table presents the available models with their specific pre-trained weights, the tasks they support, and their compatibility with different operating modes like [Inference](../modes/predict.md), [Validation](../modes/val.md), [Training](../modes/train.md), and [Export](../modes/export.md), indicated by ✅ emojis for supported modes and ❌ emojis for unsupported modes.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |

Additionally, you can try FastSAM through a [Colab demo](https://colab.research.google.com/drive/1oX14f6IneGGw612WgVlAiy91UHwFAvr9?usp=sharing) or on the [HuggingFace web demo](https://huggingface.co/spaces/An-619/FastSAM) for a visual experience.

@ -19,7 +19,7 @@ Here are some of the key models supported:

5. **[YOLOv7](yolov7.md)**: Updated YOLO models released in 2022 by the authors of YOLOv4.

6. **[YOLOv8](yolov8.md) NEW 🚀**: The latest version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

7. **[YOLOv9](yolov9.md)**: An experimental model trained on the Ultralytics [YOLOv5](yolov5.md) codebase implementing Programmable Gradient Information (PGI).

8. **[YOLOv10](yolov10.md)**: By Tsinghua University, featuring NMS-free training and efficiency-accuracy driven architecture, delivering state-of-the-art performance and latency.

8. **[YOLOv10](yolov10.md)**: By Tsinghua University, featuring NMS-free training and efficiency-accuracy driven architecture, delivering state-of-the-art performance and latency.

9. **[Segment Anything Model (SAM)](sam.md)**: Meta's Segment Anything Model (SAM).

10. **[Mobile Segment Anything Model (MobileSAM)](mobile-sam.md)**: MobileSAM for mobile applications, by Kyung Hee University.

11. **[Fast Segment Anything Model (FastSAM)](fast-sam.md)**: FastSAM by Image & Video Analysis Group, Institute of Automation, Chinese Academy of Sciences.

@ -21,8 +21,8 @@ MobileSAM is trained on a single GPU with a 100k dataset (1% of the original ima

This table presents the available models with their specific pre-trained weights, the tasks they support, and their compatibility with different operating modes like [Inference](../modes/predict.md), [Validation](../modes/val.md), [Training](../modes/train.md), and [Export](../modes/export.md), indicated by ✅ emojis for supported modes and ❌ emojis for unsupported modes.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |

# Baidu's RT-DETR: A Vision Transformer-Based Real-Time Object Detector

## Overview

Real-Time Detection Transformer (RT-DETR), developed by Baidu, is a cutting-edge end-to-end object detector that provides real-time performance while maintaining high accuracy. It is based on the idea of DETR (the NMS-free framework), meanwhile introducing conv-based backbone and an efficient hybrid encoder to gain real-time speed. RT-DETR efficiently processes multiscale features by decoupling intra-scale interaction and cross-scale fusion. The model is highly adaptable, supporting flexible adjustment of inference speed using different decoder layers without retraining. RT-DETR excels on accelerated backends like CUDA with TensorRT, outperforming many other real-time object detectors.

<palign="center">

@ -74,9 +75,9 @@ This example provides simple RT-DETR training and inference examples. For full d

This table presents the model types, the specific pre-trained weights, the tasks supported by each model, and the various modes ([Train](../modes/train.md) , [Val](../modes/val.md), [Predict](../modes/predict.md), [Export](../modes/export.md)) that are supported, indicated by ✅ emojis.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |

@ -30,9 +30,9 @@ For an in-depth look at the Segment Anything Model and the SA-1B dataset, please

This table presents the available models with their specific pre-trained weights, the tasks they support, and their compatibility with different operating modes like [Inference](../modes/predict.md), [Validation](../modes/val.md), [Training](../modes/train.md), and [Export](../modes/export.md), indicated by ✅ emojis for supported modes and ❌ emojis for unsupported modes.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |

@ -23,7 +23,7 @@ Developed by Deci AI, YOLO-NAS is a groundbreaking object detection foundational

Experience the power of next-generation object detection with the pre-trained YOLO-NAS models provided by Ultralytics. These models are designed to deliver top-notch performance in terms of both speed and accuracy. Choose from a variety of options tailored to your specific needs:

| Model | mAP | Latency (ms) |

|------------------|-------|--------------|

| ---------------- | ----- | ------------ |

| YOLO-NAS S | 47.5 | 3.21 |

| YOLO-NAS M | 51.55 | 5.85 |

| YOLO-NAS L | 52.22 | 7.87 |

@ -90,10 +90,10 @@ We offer three variants of the YOLO-NAS models: Small (s), Medium (m), and Large

Below is a detailed overview of each model, including links to their pre-trained weights, the tasks they support, and their compatibility with different operating modes.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |

@ -48,20 +48,20 @@ This section details the models available with their specific pre-trained weight

All the YOLOv8-World weights have been directly migrated from the official [YOLO-World](https://github.com/AILab-CVC/YOLO-World) repository, highlighting their excellent contributions.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |

@ -45,11 +45,11 @@ YOLOv10 comes in various model scales to cater to different application needs:

YOLOv10 outperforms previous YOLO versions and other state-of-the-art models in terms of accuracy and efficiency. For example, YOLOv10-S is 1.8x faster than RT-DETR-R18 with similar AP on the COCO dataset, and YOLOv10-B has 46% less latency and 25% fewer parameters than YOLOv9-C with the same performance.

@ -33,10 +33,10 @@ The YOLOv3 series, including YOLOv3, YOLOv3-Ultralytics, and YOLOv3u, are design

All three models support a comprehensive set of modes, ensuring versatility in various stages of model deployment and development. These modes include [Inference](../modes/predict.md), [Validation](../modes/val.md), [Training](../modes/train.md), and [Export](../modes/export.md), providing users with a complete toolkit for effective object detection.

| Model Type | Tasks Supported | Inference | Validation | Training | Export |

This table provides an at-a-glance view of the capabilities of each YOLOv3 variant, highlighting their versatility and suitability for various tasks and operational modes in object detection workflows.

@ -25,8 +25,8 @@ YOLOv5u represents an advancement in object detection methodologies. Originating

The YOLOv5u models, with various pre-trained weights, excel in [Object Detection](../tasks/detect.md) tasks. They support a comprehensive range of modes, making them suitable for diverse applications, from development to deployment.

| Model Type | Pre-trained Weights | Task | Inference | Validation | Training | Export |

This table provides a detailed overview of the YOLOv5u model variants, highlighting their applicability in object detection tasks and support for various operational modes such as [Inference](../modes/predict.md), [Validation](../modes/val.md), [Training](../modes/train.md), and [Export](../modes/export.md). This comprehensive support ensures that users can fully leverage the capabilities of YOLOv5u models in a wide range of object detection scenarios.

@ -75,12 +75,12 @@ This example provides simple YOLOv6 training and inference examples. For full do

The YOLOv6 series offers a range of models, each optimized for high-performance [Object Detection](../tasks/detect.md). These models cater to varying computational needs and accuracy requirements, making them versatile for a wide array of applications.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |

This table provides a detailed overview of the YOLOv6 model variants, highlighting their capabilities in object detection tasks and their compatibility with various operational modes such as [Inference](../modes/predict.md), [Validation](../modes/val.md), [Training](../modes/train.md), and [Export](../modes/export.md). This comprehensive support ensures that users can fully leverage the capabilities of YOLOv6 models in a broad range of object detection scenarios.

@ -37,12 +37,12 @@ The YOLOv8 series offers a diverse range of models, each specialized for specifi

Each variant of the YOLOv8 series is optimized for its respective task, ensuring high performance and accuracy. Additionally, these models are compatible with various operational modes including [Inference](../modes/predict.md), [Validation](../modes/val.md), [Training](../modes/train.md), and [Export](../modes/export.md), facilitating their use in different stages of deployment and development.

| Model | Filenames | Task | Inference | Validation | Training | Export |

This table provides an overview of the YOLOv8 model variants, highlighting their applicability in specific tasks and their compatibility with various operational modes such as Inference, Validation, Training, and Export. It showcases the versatility and robustness of the YOLOv8 series, making them suitable for a variety of applications in computer vision.

@ -153,9 +153,9 @@ This example provides simple YOLOv9 training and inference examples. For full do

The YOLOv9 series offers a range of models, each optimized for high-performance [Object Detection](../tasks/detect.md). These models cater to varying computational needs and accuracy requirements, making them versatile for a wide array of applications.

| Model | Filenames | Tasks | Inference | Validation | Training | Export |

This table provides a detailed overview of the YOLOv9 model variants, highlighting their capabilities in object detection tasks and their compatibility with various operational modes such as [Inference](../modes/predict.md), [Validation](../modes/val.md), [Training](../modes/train.md), and [Export](../modes/export.md). This comprehensive support ensures that users can fully leverage the capabilities of YOLOv9 models in a broad range of object detection scenarios.

@ -74,7 +74,7 @@ Run YOLOv8n benchmarks on all supported export formats including ONNX, TensorRT

Arguments such as `model`, `data`, `imgsz`, `half`, `device`, and `verbose` provide users with the flexibility to fine-tune the benchmarks to their specific needs and compare the performance of different export formats with ease.

| `model` | `None` | Specifies the path to the model file. Accepts both `.pt` and `.yaml` formats, e.g., `"yolov8n.pt"` for pre-trained models or configuration files. |

| `data` | `None` | Path to a YAML file defining the dataset for benchmarking, typically including paths and settings for validation data. Example: `"coco8.yaml"`. |

| `imgsz` | `640` | The input image size for the model. Can be a single integer for square images or a tuple `(width, height)` for non-square, e.g., `(640, 480)`. |

@ -88,19 +88,19 @@ Arguments such as `model`, `data`, `imgsz`, `half`, `device`, and `verbose` prov

Benchmarks will attempt to run automatically on all possible export formats below.

| Format | `format` Argument | Model | Metadata | Arguments |

@ -75,7 +75,7 @@ Export a YOLOv8n model to a different format like ONNX or TensorRT. See Argument

This table details the configurations and options available for exporting YOLO models to different formats. These settings are critical for optimizing the exported model's performance, size, and compatibility across various platforms and environments. Proper configuration ensures that the model is ready for deployment in the intended application with optimal efficiency.

| `format` | `str` | `'torchscript'` | Target format for the exported model, such as `'onnx'`, `'torchscript'`, `'tensorflow'`, or others, defining compatibility with various deployment environments. |

| `imgsz` | `int` or `tuple` | `640` | Desired image size for the model input. Can be an integer for square images or a tuple `(height, width)` for specific dimensions. |

| `keras` | `bool` | `False` | Enables export to Keras format for TensorFlow SavedModel, providing compatibility with TensorFlow serving and APIs. |

@ -83,11 +83,11 @@ This table details the configurations and options available for exporting YOLO m

| `half` | `bool` | `False` | Enables FP16 (half-precision) quantization, reducing model size and potentially speeding up inference on supported hardware. |

| `int8` | `bool` | `False` | Activates INT8 quantization, further compressing the model and speeding up inference with minimal accuracy loss, primarily for edge devices. |

| `dynamic` | `bool` | `False` | Allows dynamic input sizes for ONNX and TensorRT exports, enhancing flexibility in handling varying image dimensions. |

| `simplify` | `bool` | `False` | Simplifies the model graph for ONNX exports with `onnxslim`, potentially improving performance and compatibility. |

| `simplify` | `bool` | `False` | Simplifies the model graph for ONNX exports with `onnxslim`, potentially improving performance and compatibility. |

| `opset` | `int` | `None` | Specifies the ONNX opset version for compatibility with different ONNX parsers and runtimes. If not set, uses the latest supported version. |

| `workspace` | `float` | `4.0` | Sets the maximum workspace size in GiB for TensorRT optimizations, balancing memory usage and performance. |

| `nms` | `bool` | `False` | Adds Non-Maximum Suppression (NMS) to the CoreML export, essential for accurate and efficient detection post-processing. |

| `batch` | `int` | `1` | Specifies export model batch inference size or the max number of images the exported model will process concurrently in `predict` mode. |

| `batch` | `int` | `1` | Specifies export model batch inference size or the max number of images the exported model will process concurrently in `predict` mode. |

Adjusting these parameters allows for customization of the export process to fit specific requirements, such as deployment environment, hardware constraints, and performance targets. Selecting the appropriate format and settings is essential for achieving the best balance between model size, speed, and accuracy.

@ -96,17 +96,17 @@ Adjusting these parameters allows for customization of the export process to fit

Available YOLOv8 export formats are in the table below. You can export to any format using the `format` argument, i.e. `format='onnx'` or `format='engine'`. You can predict or validate directly on exported models, i.e. `yolo predict model=yolov8n.onnx`. Usage examples are shown for your model after export completes.

| Format | `format` Argument | Model | Metadata | Arguments |

| ![Vehicle Spare Parts Detection][car spare parts] | ![Football Player Detection][football player detect] | ![People Fall Detection][human fall detect] |

| Vehicle Spare Parts Detection | Football Player Detection | People Fall Detection |

@ -104,16 +104,16 @@ YOLOv8 can process different types of input sources for inference, as shown in t

Use `stream=True` for processing long videos or large datasets to efficiently manage memory. When `stream=False`, the results for all frames or data points are stored in memory, which can quickly add up and cause out-of-memory errors for large inputs. In contrast, `stream=True` utilizes a generator, which only keeps the results of the current frame or data point in memory, significantly reducing memory consumption and preventing out-of-memory issues.

| `source` | `str` | `'ultralytics/assets'` | Specifies the data source for inference. Can be an image path, video file, directory, URL, or device ID for live feeds. Supports a wide range of formats and sources, enabling flexible application across different types of input. |

| `conf` | `float` | `0.25` | Sets the minimum confidence threshold for detections. Objects detected with confidence below this threshold will be disregarded. Adjusting this value can help reduce false positives. |

| `iou` | `float` | `0.7` | Intersection Over Union (IoU) threshold for Non-Maximum Suppression (NMS). Lower values result in fewer detections by eliminating overlapping boxes, useful for reducing duplicates. |

| `show` | `bool` | `False` | If `True`, displays the annotated images or videos in a window. Useful for immediate visual feedback during development or testing. |

| `save` | `bool` | `False` | Enables saving of the annotated images or videos to file. Useful for documentation, further analysis, or sharing results. |

| `save_frames` | `bool` | `False` | When processing videos, saves individual frames as images. Useful for extracting specific frames or for detailed frame-by-frame analysis. |

@ -409,7 +409,7 @@ YOLOv8 supports various image and video formats, as specified in [ultralytics/da

The below table contains valid Ultralytics image formats.

| Image Suffixes | Example Predict Command | Reference |

| `line_width` | `float` | Line width of bounding boxes. Scales with image size if `None`. | `None` |

| `font_size` | `float` | Text font size. Scales with image size if `None`. | `None` |

@ -800,7 +800,5 @@ Here's a Python script using OpenCV (`cv2`) and YOLOv8 to run inference on video