Contact

-For YOLOv8 bug reports and feature requests please visit [GitHub Issues](https://github.com/ultralytics/ultralytics/issues), and join our [Discord](https://discord.gg/7aegy5d8) community for questions and discussions!

+For YOLOv8 bug reports and feature requests please visit [GitHub Issues](https://github.com/ultralytics/ultralytics/issues), and join our [Discord](https://discord.gg/2wNGbc6g9X) community for questions and discussions!

@@ -259,6 +263,6 @@ For YOLOv8 bug reports and feature requests please visit [GitHub Issues](https:/

-

+

-

+

diff --git a/README.zh-CN.md b/README.zh-CN.md

index 6e4ca425a..872624907 100644

--- a/README.zh-CN.md

+++ b/README.zh-CN.md

@@ -20,7 +20,7 @@

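[Ultralytics](https://ultralytics.com) [YOLOv8](https://github.com/ultralytics/ultralytics) is a cutting-edge, state-of-the-art (SOTA) model that builds on the success of previous YOLO versions and introduces new features and improvements to further boost performance and flexibility. YOLOv8 is designed to be fast, accurate, and easy to use, making it an excellent choice for a wide range of object detection and tracking, instance segmentation, image classification, and pose estimation tasks.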

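-We hope that the resources here will help you get the most out of YOLOv8. Please browse the YOLOv8 Docs for details, raise an issue on GitHub for support, and join our Discord community for questions and discussions!

+We hope that the resources here will help you get the most out of YOLOv8. Please browse the YOLOv8 Docs for details, raise an issue on GitHub for support, and join our Discord community for questions and discussions!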

To request an Enterprise License, please complete the form at [Ultralytics Licensing](https://ultralytics.com/license).

@@ -45,7 +45,7 @@

-

+

-

+

-

+

-

+

@@ -57,12 +57,16 @@


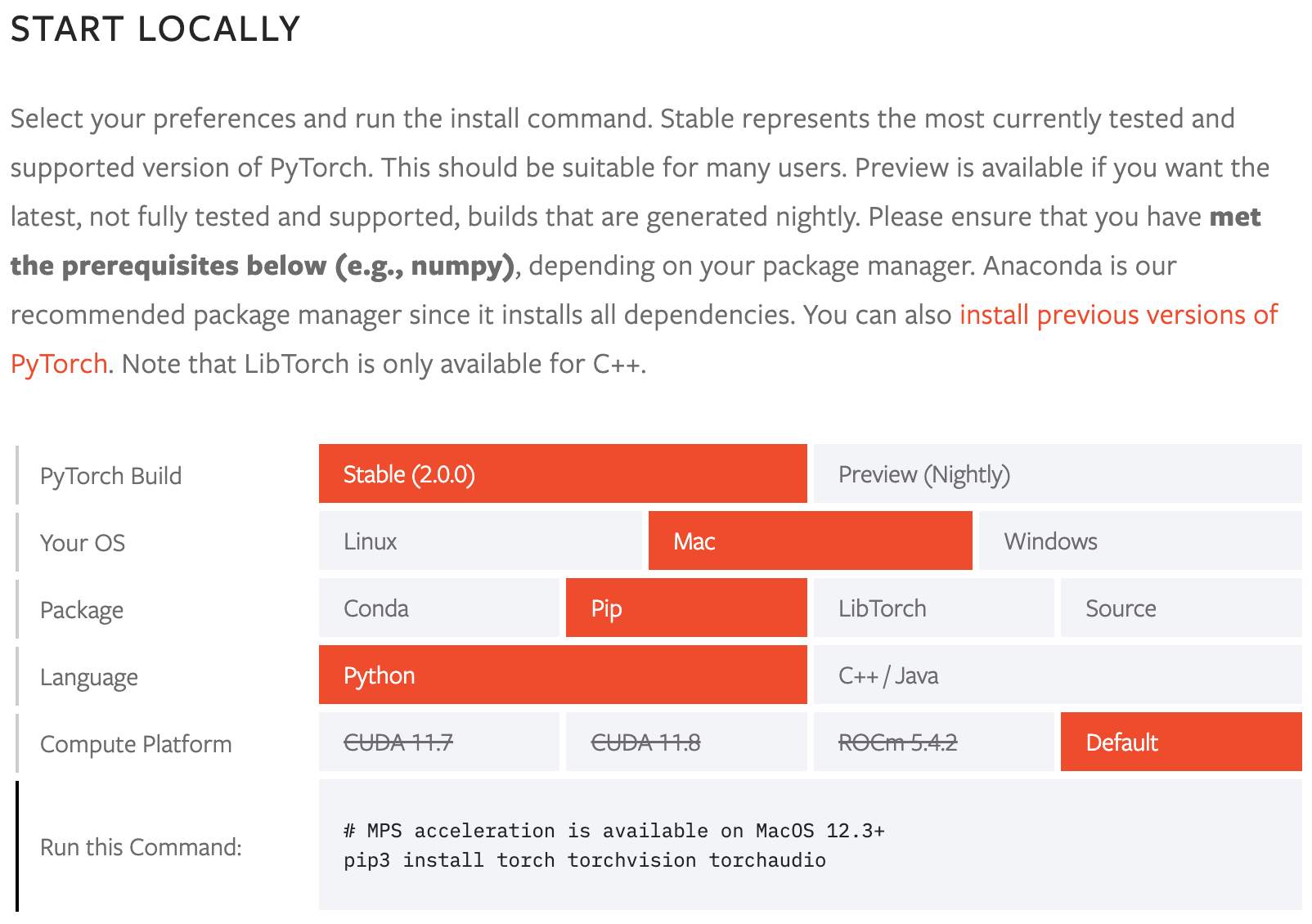

Install

-In a [**Python>=3.7**](https://www.python.org/) environment with [**PyTorch>=1.7**](https://pytorch.org/get-started/locally/), install the ultralytics package and all [dependencies](https://github.com/ultralytics/ultralytics/blob/main/requirements.txt) via pip.
+Use pip to install the `ultralytics` package in a [**Python>=3.8**](https://www.python.org/) environment that also includes [**PyTorch>=1.7**](https://pytorch.org/get-started/locally/). This also installs all necessary [dependencies](https://github.com/ultralytics/ultralytics/blob/main/requirements.txt).
+
+[](https://badge.fury.io/py/ultralytics) [](https://pepy.tech/project/ultralytics)
```bash
pip install ultralytics
```
+For alternative installation methods, including Conda, Docker, and Git, please refer to the [Quickstart Guide](https://docs.ultralytics.com/quickstart).
+

@@ -236,7 +240,7 @@ YOLOv8 offers two different licenses:

##

diff --git a/docker/Dockerfile b/docker/Dockerfile
index 4fca588d2..2f86bb858 100644
--- a/docker/Dockerfile
+++ b/docker/Dockerfile
@@ -10,7 +10,7 @@ RUN pip install --no-cache nvidia-tensorrt --index-url https://pypi.ngc.nvidia.c
ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/
# Install linux packages
-# g++ required to build 'tflite_support' package
+# g++ required to build 'tflite_support' and 'lap' packages
RUN apt update \
&& apt install --no-install-recommends -y gcc git zip curl htop libgl1-mesa-glx libglib2.0-0 libpython3-dev gnupg g++
# RUN alias python=python3
diff --git a/docker/Dockerfile-arm64 b/docker/Dockerfile-arm64
index bd5432394..3a91abd47 100644
--- a/docker/Dockerfile-arm64
+++ b/docker/Dockerfile-arm64
@@ -9,8 +9,9 @@ FROM arm64v8/ubuntu:22.10
ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/
# Install linux packages
+# g++ required to build 'tflite_support' and 'lap' packages
RUN apt update \
- && apt install --no-install-recommends -y python3-pip git zip curl htop gcc libgl1-mesa-glx libglib2.0-0 libpython3-dev
+ && apt install --no-install-recommends -y python3-pip git zip curl htop gcc libgl1-mesa-glx libglib2.0-0 libpython3-dev gnupg g++
# RUN alias python=python3
# Create working directory
diff --git a/docker/Dockerfile-cpu b/docker/Dockerfile-cpu
index c58e4233c..3bf0339c1 100644
--- a/docker/Dockerfile-cpu
+++ b/docker/Dockerfile-cpu
@@ -3,13 +3,13 @@
# Image is CPU-optimized for ONNX, OpenVINO and PyTorch YOLOv8 deployments
# Start FROM Ubuntu image https://hub.docker.com/_/ubuntu
-FROM ubuntu:22.10
+FROM ubuntu:lunar-20230615
# Downloads to user config dir
ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/
# Install linux packages
-# g++ required to build 'tflite_support' package
+# g++ required to build 'tflite_support' and 'lap' packages
RUN apt update \
&& apt install --no-install-recommends -y python3-pip git zip curl htop libgl1-mesa-glx libglib2.0-0 libpython3-dev gnupg g++
# RUN alias python=python3
@@ -23,6 +23,9 @@ WORKDIR /usr/src/ultralytics
RUN git clone https://github.com/ultralytics/ultralytics /usr/src/ultralytics
ADD https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt /usr/src/ultralytics/
+# Remove python3.11/EXTERNALLY-MANAGED or use 'pip install --break-system-packages' to avoid the Ubuntu nightly 'externally-managed-environment' error
+RUN rm -rf /usr/lib/python3.11/EXTERNALLY-MANAGED
+
# Install pip packages
RUN python3 -m pip install --upgrade pip wheel
RUN pip install --no-cache -e . thop --extra-index-url https://download.pytorch.org/whl/cpu
diff --git a/docker/Dockerfile-jetson b/docker/Dockerfile-jetson
index 0182f2c26..785ca8938 100644
--- a/docker/Dockerfile-jetson
+++ b/docker/Dockerfile-jetson
@@ -9,7 +9,7 @@ FROM nvcr.io/nvidia/l4t-pytorch:r35.2.1-pth2.0-py3
ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/
# Install linux packages
-# g++ required to build 'tflite_support' package
+# g++ required to build 'tflite_support' and 'lap' packages
RUN apt update \
&& apt install --no-install-recommends -y gcc git zip curl htop libgl1-mesa-glx libglib2.0-0 libpython3-dev gnupg g++
# RUN alias python=python3
diff --git a/docs/datasets/classify/index.md b/docs/datasets/classify/index.md
index fd902882d..ab6ca5cff 100644
--- a/docs/datasets/classify/index.md
+++ b/docs/datasets/classify/index.md
@@ -97,9 +97,24 @@ In this example, the `train` directory contains subdirectories for each class in
```bash
# Start training from a pretrained *.pt model
- yolo detect train data=path/to/data model=yolov8n-seg.pt epochs=100 imgsz=640
+ yolo classify train data=path/to/data model=yolov8n-cls.pt epochs=100 imgsz=640
```
## Supported Datasets
-TODO
\ No newline at end of file
+Ultralytics supports the following datasets with automatic download:
+
+* [Caltech 101](caltech101.md): A dataset containing images of 101 object categories for image classification tasks.
+* [Caltech 256](caltech256.md): An extended version of Caltech 101 with 256 object categories and more challenging images.
+* [CIFAR-10](cifar10.md): A dataset of 60K 32x32 color images in 10 classes, with 6K images per class.
+* [CIFAR-100](cifar100.md): An extended version of CIFAR-10 with 100 object categories and 600 images per class.
+* [Fashion-MNIST](fashion-mnist.md): A dataset consisting of 70,000 grayscale images of 10 fashion categories for image classification tasks.
+* [ImageNet](imagenet.md): A large-scale dataset for object detection and image classification with over 14 million images and 20,000 categories.
+* [ImageNet-10](imagenet10.md): A smaller subset of ImageNet with 10 categories for faster experimentation and testing.
+* [Imagenette](imagenette.md): A smaller subset of ImageNet that contains 10 easily distinguishable classes for quicker training and testing.
+* [Imagewoof](imagewoof.md): A more challenging subset of ImageNet containing 10 dog breed categories for image classification tasks.
+* [MNIST](mnist.md): A dataset of 70,000 grayscale images of handwritten digits for image classification tasks.
+
+### Adding your own dataset
+
+If you have your own dataset and would like to use it for training classification models with Ultralytics, ensure that it follows the format specified above under "Dataset format" and then point your `data` argument to the dataset directory.
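Before pointing `data` at a custom directory, it can help to list the class folders it actually contains. The sketch below assumes the classification layout described in this file (a `train/` split with one subdirectory per class) and sorts the names alphabetically, mirroring torchvision's `ImageFolder` convention; `list_classes` is a hypothetical helper, and whether Ultralytics orders classes exactly this way is an assumption.

```python
import tempfile
from pathlib import Path
from typing import List


def list_classes(root: str, split: str = "train") -> List[str]:
    """Return the sorted class-folder names under root/split.

    Hypothetical helper for illustration; assumes one subdirectory per class.
    """
    split_dir = Path(root) / split
    if not split_dir.is_dir():
        raise FileNotFoundError(f"missing split directory: {split_dir}")
    # Only directories count as classes; stray files are ignored
    classes = sorted(p.name for p in split_dir.iterdir() if p.is_dir())
    if not classes:
        raise ValueError(f"no class subdirectories found in {split_dir}")
    return classes


# Build a throwaway dataset root with two class folders and inspect it
with tempfile.TemporaryDirectory() as tmp:
    for name in ("dog", "cat"):
        (Path(tmp) / "train" / name).mkdir(parents=True)
    print(list_classes(tmp))  # ['cat', 'dog']
```

Sorting makes the index-to-name mapping deterministic regardless of filesystem listing order.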
\ No newline at end of file
diff --git a/docs/datasets/detect/index.md b/docs/datasets/detect/index.md
index 7eec93cd9..6a9cd614d 100644
--- a/docs/datasets/detect/index.md
+++ b/docs/datasets/detect/index.md
@@ -1,82 +1,53 @@
---
comments: true
-description: Learn about supported dataset formats for training YOLO detection models, including Ultralytics YOLO and COCO, in this Object Detection Datasets Overview.
-keywords: object detection, datasets, formats, Ultralytics YOLO, label format, dataset file format, dataset definition, YOLO dataset, model configuration
+description: Explore supported dataset formats for training YOLO detection models, including Ultralytics YOLO and COCO. This guide covers various dataset formats and their specific configurations for effective object detection training.
+keywords: object detection, datasets, formats, Ultralytics YOLO, COCO, label format, dataset file format, dataset definition, YOLO dataset, model configuration
---
# Object Detection Datasets Overview
+Training a robust and accurate object detection model requires a comprehensive dataset. This guide introduces various formats of datasets that are compatible with the Ultralytics YOLO model and provides insights into their structure, usage, and how to convert between different formats.
+
## Supported Dataset Formats
### Ultralytics YOLO format
-** Label Format **
-
-The dataset format used for training YOLO detection models is as follows:
-
-1. One text file per image: Each image in the dataset has a corresponding text file with the same name as the image file and the ".txt" extension.
-2. One row per object: Each row in the text file corresponds to one object instance in the image.
-3. Object information per row: Each row contains the following information about the object instance:
-   - Object class index: An integer representing the class of the object (e.g., 0 for person, 1 for car, etc.).
-   - Object center coordinates: The x and y coordinates of the center of the object, normalized to be between 0 and 1.
-   - Object width and height: The width and height of the object, normalized to be between 0 and 1.
-
-The format for a single row in the detection dataset file is as follows:
-
-```
-

-```

-

-Here is an example of the YOLO dataset format for a single image with two object instances:

-

-```

-0 0.5 0.4 0.3 0.6

-1 0.3 0.7 0.4 0.2

-```

-

-In this example, the first object is of class 0 (person), with its center at (0.5, 0.4), width of 0.3, and height of 0.6. The second object is of class 1 (car), with its center at (0.3, 0.7), width of 0.4, and height of 0.2.

-

-** Dataset file format **

-

-The Ultralytics framework uses a YAML file format to define the dataset and model configuration for training Detection Models. Here is an example of the YAML format used for defining a detection dataset:

-

-```

-train:

-val:

-

-nc:

-names: [, , ..., ]

-

-```

-

-The `train` and `val` fields specify the paths to the directories containing the training and validation images, respectively.

-

-The `nc` field specifies the number of object classes in the dataset.

-

-The `names` field is a list of the names of the object classes. The order of the names should match the order of the object class indices in the YOLO dataset files.

-

-NOTE: Either `nc` or `names` must be defined. Defining both are not mandatory

-

-Alternatively, you can directly define class names like this:

+The Ultralytics YOLO format is a dataset configuration format that allows you to define the dataset root directory, the relative paths to training/validation/testing image directories or `*.txt` files containing image paths, and a dictionary of class names. Here is an example:

```yaml

+# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

+path: ../datasets/coco8 # dataset root dir

+train: images/train # train images (relative to 'path') 4 images

+val: images/val # val images (relative to 'path') 4 images

+test: # test images (optional)

+

+# Classes (80 COCO classes)

names:

0: person

1: bicycle

+ 2: car

+ ...

+ 77: teddy bear

+ 78: hair drier

+ 79: toothbrush

```
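The relationship between `path` and the `train`/`val`/`test` entries in the YAML above amounts to joining relative entries onto the dataset root. The sketch below shows only that join for the accepted forms (a single directory or `*.txt` file, or a list of them); `resolve_split` is a hypothetical helper, and the real Ultralytics loader does additional work such as validation and optional downloads.

```python
from pathlib import Path
from typing import List, Optional, Union

SplitSpec = Union[str, List[str], None]


def resolve_split(root: str, split: SplitSpec) -> Optional[List[Path]]:
    """Join a train/val/test entry onto the dataset root.

    Hypothetical helper for illustration: accepts one path or a list of paths,
    and returns None when the split is left empty (as 'test:' often is).
    """
    if split is None:
        return None
    entries = split if isinstance(split, list) else [split]
    # Path("root") / "/abs" yields "/abs", so absolute entries are kept as-is
    return [Path(root) / entry for entry in entries]


print(resolve_split("../datasets/coco8", "images/train"))
print(resolve_split("../datasets/coco8", None))  # None
```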

-** Example **

+Labels for this format should be exported to YOLO format with one `*.txt` file per image. If there are no objects in an image, no `*.txt` file is required. The `*.txt` file should be formatted with one row per object in `class x_center y_center width height` format. Box coordinates must be in **normalized xywh** format (from 0 to 1). If your boxes are in pixels, divide `x_center` and `width` by the image width, and `y_center` and `height` by the image height. Class numbers should be zero-indexed (start with 0).
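The normalization rule above can be sketched in a few lines of Python. This is a minimal illustration, assuming boxes arrive as pixel-space `(x_min, y_min, x_max, y_max)` corners; `to_yolo_row` is a hypothetical helper, not part of the Ultralytics API.

```python
from typing import Tuple


def to_yolo_row(class_id: int, box_xyxy: Tuple[float, float, float, float],
                img_w: int, img_h: int) -> str:
    """Convert a pixel-space (x_min, y_min, x_max, y_max) box to one YOLO label row.

    Hypothetical helper for illustration only.
    """
    x_min, y_min, x_max, y_max = box_xyxy
    # Box center and size in pixels
    x_c = (x_min + x_max) / 2
    y_c = (y_min + y_max) / 2
    w = x_max - x_min
    h = y_max - y_min
    # Normalize: x values by image width, y values by image height
    values = (x_c / img_w, y_c / img_h, w / img_w, h / img_h)
    if not all(0.0 <= v <= 1.0 for v in values):
        raise ValueError(f"box {box_xyxy} lies outside a {img_w}x{img_h} image")
    return f"{class_id} " + " ".join(f"{v:.6f}" for v in values)


# A 640x480 image with a box from (160, 120) to (352, 408)
print(to_yolo_row(0, (160, 120, 352, 408), 640, 480))  # 0 0.400000 0.550000 0.300000 0.600000
```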

-```yaml

-train: data/train/

-val: data/val/

+Each row starts with the index of the object's class, followed by the four coordinates of the bounding box and then the pixel coordinates of the keypoints, all separated by spaces.
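To make the pose row layout concrete, here is a minimal parsing sketch. It assumes a row holds a class index, four bounding box values, and then `num_kpts * dims` keypoint values in order, where `(num_kpts, dims)` comes from the `kpt_shape` key of the dataset YAML. `parse_pose_row` is a hypothetical helper, not an Ultralytics function, and it does not care whether the values are pixels or normalized.

```python
from typing import List, Tuple


def parse_pose_row(row: str, kpt_shape: Tuple[int, int]):
    """Split one pose label row into (class_id, box, keypoints).

    Hypothetical helper for illustration. Assumes the layout
    class x y w h followed by num_kpts * dims keypoint values.
    """
    num_kpts, dims = kpt_shape
    fields = row.split()
    expected = 1 + 4 + num_kpts * dims
    if len(fields) != expected:
        raise ValueError(f"expected {expected} values, got {len(fields)}")
    class_id = int(fields[0])
    box = [float(v) for v in fields[1:5]]
    flat = [float(v) for v in fields[5:]]
    # Group the flat keypoint values into num_kpts tuples of length dims
    keypoints: List[Tuple[float, ...]] = [
        tuple(flat[i * dims:(i + 1) * dims]) for i in range(num_kpts)
    ]
    return class_id, box, keypoints


# Two keypoints with (x, y, visible) each, i.e. kpt_shape = [2, 3]
print(parse_pose_row("0 0.5 0.5 0.2 0.4 0.45 0.35 2 0.55 0.35 2", (2, 3)))
```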

-** Dataset file format **

+### Dataset YAML format

The Ultralytics framework uses a YAML file format to define the dataset and model configuration for training Detection Models. Here is an example of the YAML format used for defining a detection dataset:

```yaml

-train:

-val:

-

-nc:

-names: [, , ..., ]

+# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

+path: ../datasets/coco8-pose # dataset root dir

+train: images/train # train images (relative to 'path') 4 images

+val: images/val # val images (relative to 'path') 4 images

+test: # test images (optional)

# Keypoints

-kpt_shape: [num_kpts, dim] # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)

-flip_idx: [n1, n2 ... , n(num_kpts)]

-

-```

-

-The `train` and `val` fields specify the paths to the directories containing the training and validation images, respectively.

-

-The `nc` field specifies the number of object classes in the dataset.

-

-The `names` field is a list of the names of the object classes. The order of the names should match the order of the object class indices in the YOLO dataset files.

-

-NOTE: Either `nc` or `names` must be defined. Defining both are not mandatory

-

-Alternatively, you can directly define class names like this:

+kpt_shape: [17, 3] # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)

+flip_idx: [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]

-```

+# Classes dictionary

names:

0: person

- 1: bicycle

```

-(Optional) if the points are symmetric then need flip_idx, like left-right side of human or face.

-For example let's say there're five keypoints of facial landmark: [left eye, right eye, nose, left point of mouth, right point of mouse], and the original index is [0, 1, 2, 3, 4], then flip_idx is [1, 0, 2, 4, 3].(just exchange the left-right index, i.e 0-1 and 3-4, and do not modify others like nose in this example)

-

-** Example **

-

-```yaml

-train: data/train/

-val: data/val/

+The `train` and `val` fields specify the paths to the directories containing the training and validation images, respectively.

-nc: 2

-names: ['person', 'car']

+`names` is a dictionary that maps each class index to its class name. The keys must match the object class indices used in the YOLO dataset files.
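Because `names` maps class indices to names, a quick consistency check is to confirm that every class index used in the label files has an entry. This sketch takes the already-parsed `names` mapping as a plain dict (loading the YAML, for example with PyYAML, is left out) together with a list of label rows; `missing_class_ids` is a hypothetical helper.

```python
from typing import Dict, Iterable, Set


def missing_class_ids(names: Dict[int, str], label_rows: Iterable[str]) -> Set[int]:
    """Return class indices that appear in label rows but have no entry in names.

    Hypothetical helper for illustration; blank rows are ignored.
    """
    # The first field of every non-empty row is the class index
    used = {int(row.split()[0]) for row in label_rows if row.strip()}
    return used - set(names)


names = {0: "person"}
rows = ["0 0.5 0.5 0.2 0.4", "1 0.3 0.7 0.4 0.2", ""]
print(missing_class_ids(names, rows))  # {1}
```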

-# Keypoints

-kpt_shape: [17, 3] # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)

-flip_idx: [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]

-```

+(Optional) If the keypoints are symmetric, such as the left and right sides of a human body or face, you need to define `flip_idx`.

+For example, assume five facial landmark keypoints: [left eye, right eye, nose, left mouth, right mouth], with original indices [0, 1, 2, 3, 4]. Then `flip_idx` is [1, 0, 2, 4, 3]: swap only the left-right index pairs (0-1 and 3-4) and leave the others, such as the nose, unchanged.
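The sketch below illustrates what `flip_idx` is for. When an image is mirrored horizontally, every x coordinate becomes `1 - x` (assuming normalized coordinates), and left/right keypoints must also swap slots so that "left eye" still labels the subject's left eye. This illustrates the idea under those assumptions and is not the Ultralytics augmentation code; `hflip_keypoints` is a hypothetical name.

```python
from typing import List, Sequence, Tuple

Keypoint = Tuple[float, float]


def hflip_keypoints(kpts: Sequence[Keypoint], flip_idx: Sequence[int]) -> List[Keypoint]:
    """Mirror normalized (x, y) keypoints horizontally and reorder them with flip_idx.

    Hypothetical helper for illustration only.
    """
    if sorted(flip_idx) != list(range(len(kpts))):
        raise ValueError("flip_idx must be a permutation of the keypoint indices")
    # Mirror each point around the vertical center line of the image
    mirrored = [(1.0 - x, y) for x, y in kpts]
    # Output slot i takes the mirrored point that was stored at index flip_idx[i]
    return [mirrored[j] for j in flip_idx]


# [left eye, right eye, nose, left mouth, right mouth]
face = [(0.125, 0.25), (0.375, 0.25), (0.25, 0.5), (0.125, 0.75), (0.375, 0.75)]
print(hflip_keypoints(face, [1, 0, 2, 4, 3]))
```

Without the reordering step, the mirrored left eye would end up in the right-eye slot, which is why symmetric keypoint sets need `flip_idx`.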

## Usage

@@ -112,14 +89,40 @@ flip_idx: [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]

## Supported Datasets

-TODO

+This section outlines the datasets that are compatible with Ultralytics YOLO format and can be used for training pose estimation models:

-## Port or Convert label formats

+### COCO-Pose

-### COCO dataset format to YOLO format

+- **Description**: COCO-Pose is a large-scale object detection, segmentation, and pose estimation dataset. It is a subset of the popular COCO dataset and focuses on human pose estimation. COCO-Pose includes multiple keypoints for each human instance.

+- **Label Format**: Same as Ultralytics YOLO format as described above, with keypoints for human poses.

+- **Number of Classes**: 1 (Human).

+- **Keypoints**: 17 keypoints including nose, eyes, ears, shoulders, elbows, wrists, hips, knees, and ankles.

+- **Usage**: Suitable for training human pose estimation models.

+- **Additional Notes**: The dataset is rich and diverse, containing over 200k labeled images.

+- [Read more about COCO-Pose](./coco.md)

-```

+### COCO8-Pose

+

+- **Description**: [Ultralytics](https://ultralytics.com) COCO8-Pose is a small but versatile pose detection dataset composed of the first 8 images of the COCO train 2017 set, 4 for training and 4 for validation.

+- **Label Format**: Same as Ultralytics YOLO format as described above, with keypoints for human poses.

+- **Number of Classes**: 1 (Human).

+- **Keypoints**: 17 keypoints including nose, eyes, ears, shoulders, elbows, wrists, hips, knees, and ankles.

+- **Usage**: Suitable for testing and debugging pose estimation models, or for experimenting with new pose estimation approaches.

+- **Additional Notes**: COCO8-Pose is ideal for sanity checks and CI checks.

+- [Read more about COCO8-Pose](./coco8-pose.md)

+

+### Adding your own dataset

+

+If you have your own dataset and would like to use it for training pose estimation models with Ultralytics YOLO format, ensure that it follows the format specified above under "Ultralytics YOLO format". Convert your annotations to the required format and specify the paths, number of classes, and class names in the YAML configuration file.

+

+### Conversion Tool

+

+Ultralytics provides a convenient conversion tool to convert labels from the popular COCO dataset format to YOLO format:

+

+```python

from ultralytics.yolo.data.converter import convert_coco

convert_coco(labels_dir='../coco/annotations/', use_keypoints=True)

-```

\ No newline at end of file

+```

+

+This conversion tool can be used to convert the COCO dataset or any dataset in the COCO format to the Ultralytics YOLO format. The `use_keypoints` parameter specifies whether to include keypoints (for pose estimation) in the converted labels.

diff --git a/docs/datasets/segment/index.md b/docs/datasets/segment/index.md

index 7d24e4160..5bafefe6d 100644

--- a/docs/datasets/segment/index.md

+++ b/docs/datasets/segment/index.md

@@ -35,46 +35,36 @@ Here is an example of the YOLO dataset format for a single image with two object

1 0.5046 0.0 0.5015 0.004 0.4984 0.00416 0.4937 0.010 0.492 0.0104

```

-Note: The length of each row does not have to be equal.

+!!! tip "Tip"

-** Dataset file format **

+ - The length of each row does not have to be equal.

+ - Each segmentation label must have a **minimum of 3 xy points**, that is, a class index followed by at least six coordinate values (x1 y1 x2 y2 x3 y3).
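A quick way to catch malformed segmentation rows before training is to check them against the rules in the tip above. The sketch assumes each row is a class index followed by normalized `x y` pairs; `check_segment_row` is a hypothetical helper, not part of Ultralytics.

```python
def check_segment_row(row: str) -> int:
    """Validate one segmentation label row and return its number of polygon points.

    Hypothetical helper for illustration. Assumes the layout
    class x1 y1 x2 y2 ... xn yn with coordinates normalized to [0, 1].
    """
    fields = row.split()
    if not fields:
        raise ValueError("empty row")
    class_field, coords = fields[0], fields[1:]
    if not class_field.isdigit():
        raise ValueError(f"class index must be a non-negative integer, got {class_field!r}")
    if len(coords) % 2:
        raise ValueError("coordinates must come in x y pairs")
    num_points = len(coords) // 2
    if num_points < 3:
        raise ValueError(f"a polygon needs at least 3 points, got {num_points}")
    if not all(0.0 <= float(v) <= 1.0 for v in coords):
        raise ValueError("coordinates must be normalized to [0, 1]")
    return num_points


# Row taken from the example above: 5 (x, y) points
print(check_segment_row("1 0.5046 0.0 0.5015 0.004 0.4984 0.00416 0.4937 0.010 0.492 0.0104"))  # 5
```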

+

+### Dataset YAML format

The Ultralytics framework uses a YAML file format to define the dataset and model configuration for training Detection Models. Here is an example of the YAML format used for defining a detection dataset:

```yaml

-train:

-val:

-

-nc:

-names: [ , , ..., ]

-

-```

-

-The `train` and `val` fields specify the paths to the directories containing the training and validation images, respectively.

+# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

+path: ../datasets/coco8-seg # dataset root dir

+train: images/train # train images (relative to 'path') 4 images

+val: images/val # val images (relative to 'path') 4 images

+test: # test images (optional)

-The `nc` field specifies the number of object classes in the dataset.

-

-The `names` field is a list of the names of the object classes. The order of the names should match the order of the object class indices in the YOLO dataset files.

-

-NOTE: Either `nc` or `names` must be defined. Defining both are not mandatory.

-

-Alternatively, you can directly define class names like this:

-

-```yaml

+# Classes (80 COCO classes)

names:

0: person

1: bicycle

+ 2: car

+ ...

+ 77: teddy bear

+ 78: hair drier

+ 79: toothbrush

```

-** Example **

-

-```yaml

-train: data/train/

-val: data/val/

+The `train` and `val` fields specify the paths to the directories containing the training and validation images, respectively.

-nc: 2

-names: [ 'person', 'car' ]

-```

+`names` is a dictionary that maps each class index to its class name. The keys must match the object class indices used in the YOLO dataset files.

## Usage

@@ -100,16 +90,29 @@ names: [ 'person', 'car' ]

## Supported Datasets

-## Port or Convert label formats

+* [COCO](coco.md): A large-scale dataset designed for object detection, segmentation, and captioning tasks with over 200K labeled images.

+* [COCO8-seg](coco8-seg.md): A smaller dataset for instance segmentation tasks, containing a subset of 8 COCO images with segmentation annotations.

-### COCO dataset format to YOLO format

+### Adding your own dataset

-```

+If you have your own dataset and would like to use it for training segmentation models with Ultralytics YOLO format, ensure that it follows the format specified above under "Ultralytics YOLO format". Convert your annotations to the required format and specify the paths, number of classes, and class names in the YAML configuration file.

+

+## Port or Convert Label Formats

+

+### COCO Dataset Format to YOLO Format

+

+You can easily convert labels from the popular COCO dataset format to the YOLO format using the following code snippet:

+

+```python

from ultralytics.yolo.data.converter import convert_coco

convert_coco(labels_dir='../coco/annotations/', use_segments=True)

```

+This conversion tool can be used to convert the COCO dataset or any dataset in the COCO format to the Ultralytics YOLO format.

+

+Remember to double-check that the dataset you want to use is compatible with your model and follows the necessary format conventions. Properly formatted datasets are crucial for training successful segmentation models.

+

## Auto-Annotation

Auto-annotation is an essential feature that allows you to generate a segmentation dataset using a pre-trained detection model. It enables you to quickly and accurately annotate a large number of images without the need for manual labeling, saving time and effort.

diff --git a/docs/help/environmental-health-safety.md b/docs/help/environmental-health-safety.md

new file mode 100644

index 000000000..2d072a571

--- /dev/null

+++ b/docs/help/environmental-health-safety.md

@@ -0,0 +1,37 @@

+---

+comments: false

+description: Discover Ultralytics' commitment to Environmental, Health, and Safety (EHS). Learn about our policy, principles, and strategies for ensuring a sustainable and safe working environment.

+keywords: Ultralytics, Environmental Policy, Health and Safety, EHS, Sustainability, Workplace Safety, Environmental Compliance

+---

+

+# Ultralytics Environmental, Health and Safety (EHS) Policy

+

+At Ultralytics, we recognize that the long-term success of our company relies not only on the products and services we offer, but also the manner in which we conduct our business. We are committed to ensuring the safety and well-being of our employees, stakeholders, and the environment, and we will continuously strive to mitigate our impact on the environment while promoting health and safety.

+

+## Policy Principles

+

+1. **Compliance**: We will comply with all applicable laws, regulations, and standards related to EHS, and we will strive to exceed these standards where possible.

+

+2. **Prevention**: We will work to prevent accidents, injuries, and environmental harm by implementing risk management measures and ensuring all our operations and procedures are safe.

+

+3. **Continuous Improvement**: We will continuously improve our EHS performance by setting measurable objectives, monitoring our performance, auditing our operations, and revising our policies and procedures as needed.

+

+4. **Communication**: We will communicate openly about our EHS performance and will engage with stakeholders to understand and address their concerns and expectations.

+

+5. **Education and Training**: We will educate and train our employees and contractors in appropriate EHS procedures and practices.

+

+## Implementation Measures

+

+1. **Responsibility and Accountability**: Every employee and contractor working at or with Ultralytics is responsible for adhering to this policy. Managers and supervisors are accountable for ensuring this policy is implemented within their areas of control.

+

+2. **Risk Management**: We will identify, assess, and manage EHS risks associated with our operations and activities to prevent accidents, injuries, and environmental harm.

+

+3. **Resource Allocation**: We will allocate the necessary resources to ensure the effective implementation of our EHS policy, including the necessary equipment, personnel, and training.

+

+4. **Emergency Preparedness and Response**: We will develop, maintain, and test emergency preparedness and response plans to ensure we can respond effectively to EHS incidents.

+

+5. **Monitoring and Review**: We will monitor and review our EHS performance regularly to identify opportunities for improvement and ensure we are meeting our objectives.

+

+This policy reflects our commitment to minimizing our environmental footprint, ensuring the safety and well-being of our employees, and continuously improving our performance.

+

+Please remember that the implementation of an effective EHS policy requires the involvement and commitment of everyone working at or with Ultralytics. We encourage you to take personal responsibility for your safety and the safety of others, and to take care of the environment in which we live and work.

diff --git a/docs/help/index.md b/docs/help/index.md

index 9647552b3..ed6b93e6e 100644

--- a/docs/help/index.md

+++ b/docs/help/index.md

@@ -12,6 +12,7 @@ Welcome to the Ultralytics Help page! We are committed to providing you with com

- [Contributor License Agreement (CLA)](CLA.md): Familiarize yourself with our CLA to understand the terms and conditions for contributing to Ultralytics projects.

- [Minimum Reproducible Example (MRE) Guide](minimum_reproducible_example.md): Understand how to create an MRE when submitting bug reports to ensure that our team can quickly and efficiently address the issue.

- [Code of Conduct](code_of_conduct.md): Learn about our community guidelines and expectations to ensure a welcoming and inclusive environment for all participants.

+- [Environmental, Health and Safety (EHS) Policy](environmental-health-safety.md): Explore Ultralytics' dedicated approach towards maintaining a sustainable, safe, and healthy work environment for all our stakeholders.

- [Security Policy](../SECURITY.md): Understand our security practices and how to report security vulnerabilities responsibly.

We highly recommend going through these guides to make the most of your collaboration with the Ultralytics community. Our goal is to maintain a welcoming and supportive environment for all users and contributors. If you need further assistance, don't hesitate to reach out to us through GitHub Issues or the official discussion forum. Happy coding!

\ No newline at end of file

diff --git a/docs/help/minimum_reproducible_example.md b/docs/help/minimum_reproducible_example.md

index 758287cc0..1a8acd27b 100644

--- a/docs/help/minimum_reproducible_example.md

+++ b/docs/help/minimum_reproducible_example.md

@@ -6,7 +6,7 @@ keywords: Ultralytics, YOLO, bug report, minimum reproducible example, MRE, isol

# Creating a Minimum Reproducible Example for Bug Reports in Ultralytics YOLO Repositories

-When submitting a bug report for Ultralytics YOLO repositories, it's essential to provide a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example) (MRE). An MRE is a small, self-contained piece of code that demonstrates the problem you're experiencing. Providing an MRE helps maintainers and contributors understand the issue and work on a fix more efficiently. This guide explains how to create an MRE when submitting bug reports to Ultralytics YOLO repositories.

+When submitting a bug report for Ultralytics YOLO repositories, it's essential to provide a [minimum reproducible example](https://docs.ultralytics.com/help/minimum_reproducible_example/) (MRE). An MRE is a small, self-contained piece of code that demonstrates the problem you're experiencing. Providing an MRE helps maintainers and contributors understand the issue and work on a fix more efficiently. This guide explains how to create an MRE when submitting bug reports to Ultralytics YOLO repositories.

## 1. Isolate the Problem

diff --git a/docs/hub/datasets.md b/docs/hub/datasets.md

index d09f77b32..c1bdc38ef 100644

--- a/docs/hub/datasets.md

+++ b/docs/hub/datasets.md

@@ -86,7 +86,7 @@ Also, you can analyze your dataset by click on the **Overview** tab.

-Next, [train a model](./models.md) on your dataset.

+Next, [train a model](https://docs.ultralytics.com/hub/models/#train-model) on your dataset.

diff --git a/docs/hub/index.md b/docs/hub/index.md

index 6e25dc278..30f1652b6 100644

--- a/docs/hub/index.md

+++ b/docs/hub/index.md

@@ -29,7 +29,7 @@ easily upload their data and train new models quickly. It offers a range of pre-

templates to choose from, making it easy for users to get started with training their own models. Once a model is

trained, it can be easily deployed and used for real-time object detection, instance segmentation and classification tasks.

-We hope that the resources here will help you get the most out of HUB. Please browse the HUB Docs for details, raise an issue on GitHub for support, and join our Discord community for questions and discussions!

+We hope that the resources here will help you get the most out of HUB. Please browse the HUB Docs for details, raise an issue on GitHub for support, and join our Discord community for questions and discussions!

- [**Quickstart**](./quickstart.md). Start training and deploying YOLO models with HUB in seconds.

- [**Datasets: Preparing and Uploading**](./datasets.md). Learn how to prepare and upload your datasets to HUB in YOLO format.

diff --git a/docs/hub/models.md b/docs/hub/models.md

index 5ae171f25..31cba2df9 100644

--- a/docs/hub/models.md

+++ b/docs/hub/models.md

@@ -4,18 +4,210 @@ description: Train and Deploy your Model to 13 different formats, including Tens

keywords: Ultralytics, HUB, models, artificial intelligence, APIs, export models, TensorFlow, ONNX, Paddle, OpenVINO, CoreML, iOS, Android

---

-# HUB Models

+# Ultralytics HUB Models

-## Train a Model

+Ultralytics HUB models provide a streamlined solution for training vision AI models on your custom datasets.

-Connect to the Ultralytics HUB notebook and use your model API key to begin training!

+The process is user-friendly and efficient: model creation takes three simple steps, and training is accelerated by Ultralytics YOLOv8. During training, real-time updates on model metrics let you monitor each step of the progress. Once training is complete, you can preview your model and easily deploy it to real-world applications. In short, Ultralytics HUB offers a comprehensive yet straightforward system for model creation, training, evaluation, and deployment.

+

+## Train Model

+

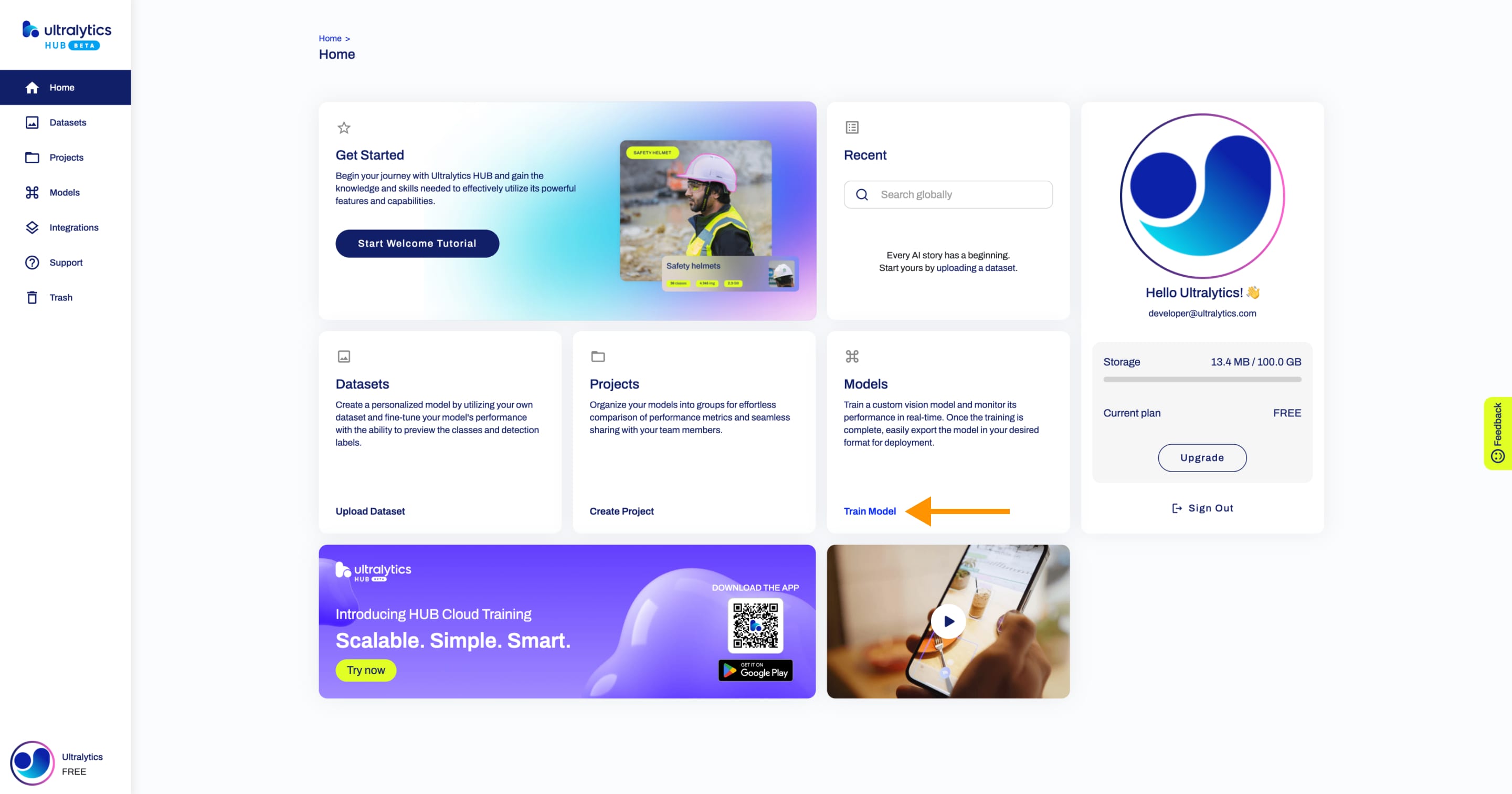

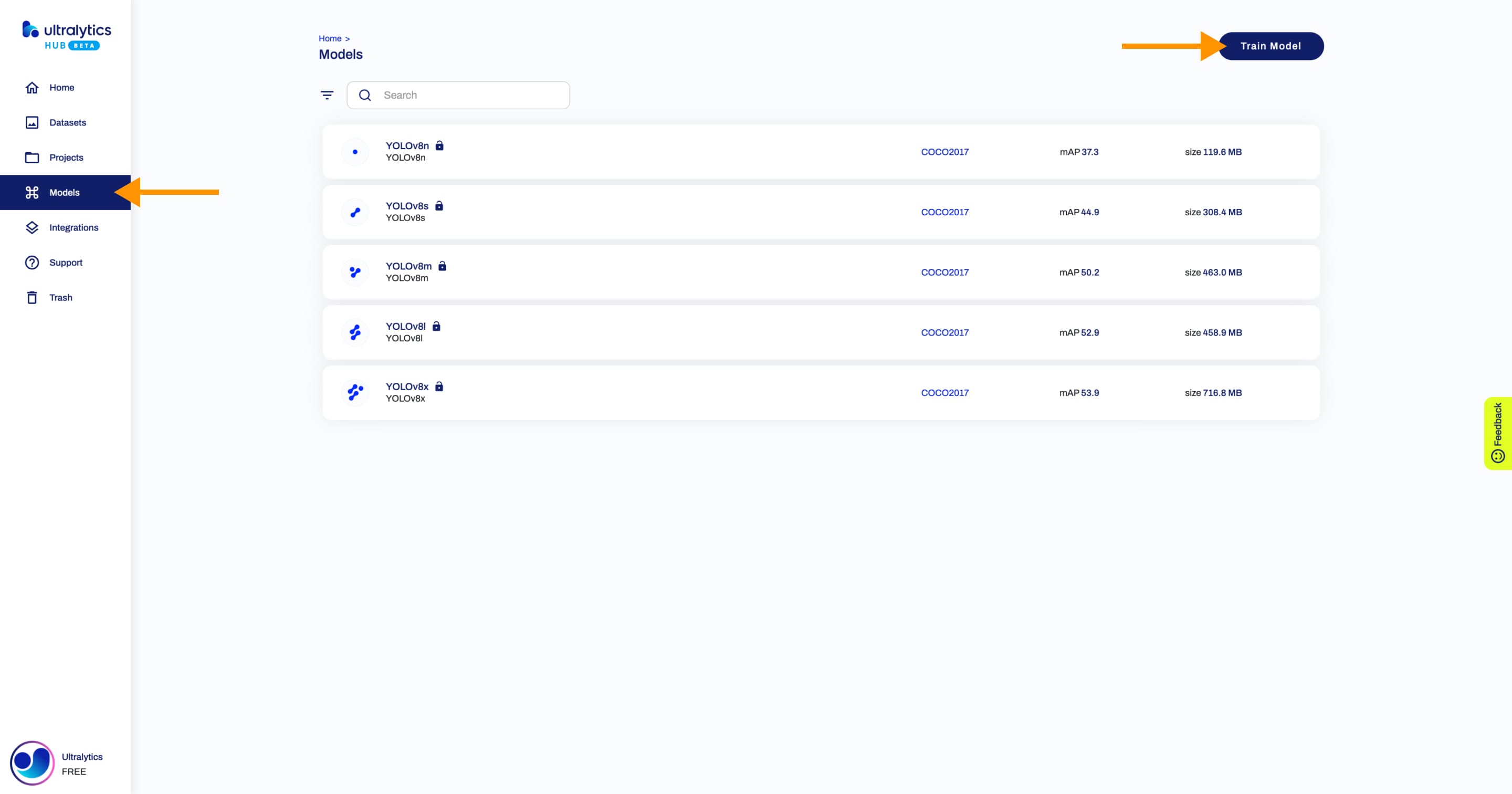

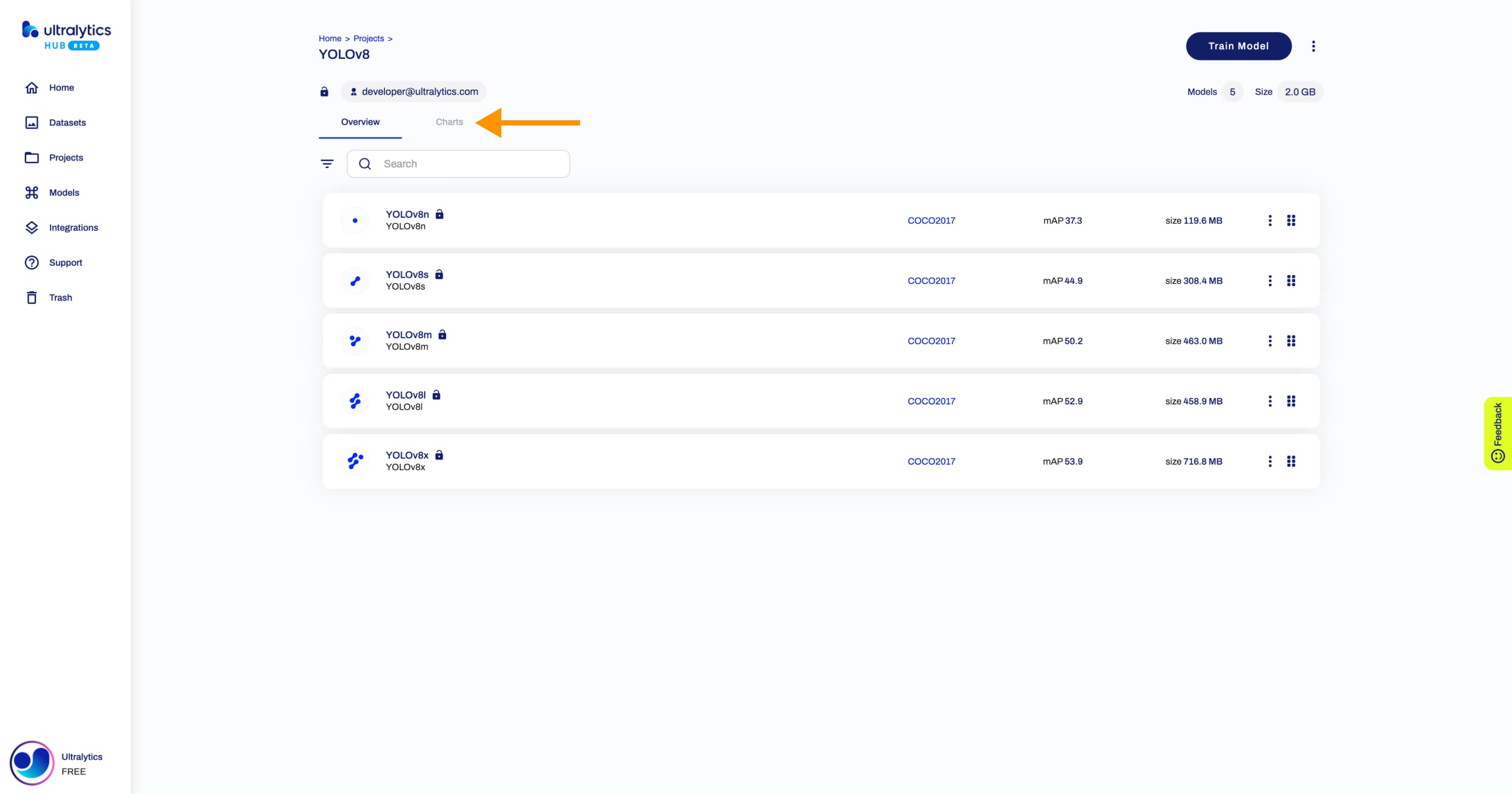

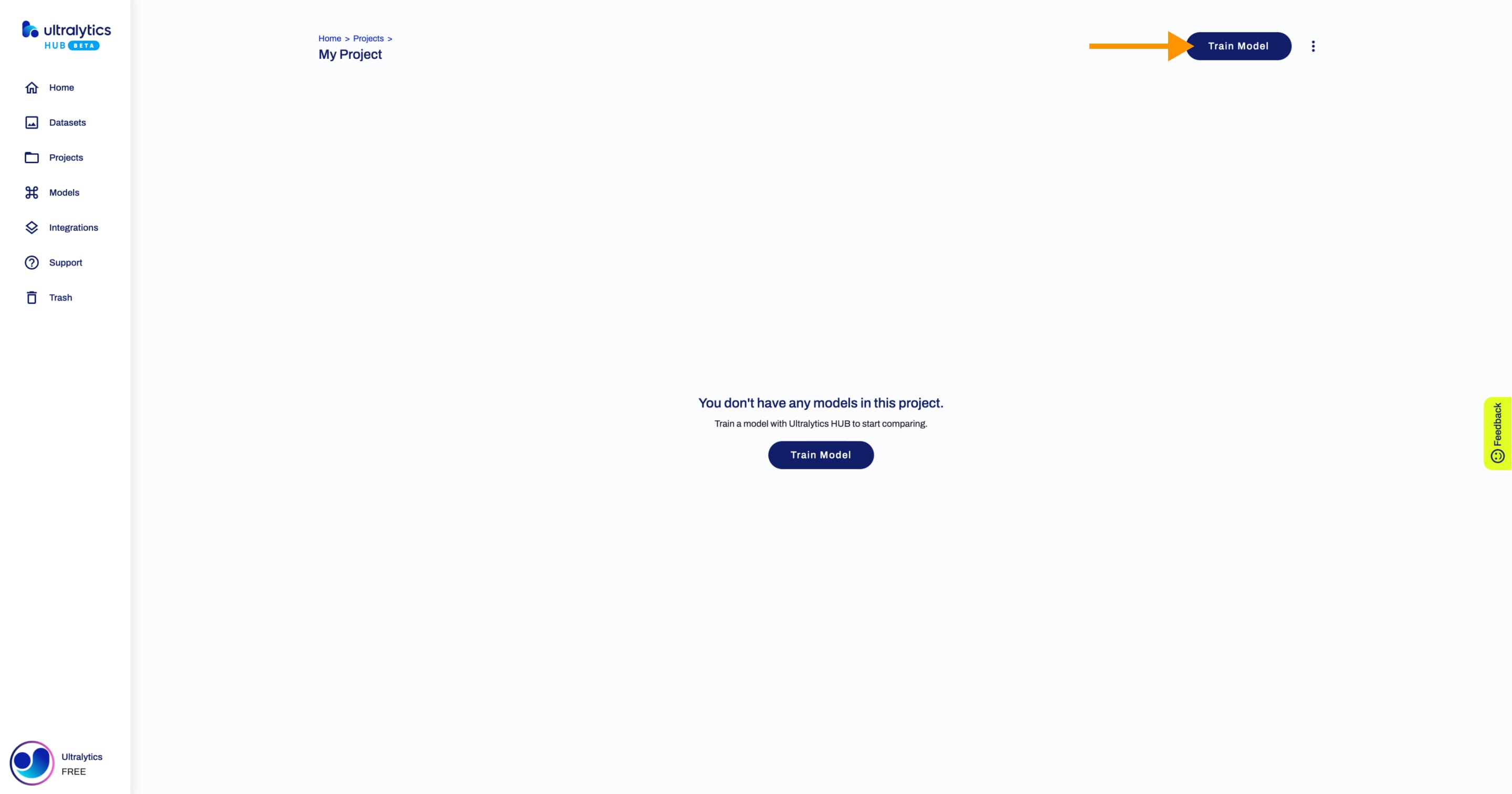

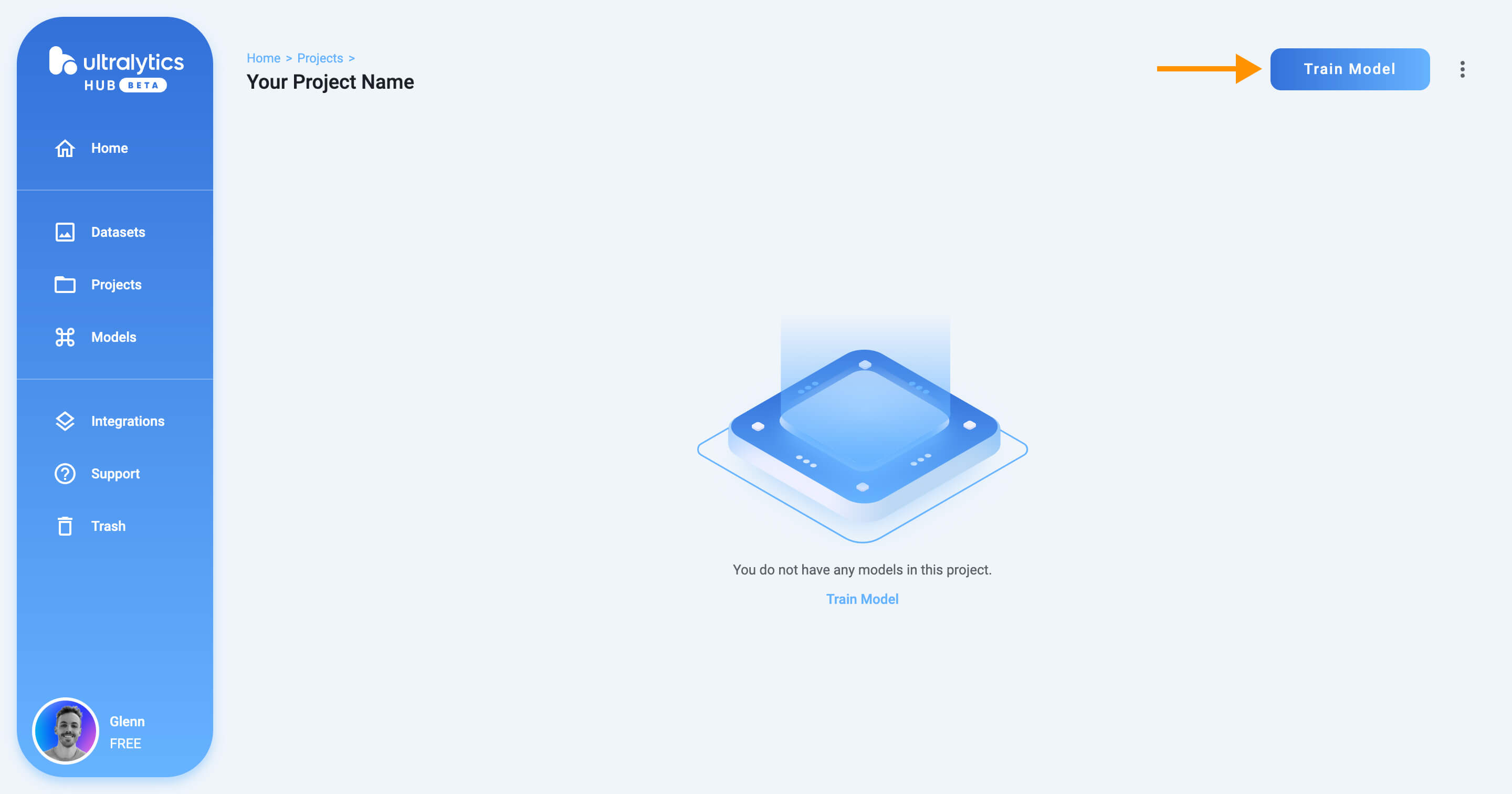

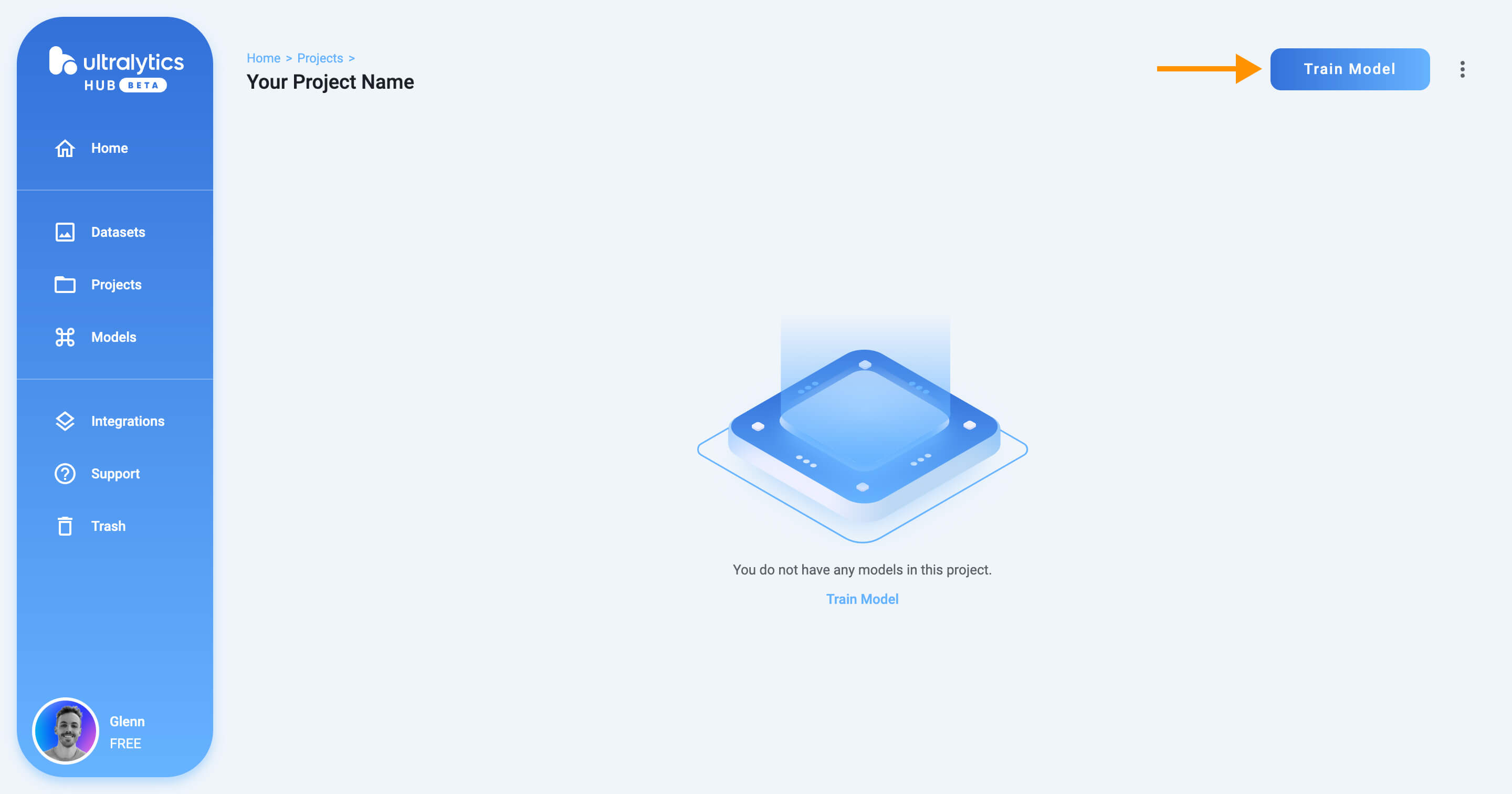

+Navigate to the [Models](https://hub.ultralytics.com/models) page by clicking on the **Models** button in the sidebar.

+

+

+

+??? tip "Tip"

+

+ You can also train a model directly from the [Home](https://hub.ultralytics.com/home) page.

+

+

+

+Click on the **Train Model** button on the top right of the page. This action will trigger the **Train Model** dialog.

+

+

+

+The **Train Model** dialog has three simple steps, explained below.

+

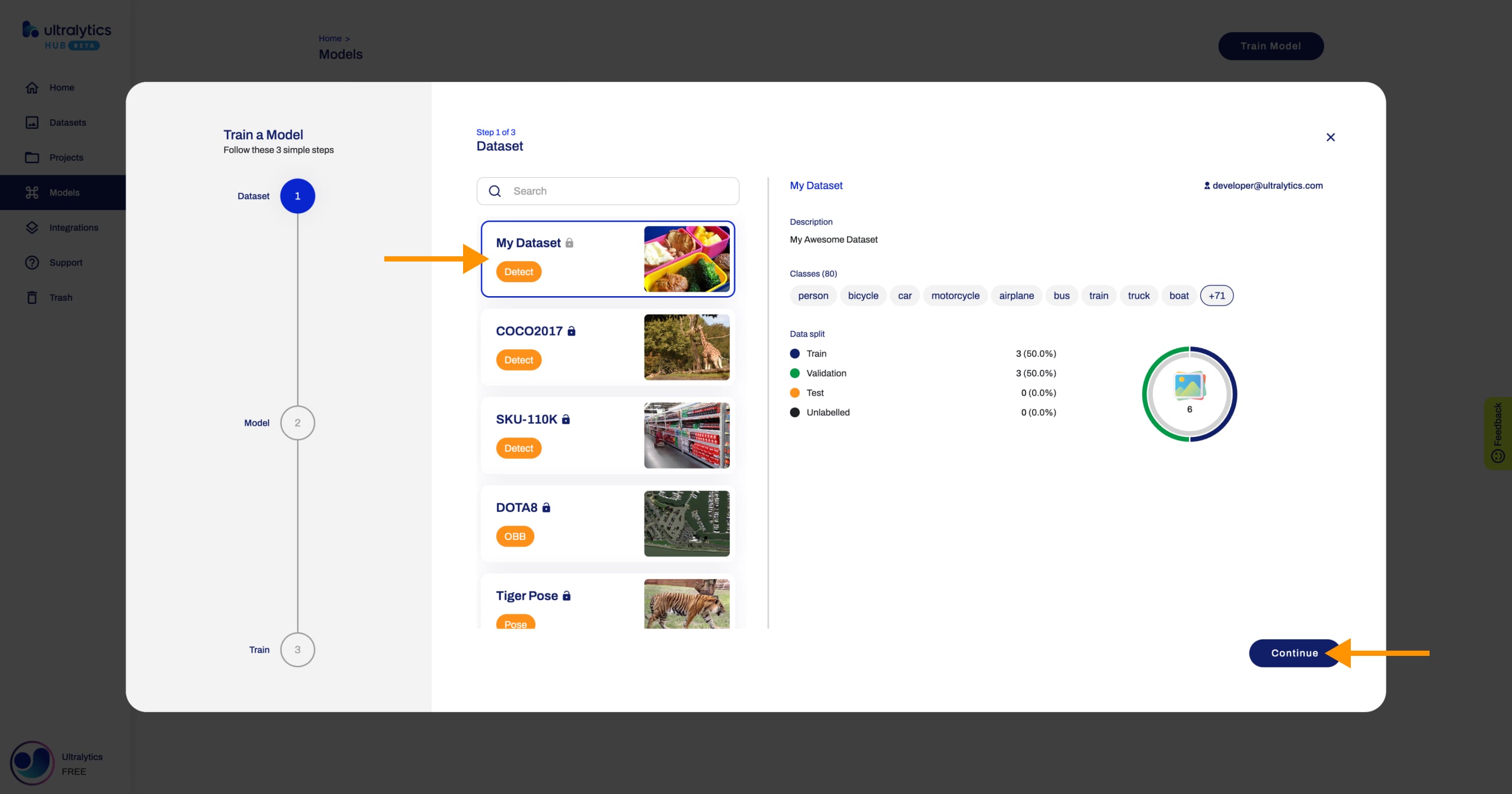

+### 1. Dataset

+

+In this step, select the dataset you want to train your model on. After selecting a dataset, click **Continue**.

+

+

+

+??? tip "Tip"

+

+ You can skip this step if you train a model directly from the Dataset page.

+

+

+

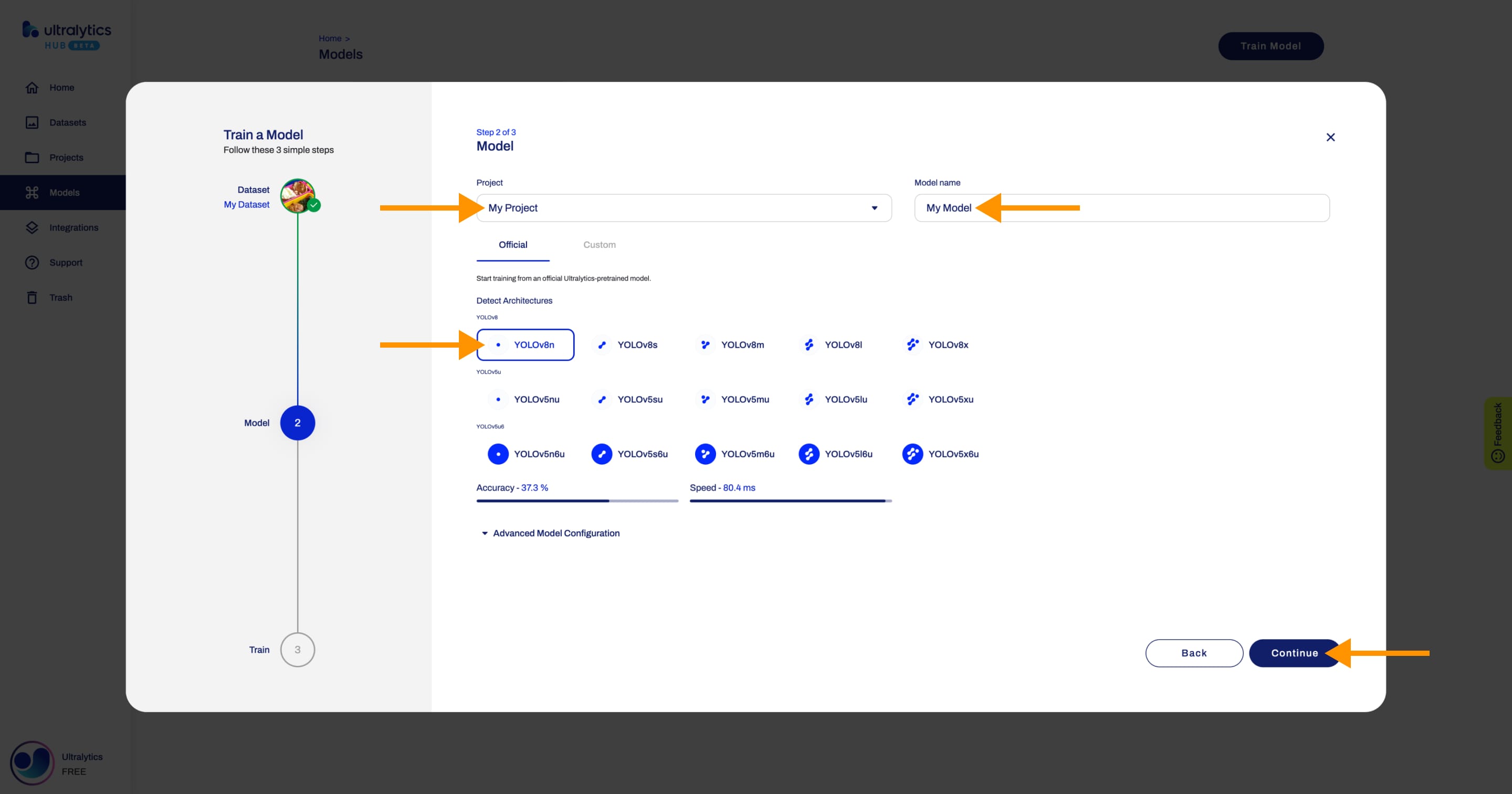

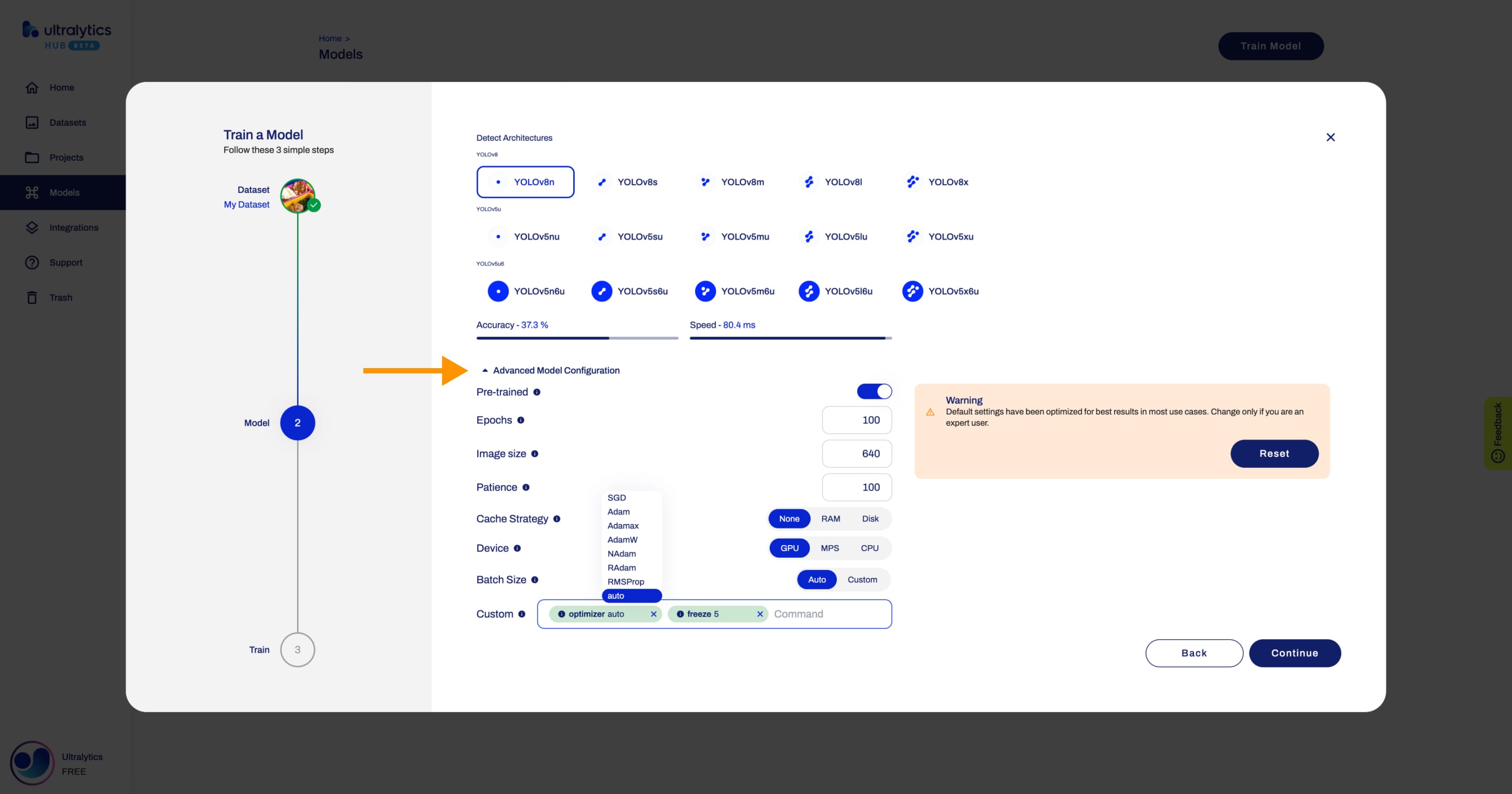

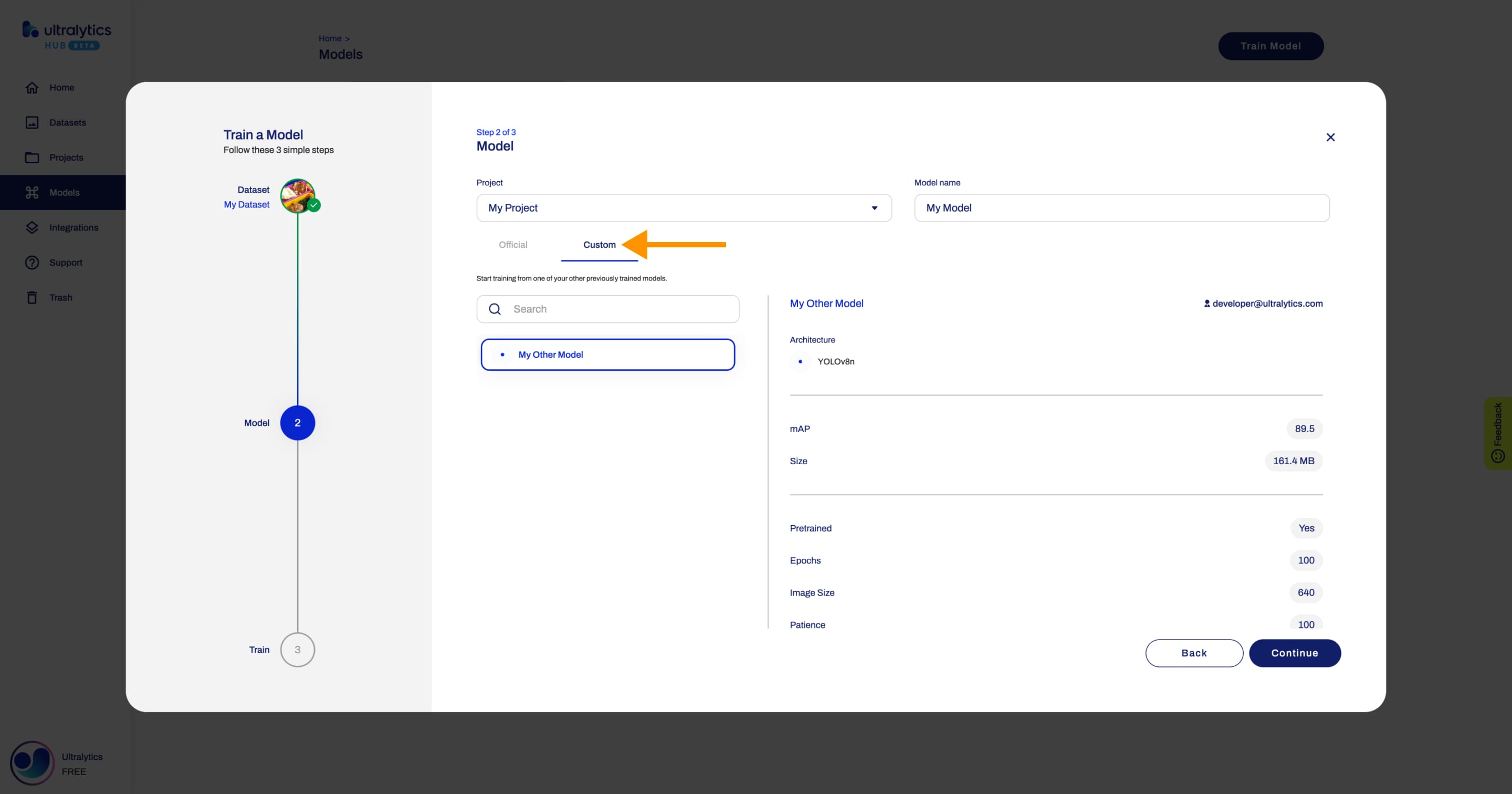

+### 2. Model

+

+In this step, choose the project in which you want to create your model, the name of your model, and your model's architecture.

+

+??? note "Note"

+

+ Ultralytics HUB will try to pre-select the project.

+

+ If you opened the **Train Model** dialog as described above, Ultralytics HUB will pre-select the last project you used.

+

+ If you opened the **Train Model** dialog from the Project page, Ultralytics HUB will pre-select the project you were inside of.

+

+

+

+ If you don't have a project yet, you can set its name in this step and it will be created together with your model.
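The pre-selection rules in the note above can be summarized as a small decision function. This is a hypothetical sketch for illustration only: `preselect_project` and its `opened_from` values are invented names, not part of any Ultralytics HUB API, and the final fallback (nothing pre-selected) is an assumption the docs do not specify.

```python
from typing import Optional, Sequence


def preselect_project(
    opened_from: str,
    projects: Sequence[str],
    last_used: Optional[str] = None,
    current: Optional[str] = None,
) -> Optional[str]:
    """Hypothetical model of the Train Model dialog's project pre-selection.

    Returns the project to pre-select, or None when nothing is pre-selected.
    """
    if not projects:
        # No project exists yet: you type a name and it is created with the model.
        return None
    if opened_from == "project_page" and current in projects:
        # Opened from a Project page: pre-select the project you were inside of.
        return current
    if last_used in projects:
        # Opened from the Models (or Home) page: pre-select the last used project.
        return last_used
    # Assumption (not documented): fall back to no pre-selection.
    return None
```

For example, opening the dialog from the Models page with `last_used="b"` pre-selects `"b"`, while opening it from the Project page of `"a"` pre-selects `"a"`.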

+

+

+

+!!! info "Info"

+

+ You can read more about the available [YOLOv8](https://docs.ultralytics.com/models/yolov8) (and [YOLOv5](https://docs.ultralytics.com/models/yolov5)) architectures in our documentation.

+

+When you're happy with your model configuration, click **Continue**.

+

+

+

+??? note "Note"

+

+ By default, your model will use a pre-trained model (trained on the [COCO](https://docs.ultralytics.com/datasets/detect/coco) dataset) to reduce training time.

+

+ You can change this behaviour by opening the **Advanced Options** accordion.

+

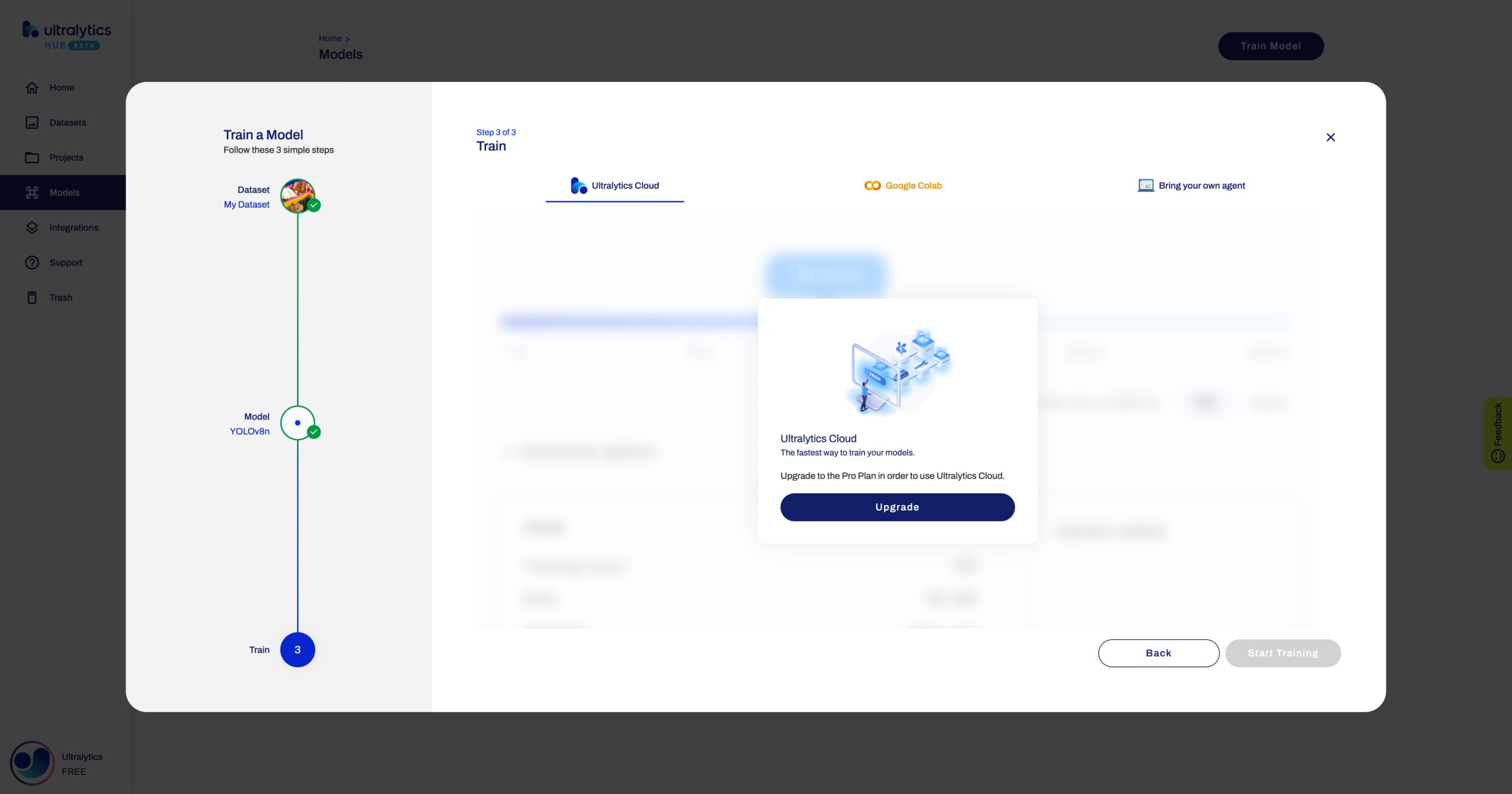

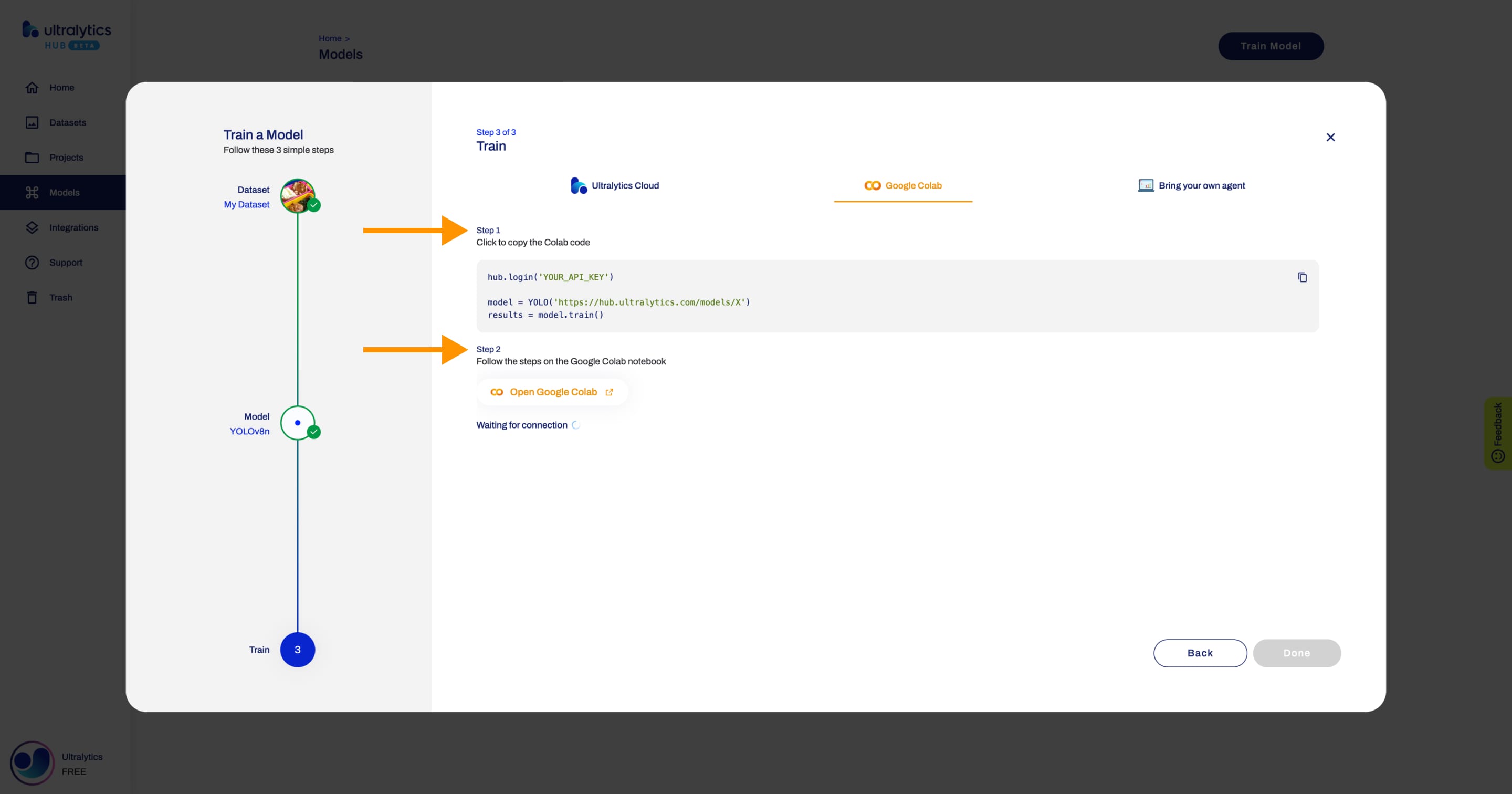

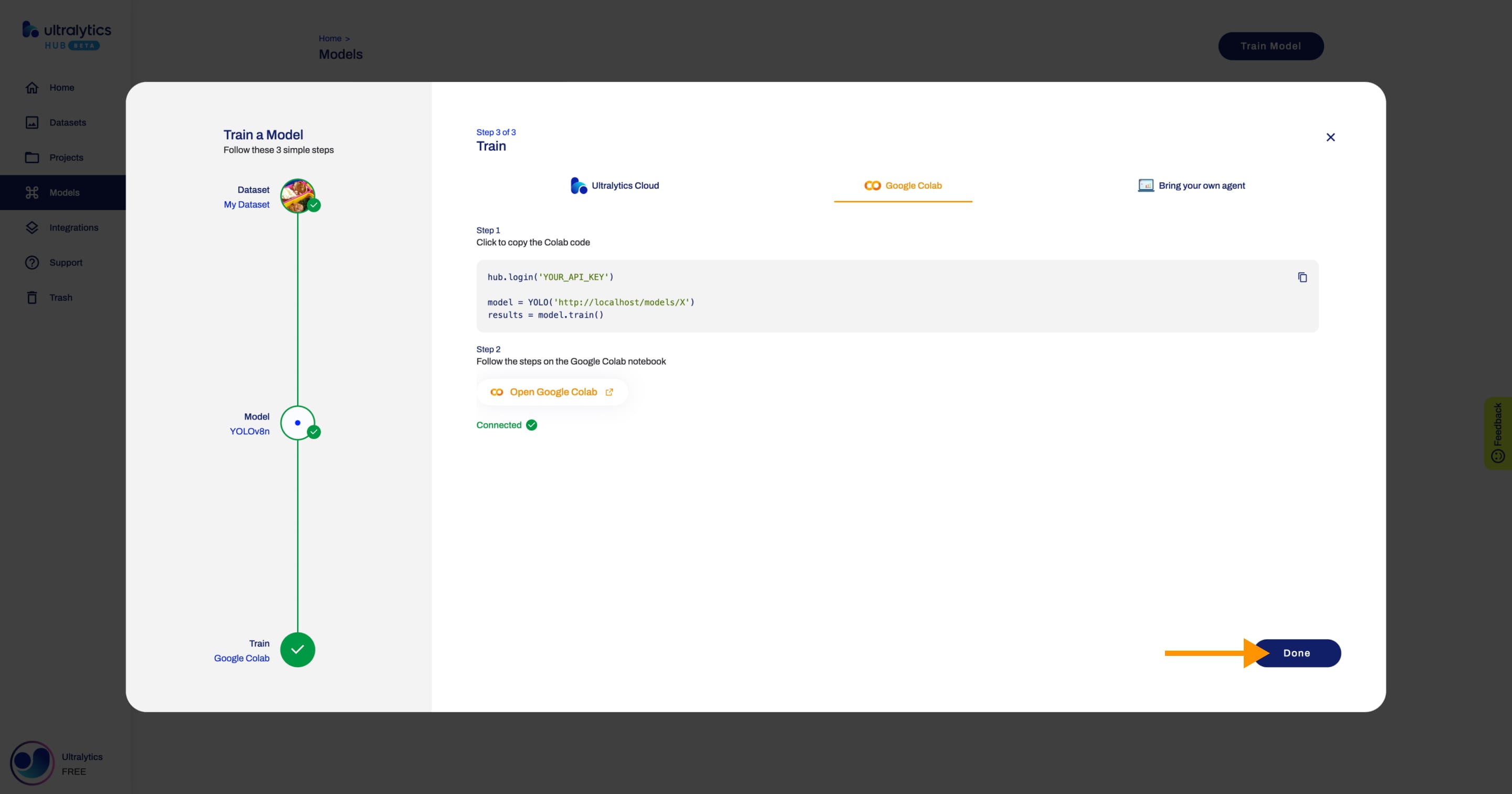

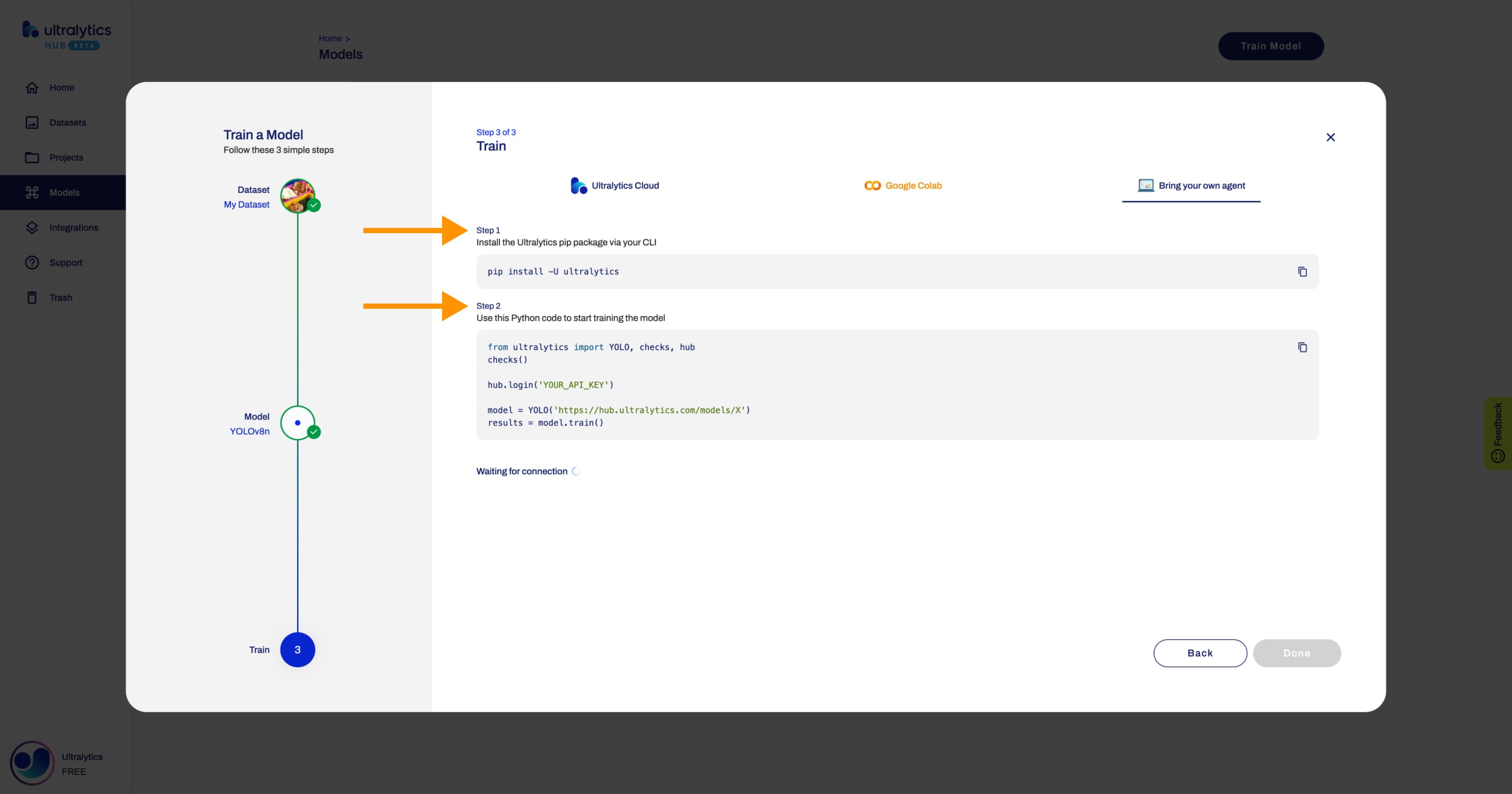

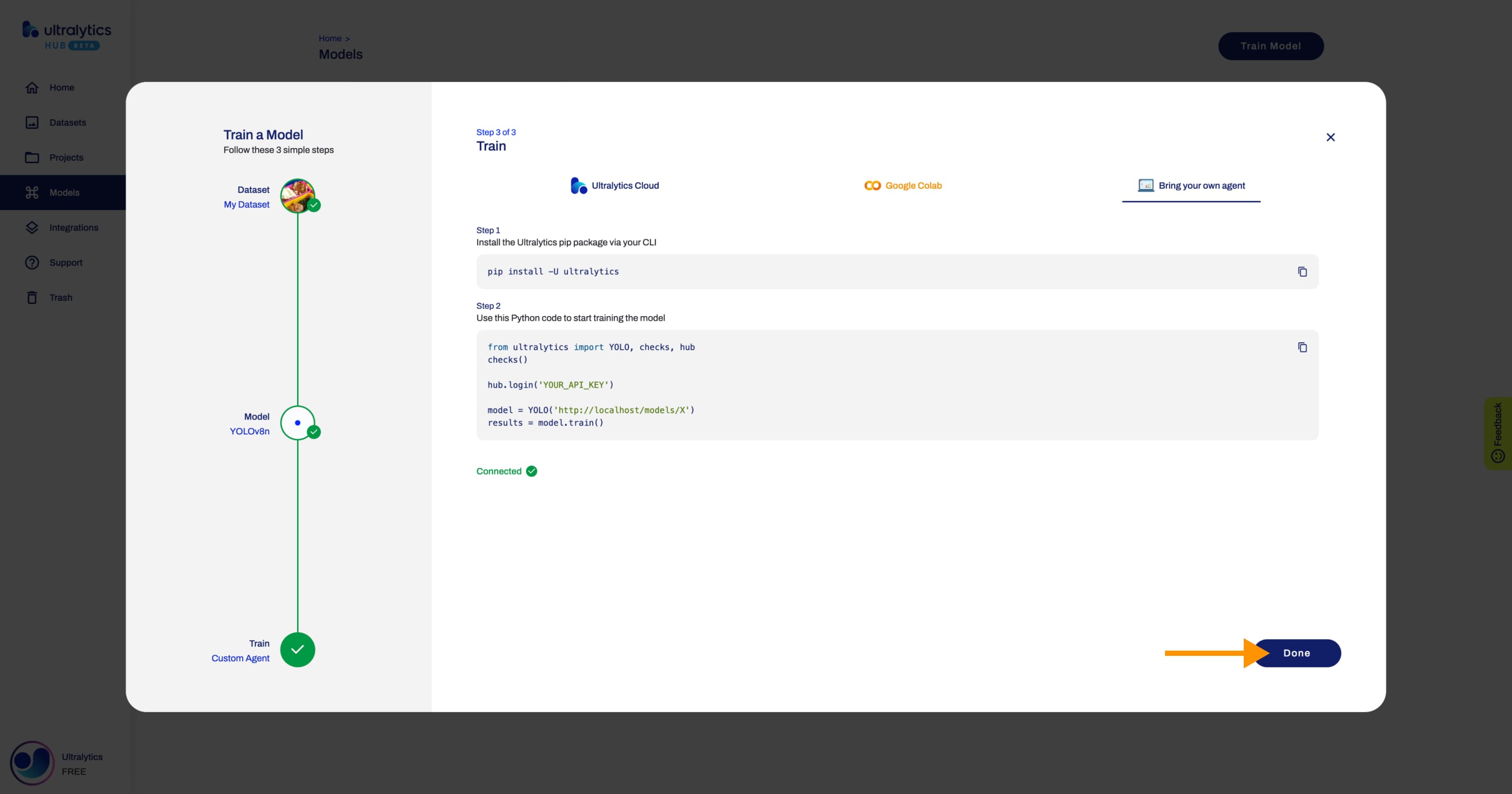

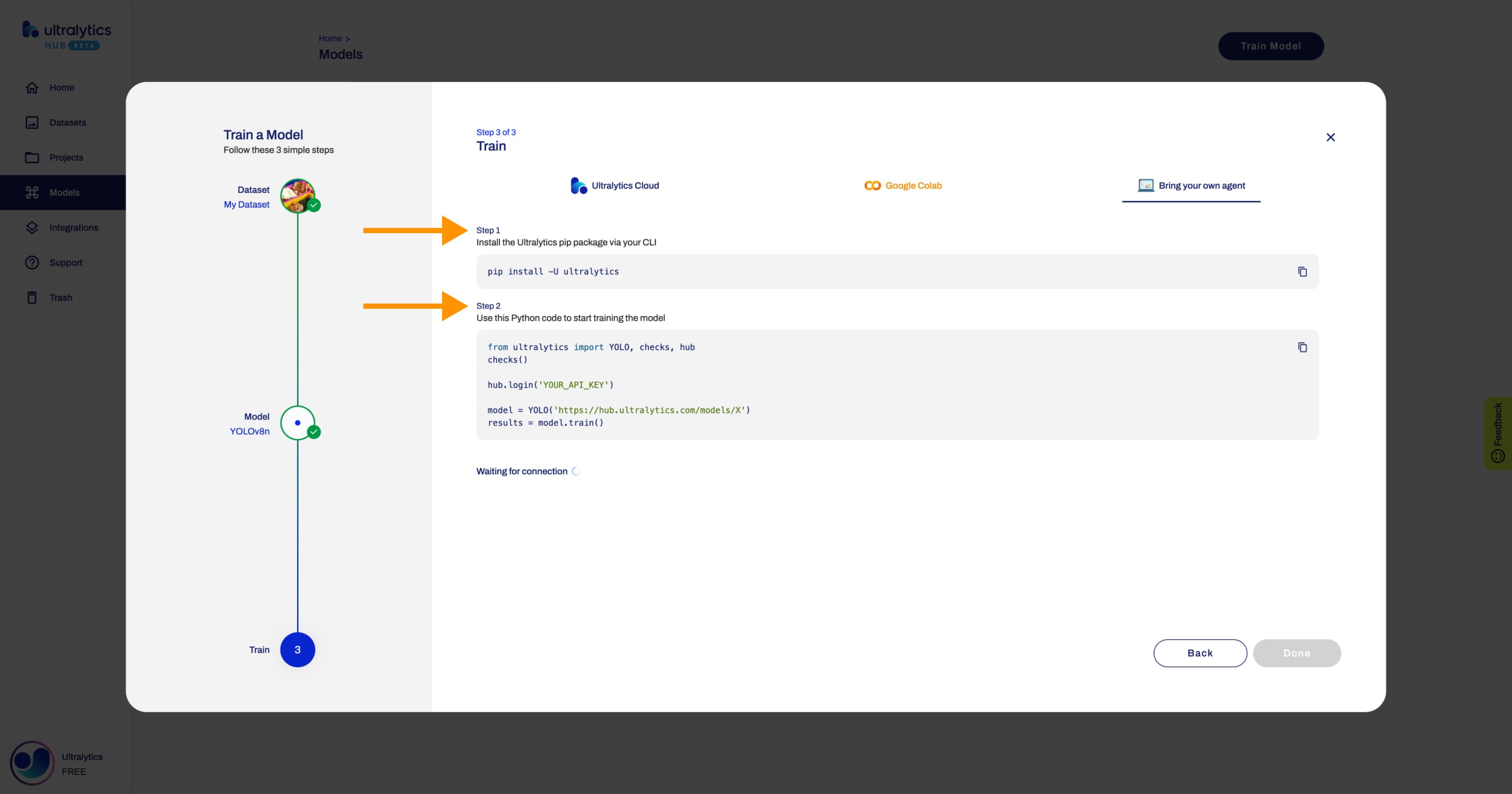

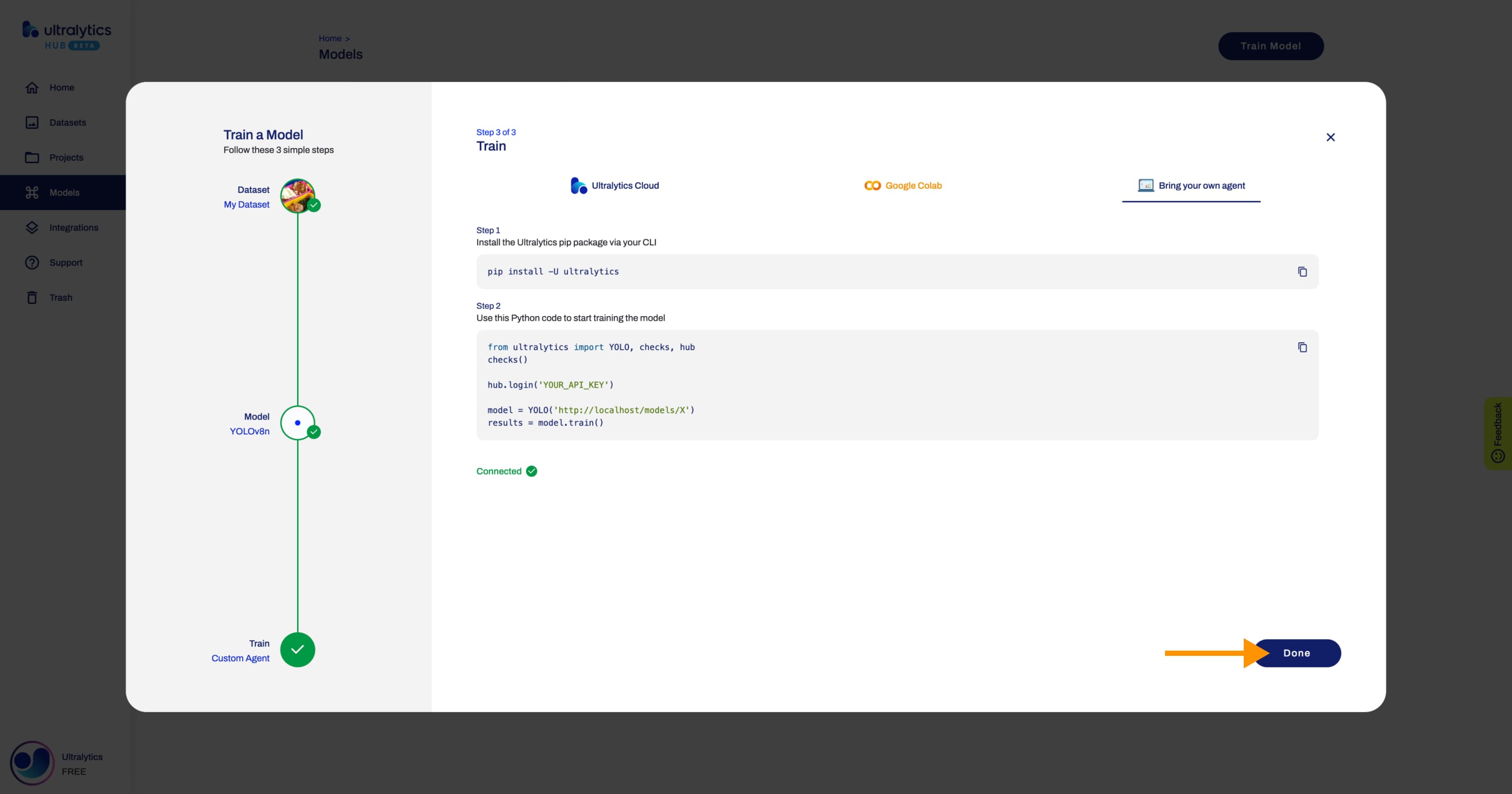

+### 3. Train

+

+In this step, you will start training your model.

+

+Ultralytics HUB offers three training options:

+

+- Ultralytics Cloud **(COMING SOON)**

+- Google Colab

+- Bring your own agent

+

+In order to start training your model, follow the instructions presented in this step.

+

+

+

+??? note "Note"

+

+ When you are on this step, before the training starts, you can change the default training configuration by opening the **Advanced Options** accordion.

+

+

+

+??? note "Note"

+

+ When you are on this step, you have the option to close the **Train Model** dialog and start training your model from the Model page later.

+

+

+

+To start training your model using Google Colab, simply follow the instructions shown above or on the Google Colab notebook.

- +

+  +

+

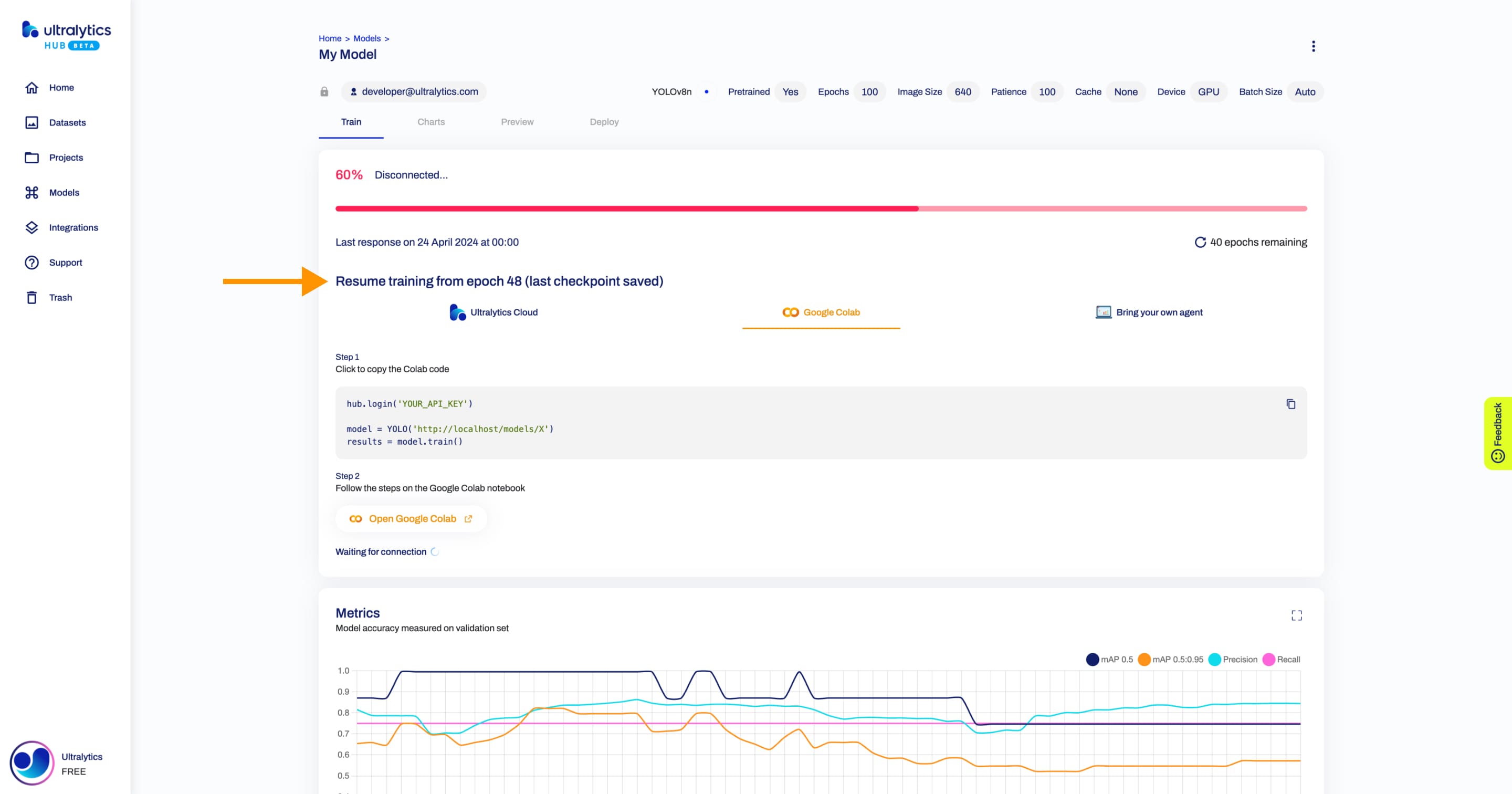

+When the training starts, you can click **Done** and monitor the training progress on the Model page.

+

+

+

+

+

+??? note "Note"

+

+ If training stops and a checkpoint was saved, you can resume training your model from the Model page.

+

+

+

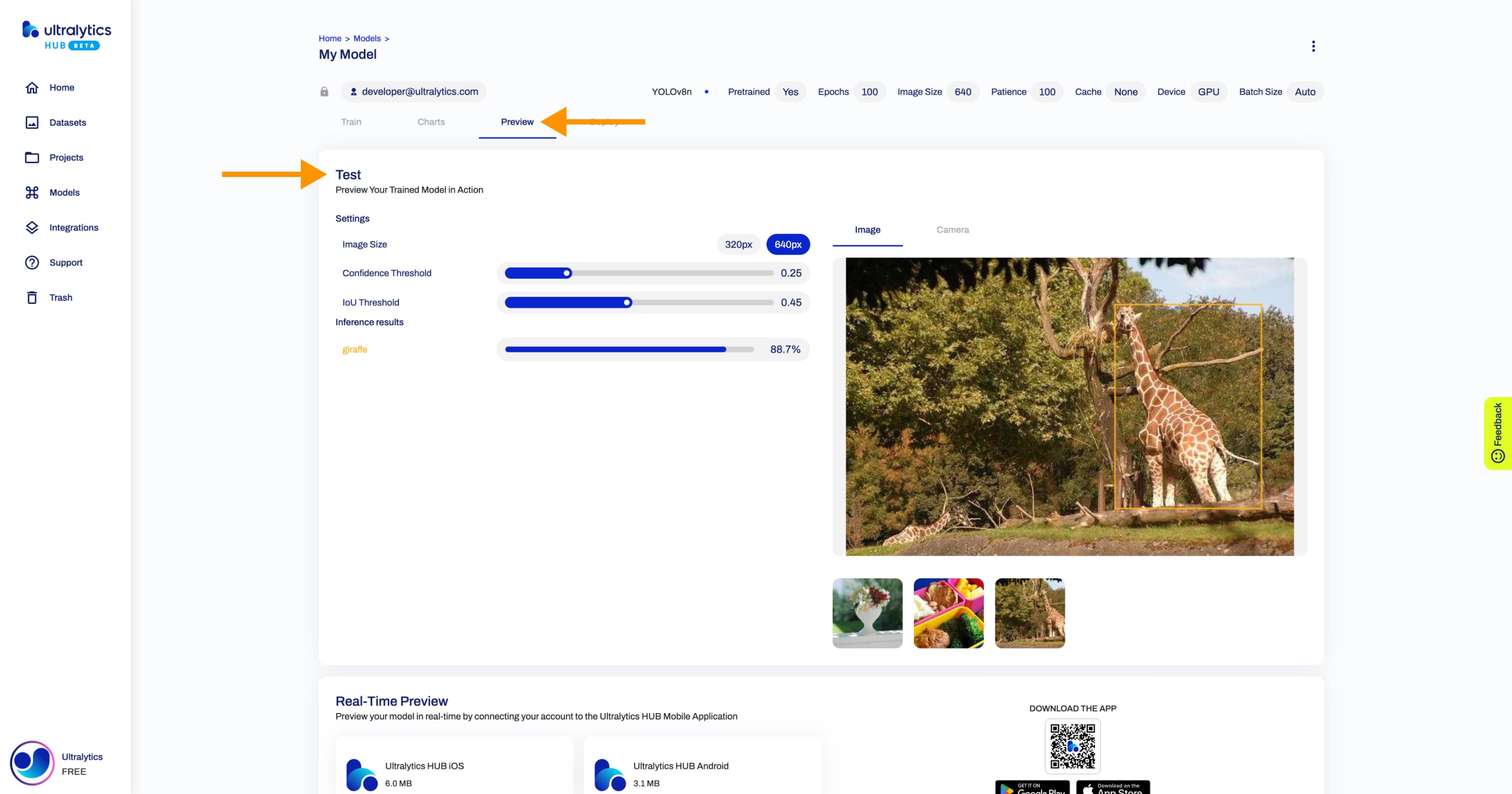

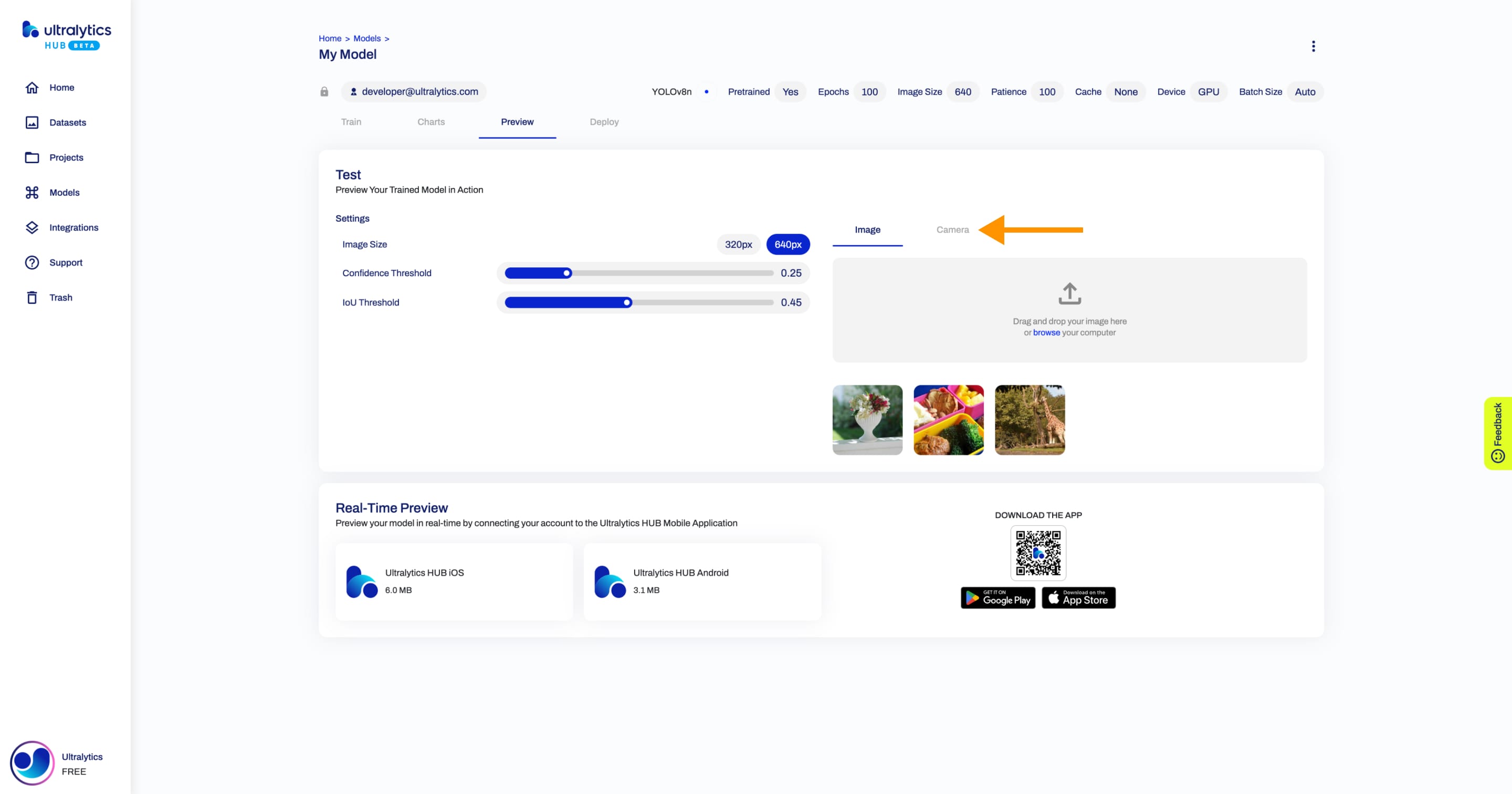

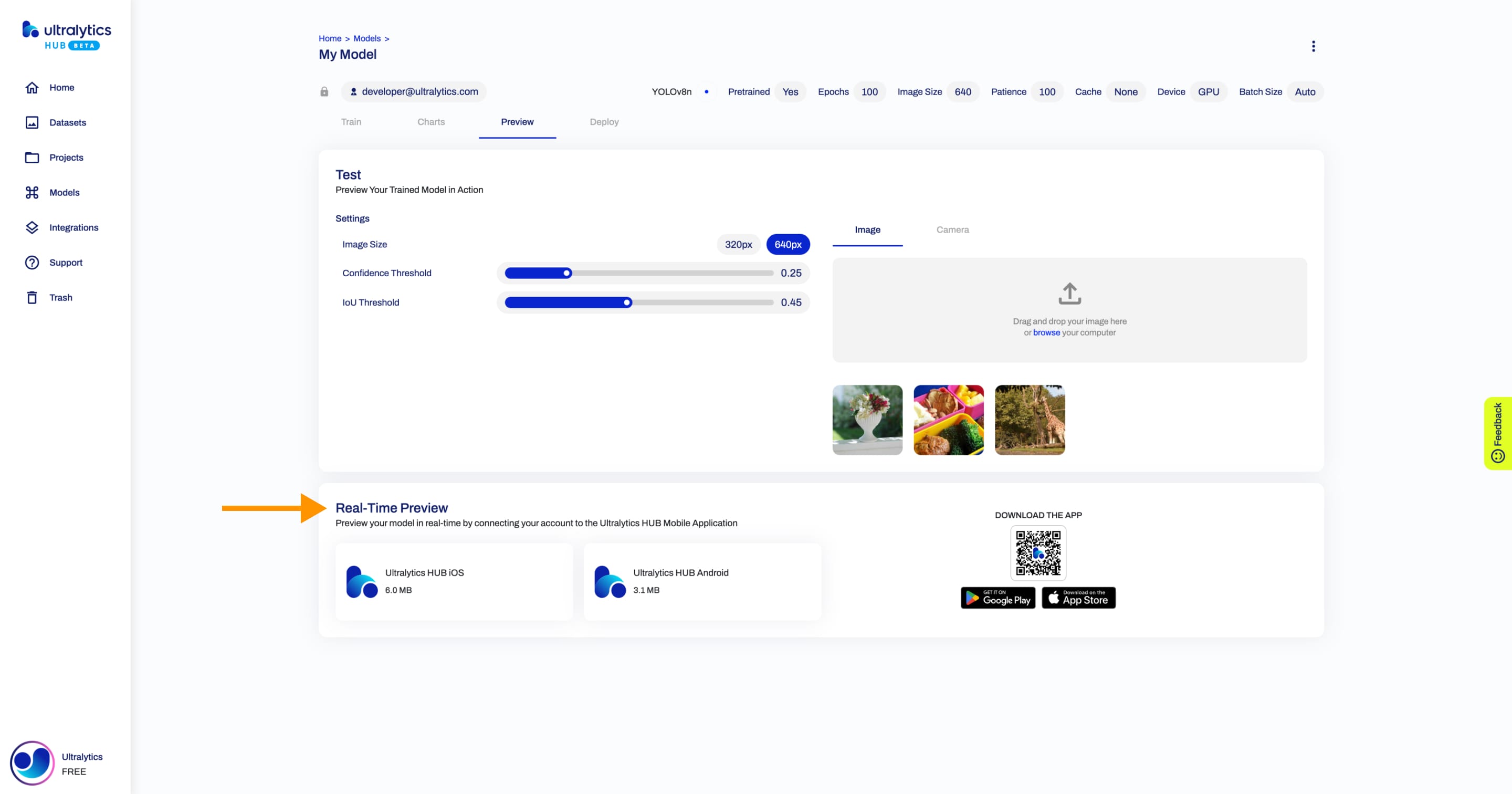

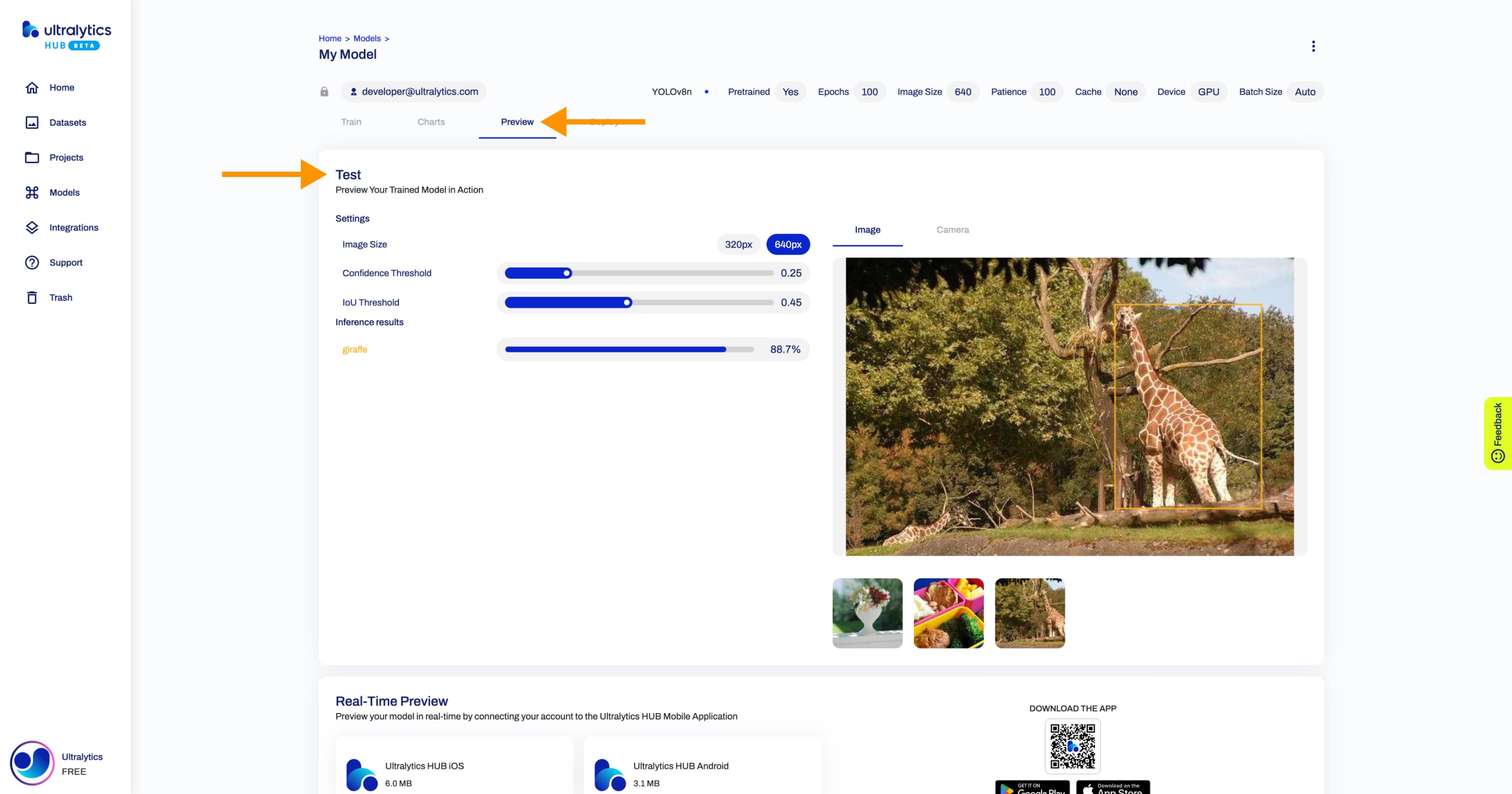

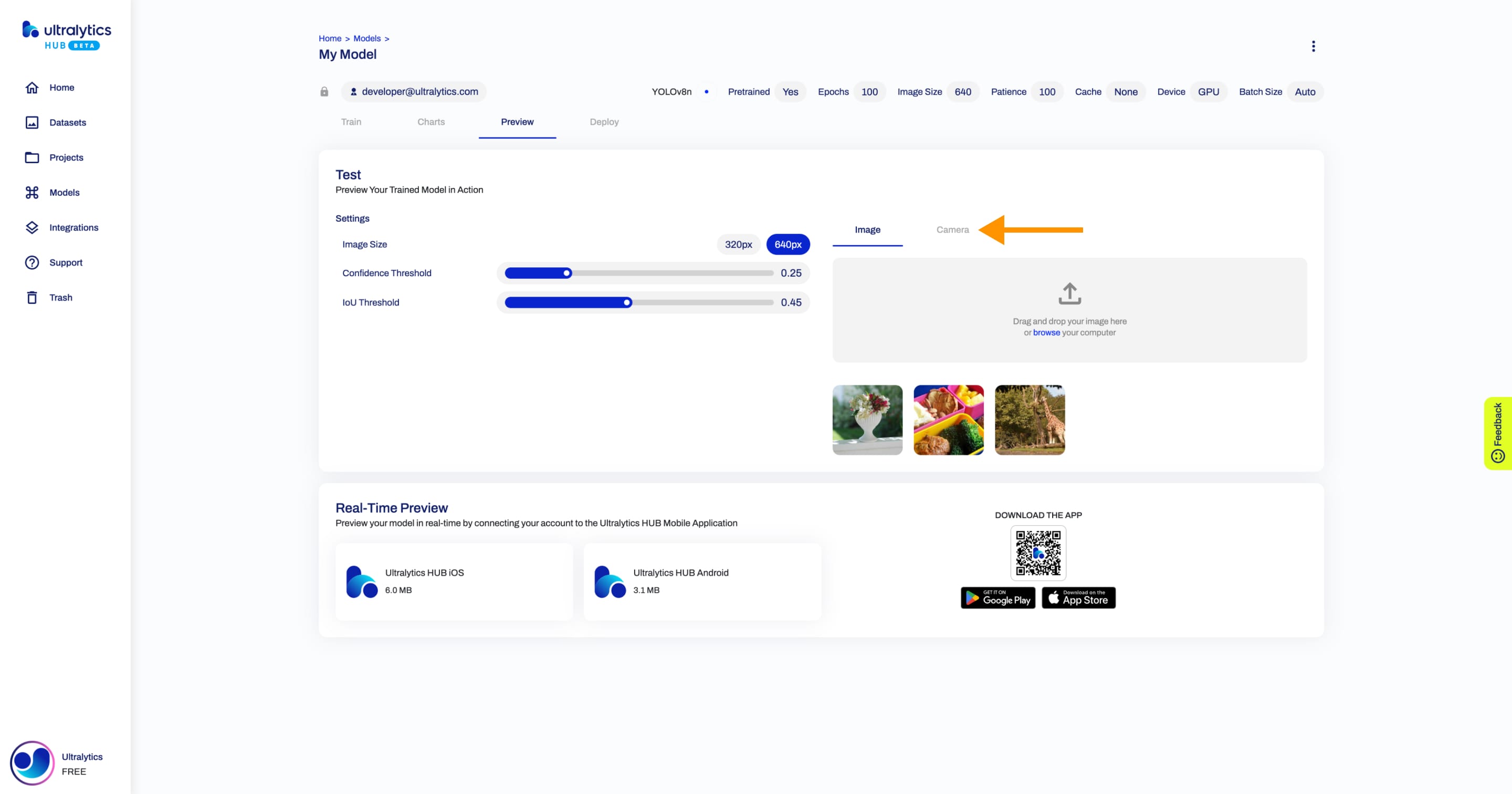

+## Preview Model

+

+Ultralytics HUB offers a variety of ways to preview your trained model.

+

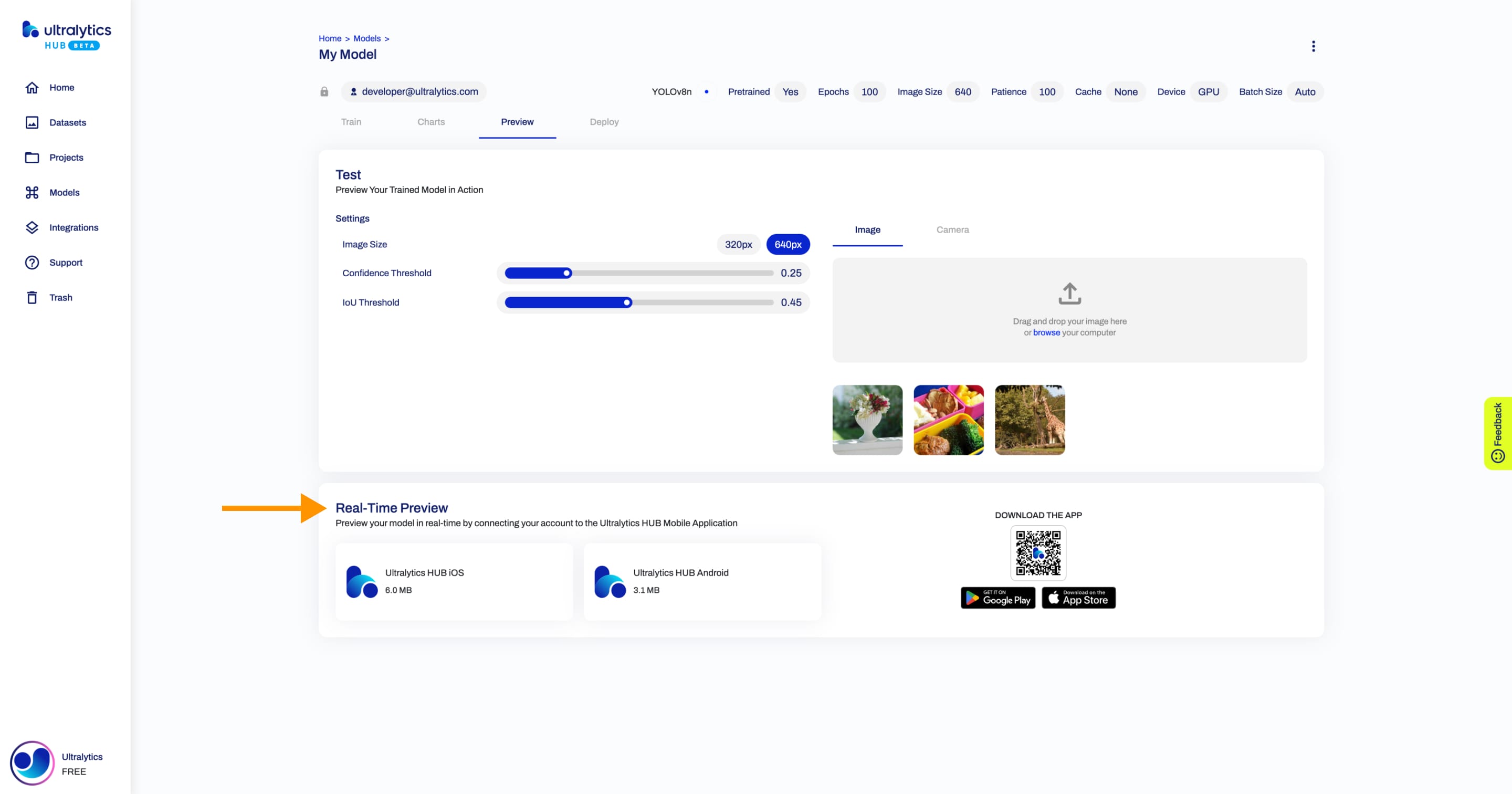

+To preview your model, click the **Preview** tab and upload an image in the **Test** card.

+

+

+

+You can also use our Ultralytics Cloud API to effortlessly [run inference](https://docs.ultralytics.com/hub/inference_api) with your custom model.

+

+

+

+Furthermore, you can preview your model in real-time directly on your [iOS](https://apps.apple.com/xk/app/ultralytics/id1583935240) or [Android](https://play.google.com/store/apps/details?id=com.ultralytics.ultralytics_app) mobile device by [downloading](https://ultralytics.com/app_install) our [Ultralytics HUB Mobile Application](./app/index.md).

+

+

+

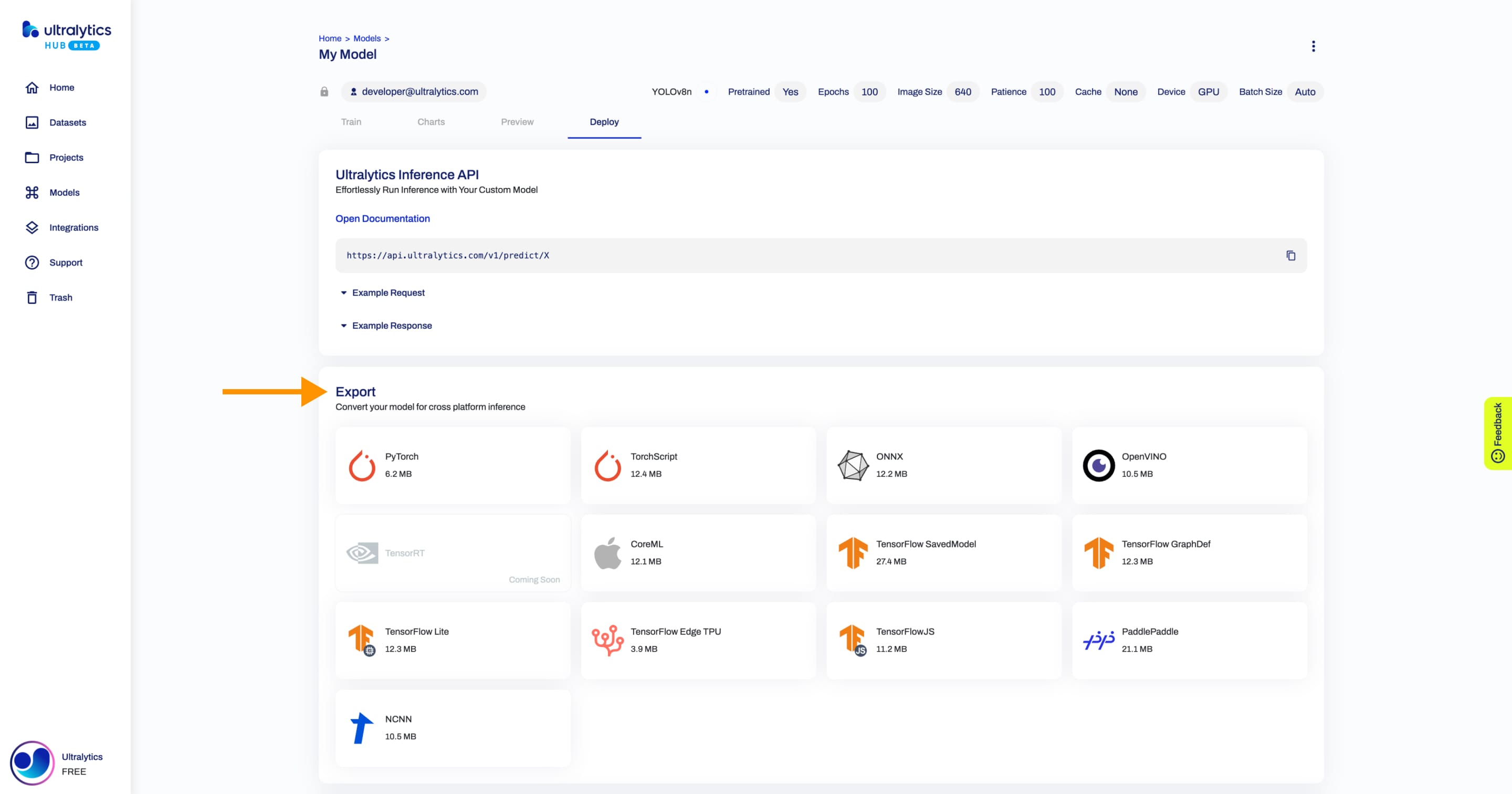

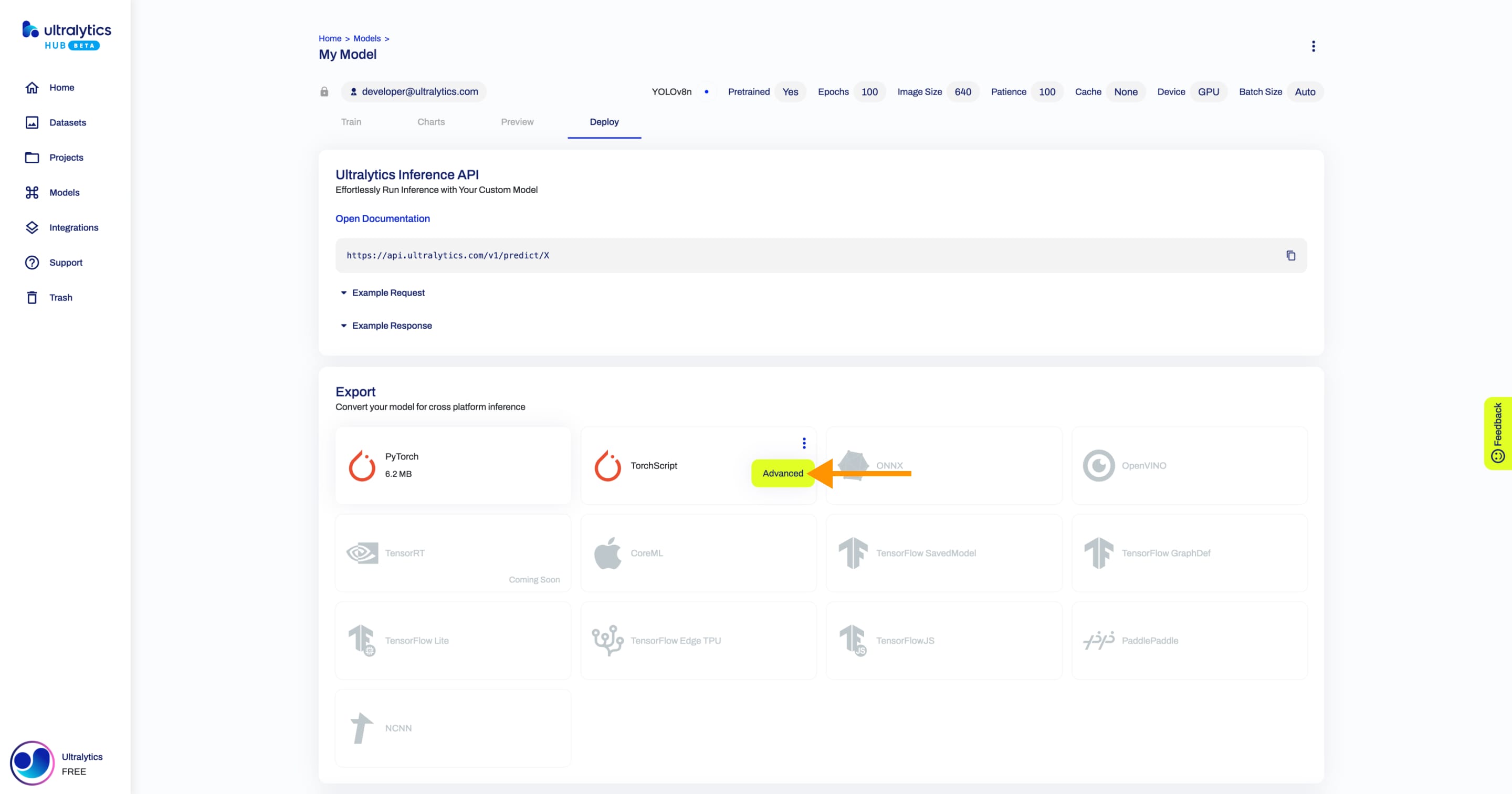

+## Deploy Model

+

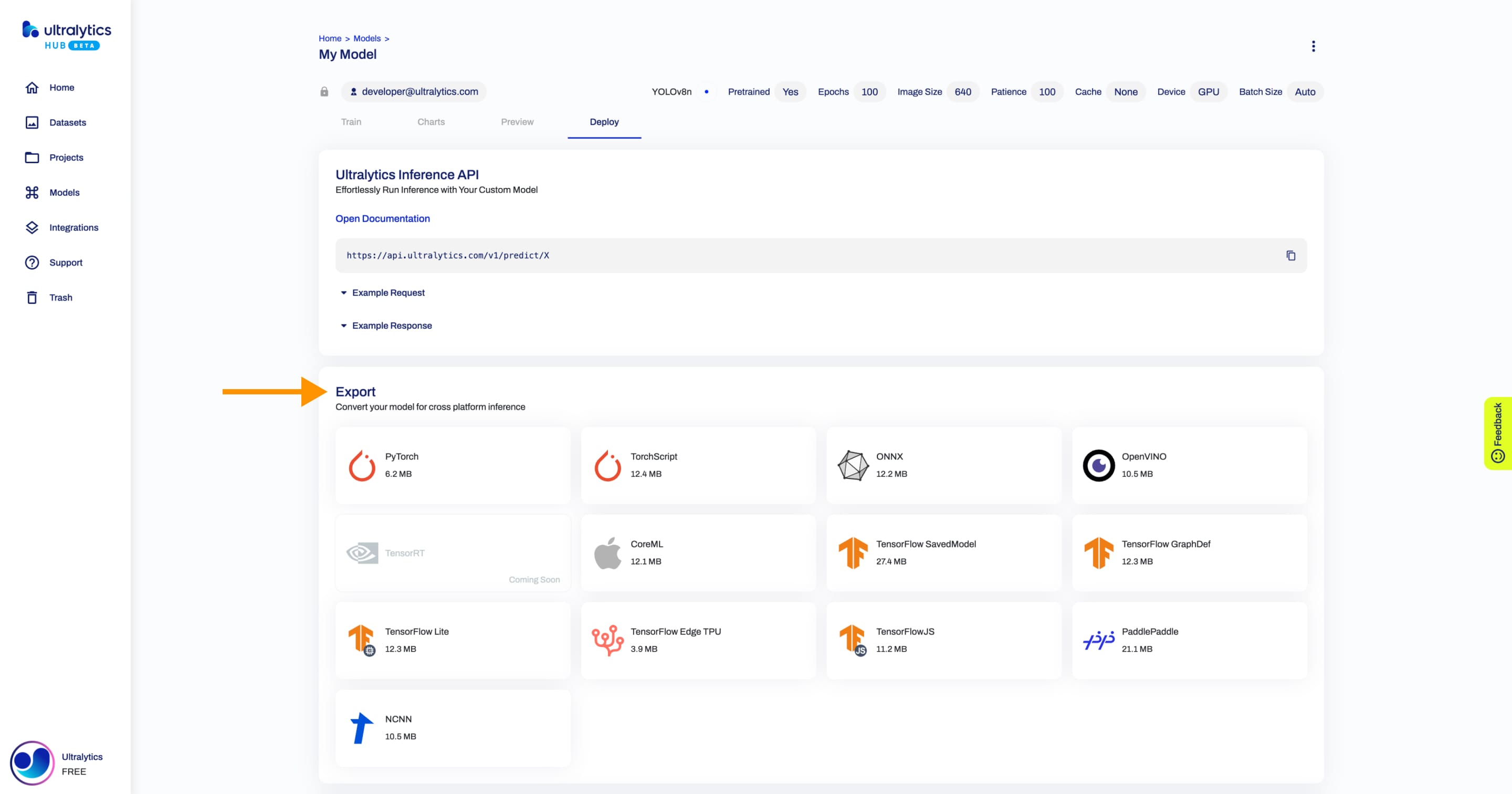

+You can export your model to 13 different formats, including ONNX, OpenVINO, CoreML, TensorFlow, Paddle and many others.

+

+

+

+??? tip "Tip"

+

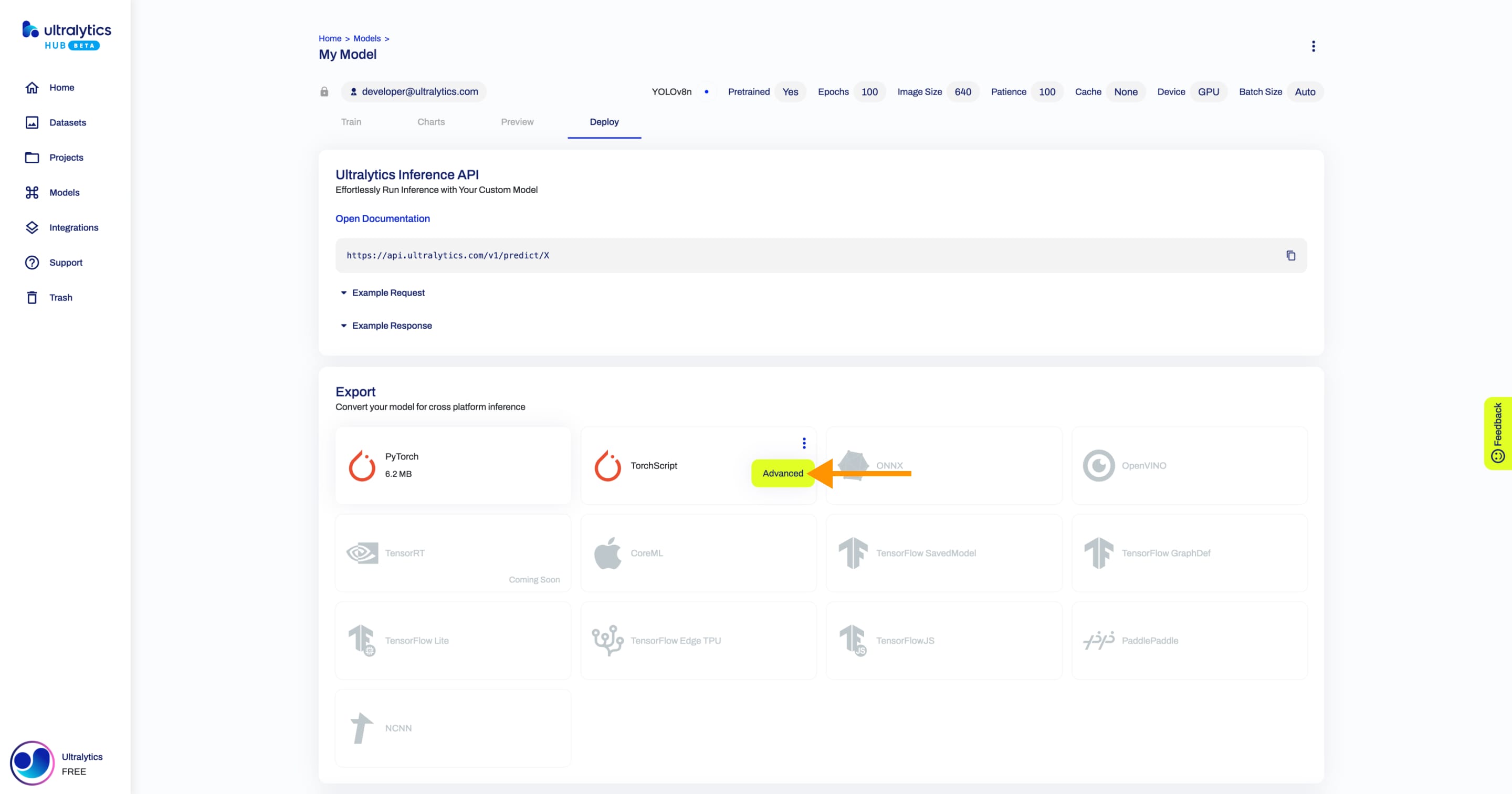

+ You can customize the export options of each format if you open the export actions dropdown and click on the **Advanced** option.

+

+

+

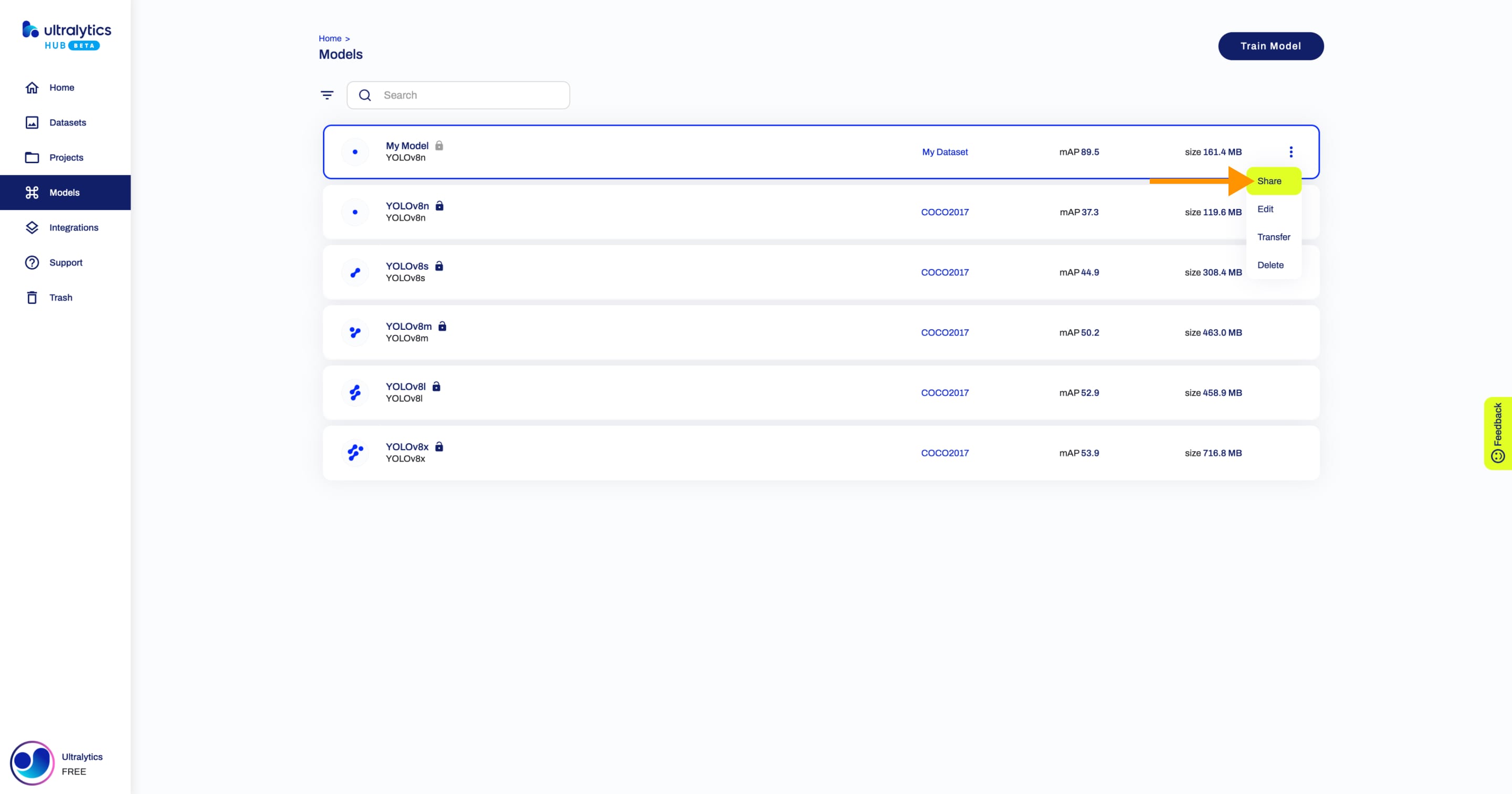

+## Share Model

+

+!!! info "Info"

+

+ Ultralytics HUB's sharing functionality provides a convenient way to share models with others. This feature is designed to accommodate both existing Ultralytics HUB users and those who have yet to create an account.

+

+??? note "Note"

+

+ You have control over the general access of your models.

+

+ You can choose to set the general access to "Private", in which case, only you will have access to it. Alternatively, you can set the general access to "Unlisted" which grants viewing access to anyone who has the direct link to the model, regardless of whether they have an Ultralytics HUB account or not.
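The two general-access levels described in the note behave like a simple permission check. The function below is a hypothetical sketch (`can_view` is not an Ultralytics HUB API) that encodes only the rules stated here: the owner always has access, "Private" models are visible to nobody else, and "Unlisted" models are visible to anyone who has the direct link, with or without an account.

```python
def can_view(access: str, is_owner: bool, has_direct_link: bool) -> bool:
    """Hypothetical check mirroring the documented Private/Unlisted rules."""
    if is_owner:
        # The owner can always see their own model.
        return True
    if access == "Private":
        # Private: only the owner has access.
        return False
    if access == "Unlisted":
        # Unlisted: anyone holding the direct link may view, account or not.
        return has_direct_link
    raise ValueError(f"unknown access level: {access!r}")
```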

+

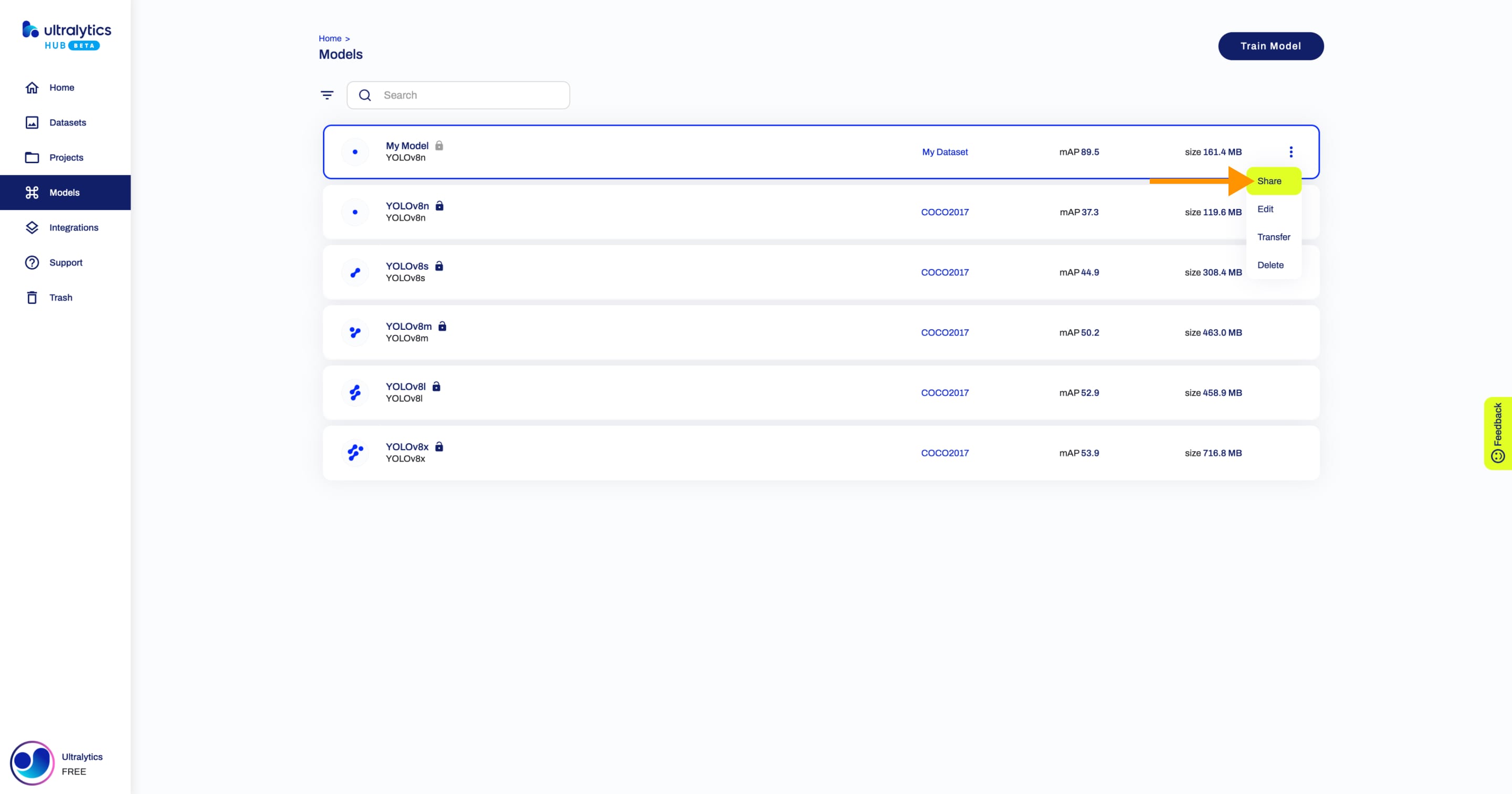

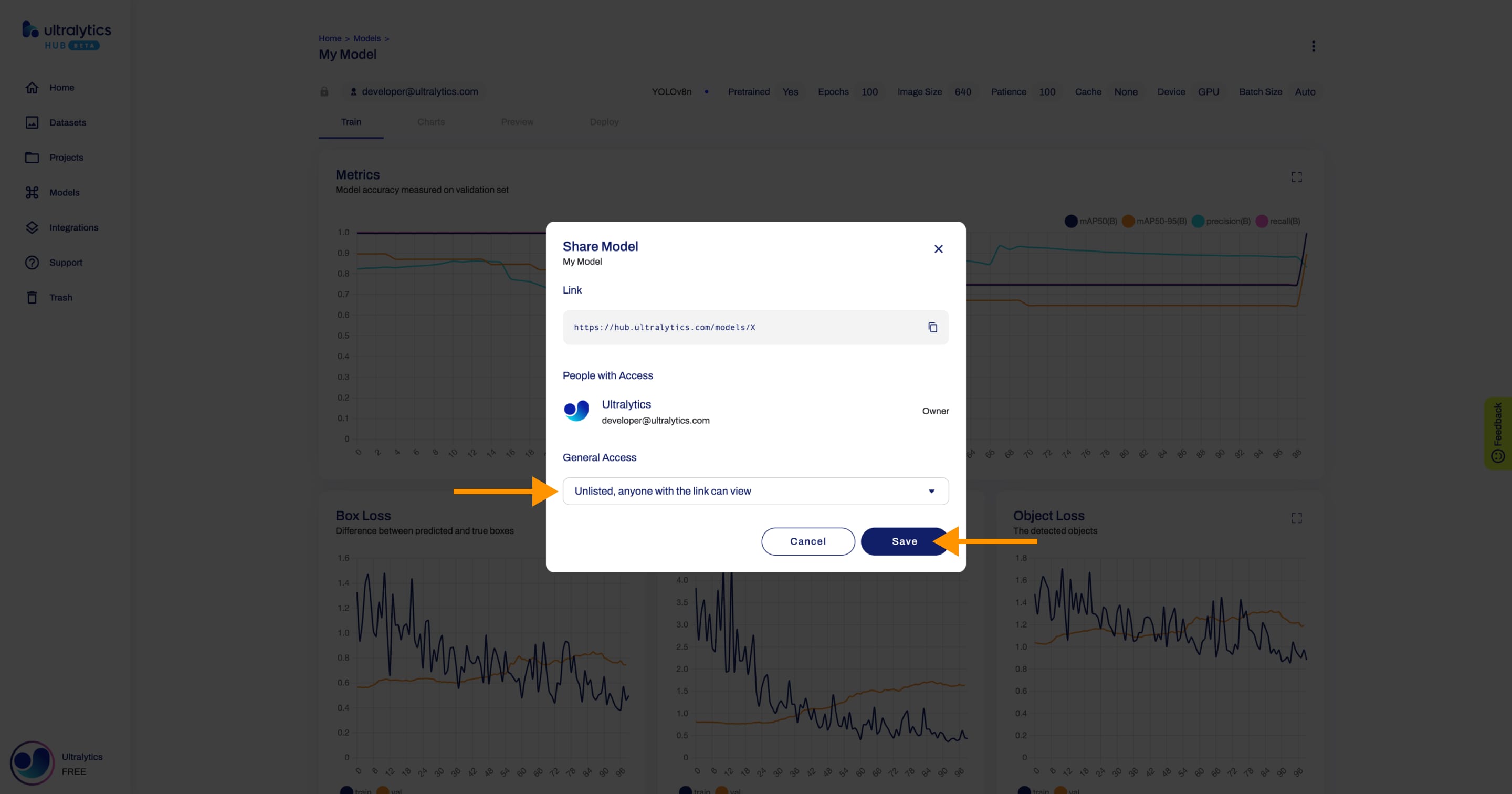

+Navigate to the Model page of the model you want to share, open the model actions dropdown and click on the **Share** option. This action will trigger the **Share Model** dialog.

+

+

+

+??? tip "Tip"

+

+ You can also share a model directly from the [Models](https://hub.ultralytics.com/models) page or from the Project page of the project where your model is located.

+

+

+

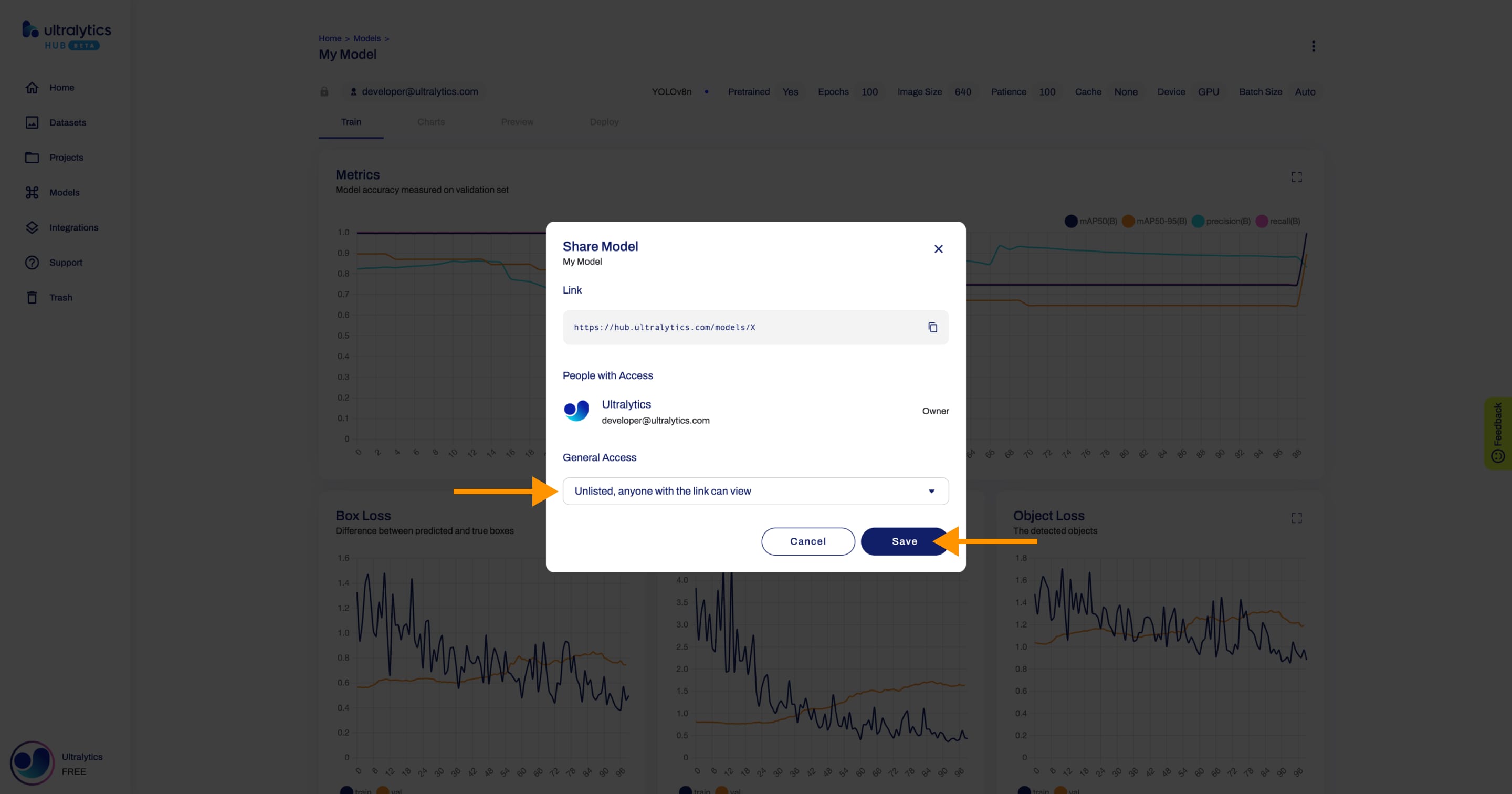

+Set the general access to "Unlisted" and click **Save**.

+

+

+

+Now, anyone who has the direct link to your model can view it.

+

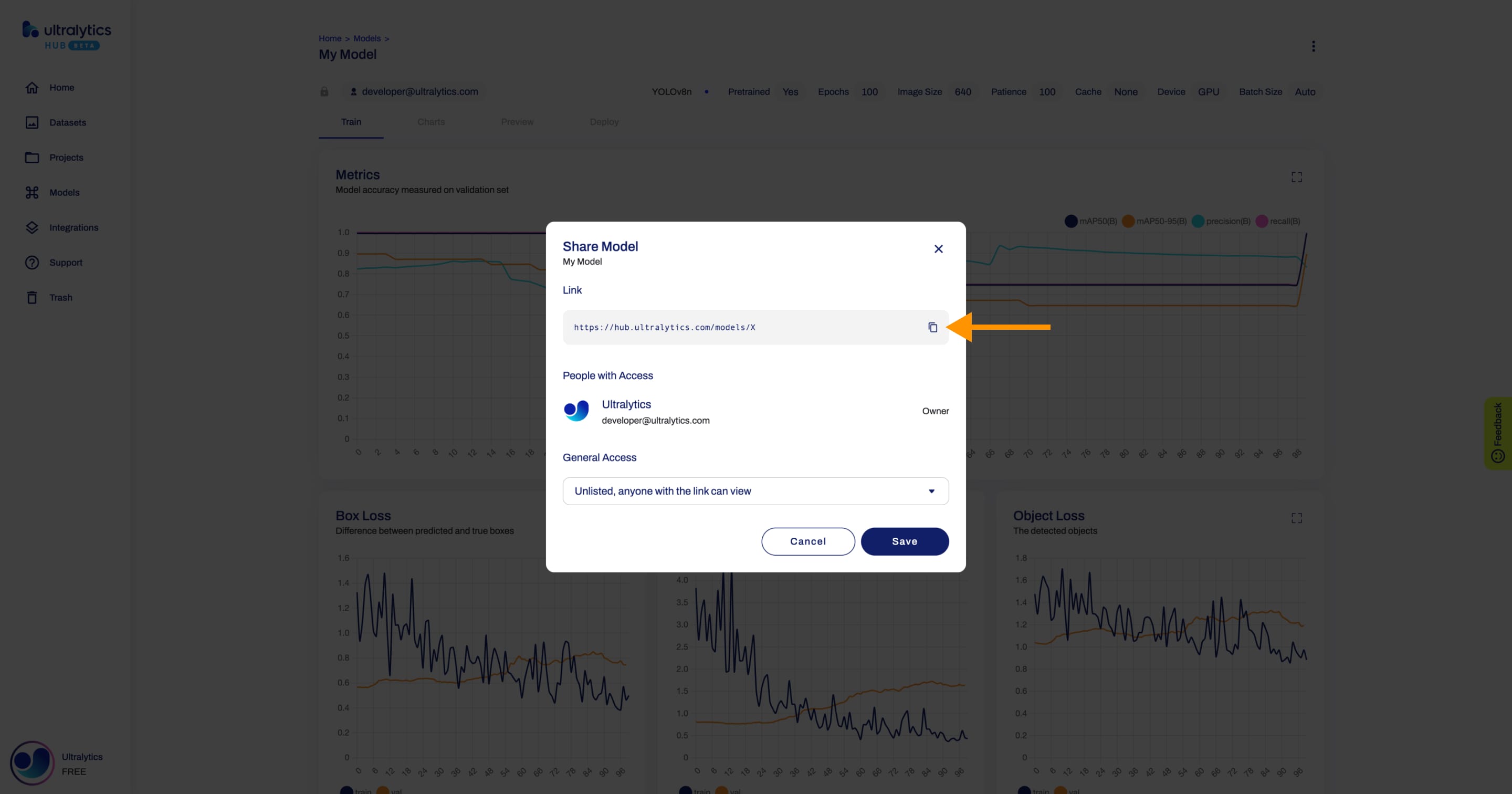

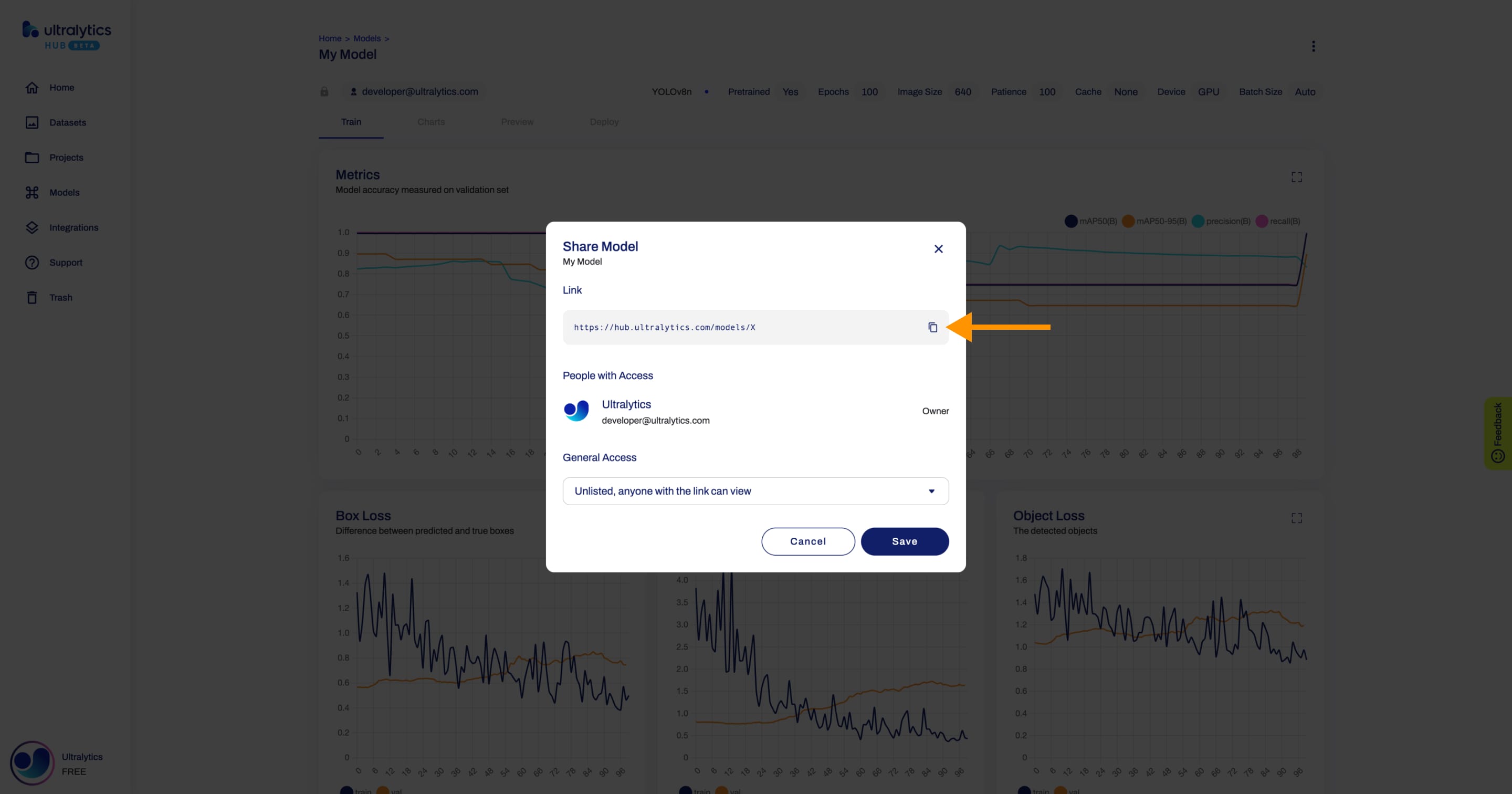

+??? tip "Tip"

+

+ Click the model's link shown in the **Share Model** dialog to copy it.

+

+

+

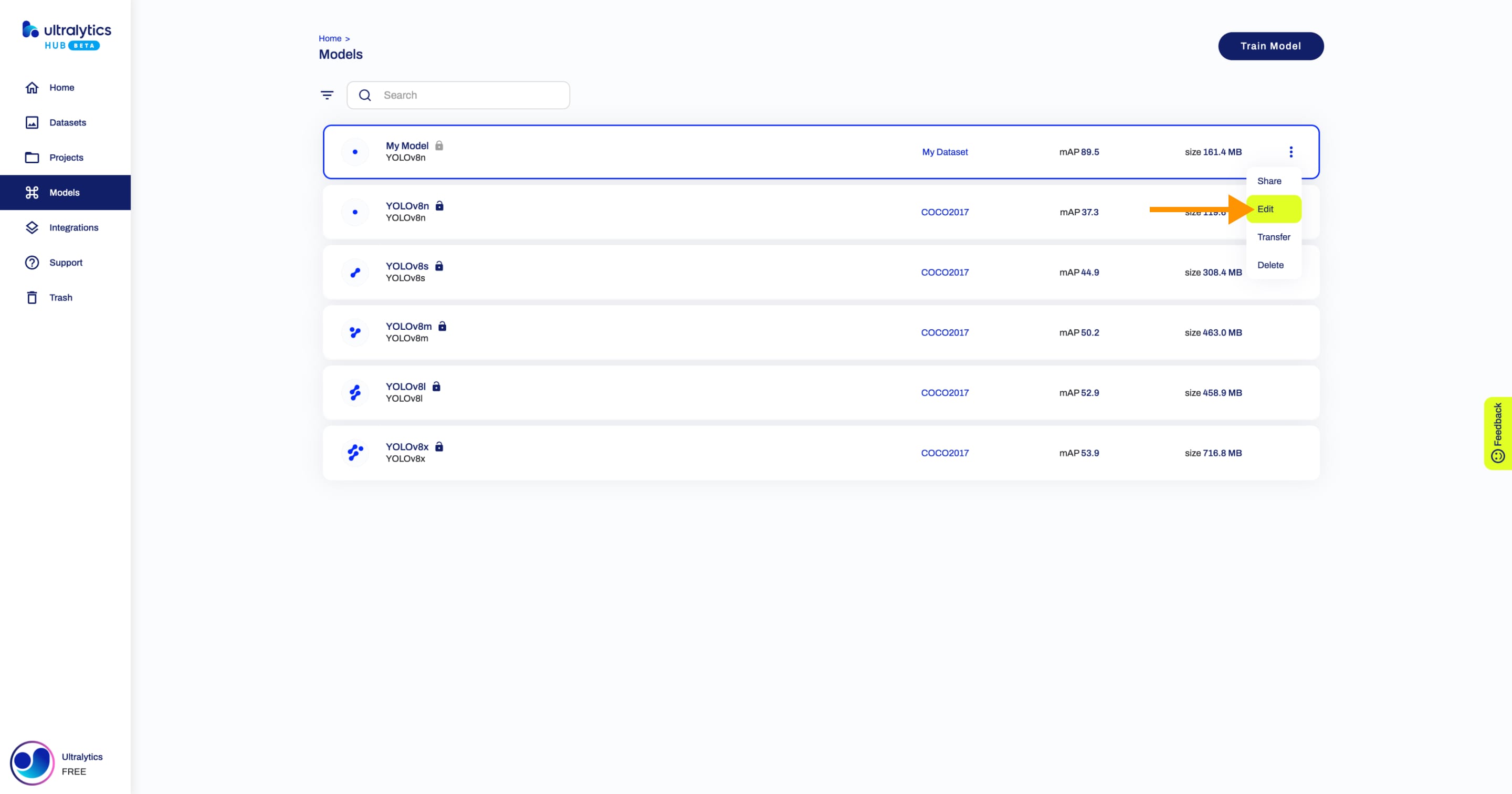

+## Edit Model

+

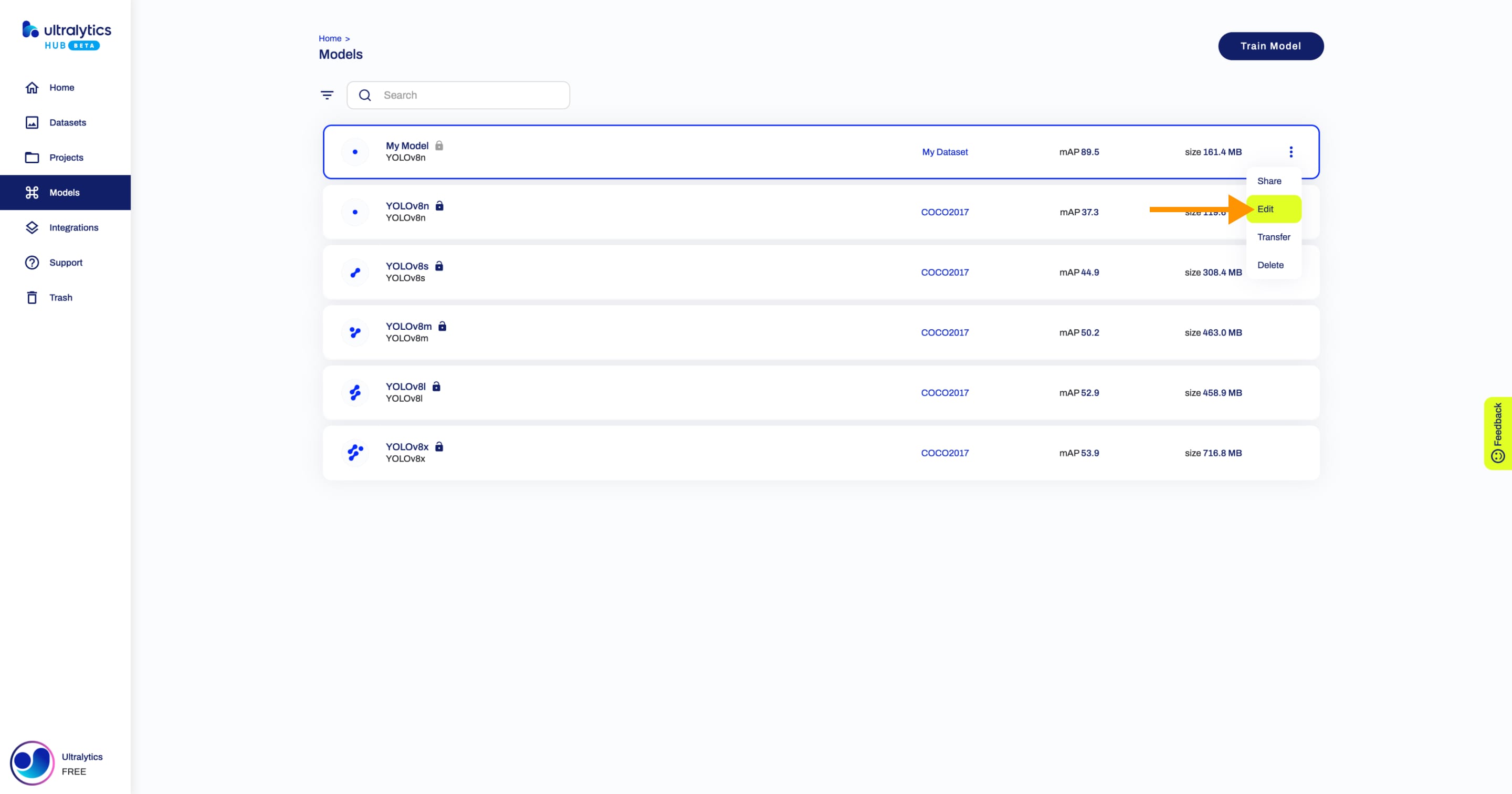

+Navigate to the Model page of the model you want to edit, open the model actions dropdown and click on the **Edit** option. This action will trigger the **Update Model** dialog.

+

+

+

+??? tip "Tip"

+

+ You can also edit a model directly from the [Models](https://hub.ultralytics.com/models) page or from the Project page of the project where your model is located.

+

+

+

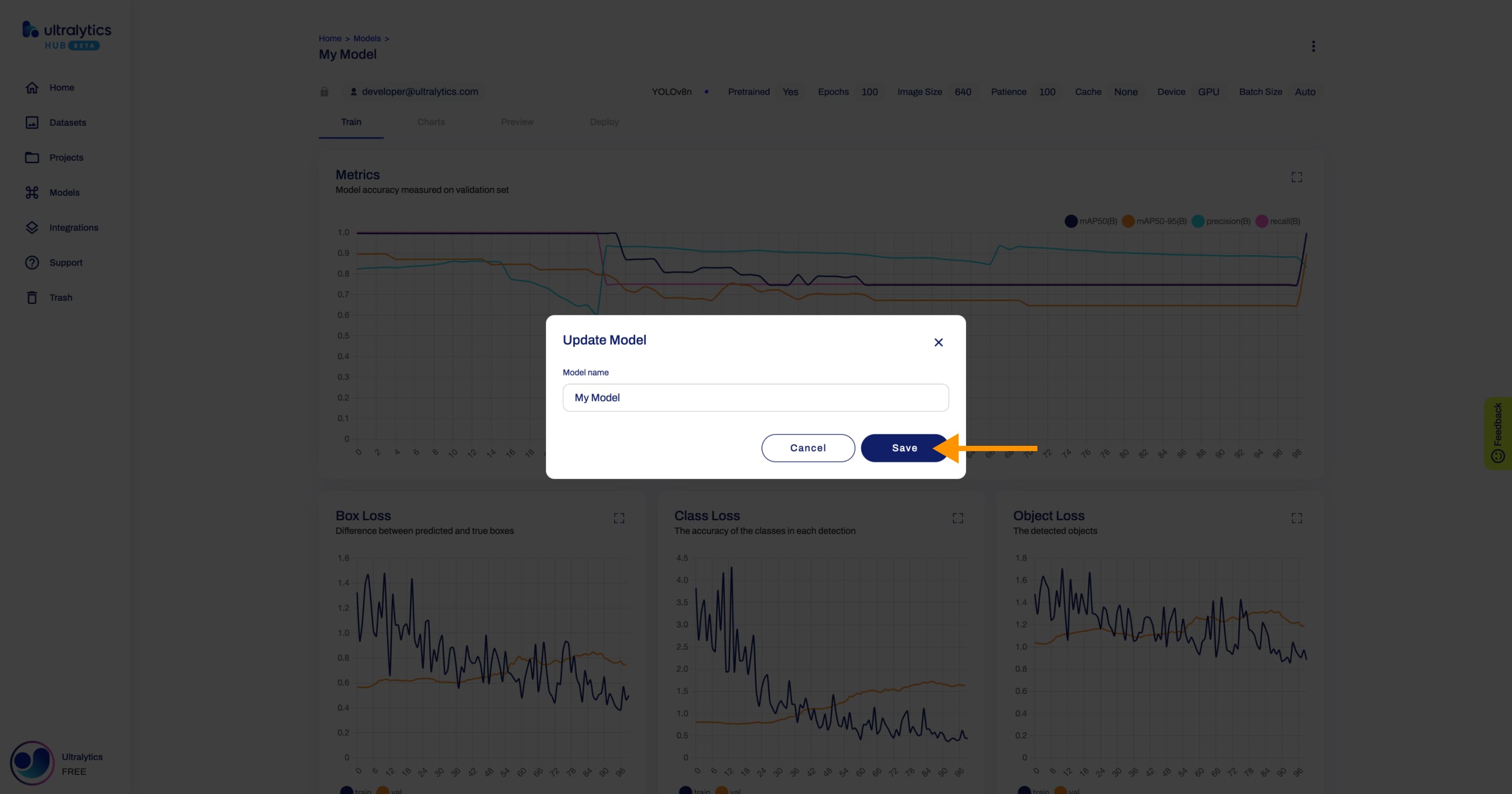

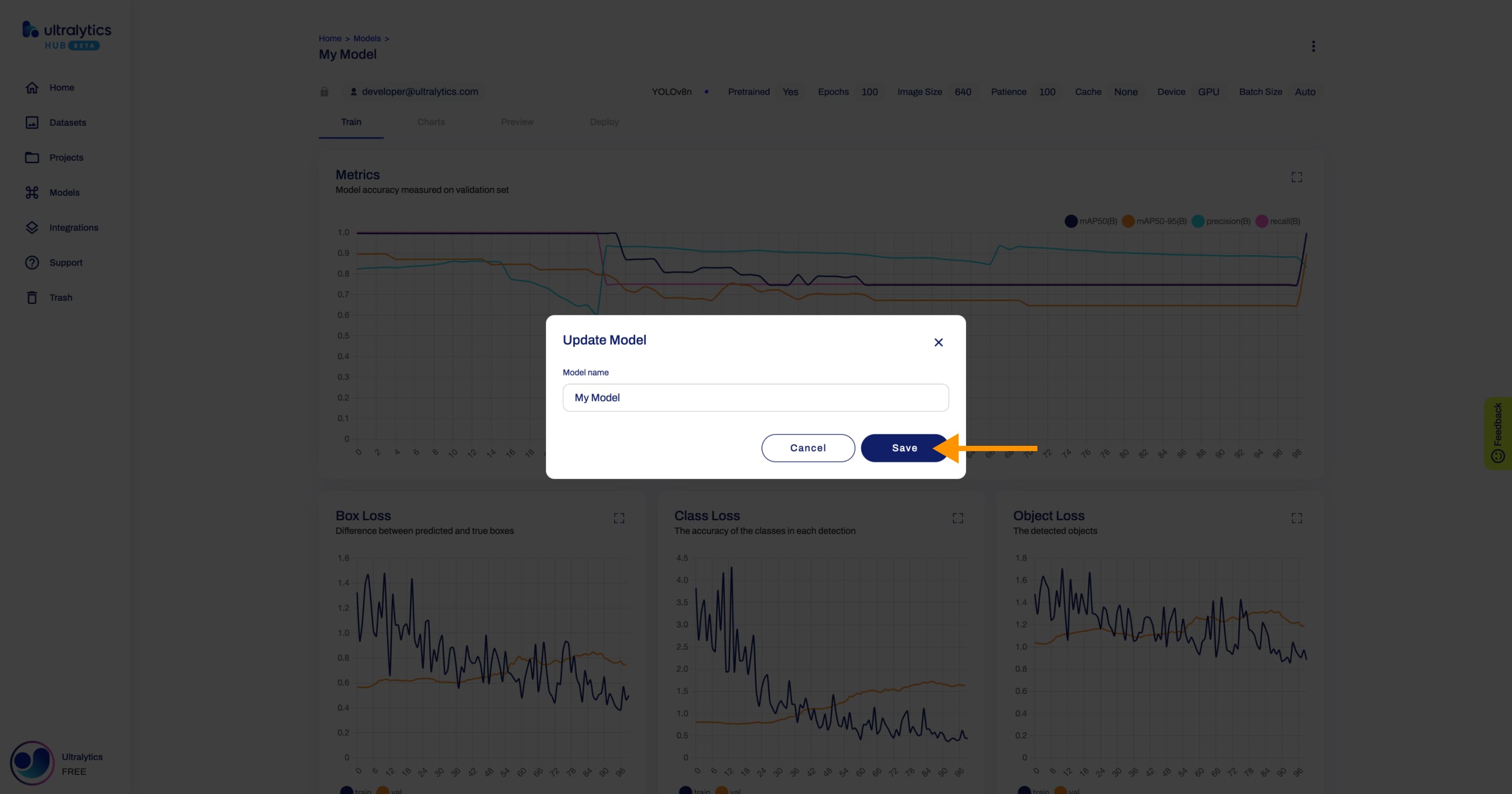

+Apply the desired modifications to your model and then confirm the changes by clicking **Save**.

+

+

+

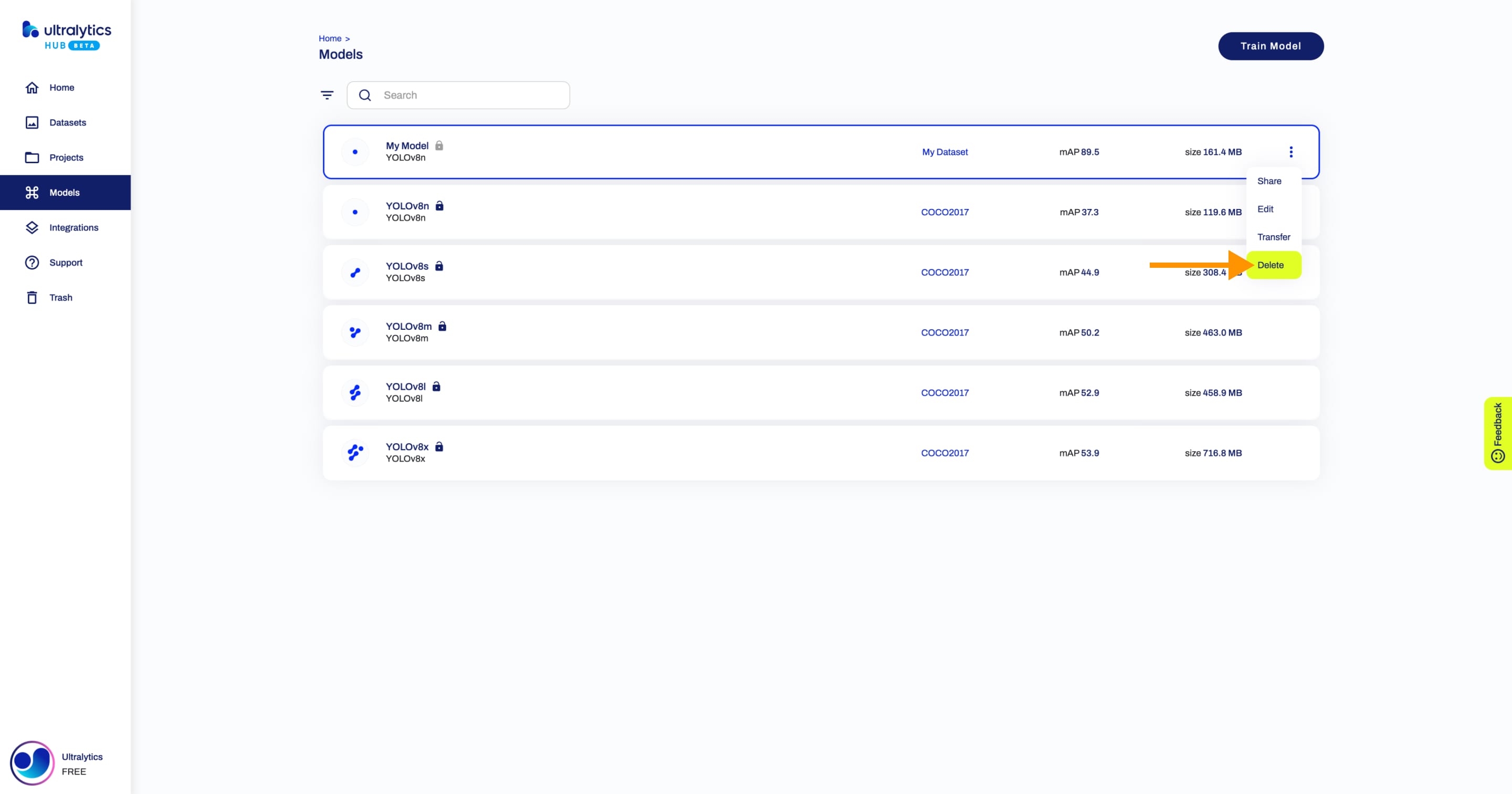

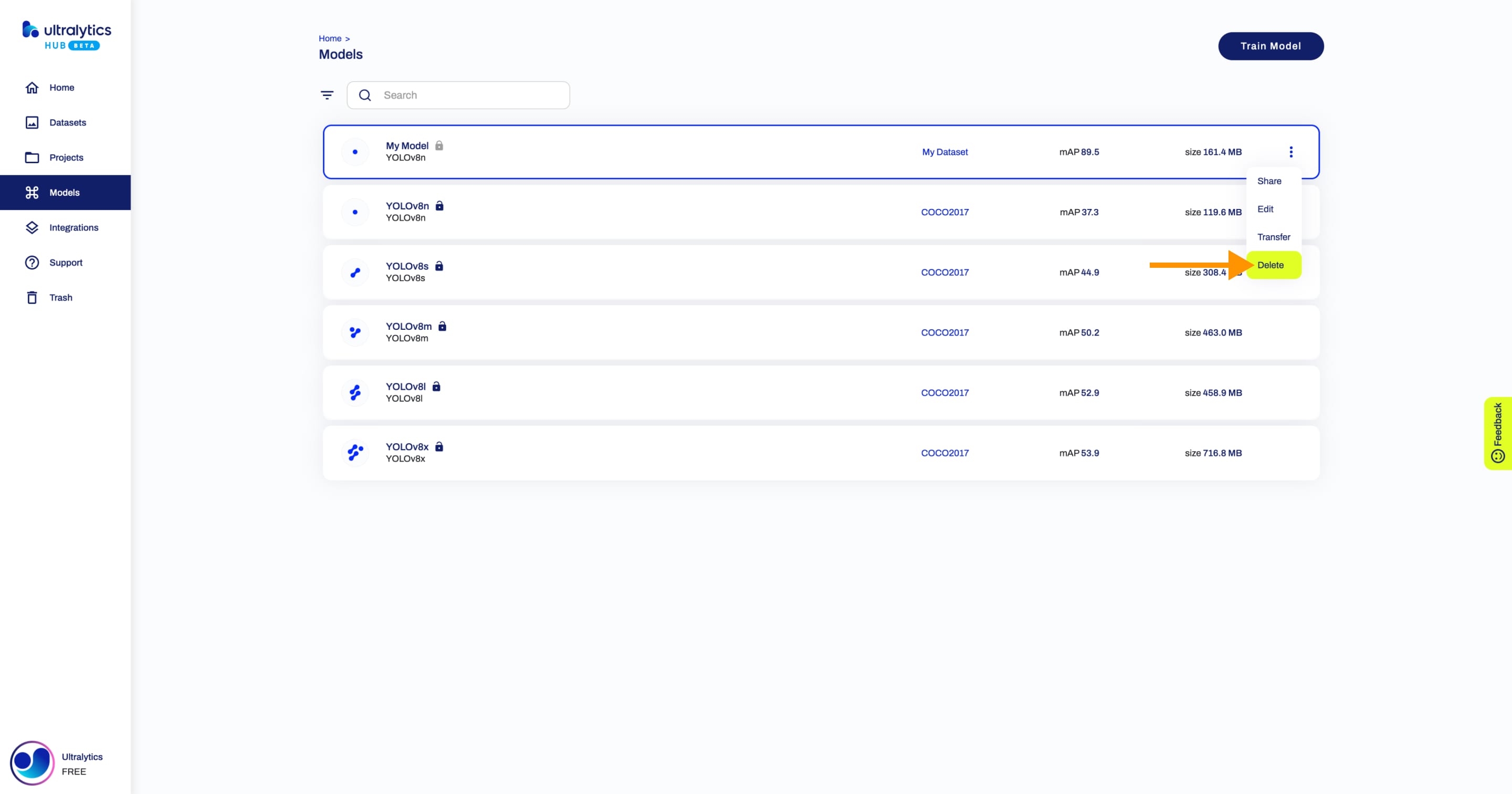

+## Delete Model

+

+Navigate to the Model page of the model you want to delete, open the model actions dropdown and click on the **Delete** option. This action will delete the model.

+

+

+

+??? tip "Tip"

+

+ You can also delete a model directly from the [Models](https://hub.ultralytics.com/models) page or from the Project page of the project where your model is located.

+

+

+

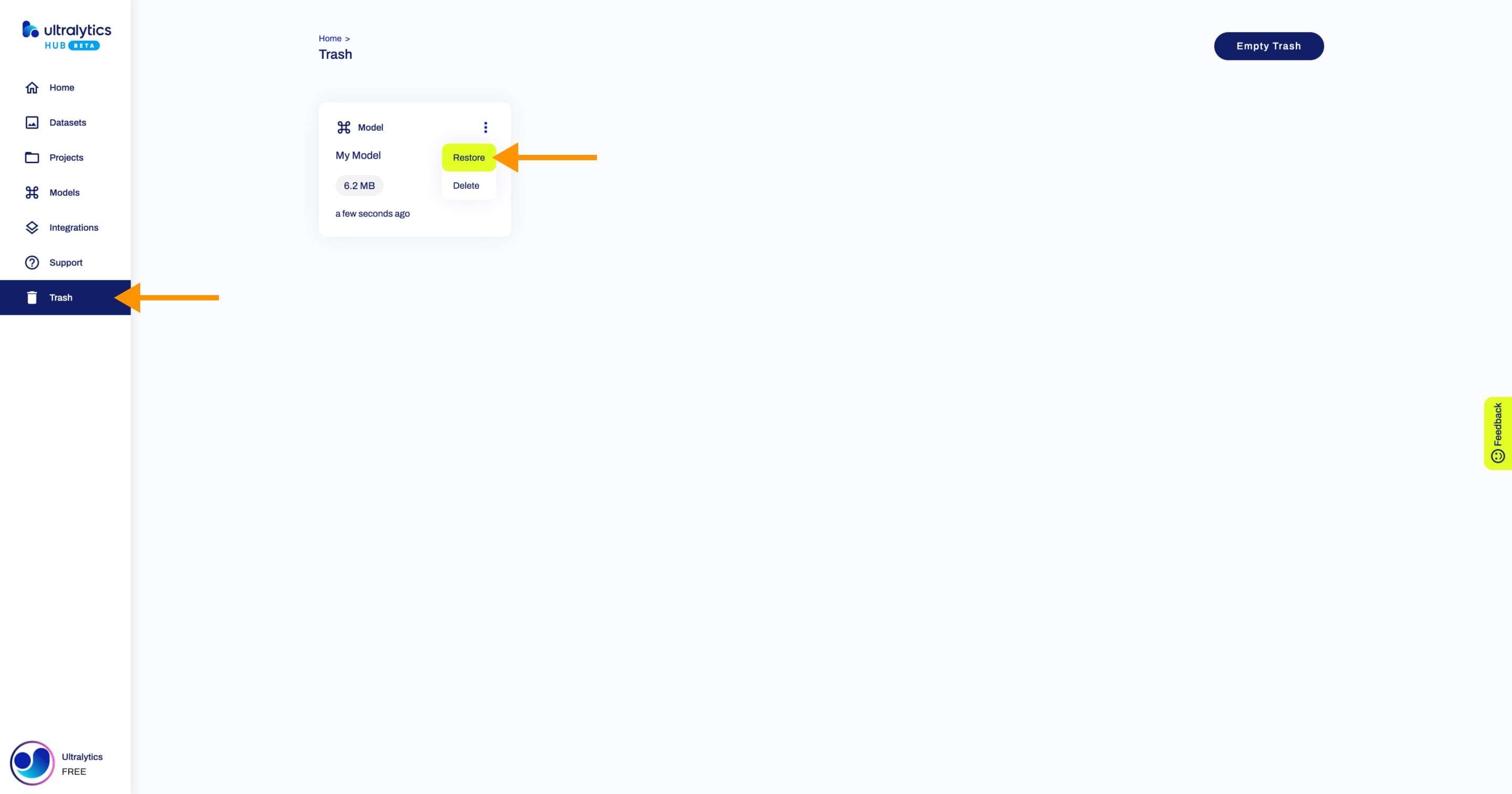

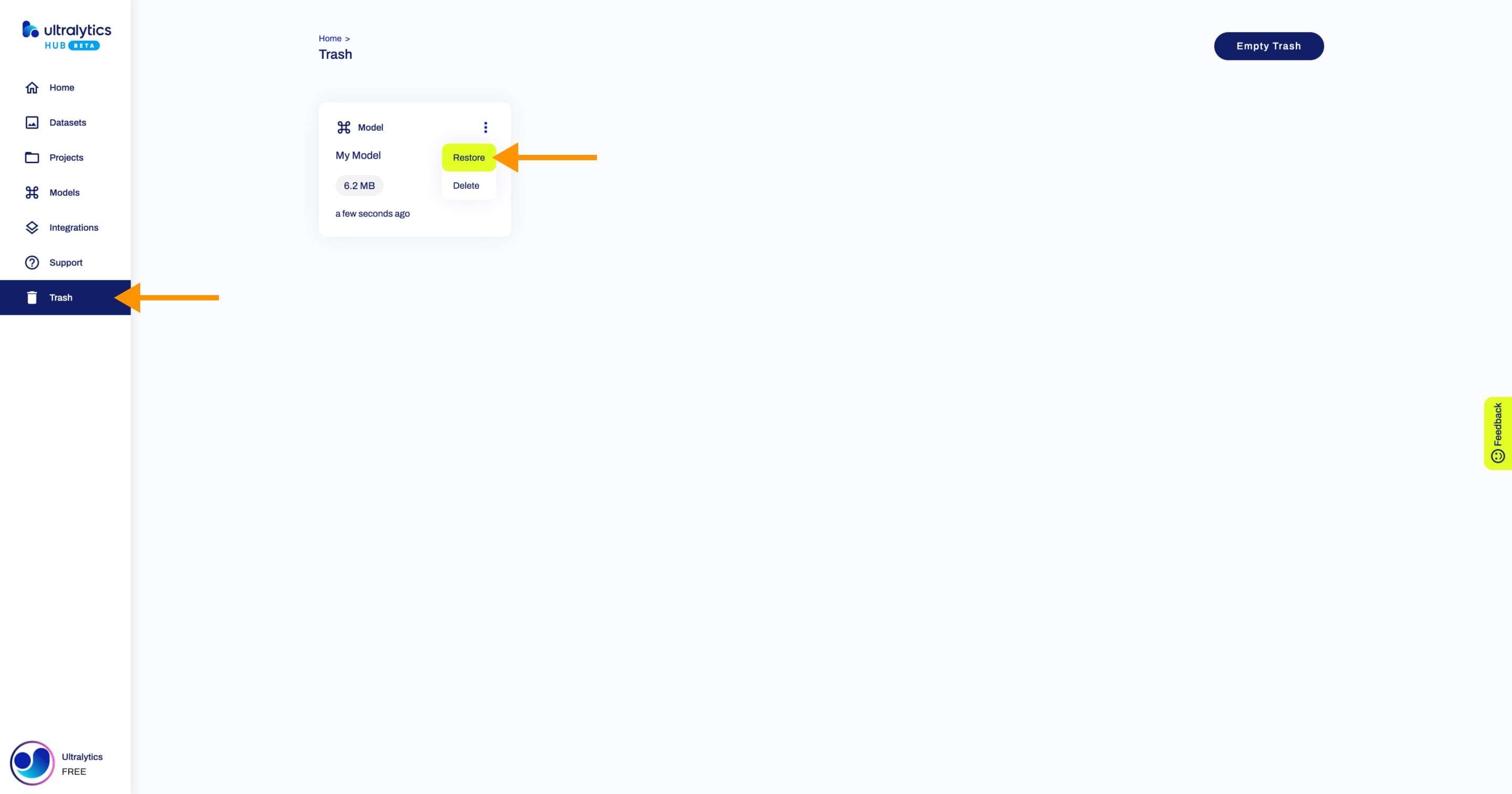

+??? note "Note"

-## Deploy to Real World

+ If you change your mind, you can restore the model from the [Trash](https://hub.ultralytics.com/trash) page.

-Export your model to 13 different formats, including TensorFlow, ONNX, OpenVINO, CoreML, Paddle and many others. Run

-models directly on your [iOS](https://apps.apple.com/xk/app/ultralytics/id1583935240) or

-[Android](https://play.google.com/store/apps/details?id=com.ultralytics.ultralytics_app) mobile device by downloading

-the [Ultralytics App](https://ultralytics.com/app_install)!

\ No newline at end of file

+

diff --git a/docs/hub/projects.md b/docs/hub/projects.md

index 6a006b365..d8e0f8649 100644

--- a/docs/hub/projects.md

+++ b/docs/hub/projects.md

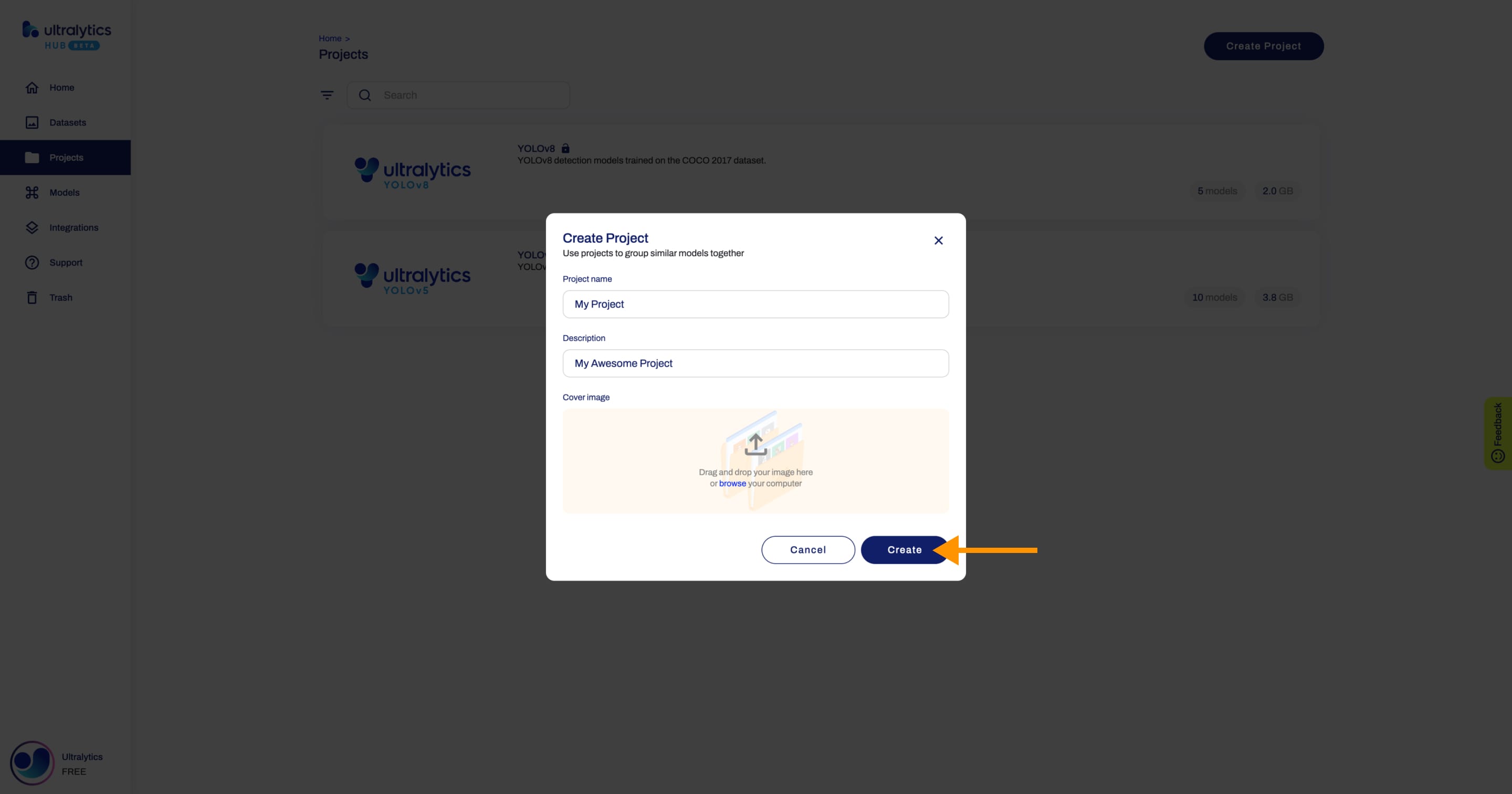

@@ -26,7 +26,7 @@ Click on the **Create Project** button on the top right of the page. This action

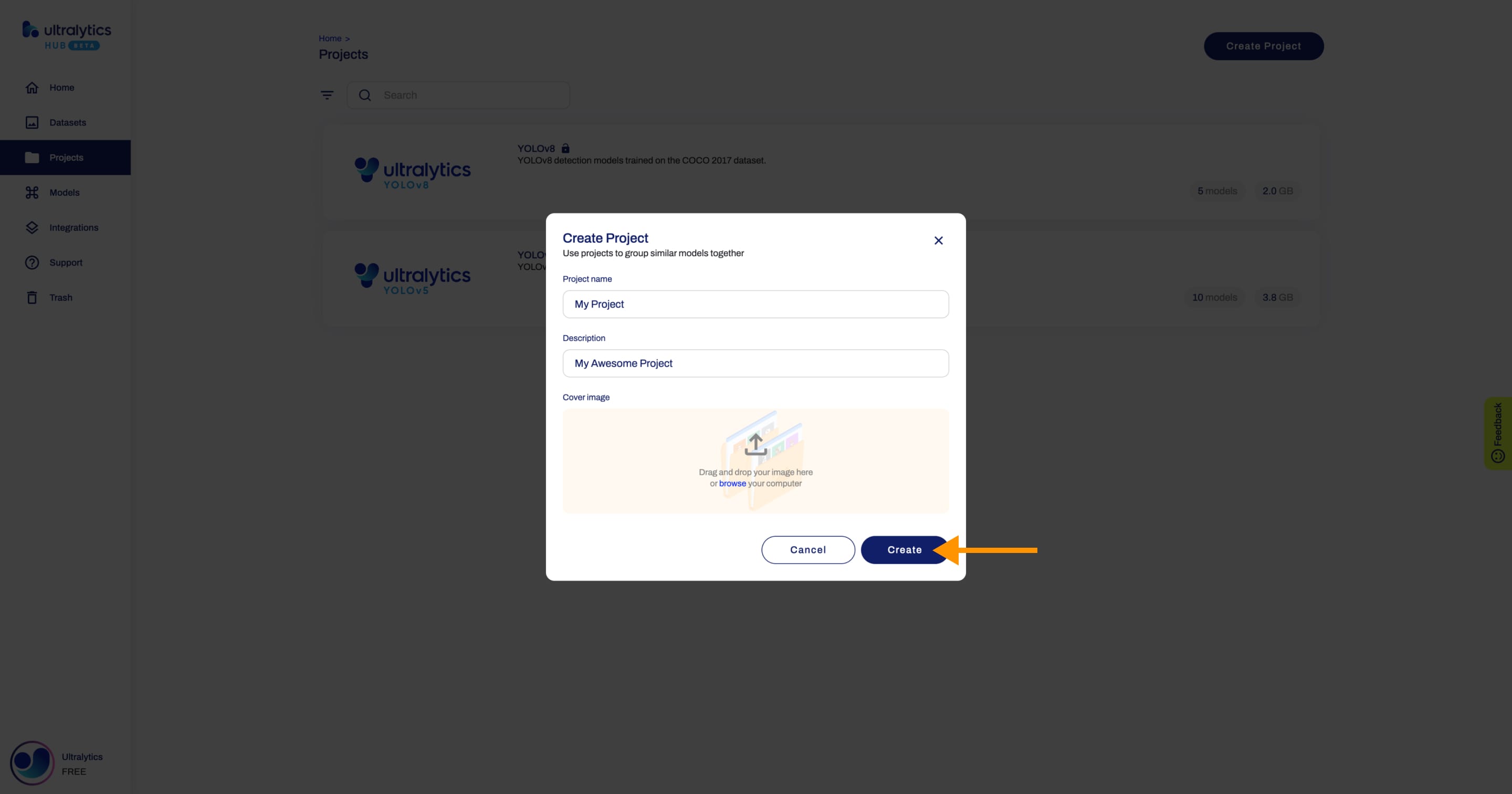

-Type the name of your project in the *Project name* field or keep the default name and finalize the project creation with a single click.

+Type the name of your project in the _Project name_ field or keep the default name and finalize the project creation with a single click.

You have the additional option to enrich your project with a description and a unique image, enhancing its recognizability on the Projects page.

@@ -38,9 +38,9 @@ After your project is created, you will be able to access it from the Projects p

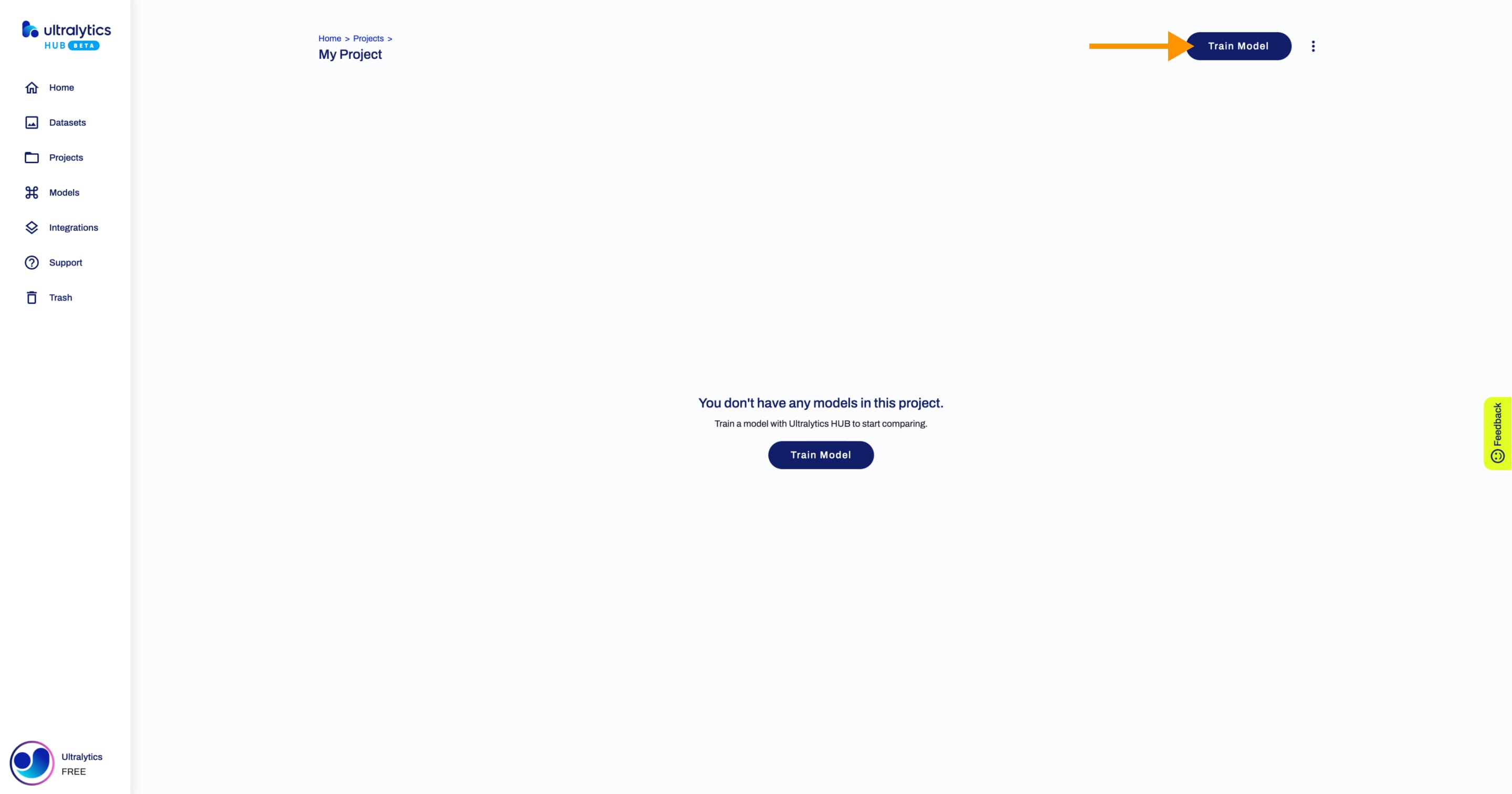

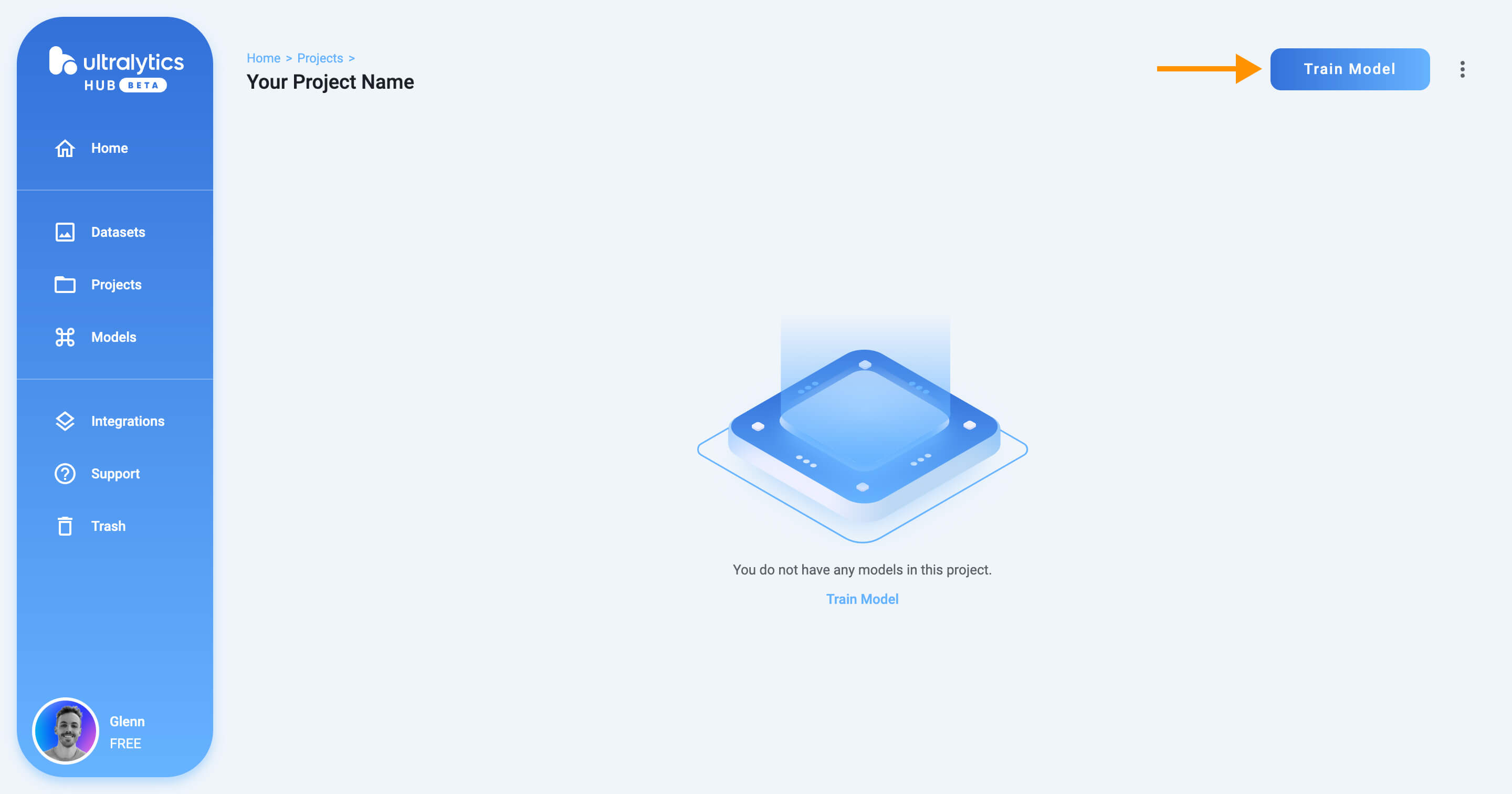

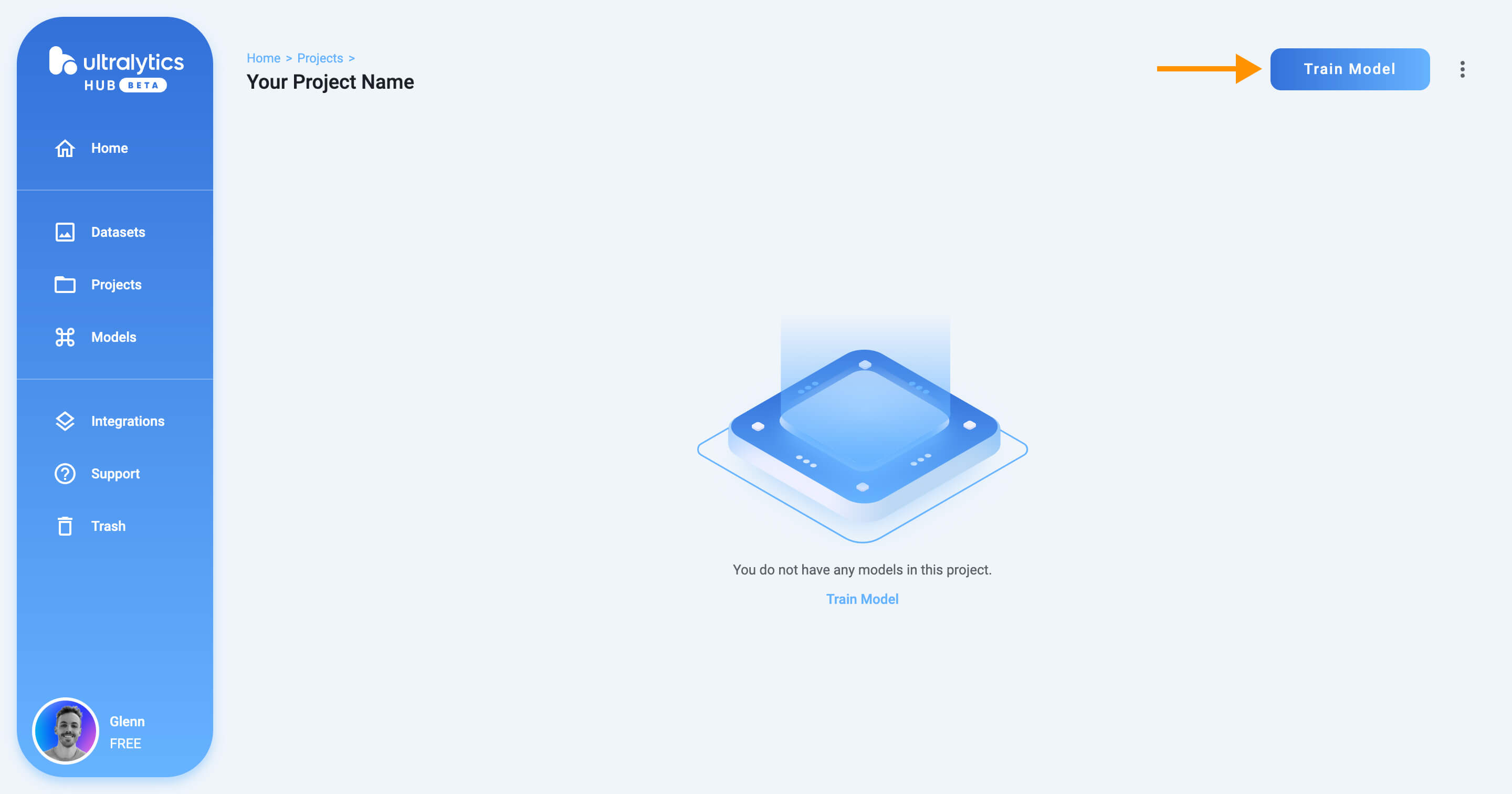

-Next, [create a model](./models.md) inside your project.

+Next, [train a model](https://docs.ultralytics.com/hub/models/#train-model) inside your project.

-

+

## Share Project

@@ -120,7 +120,7 @@ Navigate to the Project page of the project you want to delete, open the project

## Compare Models

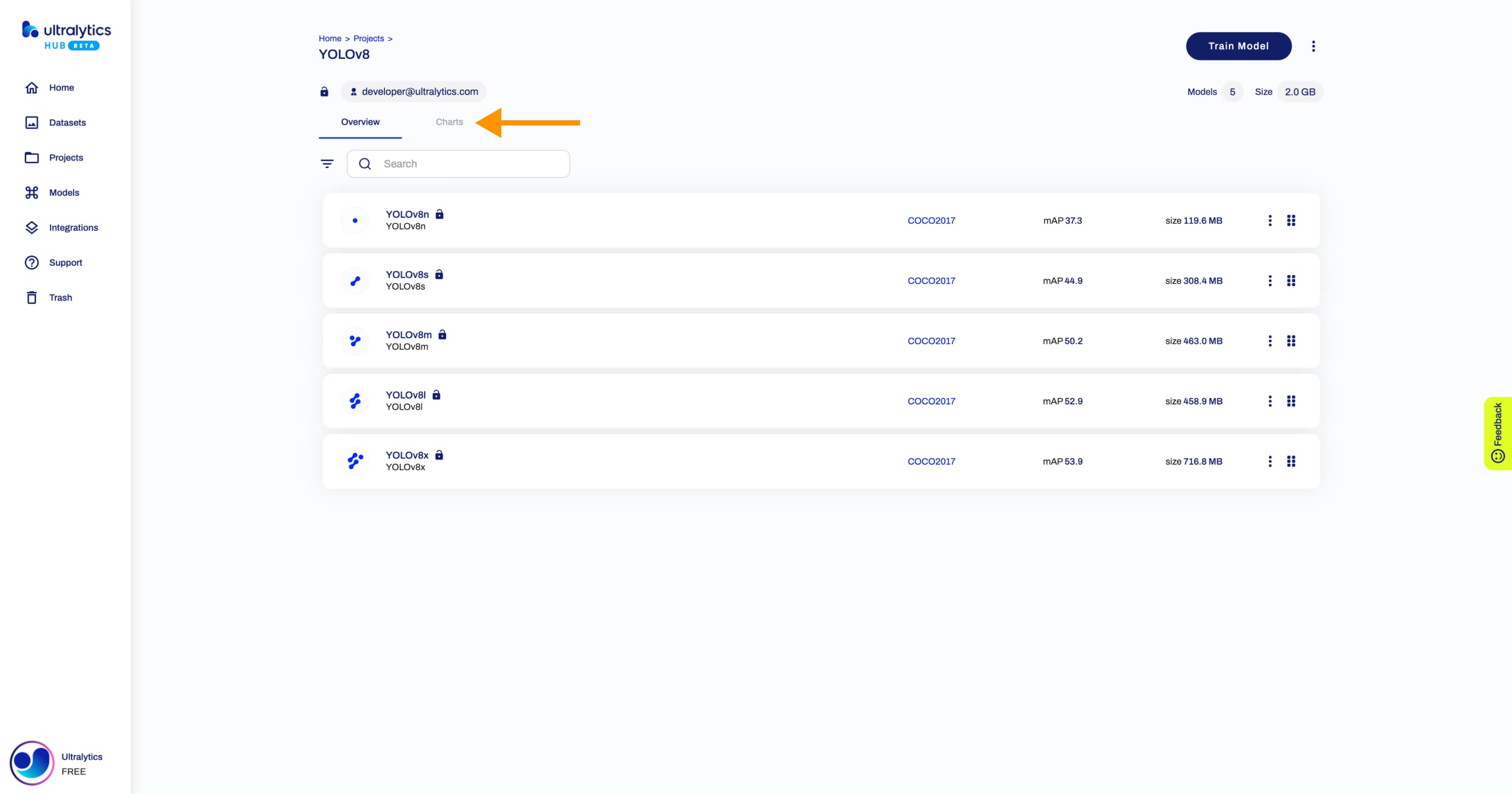

-Navigate to the Project page of the project where the models you want to compare are located. To use the model comparison feature, click on the **Charts** tab.

+Navigate to the Project page of the project where the models you want to compare are located. To use the model comparison feature, click on the **Charts** tab.

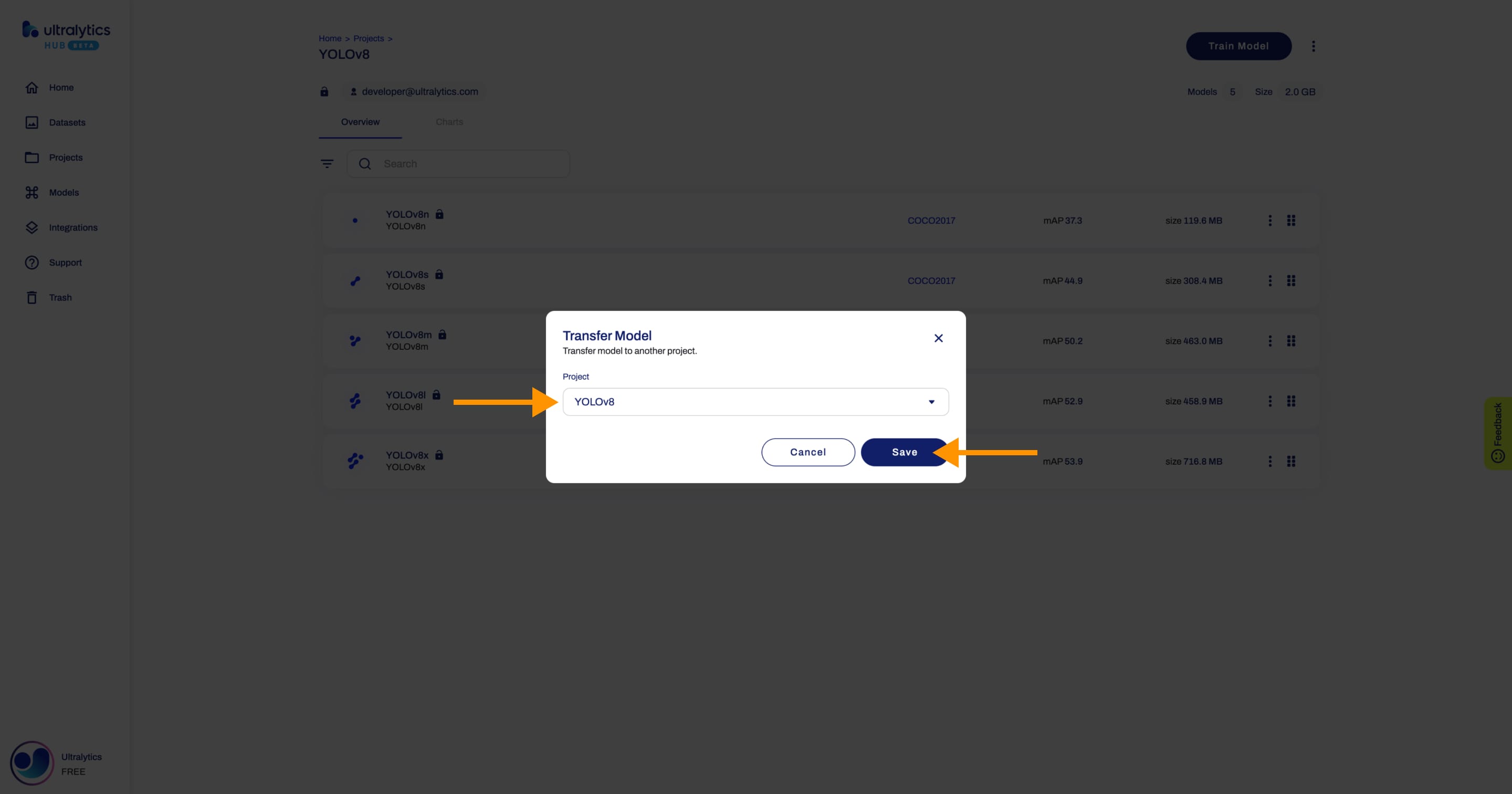

@@ -166,4 +166,4 @@ Navigate to the Project page of the project where the model you want to mode is

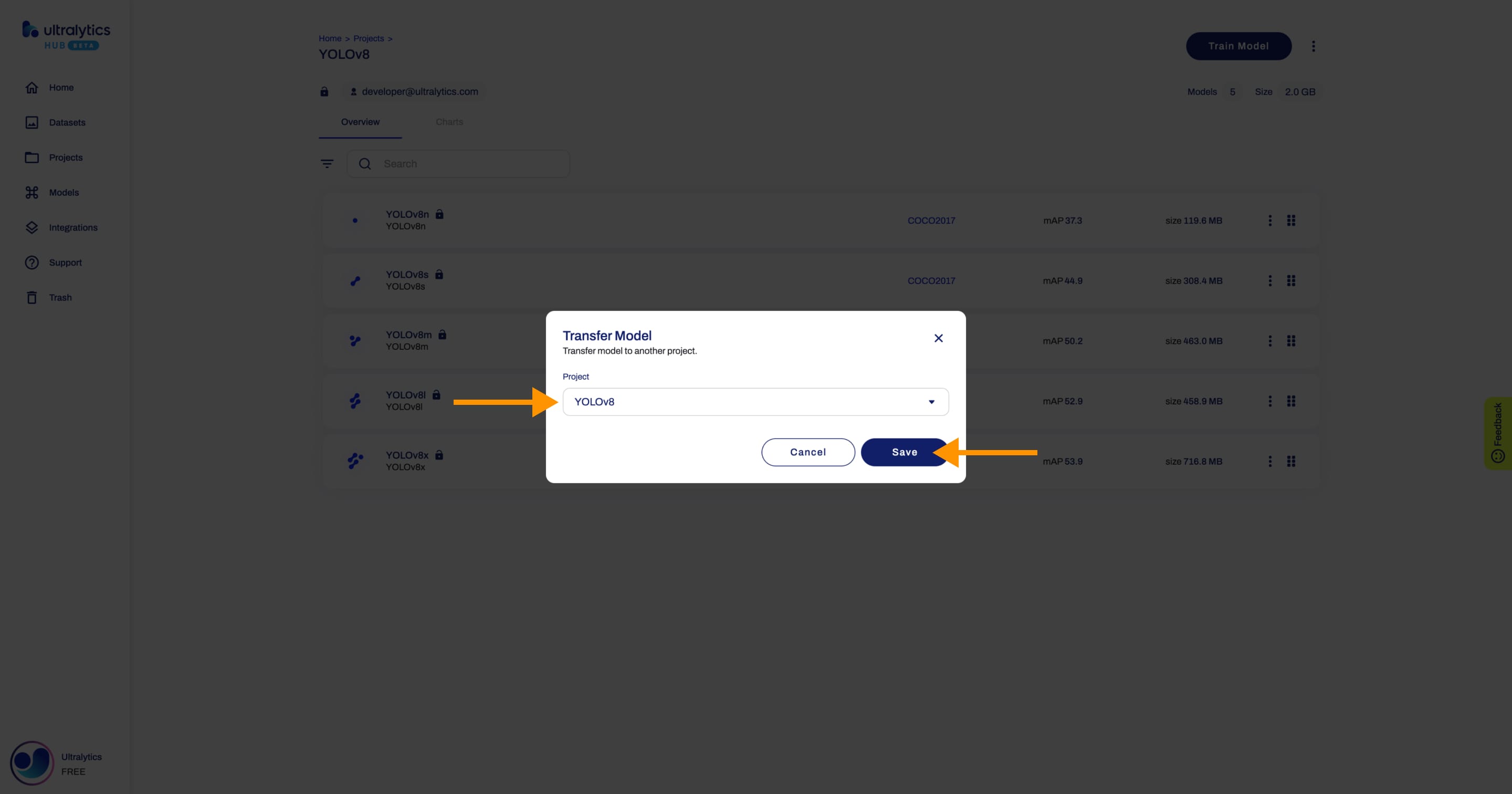

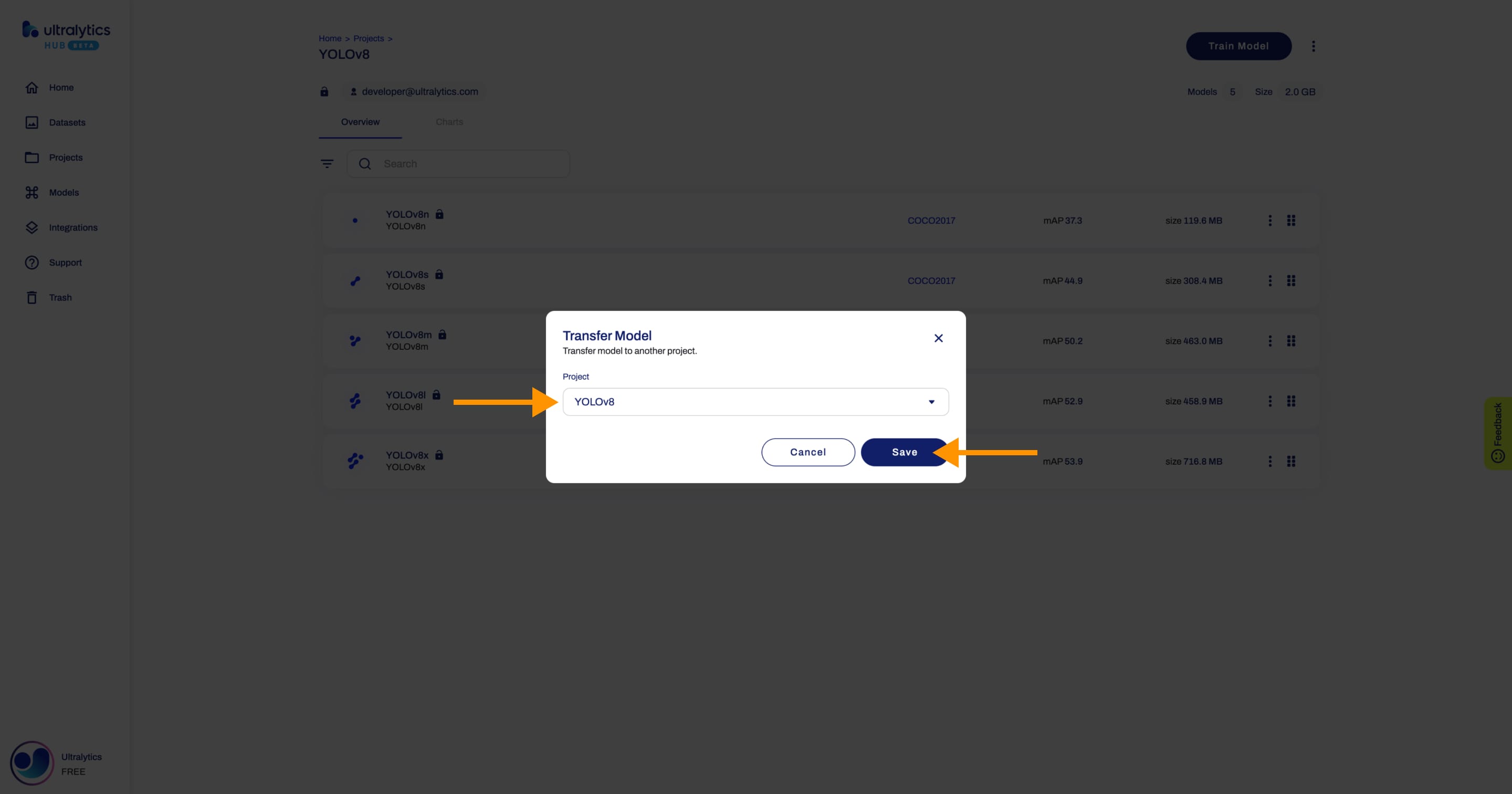

Select the project you want to transfer the model to and click **Save**.

-

\ No newline at end of file

+

diff --git a/docs/models/fast-sam.md b/docs/models/fast-sam.md

new file mode 100644

index 000000000..eefb58093

--- /dev/null

+++ b/docs/models/fast-sam.md

@@ -0,0 +1,169 @@

+---

+comments: true

+description: Explore the Fast Segment Anything Model (FastSAM), a real-time solution for the segment anything task that leverages a Convolutional Neural Network (CNN) for segmenting any object within an image, guided by user interaction prompts.

+keywords: FastSAM, Segment Anything Model, SAM, Convolutional Neural Network, CNN, image segmentation, real-time image processing

+---

+

+# Fast Segment Anything Model (FastSAM)

+

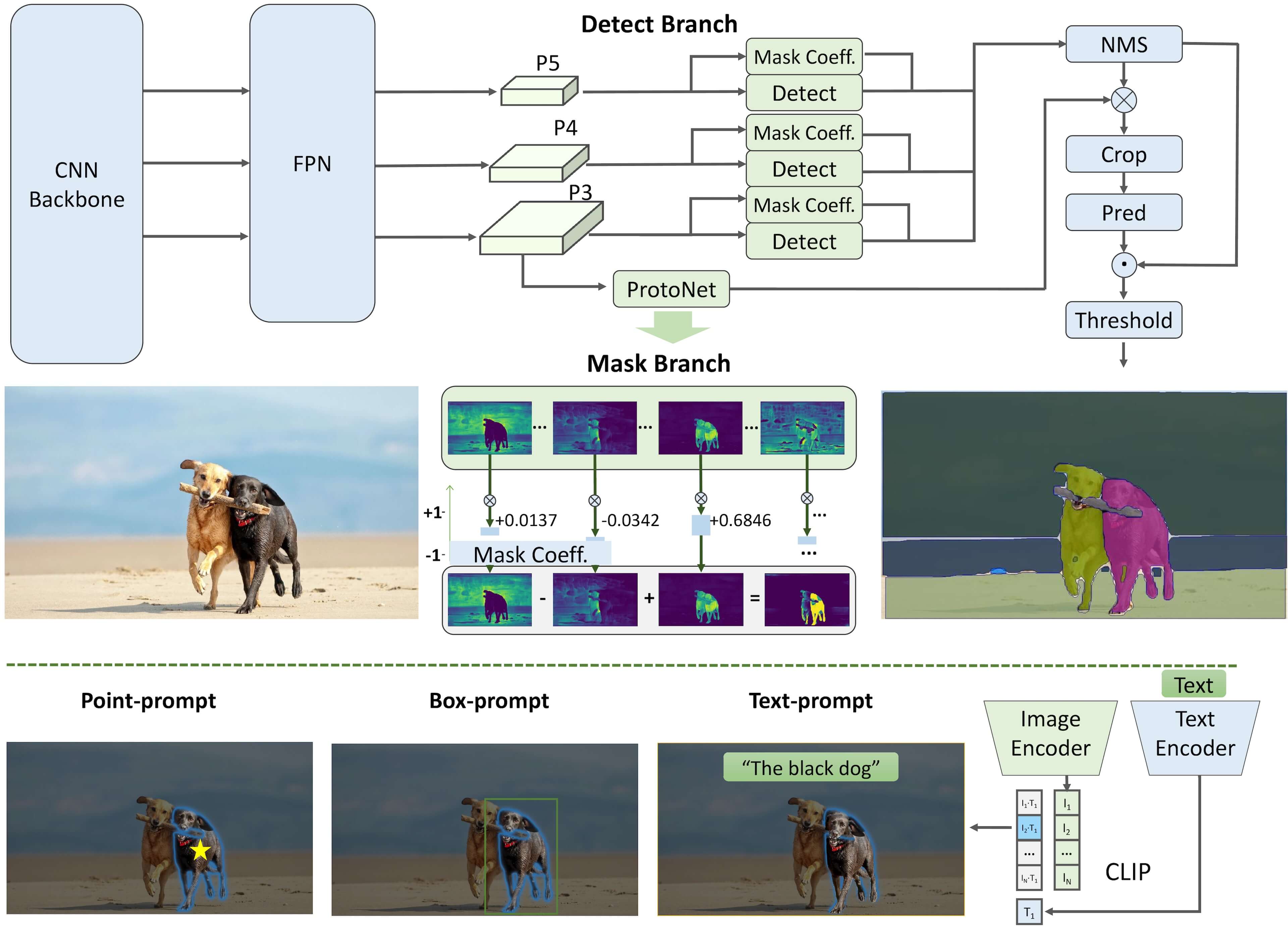

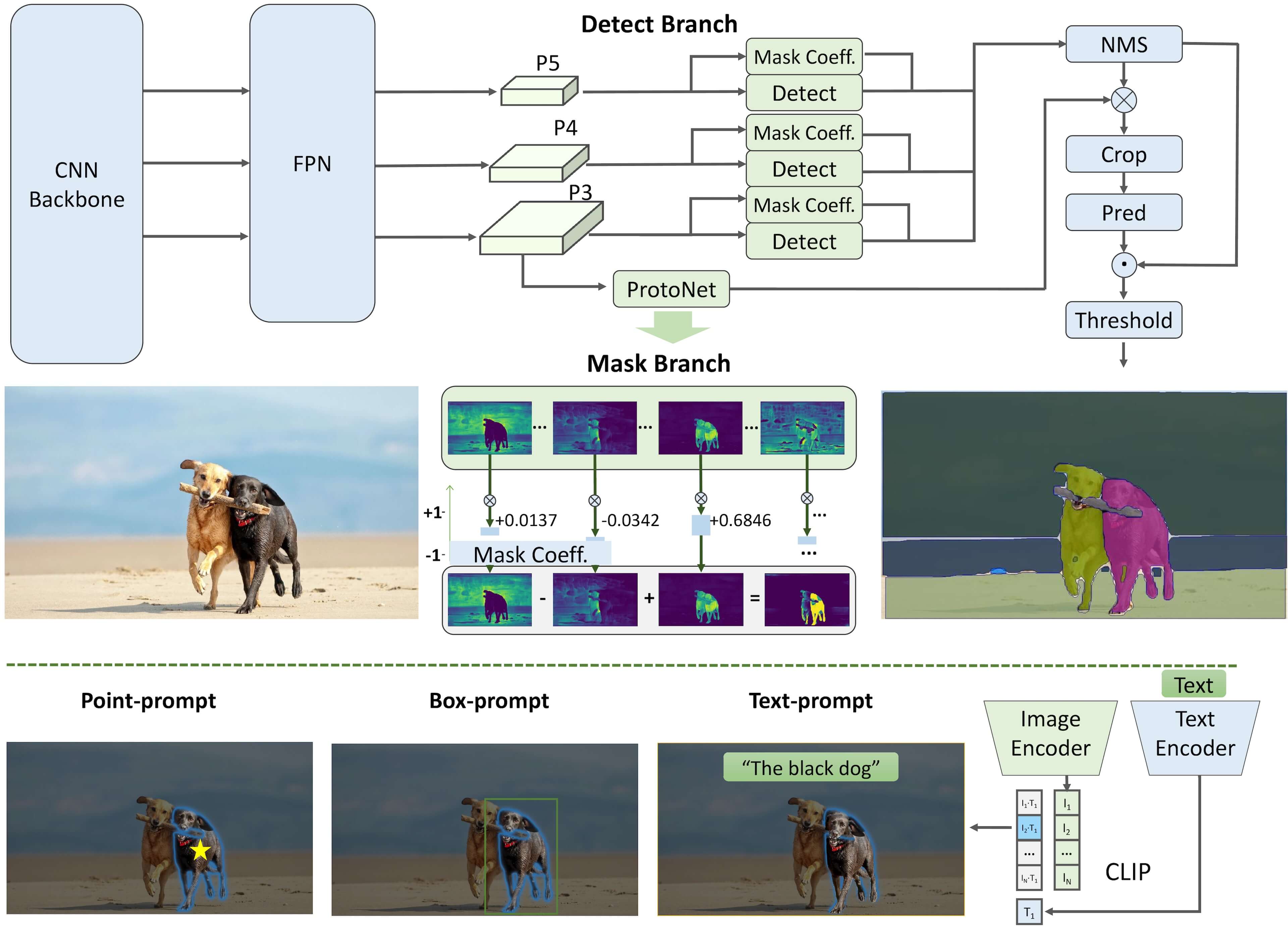

+The Fast Segment Anything Model (FastSAM) is a novel, real-time CNN-based solution for the Segment Anything task. This task is designed to segment any object within an image based on various possible user interaction prompts. FastSAM significantly reduces computational demands while maintaining competitive performance, making it a practical choice for a variety of vision tasks.

+

+

+

+## Overview

+

+FastSAM is designed to address the limitations of the [Segment Anything Model (SAM)](sam.md), a heavy Transformer model with substantial computational resource requirements. FastSAM decouples the segment anything task into two sequential stages: all-instance segmentation and prompt-guided selection. The first stage uses [YOLOv8-seg](../tasks/segment.md) to produce the segmentation masks of all instances in the image. In the second stage, it outputs the region of interest corresponding to the prompt.
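To make the second stage concrete, here is a simplified, pure-Python sketch of box-prompt selection: given the instance masks from stage one, it picks the mask that best overlaps the prompt box by intersection-over-union (IoU). The function names and the boolean-grid mask representation are assumptions for illustration; FastSAM's actual selection code may score candidates differently.

```python
from typing import List, Sequence

Mask = List[List[bool]]  # mask[y][x] is True where the instance is present


def box_to_mask(box: Sequence[int], height: int, width: int) -> Mask:
    """Rasterize an [x1, y1, x2, y2] box (x2/y2 exclusive) into a boolean mask."""
    x1, y1, x2, y2 = box
    return [[x1 <= x < x2 and y1 <= y < y2 for x in range(width)] for y in range(height)]


def iou(a: Mask, b: Mask) -> float:
    """Intersection-over-union of two equally sized boolean masks."""
    inter = sum(pa and pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    union = sum(pa or pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return inter / union if union else 0.0


def select_by_box(masks: Sequence[Mask], box: Sequence[int]) -> int:
    """Stage 2 (simplified): index of the stage-1 mask that best overlaps the box."""
    height, width = len(masks[0]), len(masks[0][0])
    target = box_to_mask(box, height, width)
    scores = [iou(m, target) for m in masks]
    return max(range(len(masks)), key=scores.__getitem__)
```

Here stage one is represented simply by the list of candidate masks; stage two never re-runs the network, it only ranks what stage one already produced.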

+

+## Key Features

+

+1. **Real-time Solution:** By leveraging the computational efficiency of CNNs, FastSAM provides a real-time solution for the segment anything task, making it valuable for industrial applications that require quick results.

+

+2. **Efficiency and Performance:** FastSAM offers a significant reduction in computational and resource demands without compromising on performance quality. It achieves comparable performance to SAM but with drastically reduced computational resources, enabling real-time application.

+

+3. **Prompt-guided Segmentation:** FastSAM can segment any object within an image guided by various possible user interaction prompts, providing flexibility and adaptability in different scenarios.

+

+4. **Based on YOLOv8-seg:** FastSAM is based on [YOLOv8-seg](../tasks/segment.md), an object detector equipped with an instance segmentation branch. This allows it to effectively produce the segmentation masks of all instances in an image.

+

+5. **Competitive Results on Benchmarks:** On the object proposal task on MS COCO, FastSAM achieves high scores at a significantly faster speed than [SAM](sam.md) on a single NVIDIA RTX 3090, demonstrating its efficiency and capability.

+

+6. **Practical Applications:** The proposed approach provides a new, practical solution for a large number of vision tasks at high speed, tens or hundreds of times faster than current methods.

+

+7. **Model Compression Feasibility:** FastSAM demonstrates the feasibility of a path that can significantly reduce computational effort by introducing an artificial prior to the structure, thus opening new possibilities for large model architectures for general vision tasks.

+

+## Usage

+

+### Python API

+

+The FastSAM models are easy to integrate into your Python applications. Ultralytics provides a user-friendly Python API to streamline the process.

+

+#### Predict Usage

+

+To segment an image, run the model's `predict` method (invoked here by calling the model directly) and then select results with `FastSAMPrompt`, as shown below:

+

+```python

+from ultralytics import FastSAM

+from ultralytics.yolo.fastsam import FastSAMPrompt

+

+# Define image path and inference device

+IMAGE_PATH = 'ultralytics/assets/bus.jpg'

+DEVICE = 'cpu'

+

+# Create a FastSAM model

+model = FastSAM('FastSAM-s.pt') # or FastSAM-x.pt

+

+# Run inference on an image

+everything_results = model(IMAGE_PATH,

+ device=DEVICE,

+ retina_masks=True,

+ imgsz=1024,

+ conf=0.4,

+ iou=0.9)

+

+prompt_process = FastSAMPrompt(IMAGE_PATH, everything_results, device=DEVICE)

+

+# Everything prompt

+ann = prompt_process.everything_prompt()

+

+# Bbox default shape [0,0,0,0] -> [x1,y1,x2,y2]

+ann = prompt_process.box_prompt(bbox=[200, 200, 300, 300])

+

+# Text prompt

+ann = prompt_process.text_prompt(text='a photo of a dog')

+

+# Point prompt

+# points default [[0,0]] [[x1,y1],[x2,y2]]

+# point_label default [0] [1,0] 0:background, 1:foreground

+ann = prompt_process.point_prompt(points=[[200, 200]], pointlabel=[1])

+prompt_process.plot(annotations=ann, output='./')

+```
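The comments in the snippet describe point prompts as a list of `[x, y]` coordinates paired with labels, where `1` marks foreground and `0` marks background. The hypothetical helper below (not part of the `ultralytics` package) checks that pairing and splits the points before you pass them to `point_prompt`:

```python
from typing import List, Sequence, Tuple

Point = Tuple[int, int]


def split_points(
    points: Sequence[Sequence[int]], labels: Sequence[int]
) -> Tuple[List[Point], List[Point]]:
    """Pair each [x, y] point with its label (1 = foreground, 0 = background)."""
    if len(points) != len(labels):
        raise ValueError("points and labels must have the same length")
    foreground: List[Point] = []
    background: List[Point] = []
    for (x, y), label in zip(points, labels):
        if label not in (0, 1):
            raise ValueError(f"label must be 0 or 1, got {label}")
        (foreground if label == 1 else background).append((x, y))
    return foreground, background
```

For example, `split_points([[520, 360], [620, 300]], [1, 0])` returns `([(520, 360)], [(620, 300)])`.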

+

+This snippet demonstrates the simplicity of loading a pre-trained model and running a prediction on an image.

+

+#### Val Usage

+

+Validation of the model on a dataset can be done as follows:

+

+```python

+from ultralytics import FastSAM

+

+# Create a FastSAM model

+model = FastSAM('FastSAM-s.pt') # or FastSAM-x.pt

+

+# Validate the model

+results = model.val(data='coco8-seg.yaml')

+```

+

+Please note that FastSAM only supports detection and segmentation of a single class of object. This means it will recognize and segment all objects as the same class. Therefore, when preparing the dataset, you need to convert all object category IDs to 0.
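Because every object must be labeled as class 0, a dataset in the standard YOLO text-label format (one object per line, class ID as the first token) can be converted with a short script. This is a minimal sketch: `to_single_class` and `convert_labels_dir` are illustrative names, and the directory helper overwrites label files in place, so run it on a copy of your dataset.

```python
from pathlib import Path


def to_single_class(label_text: str) -> str:
    """Rewrite every YOLO label line so its class ID (first token) becomes 0."""
    out = []
    for line in label_text.splitlines():
        parts = line.split()
        if parts:  # skip blank lines
            out.append(" ".join(["0"] + parts[1:]))
    return "\n".join(out) + ("\n" if out else "")


def convert_labels_dir(labels_dir: str) -> int:
    """Apply to_single_class to every *.txt file in labels_dir; return files rewritten."""
    count = 0
    for path in Path(labels_dir).glob("*.txt"):
        path.write_text(to_single_class(path.read_text()))
        count += 1
    return count
```

Only the first token of each line changes, so both box labels and segmentation polygon labels keep their coordinates untouched.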

+

+### FastSAM Official Usage

+

+FastSAM is also available directly from the [https://github.com/CASIA-IVA-Lab/FastSAM](https://github.com/CASIA-IVA-Lab/FastSAM) repository. Here is a brief overview of the typical steps you might take to use FastSAM:

+

+#### Installation

+

+1. Clone the FastSAM repository:

+ ```shell

+ git clone https://github.com/CASIA-IVA-Lab/FastSAM.git

+ ```

+

+2. Create and activate a Conda environment with Python 3.9:

+ ```shell

+ conda create -n FastSAM python=3.9

+ conda activate FastSAM

+ ```

+

+3. Navigate to the cloned repository and install the required packages:

+ ```shell

+ cd FastSAM

+ pip install -r requirements.txt

+ ```

+

+4. Install the CLIP model:

+ ```shell

+ pip install git+https://github.com/openai/CLIP.git

+ ```

+

+#### Example Usage

+

+1. Download a [model checkpoint](https://drive.google.com/file/d/1m1sjY4ihXBU1fZXdQ-Xdj-mDltW-2Rqv/view?usp=sharing).

+

+2. Use FastSAM for inference. Example commands:

+

+ - Segment everything in an image:

+ ```shell

+ python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg

+ ```

+

+ - Segment specific objects using text prompt:

+ ```shell

+ python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg --text_prompt "the yellow dog"

+ ```

+

+ - Segment objects within a bounding box (provide box coordinates in xywh format):

+ ```shell

+ python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg --box_prompt "[570,200,230,400]"

+ ```

+

+ - Segment objects near specific points:

+ ```shell

+ python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg --point_prompt "[[520,360],[620,300]]" --point_label "[1,0]"

+ ```
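Note that the official CLI takes `--box_prompt` in xywh format, while the `box_prompt(bbox=...)` call in the Ultralytics Python example expects `[x1, y1, x2, y2]`. Assuming xywh here means the top-left corner followed by width and height, a box can be converted between the two conventions like this:

```python
from typing import List, Sequence


def xywh_to_xyxy(box: Sequence[int]) -> List[int]:
    """Convert [x, y, w, h] (top-left corner + size) to [x1, y1, x2, y2]."""
    x, y, w, h = box
    return [x, y, x + w, y + h]
```

Under that assumption, the example box `[570,200,230,400]` becomes `[570, 200, 800, 600]`.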

+

+Additionally, you can try FastSAM through a [Colab demo](https://colab.research.google.com/drive/1oX14f6IneGGw612WgVlAiy91UHwFAvr9?usp=sharing) or on the [HuggingFace web demo](https://huggingface.co/spaces/An-619/FastSAM) for a visual experience.

+

+## Citations and Acknowledgements

+

+We would like to acknowledge the FastSAM authors for their significant contributions in the field of real-time instance segmentation:

+

+```bibtex

+@misc{zhao2023fast,

+ title={Fast Segment Anything},

+ author={Xu Zhao and Wenchao Ding and Yongqi An and Yinglong Du and Tao Yu and Min Li and Ming Tang and Jinqiao Wang},

+ year={2023},

+ eprint={2306.12156},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+The original FastSAM paper can be found on [arXiv](https://arxiv.org/abs/2306.12156). The authors have made their work publicly available, and the codebase can be accessed on [GitHub](https://github.com/CASIA-IVA-Lab/FastSAM). We appreciate their efforts in advancing the field and making their work accessible to the broader community.

diff --git a/docs/models/index.md b/docs/models/index.md

index cce8af13f..611cad7fb 100644

--- a/docs/models/index.md

+++ b/docs/models/index.md

@@ -17,24 +17,29 @@ In this documentation, we provide information on four major models:

5. [YOLOv7](./yolov7.md): Updated YOLO models released in 2022 by the authors of YOLOv4.

6. [YOLOv8](./yolov8.md): The latest version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

7. [Segment Anything Model (SAM)](./sam.md): Meta's Segment Anything Model (SAM).

-8. [YOLO-NAS](./yolo-nas.md): YOLO Neural Architecture Search (NAS) Models.

-9. [Realtime Detection Transformers (RT-DETR)](./rtdetr.md): Baidu's PaddlePaddle Realtime Detection Transformer (RT-DETR) models.

+8. [Fast Segment Anything Model (FastSAM)](./fast-sam.md): FastSAM by Image & Video Analysis Group, Institute of Automation, Chinese Academy of Sciences.

+9. [YOLO-NAS](./yolo-nas.md): YOLO Neural Architecture Search (NAS) Models.

+10. [Realtime Detection Transformers (RT-DETR)](./rtdetr.md): Baidu's PaddlePaddle Realtime Detection Transformer (RT-DETR) models.

-You can use these models directly in the Command Line Interface (CLI) or in a Python environment. Below are examples of how to use the models with CLI and Python:

+You can use many of these models directly in the Command Line Interface (CLI) or in a Python environment. Below are examples of how to use the models with CLI and Python:

## CLI Example

+Use the `model` argument to pass a model YAML such as `model=yolov8n.yaml` or a pretrained `*.pt` file such as `model=yolov8n.pt`.

+

```bash

-yolo task=detect mode=train model=yolov8n.yaml data=coco128.yaml epochs=100

+yolo task=detect mode=train model=yolov8n.pt data=coco128.yaml epochs=100

```

## Python Example

+PyTorch pretrained models as well as model YAML files can also be passed to the `YOLO()`, `SAM()`, `NAS()` and `RTDETR()` classes to create a model instance in Python:

+

```python

from ultralytics import YOLO

-model = YOLO("model.yaml") # build a YOLOv8n model from scratch

-# YOLO("model.pt") use pre-trained model if available

+model = YOLO("yolov8n.pt") # load a pretrained YOLOv8n model

+

model.info() # display model information

model.train(data="coco128.yaml", epochs=100) # train the model

```
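The examples above distinguish a model YAML (which builds a new model from its architecture config) from a pretrained `*.pt` file (which loads existing weights). The hypothetical helper below only illustrates that convention by file suffix; it is not how the `YOLO()` class is implemented internally.

```python
from pathlib import Path


def describe_model_source(model: str) -> str:
    """Hypothetical helper: what passing `model=...` implies, judged by file suffix."""
    suffix = Path(model).suffix.lower()
    if suffix in {".yaml", ".yml"}:
        return "build a new model from the architecture config (no pretrained weights)"
    if suffix == ".pt":
        return "load a PyTorch checkpoint with pretrained weights"
    raise ValueError(f"unsupported model file: {model}")
```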

diff --git a/docs/models/sam.md b/docs/models/sam.md

index 8dd1e35c2..79bbd01aa 100644

--- a/docs/models/sam.md

+++ b/docs/models/sam.md

@@ -30,13 +30,30 @@ For an in-depth look at the Segment Anything Model and the SA-1B dataset, please

The Segment Anything Model can be employed for a multitude of downstream tasks that go beyond its training data. This includes edge detection, object proposal generation, instance segmentation, and preliminary text-to-mask prediction. With prompt engineering, SAM can swiftly adapt to new tasks and data distributions in a zero-shot manner, establishing it as a versatile and potent tool for all your image segmentation needs.

-```python

-from ultralytics import SAM

-

-model = SAM('sam_b.pt')

-model.info() # display model information

-model.predict('path/to/image.jpg') # predict

-```

+!!! example "SAM prediction example"

+

+ Device is determined automatically. If a GPU is available then it will be used, otherwise inference will run on CPU.

+

+ === "Python"

+

+ ```python

+ from ultralytics import SAM

+

+ # Load a model

+ model = SAM('sam_b.pt')

+

+ # Display model information (optional)

+ model.info()

+

+ # Run inference with the model

+ model('path/to/image.jpg')

+ ```

+ === "CLI"

+

+ ```bash

+ # Run inference with a SAM model

+ yolo predict model=sam_b.pt source=path/to/image.jpg

+ ```
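The automatic device choice described in the example amounts to a simple rule: use a CUDA GPU when one is available, otherwise fall back to CPU. The sketch below expresses that rule with PyTorch's `torch.cuda.is_available()`; it is illustrative only and does not reproduce Ultralytics' internal device-selection logic.

```python
def pick_device() -> str:
    """Return "cuda:0" when PyTorch sees a CUDA GPU, else "cpu" (illustrative only)."""
    try:
        import torch
    except ImportError:
        # Without PyTorch installed there is no GPU backend to use.
        return "cpu"
    return "cuda:0" if torch.cuda.is_available() else "cpu"


print(pick_device())
```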

## Available Models and Supported Tasks

@@ -53,6 +70,33 @@ model.predict('path/to/image.jpg') # predict

| Validation | :x: |

| Training | :x: |

+## SAM comparison vs YOLOv8

+

+Here we compare Meta's smallest SAM model, SAM-b, with Ultralytics' smallest segmentation model, [YOLOv8n-seg](../tasks/segment.md):

+

+| Model | Size | Parameters | Speed (CPU) |

+|------------------------------------------------|----------------------------|------------------------|-------------------------|

+| Meta's SAM-b | 358 MB | 94.7 M | 51096 ms |

+| Ultralytics [YOLOv8n-seg](../tasks/segment.md) | **6.7 MB** (53.4x smaller) | **3.4 M** (27.9x fewer) | **59 ms** (866x faster) |
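The multipliers in parentheses are plain ratios of the SAM-b figure to the YOLOv8n-seg figure. The snippet below recomputes them from the table's own numbers as a quick arithmetic check.

```python
# Figures copied from the SAM-b vs YOLOv8n-seg comparison table.
sam_b = {"size_mb": 358, "params_m": 94.7, "cpu_ms": 51096}
yolov8n_seg = {"size_mb": 6.7, "params_m": 3.4, "cpu_ms": 59}

# Ratio of SAM-b to YOLOv8n-seg for each column.
ratios = {key: sam_b[key] / yolov8n_seg[key] for key in sam_b}
for key, ratio in ratios.items():
    print(f"{key}: {ratio:.1f}x")
```

It prints `size_mb: 53.4x`, `params_m: 27.9x` and `cpu_ms: 866.0x`, matching the table after rounding.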

+

+This comparison shows the order-of-magnitude differences in model sizes and speeds. While SAM offers unique capabilities for automatic segmentation, it is not a direct competitor to YOLOv8 segmentation models, which are smaller, faster, and more efficient because they are dedicated to more targeted use cases.

+

+To reproduce this test:

+

+```python

+from ultralytics import SAM, YOLO

+

+# Profile SAM-b

+model = SAM('sam_b.pt')

+model.info()

+model('ultralytics/assets')

+

+# Profile YOLOv8n-seg

+model = YOLO('yolov8n-seg.pt')

+model.info()

+model('ultralytics/assets')

+```

+

## Auto-Annotation: A Quick Path to Segmentation Datasets

Auto-annotation is a key feature of SAM, allowing users to generate a [segmentation dataset](https://docs.ultralytics.com/datasets/segment) using a pre-trained detection model. This feature enables rapid and accurate annotation of a large number of images, bypassing the need for time-consuming manual labeling.

diff --git a/docs/models/yolov5.md b/docs/models/yolov5.md

index 959c06af1..884fea6bc 100644

--- a/docs/models/yolov5.md

+++ b/docs/models/yolov5.md

@@ -75,7 +75,7 @@ If you use YOLOv5 or YOLOv5u in your research, please cite the Ultralytics YOLOv

```bibtex

@software{yolov5,

- title = {YOLOv5 by Ultralytics},

+ title = {Ultralytics YOLOv5},

author = {Glenn Jocher},

year = {2020},

version = {7.0},

diff --git a/docs/models/yolov8.md b/docs/models/yolov8.md

index 8c78d87e7..8907248cd 100644

--- a/docs/models/yolov8.md

+++ b/docs/models/yolov8.md

@@ -103,7 +103,7 @@ If you use the YOLOv8 model or any other software from this repository in your w

```bibtex

@software{yolov8_ultralytics,

author = {Glenn Jocher and Ayush Chaurasia and Jing Qiu},

- title = {YOLO by Ultralytics},

+ title = {Ultralytics YOLOv8},

version = {8.0.0},

year = {2023},

url = {https://github.com/ultralytics/ultralytics},

diff --git a/docs/modes/benchmark.md b/docs/modes/benchmark.md

index c1c159e6b..0c5b35fae 100644

--- a/docs/modes/benchmark.md

+++ b/docs/modes/benchmark.md

@@ -70,5 +70,6 @@ Benchmarks will attempt to run automatically on all possible export formats belo

| [TF Edge TPU](https://coral.ai/docs/edgetpu/models-intro/) | `edgetpu` | `yolov8n_edgetpu.tflite` | ✅ |

| [TF.js](https://www.tensorflow.org/js) | `tfjs` | `yolov8n_web_model/` | ✅ |

| [PaddlePaddle](https://github.com/PaddlePaddle) | `paddle` | `yolov8n_paddle_model/` | ✅ |

+| [ncnn](https://github.com/Tencent/ncnn) | `ncnn` | `yolov8n_ncnn_model/` | ✅ |
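The exported artifact names in the table follow a pattern based on the weights file stem. Assuming that pattern generalizes beyond `yolov8n` (only `yolov8n` rows are shown here), a hypothetical helper can predict the output name for the formats listed:

```python
from pathlib import Path

# `format` argument -> naming pattern, taken from the benchmark table rows.
EXPORT_PATTERNS = {
    "edgetpu": "{stem}_edgetpu.tflite",
    "tfjs": "{stem}_web_model/",
    "paddle": "{stem}_paddle_model/",
    "ncnn": "{stem}_ncnn_model/",
}


def expected_export_name(weights: str, fmt: str) -> str:
    """Hypothetical: predict the exported artifact name for a given format."""
    stem = Path(weights).stem  # e.g. "yolov8n" from "yolov8n.pt"
    return EXPORT_PATTERNS[fmt].format(stem=stem)
```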

See full `export` details in the [Export](https://docs.ultralytics.com/modes/export/) page.

\ No newline at end of file

diff --git a/docs/modes/export.md b/docs/modes/export.md

index 42e45272a..bff65d334 100644

--- a/docs/modes/export.md

+++ b/docs/modes/export.md

@@ -1,7 +1,7 @@

---

comments: true

description: 'Export mode: Create a deployment-ready YOLOv8 model by converting it to various formats. Export to ONNX or OpenVINO for up to 3x CPU speedup.'

-keywords: ultralytics docs, YOLOv8, export YOLOv8, YOLOv8 model deployment, exporting YOLOv8, ONNX, OpenVINO, TensorRT, CoreML, TF SavedModel, PaddlePaddle, TorchScript, ONNX format, OpenVINO format, TensorRT format, CoreML format, TF SavedModel format, PaddlePaddle format

+keywords: ultralytics docs, YOLOv8, export YOLOv8, YOLOv8 model deployment, exporting YOLOv8, ONNX, OpenVINO, TensorRT, CoreML, TF SavedModel, PaddlePaddle, TorchScript, ONNX format, OpenVINO format, TensorRT format, CoreML format, TF SavedModel format, PaddlePaddle format, Tencent ncnn format

---

+

+

+When the training starts, you can click **Done** and monitor the training progress on the Model page.

+

+

+

+

+

+??? note "Note"

+

+ In case the training stops and a checkpoint was saved, you can resume training your model from the Model page.

+

+

+

+## Preview Model

+

+Ultralytics HUB offers a variety of ways to preview your trained model.

+

+You can preview your model if you click on the **Preview** tab and upload an image in the **Test** card.

+

+

+

+You can also use our Ultralytics Cloud API to effortlessly [run inference](https://docs.ultralytics.com/hub/inference_api) with your custom model.

+

+

+

+Furthermore, you can preview your model in real-time directly on your [iOS](https://apps.apple.com/xk/app/ultralytics/id1583935240) or [Android](https://play.google.com/store/apps/details?id=com.ultralytics.ultralytics_app) mobile device by [downloading](https://ultralytics.com/app_install) our [Ultralytics HUB Mobile Application](./app/index.md).

+

+

+

+## Deploy Model

+

+You can export your model to 13 different formats, including ONNX, OpenVINO, CoreML, TensorFlow, Paddle and many others.

+

+

+

+??? tip "Tip"

+

+ You can customize the export options of each format if you open the export actions dropdown and click on the **Advanced** option.

+

+

+

+## Share Model

+

+!!! info "Info"

+

+ Ultralytics HUB's sharing functionality provides a convenient way to share models with others. This feature is designed to accommodate both existing Ultralytics HUB users and those who have yet to create an account.

+

+??? note "Note"

+

+ You have control over the general access of your models.

+

+ You can choose to set the general access to "Private", in which case, only you will have access to it. Alternatively, you can set the general access to "Unlisted" which grants viewing access to anyone who has the direct link to the model, regardless of whether they have an Ultralytics HUB account or not.

+

+Navigate to the Model page of the model you want to share, open the model actions dropdown and click on the **Share** option. This action will trigger the **Share Model** dialog.

+

+

+

+??? tip "Tip"

+

+ You can also share a model directly from the [Models](https://hub.ultralytics.com/models) page or from the Project page of the project where your model is located.

+

+

+

+Set the general access to "Unlisted" and click **Save**.

+

+

+

+Now, anyone who has the direct link to your model can view it.

+

+??? tip "Tip"

+

+ You can easily click on the models's link shown in the **Share Model** dialog to copy it.

+

+

+

+## Edit Model

+

+Navigate to the Model page of the model you want to edit, open the model actions dropdown and click on the **Edit** option. This action will trigger the **Update Model** dialog.

+

+

+

+??? tip "Tip"

+

+ You can also edit a model directly from the [Models](https://hub.ultralytics.com/models) page or from the Project page of the project where your model is located.

+

+

+

+Apply the desired modifications to your model and then confirm the changes by clicking **Save**.

+

+

+

+## Delete Model

+

+Navigate to the Model page of the model you want to delete, open the model actions dropdown and click on the **Delete** option. This action will delete the model.

+

+

+

+??? tip "Tip"

+

+ You can also delete a model directly from the [Models](https://hub.ultralytics.com/models) page or from the Project page of the project where your model is located.

+

+

+

+??? note "Note"

-## Deploy to Real World

+ If you change your mind, you can restore the model from the [Trash](https://hub.ultralytics.com/trash) page.

-Export your model to 13 different formats, including TensorFlow, ONNX, OpenVINO, CoreML, Paddle and many others. Run

-models directly on your [iOS](https://apps.apple.com/xk/app/ultralytics/id1583935240) or

-[Android](https://play.google.com/store/apps/details?id=com.ultralytics.ultralytics_app) mobile device by downloading

-the [Ultralytics App](https://ultralytics.com/app_install)!

\ No newline at end of file

+

diff --git a/docs/hub/projects.md b/docs/hub/projects.md

index 6a006b365..d8e0f8649 100644

--- a/docs/hub/projects.md

+++ b/docs/hub/projects.md

@@ -26,7 +26,7 @@ Click on the **Create Project** button on the top right of the page. This action

-Type the name of your project in the *Project name* field or keep the default name and finalize the project creation with a single click.

+Type the name of your project in the _Project name_ field or keep the default name and finalize the project creation with a single click.

You have the additional option to enrich your project with a description and a unique image, enhancing its recognizability on the Projects page.

@@ -38,9 +38,9 @@ After your project is created, you will be able to access it from the Projects p

-Next, [create a model](./models.md) inside your project.

+Next, [train a model](https://docs.ultralytics.com/hub/models/#train-model) inside your project.

-

+

## Share Project

@@ -120,7 +120,7 @@ Navigate to the Project page of the project you want to delete, open the project

## Compare Models

-Navigate to the Project page of the project where the models you want to compare are located. To use the model comparison feature, click on the **Charts** tab.

+Navigate to the Project page of the project where the models you want to compare are located. To use the model comparison feature, click on the **Charts** tab.

@@ -166,4 +166,4 @@ Navigate to the Project page of the project where the model you want to mode is

Select the project you want to transfer the model to and click **Save**.

-

\ No newline at end of file

+

diff --git a/docs/models/fast-sam.md b/docs/models/fast-sam.md

new file mode 100644

index 000000000..eefb58093

--- /dev/null

+++ b/docs/models/fast-sam.md

@@ -0,0 +1,169 @@

+---

+comments: true

+description: Explore the Fast Segment Anything Model (FastSAM), a real-time solution for the segment anything task that leverages a Convolutional Neural Network (CNN) for segmenting any object within an image, guided by user interaction prompts.

+keywords: FastSAM, Segment Anything Model, SAM, Convolutional Neural Network, CNN, image segmentation, real-time image processing

+---

+

+# Fast Segment Anything Model (FastSAM)

+

+The Fast Segment Anything Model (FastSAM) is a novel, real-time CNN-based solution for the Segment Anything task. This task is designed to segment any object within an image based on various possible user interaction prompts. FastSAM significantly reduces computational demands while maintaining competitive performance, making it a practical choice for a variety of vision tasks.

+

+

+

+## Overview

+

+FastSAM is designed to address the limitations of the [Segment Anything Model (SAM)](sam.md), a heavy Transformer model with substantial computational resource requirements. FastSAM decouples the segment anything task into two sequential stages: all-instance segmentation and prompt-guided selection. The first stage uses [YOLOv8-seg](../tasks/segment.md) to produce segmentation masks for all instances in the image. The second stage outputs the region of interest that corresponds to the prompt.
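The two-stage split can be illustrated with a small NumPy sketch. This is a hypothetical illustration, not FastSAM's own code: a precomputed stack of boolean masks stands in for the stage-one output of YOLOv8-seg, and stage two picks the mask with the highest intersection-over-union (IoU) against a box prompt. The helper names `box_to_mask` and `select_by_box` are made up for this example.

```python
import numpy as np


def box_to_mask(box, shape):
    """Rasterize an [x1, y1, x2, y2] box into a boolean mask of shape (H, W)."""
    x1, y1, x2, y2 = box
    mask = np.zeros(shape, dtype=bool)
    mask[y1:y2, x1:x2] = True
    return mask


def select_by_box(masks, box):
    """Stage 2 (hypothetical): return the index of the mask with the highest IoU to the box."""
    box_mask = box_to_mask(box, masks.shape[1:])
    inter = np.logical_and(masks, box_mask).sum(axis=(1, 2))
    union = np.logical_or(masks, box_mask).sum(axis=(1, 2))
    iou = inter / np.maximum(union, 1)  # guard against division by zero
    return int(np.argmax(iou))


# Stage 1 stand-in: two all-instance masks on a 10x10 image
masks = np.zeros((2, 10, 10), dtype=bool)
masks[0, 0:4, 0:4] = True    # object in the top-left corner
masks[1, 5:10, 5:10] = True  # object in the bottom-right corner

print(select_by_box(masks, [5, 5, 10, 10]))  # 1
```

Because stage one runs once per image, any number of prompts can be answered afterwards by cheap mask selection like this, which is the main reason the decoupled design is fast.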

+

+## Key Features

+

+1. **Real-time Solution:** By leveraging the computational efficiency of CNNs, FastSAM provides a real-time solution for the segment anything task, making it valuable for industrial applications that require quick results.

+

+2. **Efficiency and Performance:** FastSAM substantially reduces computational and resource demands without compromising performance quality. It achieves performance comparable to SAM at a fraction of the compute, which makes real-time applications possible.

+

+3. **Prompt-guided Segmentation:** FastSAM can segment any object within an image guided by various possible user interaction prompts, providing flexibility and adaptability in different scenarios.

+

+4. **Based on YOLOv8-seg:** FastSAM is based on [YOLOv8-seg](../tasks/segment.md), an object detector equipped with an instance segmentation branch. This allows it to effectively produce the segmentation masks of all instances in an image.

+

+5. **Competitive Results on Benchmarks:** On the object proposal task on MS COCO, FastSAM achieves high scores while running significantly faster than [SAM](sam.md) on a single NVIDIA RTX 3090, demonstrating its efficiency and capability.

+

+6. **Practical Applications:** The proposed approach offers a new, practical solution for a large number of vision tasks at very high speed, tens to hundreds of times faster than current methods.

+

+7. **Model Compression Feasibility:** FastSAM demonstrates a feasible path to significantly reducing computational effort by introducing an artificial prior into the model structure, opening new possibilities for large-model architectures in general vision tasks.

+

+## Usage

+

+### Python API

+

+The FastSAM models are easy to integrate into your Python applications. Ultralytics provides a user-friendly Python API to streamline the process.

+

+#### Predict Usage

+

+To run segmentation on an image, call the model (which invokes its `predict` method) and then refine the results with prompts, as shown below:

+

+```python

+from ultralytics import FastSAM

+from ultralytics.yolo.fastsam import FastSAMPrompt

+

+# Define image path and inference device

+IMAGE_PATH = 'ultralytics/assets/bus.jpg'

+DEVICE = 'cpu'

+

+# Create a FastSAM model

+model = FastSAM('FastSAM-s.pt') # or FastSAM-x.pt

+

+# Run inference on an image

+everything_results = model(IMAGE_PATH,

+ device=DEVICE,

+ retina_masks=True,

+ imgsz=1024,

+ conf=0.4,

+ iou=0.9)

+

+prompt_process = FastSAMPrompt(IMAGE_PATH, everything_results, device=DEVICE)

+

+# Everything prompt: keep all masks from the first stage (each prompt below reassigns ann)

+ann = prompt_process.everything_prompt()

+

+# Box prompt: bbox is [x1, y1, x2, y2] (default [0, 0, 0, 0])

+ann = prompt_process.box_prompt(bbox=[200, 200, 300, 300])

+

+# Text prompt: select the mask that best matches the description (uses CLIP)

+ann = prompt_process.text_prompt(text='a photo of a dog')

+

+# Point prompt: points is a list of [x, y] pairs, e.g. [[x1, y1], [x2, y2]] (default [[0, 0]])

+# pointlabel gives one label per point, e.g. [1, 0], where 1 = foreground and 0 = background (default [0])

+ann = prompt_process.point_prompt(points=[[200, 200]], pointlabel=[1])

+prompt_process.plot(annotations=ann, output='./')

+```

+

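+This snippet loads a pretrained model, runs "everything" inference on an image, and then selects masks with everything, box, text, and point prompts. Only the last result (`ann` from the point prompt) is plotted.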
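To make the point-prompt label convention (1 for foreground, 0 for background) concrete, here is a simplified, hypothetical selection rule. It is not FastSAM's actual algorithm: it keeps every mask that contains at least one foreground point and no background point, then merges the kept masks. `select_by_points` is an illustrative name, and masks are assumed to be an `(N, H, W)` boolean array indexed as `mask[y, x]`.

```python
import numpy as np


def select_by_points(masks, points, labels):
    """Merge masks that contain a foreground point (label 1) and no background point (label 0).

    masks: (N, H, W) boolean array; points: list of [x, y]; labels: list of 0/1 values.
    """
    selected = np.zeros(masks.shape[1:], dtype=bool)
    for mask in masks:
        # Collect the labels of all prompt points that fall inside this mask
        hits = [label for (x, y), label in zip(points, labels) if mask[y, x]]
        if 1 in hits and 0 not in hits:
            selected |= mask
    return selected


masks = np.zeros((3, 10, 10), dtype=bool)
masks[0, 0:4, 0:4] = True    # small object, top-left
masks[1, 5:10, 5:10] = True  # small object, bottom-right
masks[2, :, :] = True        # large mask covering the whole image

result = select_by_points(masks, points=[[2, 2], [7, 7]], labels=[1, 0])
print(result.sum())  # 16: only the top-left mask is kept
```

The background point at `[7, 7]` rejects both the bottom-right mask and the full-image mask, which is how negative points help remove oversized or unwanted regions.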

+

+#### Val Usage

+

+Validation of the model on a dataset can be done as follows:

+

+```python

+from ultralytics import FastSAM

+

+# Create a FastSAM model

+model = FastSAM('FastSAM-s.pt') # or FastSAM-x.pt

+

+# Validate the model

+results = model.val(data='coco8-seg.yaml')

+```

+

+Please note that FastSAM only supports detection and segmentation of a single object class, so it recognizes and segments all objects as the same class. When preparing a dataset, you therefore need to convert all object category IDs to 0.
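A minimal sketch of that conversion, assuming standard YOLO-format label files (one object per line, whitespace-separated, class ID first, followed by box or polygon coordinates). The function name `remap_labels_to_single_class` is hypothetical, and it rewrites files in place, so run it on a copy of your labels.

```python
import tempfile
from pathlib import Path


def remap_labels_to_single_class(label_dir):
    """Set the class ID of every object in every *.txt label file under label_dir to 0.

    Returns the number of files rewritten. Blank lines are dropped.
    """
    count = 0
    for path in Path(label_dir).glob('*.txt'):
        lines = []
        for line in path.read_text().splitlines():
            parts = line.split()
            if parts:  # skip blank lines
                parts[0] = '0'  # replace the class ID, keep the coordinates
                lines.append(' '.join(parts))
        path.write_text('\n'.join(lines) + ('\n' if lines else ''))
        count += 1
    return count


# Demo on a throwaway directory
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / 'img1.txt'
    sample.write_text('3 0.1 0.2 0.3 0.4\n17 0.5 0.6 0.7 0.8\n')
    remap_labels_to_single_class(tmp)
    print(sample.read_text())
```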

+

+### FastSAM Official Usage

+

+FastSAM is also available directly from the [https://github.com/CASIA-IVA-Lab/FastSAM](https://github.com/CASIA-IVA-Lab/FastSAM) repository. Here is a brief overview of the typical steps you might take to use FastSAM:

+

+#### Installation

+

+1. Clone the FastSAM repository:

+ ```shell

+ git clone https://github.com/CASIA-IVA-Lab/FastSAM.git

+ ```

+

+2. Create and activate a Conda environment with Python 3.9:

+ ```shell

+ conda create -n FastSAM python=3.9

+ conda activate FastSAM

+ ```

+

+3. Navigate to the cloned repository and install the required packages:

+ ```shell

+ cd FastSAM

+ pip install -r requirements.txt

+ ```

+

+4. Install the CLIP model:

+ ```shell

+ pip install git+https://github.com/openai/CLIP.git

+ ```

+

+#### Example Usage

+

+1. Download a [model checkpoint](https://drive.google.com/file/d/1m1sjY4ihXBU1fZXdQ-Xdj-mDltW-2Rqv/view?usp=sharing).

+

+2. Use FastSAM for inference. Example commands:

+

+ - Segment everything in an image:

+ ```shell

+ python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg

+ ```

+

+ - Segment specific objects using text prompt:

+ ```shell

+ python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg --text_prompt "the yellow dog"

+ ```

+

+ - Segment objects within a bounding box (provide box coordinates in xywh format):

+ ```shell

+ python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg --box_prompt "[570,200,230,400]"

+ ```

+

+ - Segment objects near specific points:

+ ```shell

+ python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg --point_prompt "[[520,360],[620,300]]" --point_label "[1,0]"

+ ```

+
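The official CLI takes `--box_prompt` in xywh format, while the Ultralytics `box_prompt(bbox=...)` call in the Python example expects `[x1, y1, x2, y2]`. Assuming xywh here means the top-left corner followed by width and height, a hypothetical helper converts between the two:

```python
def xywh_to_xyxy(box):
    """Convert a top-left [x, y, w, h] box to corner [x1, y1, x2, y2] format."""
    x, y, w, h = box
    return [x, y, x + w, y + h]


print(xywh_to_xyxy([570, 200, 230, 400]))  # [570, 200, 800, 600]
```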

+Additionally, you can try FastSAM through a [Colab demo](https://colab.research.google.com/drive/1oX14f6IneGGw612WgVlAiy91UHwFAvr9?usp=sharing) or on the [HuggingFace web demo](https://huggingface.co/spaces/An-619/FastSAM) for a visual experience.

+

+## Citations and Acknowledgements

+

+We would like to acknowledge the FastSAM authors for their significant contributions in the field of real-time instance segmentation:

+

+```bibtex

+@misc{zhao2023fast,

+ title={Fast Segment Anything},

+ author={Xu Zhao and Wenchao Ding and Yongqi An and Yinglong Du and Tao Yu and Min Li and Ming Tang and Jinqiao Wang},

+ year={2023},

+ eprint={2306.12156},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+The original FastSAM paper can be found on [arXiv](https://arxiv.org/abs/2306.12156). The authors have made their work publicly available, and the codebase can be accessed on [GitHub](https://github.com/CASIA-IVA-Lab/FastSAM). We appreciate their efforts in advancing the field and making their work accessible to the broader community.

diff --git a/docs/models/index.md b/docs/models/index.md

index cce8af13f..611cad7fb 100644

--- a/docs/models/index.md

+++ b/docs/models/index.md

@@ -17,24 +17,29 @@ In this documentation, we provide information on four major models:

5. [YOLOv7](./yolov7.md): Updated YOLO models released in 2022 by the authors of YOLOv4.

6. [YOLOv8](./yolov8.md): The latest version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

7. [Segment Anything Model (SAM)](./sam.md): Meta's Segment Anything Model (SAM).

-8. [YOLO-NAS](./yolo-nas.md): YOLO Neural Architecture Search (NAS) Models.

-9. [Realtime Detection Transformers (RT-DETR)](./rtdetr.md): Baidu's PaddlePaddle Realtime Detection Transformer (RT-DETR) models.

+8. [Fast Segment Anything Model (FastSAM)](./fast-sam.md): FastSAM by Image & Video Analysis Group, Institute of Automation, Chinese Academy of Sciences.

+9. [YOLO-NAS](./yolo-nas.md): YOLO Neural Architecture Search (NAS) Models.

+10. [Realtime Detection Transformers (RT-DETR)](./rtdetr.md): Baidu's PaddlePaddle Realtime Detection Transformer (RT-DETR) models.

-You can use these models directly in the Command Line Interface (CLI) or in a Python environment. Below are examples of how to use the models with CLI and Python:

+You can use many of these models directly in the Command Line Interface (CLI) or in a Python environment. Below are examples of how to use the models with CLI and Python:

## CLI Example

+Use the `model` argument to pass a model YAML such as `model=yolov8n.yaml` or a pretrained `*.pt` file such as `model=yolov8n.pt`.

+

```bash

-yolo task=detect mode=train model=yolov8n.yaml data=coco128.yaml epochs=100

+yolo task=detect mode=train model=yolov8n.pt data=coco128.yaml epochs=100

```

## Python Example

+PyTorch pretrained `*.pt` models can be passed to the `YOLO()`, `SAM()`, `NAS()` and `RTDETR()` classes to create a model instance in Python; `YOLO()` and `RTDETR()` also accept model YAML files:

+

```python

from ultralytics import YOLO

-model = YOLO("model.yaml") # build a YOLOv8n model from scratch

-# YOLO("model.pt") use pre-trained model if available

+model = YOLO("yolov8n.pt") # load a pretrained YOLOv8n model

+

model.info() # display model information

model.train(data="coco128.yaml", epochs=100) # train the model

```

diff --git a/docs/models/sam.md b/docs/models/sam.md

index 8dd1e35c2..79bbd01aa 100644

--- a/docs/models/sam.md

+++ b/docs/models/sam.md

@@ -30,13 +30,30 @@ For an in-depth look at the Segment Anything Model and the SA-1B dataset, please

The Segment Anything Model can be employed for a multitude of downstream tasks that go beyond its training data. This includes edge detection, object proposal generation, instance segmentation, and preliminary text-to-mask prediction. With prompt engineering, SAM can swiftly adapt to new tasks and data distributions in a zero-shot manner, establishing it as a versatile and potent tool for all your image segmentation needs.

-```python

-from ultralytics import SAM

-

-model = SAM('sam_b.pt')

-model.info() # display model information

-model.predict('path/to/image.jpg') # predict

-```

+!!! example "SAM prediction example"

+

+ The device is selected automatically: if a GPU is available it will be used, otherwise inference runs on the CPU.

+

+ === "Python"

+

+ ```python

+ from ultralytics import SAM

+

+ # Load a model

+ model = SAM('sam_b.pt')

+