# Coral Edge TPU on a Raspberry Pi with Ultralytics YOLOv8 🚀

<p align="center">

<img width="800" src="https://images.ctfassets.net/2lpsze4g694w/5XK2dV0w55U0TefijPli1H/bf0d119d77faef9a5d2cc0dad2aa4b42/Edge-TPU-USB-Accelerator-and-Pi.jpg?w=800" alt="Raspberry Pi single board computer with USB Edge TPU accelerator">

<img width="800" src="https://images.ctfassets.net/2lpsze4g694w/5XK2dV0w55U0TefijPli1H/bf0d119d77faef9a5d2cc0dad2aa4b42/Edge-TPU-USB-Accelerator-and-Pi.jpg" alt="Raspberry Pi single board computer with USB Edge TPU accelerator">

@@ -73,7 +73,7 @@ Here are some other benefits of data augmentation:

Common augmentation techniques include flipping, rotation, scaling, and color adjustments. Several libraries, such as Albumentations, Imgaug, and TensorFlow's ImageDataGenerator, can generate these augmentations.

<p align="center">

<img width="100%" src="https://i0.wp.com/ubiai.tools/wp-content/uploads/2023/11/UKwFg.jpg?fit=2204%2C775&ssl=1" alt="Overview of Data Augmentations">

<img width="100%" src="https://i0.wp.com/ubiai.tools/wp-content/uploads/2023/11/UKwFg.jpg" alt="Overview of Data Augmentations">

</p>

With respect to YOLOv8, you can [augment your custom dataset](../modes/train.md) by modifying the dataset configuration file, a .yaml file. In this file, you can add an augmentation section with parameters that specify how you want to augment your data.
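
As a rough illustration of one technique mentioned above, flipping, the sketch below mirrors an image together with its labels in plain Python. It assumes YOLO-format boxes, `(class_id, x_center, y_center, width, height)` normalized to `[0, 1]`. The function names are hypothetical, and this is not how Ultralytics implements augmentation internally.

```python
import random


def hflip_image(image):
    """Mirror a row-major image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def hflip_yolo_boxes(boxes):
    """Mirror normalized YOLO boxes: only x_center changes, to 1 - x_center."""
    return [(cls, 1.0 - x, y, w, h) for cls, x, y, w, h in boxes]


def random_hflip(image, boxes, p=0.5, rng=random):
    """Flip the image and its boxes together with probability p."""
    if rng.random() < p:
        return hflip_image(image), hflip_yolo_boxes(boxes)
    return image, boxes


# Toy 2x3 "image" and a single box centered at x = 0.25.
image = [[0, 1, 2], [3, 4, 5]]
boxes = [(0, 0.25, 0.5, 0.2, 0.4)]
flipped_image, flipped_boxes = random_hflip(image, boxes, p=1.0)
```

The point of the sketch is that geometric augmentations must transform the labels along with the pixels; a flipped image with unflipped boxes would teach the model the wrong locations.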

@@ -15,7 +15,7 @@ You can train [Ultralytics YOLOv8 models](https://github.com/ultralytics/ultraly

[Watsonx](https://www.ibm.com/watsonx) is IBM's cloud-based platform designed for commercial generative AI and scientific data. IBM Watsonx's three components - watsonx.ai, watsonx.data, and watsonx.governance - come together to create an end-to-end, trustworthy AI platform that can accelerate AI projects aimed at solving business problems. It provides powerful tools for building, training, and [deploying machine learning models](../guides/model-deployment-options.md) and makes it easy to connect with various data sources.

<p align="center">

<img width="800" src="https://cdn.stackoverflow.co/images/jo7n4k8s/production/48b67e6aec41f89031a3426cbd1f78322e6776cb-8800x4950.jpg?auto=format" alt="Overview of IBM Watsonx">

<img width="800" src="https://cdn.stackoverflow.co/images/jo7n4k8s/production/48b67e6aec41f89031a3426cbd1f78322e6776cb-8800x4950.jpg" alt="Overview of IBM Watsonx">

</p>

Its user-friendly interface and collaborative capabilities streamline the development process and help with efficient model management and deployment. Whether for computer vision, predictive analytics, natural language processing, or other AI applications, IBM Watsonx provides the tools and support needed to drive innovation.

Developed by Baidu, [PaddlePaddle](https://www.paddlepaddle.org.cn/en) (**PA**rallel **D**istributed **D**eep **LE**arning) is China's first open-source deep learning platform. Unlike some frameworks built mainly for research, PaddlePaddle prioritizes ease of use and smooth integration across industries.

@@ -53,9 +53,9 @@ Here is the comparison of the whole pipeline:

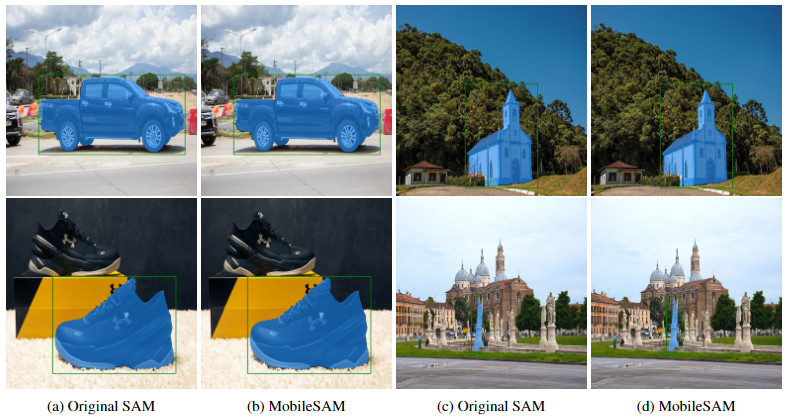

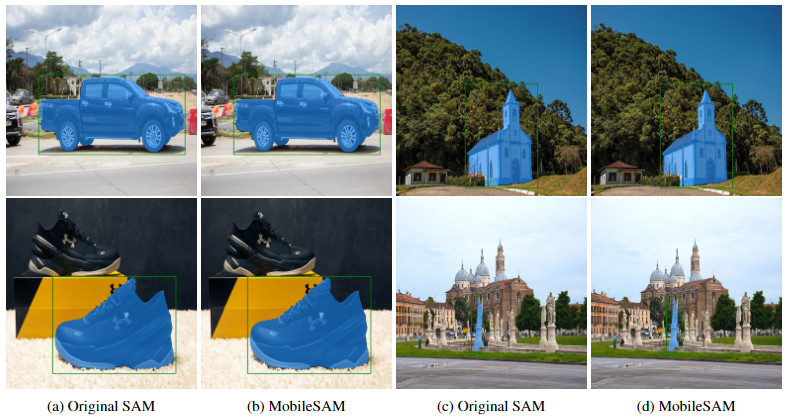

The performance of MobileSAM and the original SAM is demonstrated using both a point and a box as prompts.

With its superior performance, MobileSAM is approximately 5 times smaller and 7 times faster than the current FastSAM. More details are available at the [MobileSAM project page](https://github.com/ChaoningZhang/MobileSAM).

@@ -8,7 +8,7 @@ keywords: SAM 2, Segment Anything, video segmentation, image segmentation, promp

SAM 2, the successor to Meta's [Segment Anything Model (SAM)](sam.md), is a cutting-edge tool designed for comprehensive object segmentation in both images and videos. It excels in handling complex visual data through a unified, promptable model architecture that supports real-time processing and zero-shot generalization.

## Key Features

@@ -54,7 +54,7 @@ SAM 2 sets a new benchmark in the field, outperforming previous models on variou

- **Memory Mechanism**: Includes a memory encoder, memory bank, and memory attention module. These components collectively store and utilize information from past frames, enabling the model to maintain consistent object tracking over time.

- **Mask Decoder**: Generates the final segmentation masks based on the encoded image features and prompts. In video, it also uses memory context to ensure accurate tracking across frames.
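
The memory mechanism described above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not SAM 2's actual implementation: the `MemoryBank` class and `memory_attention` function are hypothetical names, per-frame features are short lists of floats, the memory encoder is omitted, and attention is a simple scaled dot-product softmax over stored frames.

```python
import math
from collections import deque


class MemoryBank:
    """Fixed-size FIFO store of per-frame feature vectors (hypothetical)."""

    def __init__(self, capacity):
        # deque(maxlen=...) silently drops the oldest frame when full.
        self.frames = deque(maxlen=capacity)

    def add(self, feature):
        self.frames.append(list(feature))


def memory_attention(query, bank):
    """Blend stored frame features, weighted by similarity to the query."""
    if not bank.frames:
        return list(query)  # no past frames yet: nothing to attend to
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, mem)) / scale for mem in bank.frames]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    return [
        sum(w * mem[i] for w, mem in zip(weights, bank.frames))
        for i in range(len(query))
    ]


bank = MemoryBank(capacity=2)
for feature in ([1.0, 0.0], [0.0, 1.0], [1.0, 1.0]):
    bank.add(feature)  # the first frame is evicted once capacity is exceeded

context = memory_attention([1.0, 0.0], bank)
```

In this sketch the bounded bank keeps memory use constant over a long video, and the attention step lets the current frame draw more heavily on the past frames that resemble it most.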