diff --git a/.github/workflows/publish.yml b/.github/workflows/publish.yml

index 0f183abafd..3c0c255081 100644

--- a/.github/workflows/publish.yml

+++ b/.github/workflows/publish.yml

@@ -168,6 +168,7 @@ jobs:

PERSONAL_ACCESS_TOKEN: ${{ secrets.PERSONAL_ACCESS_TOKEN }}

INDEXNOW_KEY: ${{ secrets.INDEXNOW_KEY_DOCS }}

run: |

+ pip install black

export JUPYTER_PLATFORM_DIRS=1

python docs/build_docs.py

git clone https://github.com/ultralytics/docs.git docs-repo

diff --git a/docs/en/guides/model-monitoring-and-maintenance.md b/docs/en/guides/model-monitoring-and-maintenance.md

index 7074de6de7..a301cf16b9 100644

--- a/docs/en/guides/model-monitoring-and-maintenance.md

+++ b/docs/en/guides/model-monitoring-and-maintenance.md

@@ -135,3 +135,38 @@ Using these resources will help you solve challenges and stay up-to-date with th

## Key Takeaways

We covered key tips for monitoring, maintaining, and documenting your computer vision models. Regular updates and re-training help the model adapt to new data patterns. Detecting and fixing data drift helps your model stay accurate. Continuous monitoring catches issues early, and good documentation makes collaboration and future updates easier. Following these steps will help your computer vision project stay successful and effective over time.

+

+## FAQ

+

+### How do I monitor the performance of my deployed computer vision model?

+

+Monitoring the performance of your deployed computer vision model is crucial to ensure its accuracy and reliability over time. You can use tools like [Prometheus](https://prometheus.io/), [Grafana](https://grafana.com/), and [Evidently AI](https://www.evidentlyai.com/) to track key metrics, detect anomalies, and identify data drift. Regularly monitor inputs and outputs, set up alerts for unusual behavior, and use diverse data sources to get a comprehensive view of your model's performance. For more details, check out our section on [Model Monitoring](#model-monitoring-is-key).

+

+### What are the best practices for maintaining computer vision models after deployment?

+

+Maintaining computer vision models involves regular updates, retraining, and monitoring to ensure continued accuracy and relevance. Best practices include:

+

+- **Continuous Monitoring**: Track performance metrics and data quality regularly.

+- **Data Drift Detection**: Use statistical techniques to identify changes in data distributions.

+- **Regular Updates and Retraining**: Implement incremental learning or periodic full retraining based on data changes.

+- **Documentation**: Maintain detailed documentation of model architecture, training processes, and evaluation metrics. For more insights, visit our [Model Maintenance](#model-maintenance) section.

+

+### Why is data drift detection important for AI models?

+

+Data drift detection is essential because it helps identify when the statistical properties of the input data change over time, which can degrade model performance. Techniques like continuous monitoring, statistical tests (e.g., Kolmogorov-Smirnov test), and feature drift analysis can help spot issues early. Addressing data drift ensures that your model remains accurate and relevant in changing environments. Learn more about data drift detection in our [Data Drift Detection](#data-drift-detection) section.

+

+### What tools can I use for anomaly detection in computer vision models?

+

+For anomaly detection in computer vision models, tools like [Prometheus](https://prometheus.io/), [Grafana](https://grafana.com/), and [Evidently AI](https://www.evidentlyai.com/) are highly effective. These tools can help you set up alert systems to detect unusual data points or patterns that deviate from expected behavior. Configurable alerts and standardized messages can help you respond quickly to potential issues. Explore more in our [Anomaly Detection and Alert Systems](#anomaly-detection-and-alert-systems) section.

+

+### How can I document my computer vision project effectively?

+

+Effective documentation of a computer vision project should include:

+

+- **Project Overview**: High-level summary, problem statement, and solution approach.

+- **Model Architecture**: Details of the model structure, components, and hyperparameters.

+- **Data Preparation**: Information on data sources, preprocessing steps, and transformations.

+- **Training Process**: Description of the training procedure, datasets used, and challenges encountered.

+- **Evaluation Metrics**: Metrics used for performance evaluation and analysis.

+- **Deployment Steps**: Steps taken for model deployment and any specific challenges.

+- **Monitoring and Maintenance Procedure**: Plan for ongoing monitoring and maintenance. For more comprehensive guidelines, refer to our [Documentation](#documentation) section.

diff --git a/docs/en/guides/steps-of-a-cv-project.md b/docs/en/guides/steps-of-a-cv-project.md

index a734ecf29b..a1fbdb5e97 100644

--- a/docs/en/guides/steps-of-a-cv-project.md

+++ b/docs/en/guides/steps-of-a-cv-project.md

@@ -10,6 +10,17 @@ keywords: Computer Vision, AI, Object Detection, Image Classification, Instance

Computer vision is a subfield of artificial intelligence (AI) that helps computers see and understand the world like humans do. It processes and analyzes images or videos to extract information, recognize patterns, and make decisions based on that data.

+

+

+

+

+ Watch: How to Do Computer Vision Projects | A Step-by-Step Guide

+

+

Computer vision techniques like [object detection](../tasks/detect.md), [image classification](../tasks/classify.md), and [instance segmentation](../tasks/segment.md) can be applied across various industries, from [autonomous driving](https://www.ultralytics.com/solutions/ai-in-self-driving) to [medical imaging](https://www.ultralytics.com/solutions/ai-in-healthcare) to gain valuable insights.

diff --git a/docs/en/hub/index.md b/docs/en/hub/index.md

index a88fce70ca..cc5177ca18 100644

--- a/docs/en/hub/index.md

+++ b/docs/en/hub/index.md

@@ -76,3 +76,52 @@ We hope that the resources here will help you get the most out of HUB. Please br

- [**Ultralytics HUB App**](app/index.md): Learn about the Ultralytics HUB App, which allows you to run models directly on your mobile device.

- [**iOS**](app/ios.md): Explore CoreML acceleration on iPhones and iPads.

- [**Android**](app/android.md): Explore TFLite acceleration on Android devices.

+

+## FAQ

+

+### How do I get started with Ultralytics HUB for training YOLO models?

+

+To get started with [Ultralytics HUB](https://ultralytics.com/hub), follow these steps:

+

+1. **Sign Up:** Create an account on the [Ultralytics HUB](https://ultralytics.com/hub).

+2. **Upload Dataset:** Navigate to the [Datasets](datasets.md) section to upload your custom dataset.

+3. **Train Model:** Go to the [Models](models.md) section and select a pre-trained YOLOv5 or YOLOv8 model to start training.

+4. **Deploy Model:** Once trained, preview and deploy your model using the [Ultralytics HUB App](app/index.md) for real-time tasks.

+

+For a detailed guide, refer to the [Quickstart](quickstart.md) page.

+

+### What are the benefits of using Ultralytics HUB over other AI platforms?

+

+[Ultralytics HUB](https://ultralytics.com/hub) offers several unique benefits:

+

+- **User-Friendly Interface:** Intuitive design for easy dataset uploads and model training.

+- **Pre-Trained Models:** Access to a variety of pre-trained YOLOv5 and YOLOv8 models.

+- **Cloud Training:** Seamless cloud training capabilities, detailed on the [Cloud Training](cloud-training.md) page.

+- **Real-Time Deployment:** Effortlessly deploy models for real-time applications using the [Ultralytics HUB App](app/index.md).

+- **Team Collaboration:** Collaborate with your team efficiently through the [Teams](teams.md) feature.

+

+Explore more about the advantages in our [Ultralytics HUB Blog](https://www.ultralytics.com/blog/ultralytics-hub-a-game-changer-for-computer-vision).

+

+### Can I use Ultralytics HUB for object detection on mobile devices?

+

+Yes, Ultralytics HUB supports object detection on mobile devices. You can run YOLOv5 and YOLOv8 models on both iOS and Android devices using the Ultralytics HUB App. For more details:

+

+- **iOS:** Learn about CoreML acceleration on iPhones and iPads in the [iOS](app/ios.md) section.

+- **Android:** Explore TFLite acceleration on Android devices in the [Android](app/android.md) section.

+

+### How do I manage and organize my projects in Ultralytics HUB?

+

+Ultralytics HUB allows you to manage and organize your projects efficiently. You can group your models into projects for better organization. To learn more:

+

+- Visit the [Projects](projects.md) page for detailed instructions on creating, editing, and managing projects.

+- Use the [Teams](teams.md) feature to collaborate with team members and share resources.

+

+### What integrations are available with Ultralytics HUB?

+

+Ultralytics HUB offers seamless integrations with various platforms to enhance your machine learning workflows. Some key integrations include:

+

+- **Roboflow:** For dataset management and model training. Learn more on the [Integrations](integrations.md) page.

+- **Google Colab:** Efficiently train models using Google Colab's cloud-based environment. Detailed steps are available in the [Google Colab](https://docs.ultralytics.com/integrations/google-colab) section.

+- **Weights & Biases:** For enhanced experiment tracking and visualization. Explore the [Weights & Biases](https://docs.ultralytics.com/integrations/weights-biases) integration.

+

+For a complete list of integrations, refer to the [Integrations](integrations.md) page.

diff --git a/docs/en/integrations/comet.md b/docs/en/integrations/comet.md

index bc7cc05af5..7c9ab2a9c5 100644

--- a/docs/en/integrations/comet.md

+++ b/docs/en/integrations/comet.md

@@ -53,7 +53,7 @@ Then, you can initialize your Comet project. Comet will automatically detect the

```python

import comet_ml

-comet_ml.init(project_name="comet-example-yolov8-coco128")

+comet_ml.login(project_name="comet-example-yolov8-coco128")

```

If you are using a Google Colab notebook, the code above will prompt you to enter your API key for initialization.

@@ -72,7 +72,7 @@ Before diving into the usage instructions, be sure to check out the range of [YO

# Load a model

model = YOLO("yolov8n.pt")

- # train the model

+ # Train the model

results = model.train(

data="coco8.yaml",

project="comet-example-yolov8-coco128",

@@ -200,7 +200,7 @@ To integrate Comet ML with Ultralytics YOLOv8, follow these steps:

```python

import comet_ml

- comet_ml.init(project_name="comet-example-yolov8-coco128")

+ comet_ml.login(project_name="comet-example-yolov8-coco128")

```

4. **Train your YOLOv8 model and log metrics**:

@@ -210,7 +210,12 @@ To integrate Comet ML with Ultralytics YOLOv8, follow these steps:

model = YOLO("yolov8n.pt")

results = model.train(

- data="coco8.yaml", project="comet-example-yolov8-coco128", batch=32, save_period=1, save_json=True, epochs=3

+ data="coco8.yaml",

+ project="comet-example-yolov8-coco128",

+ batch=32,

+ save_period=1,

+ save_json=True,

+ epochs=3,

)

```

@@ -255,7 +260,7 @@ Comet ML allows for extensive customization of its logging behavior using enviro

os.environ["COMET_EVAL_LOG_CONFUSION_MATRIX"] = "false"

```

-For more customization options, refer to the [Customizing Comet ML Logging](#customizing-comet-ml-logging) section.

+Refer to the [Customizing Comet ML Logging](#customizing-comet-ml-logging) section for more customization options.

### How do I view detailed metrics and visualizations of my YOLOv8 training on Comet ML?

diff --git a/docs/en/integrations/ibm-watsonx.md b/docs/en/integrations/ibm-watsonx.md

index da53b9c048..e6fae564d7 100644

--- a/docs/en/integrations/ibm-watsonx.md

+++ b/docs/en/integrations/ibm-watsonx.md

@@ -321,3 +321,90 @@ We explored IBM Watsonx key features, and how to train a YOLOv8 model using IBM

For further details on usage, visit [IBM Watsonx official documentation](https://www.ibm.com/watsonx).

Also, be sure to check out the [Ultralytics integration guide page](./index.md), to learn more about different exciting integrations.

+

+## FAQ

+

+### How do I train a YOLOv8 model using IBM Watsonx?

+

+To train a YOLOv8 model using IBM Watsonx, follow these steps:

+

+1. **Set Up Your Environment**: Create an IBM Cloud account and set up a Watsonx.ai project. Use a Jupyter Notebook for your coding environment.

+2. **Install Libraries**: Install necessary libraries like `torch`, `opencv`, and `ultralytics`.

+3. **Load Data**: Use the Kaggle API to load your dataset into Watsonx.

+4. **Preprocess Data**: Organize your dataset into the required directory structure and update the `.yaml` configuration file.

+5. **Train the Model**: Use the YOLO command-line interface to train your model with specific parameters like `epochs`, `batch size`, and `learning rate`.

+6. **Test and Evaluate**: Run inference to test the model and evaluate its performance using metrics like precision and recall.

+

+For detailed instructions, refer to our [YOLOv8 Model Training guide](../modes/train.md).

+

+### What are the key features of IBM Watsonx for AI model training?

+

+IBM Watsonx offers several key features for AI model training:

+

+- **Watsonx.ai**: Provides tools for AI development, including access to IBM-supported custom models and third-party models like Llama 3. It includes the Prompt Lab, Tuning Studio, and Flows Engine for comprehensive AI lifecycle management.

+- **Watsonx.data**: Supports cloud and on-premises deployments, offering centralized data access, efficient query engines like Presto and Spark, and an AI-powered semantic layer.

+- **Watsonx.governance**: Automates compliance, manages risk with alerts, and provides tools for detecting issues like bias and drift. It also includes dashboards and reporting tools for collaboration.

+

+For more information, visit the [IBM Watsonx official documentation](https://www.ibm.com/watsonx).

+

+### Why should I use IBM Watsonx for training Ultralytics YOLOv8 models?

+

+IBM Watsonx is an excellent choice for training Ultralytics YOLOv8 models due to its comprehensive suite of tools that streamline the AI lifecycle. Key benefits include:

+

+- **Scalability**: Easily scale your model training with IBM Cloud services.

+- **Integration**: Seamlessly integrate with various data sources and APIs.

+- **User-Friendly Interface**: Simplifies the development process with a collaborative and intuitive interface.

+- **Advanced Tools**: Access to powerful tools like the Prompt Lab, Tuning Studio, and Flows Engine for enhancing model performance.

+

+Learn more about [Ultralytics YOLOv8](https://github.com/ultralytics/ultralytics) and how to train models using IBM Watsonx in our [integration guide](./index.md).

+

+### How can I preprocess my dataset for YOLOv8 training on IBM Watsonx?

+

+To preprocess your dataset for YOLOv8 training on IBM Watsonx:

+

+1. **Organize Directories**: Ensure your dataset follows the YOLO directory structure with separate subdirectories for images and labels within the train/val/test split.

+2. **Update .yaml File**: Modify the `.yaml` configuration file to reflect the new directory structure and class names.

+3. **Run Preprocessing Script**: Use a Python script to reorganize your dataset and update the `.yaml` file accordingly.

+

+Here's a sample script to organize your dataset:

+

+```python

+import os

+import shutil

+

+

+def organize_files(directory):

+ for subdir in ["train", "test", "val"]:

+ subdir_path = os.path.join(directory, subdir)

+ if not os.path.exists(subdir_path):

+ continue

+

+ images_dir = os.path.join(subdir_path, "images")

+ labels_dir = os.path.join(subdir_path, "labels")

+

+ os.makedirs(images_dir, exist_ok=True)

+ os.makedirs(labels_dir, exist_ok=True)

+

+ for filename in os.listdir(subdir_path):

+ if filename.endswith(".txt"):

+ shutil.move(os.path.join(subdir_path, filename), os.path.join(labels_dir, filename))

+ elif filename.endswith(".jpg") or filename.endswith(".png") or filename.endswith(".jpeg"):

+ shutil.move(os.path.join(subdir_path, filename), os.path.join(images_dir, filename))

+

+

+if __name__ == "__main__":

+ directory = f"{work_dir}/trash_ICRA19/dataset"

+ organize_files(directory)

+```

+

+For more details, refer to our [data preprocessing guide](../guides/preprocessing_annotated_data.md).

+

+### What are the prerequisites for training a YOLOv8 model on IBM Watsonx?

+

+Before you start training a YOLOv8 model on IBM Watsonx, ensure you have the following prerequisites:

+

+- **IBM Cloud Account**: Create an account on IBM Cloud to access Watsonx.ai.

+- **Kaggle Account**: For loading datasets, you'll need a Kaggle account and an API key.

+- **Jupyter Notebook**: Set up a Jupyter Notebook environment within Watsonx.ai for coding and model training.

+

+For more information on setting up your environment, visit our [Ultralytics Installation guide](../quickstart.md).

diff --git a/docs/en/integrations/jupyterlab.md b/docs/en/integrations/jupyterlab.md

index 8e2a68029f..9a67394d63 100644

--- a/docs/en/integrations/jupyterlab.md

+++ b/docs/en/integrations/jupyterlab.md

@@ -108,3 +108,102 @@ We've explored how JupyterLab can be a powerful tool for experimenting with Ultr

For more details, visit the [JupyterLab FAQ Page](https://jupyterlab.readthedocs.io/en/stable/getting_started/faq.html).

Interested in more YOLOv8 integrations? Check out the [Ultralytics integration guide](./index.md) to explore additional tools and capabilities for your machine learning projects.

+

+## FAQ

+

+### How do I use JupyterLab to train a YOLOv8 model?

+

+To train a YOLOv8 model using JupyterLab:

+

+1. Install JupyterLab and the Ultralytics package:

+

+ ```python

+ pip install jupyterlab ultralytics

+ ```

+

+2. Launch JupyterLab and open a new notebook.

+

+3. Import the YOLO model and load a pretrained model:

+

+ ```python

+ from ultralytics import YOLO

+

+ model = YOLO("yolov8n.pt")

+ ```

+

+4. Train the model on your custom dataset:

+

+ ```python

+ results = model.train(data="path/to/your/data.yaml", epochs=100, imgsz=640)

+ ```

+

+5. Visualize training results using JupyterLab's built-in plotting capabilities:

+ ```python

+ %matplotlib inline

+ from ultralytics.utils.plotting import plot_results

+ plot_results(results)

+ ```

+

+JupyterLab's interactive environment allows you to easily modify parameters, visualize results, and iterate on your model training process.

+

+### What are the key features of JupyterLab that make it suitable for YOLOv8 projects?

+

+JupyterLab offers several features that make it ideal for YOLOv8 projects:

+

+1. Interactive code execution: Test and debug YOLOv8 code snippets in real-time.

+2. Integrated file browser: Easily manage datasets, model weights, and configuration files.

+3. Flexible layout: Arrange multiple notebooks, terminals, and output windows side-by-side for efficient workflow.

+4. Rich output display: Visualize YOLOv8 detection results, training curves, and model performance metrics inline.

+5. Markdown support: Document your YOLOv8 experiments and findings with rich text and images.

+6. Extension ecosystem: Enhance functionality with extensions for version control, [remote computing](google-colab.md), and more.

+

+These features allow for a seamless development experience when working with YOLOv8 models, from data preparation to model deployment.

+

+### How can I optimize YOLOv8 model performance using JupyterLab?

+

+To optimize YOLOv8 model performance in JupyterLab:

+

+1. Use the autobatch feature to determine the optimal batch size:

+

+ ```python

+ from ultralytics.utils.autobatch import autobatch

+

+ optimal_batch_size = autobatch(model)

+ ```

+

+2. Implement [hyperparameter tuning](../guides/hyperparameter-tuning.md) using libraries like Ray Tune:

+

+ ```python

+ from ultralytics.utils.tuner import run_ray_tune

+

+ best_results = run_ray_tune(model, data="path/to/data.yaml")

+ ```

+

+3. Visualize and analyze model metrics using JupyterLab's plotting capabilities:

+

+ ```python

+ from ultralytics.utils.plotting import plot_results

+

+ plot_results(results.results_dict)

+ ```

+

+4. Experiment with different model architectures and [export formats](../modes/export.md) to find the best balance of speed and accuracy for your specific use case.

+

+JupyterLab's interactive environment allows for quick iterations and real-time feedback, making it easier to optimize your YOLOv8 models efficiently.

+

+### How do I handle common issues when working with JupyterLab and YOLOv8?

+

+When working with JupyterLab and YOLOv8, you might encounter some common issues. Here's how to handle them:

+

+1. GPU memory issues:

+

+ - Use `torch.cuda.empty_cache()` to clear GPU memory between runs.

+ - Adjust batch size or image size to fit your GPU memory.

+

+2. Package conflicts:

+

+ - Create a separate conda environment for your YOLOv8 projects to avoid conflicts.

+ - Use `!pip install package_name` in a notebook cell to install missing packages.

+

+3. Kernel crashes:

+ - Restart the kernel and run cells one by one to identify the problematic code.

diff --git a/docs/en/integrations/kaggle.md b/docs/en/integrations/kaggle.md

index 12b7468158..3ac789cd59 100644

--- a/docs/en/integrations/kaggle.md

+++ b/docs/en/integrations/kaggle.md

@@ -90,3 +90,50 @@ We've seen how Kaggle can boost your YOLOv8 projects by providing free access to

For more details, visit [Kaggle's documentation](https://www.kaggle.com/docs).

Interested in more YOLOv8 integrations? Check out the[ Ultralytics integration guide](https://docs.ultralytics.com/integrations/) to explore additional tools and capabilities for your machine learning projects.

+

+## FAQ

+

+### How do I train a YOLOv8 model on Kaggle?

+

+Training a YOLOv8 model on Kaggle is straightforward. First, access the [Kaggle YOLOv8 Notebook](https://www.kaggle.com/ultralytics/yolov8). Sign in to your Kaggle account, copy and edit the notebook, and select a GPU under the accelerator settings. Run the notebook cells to start training. For more detailed steps, refer to our [YOLOv8 Model Training guide](../modes/train.md).

+

+### What are the benefits of using Kaggle for YOLOv8 model training?

+

+Kaggle offers several advantages for training YOLOv8 models:

+

+- **Free GPU Access**: Utilize powerful GPUs like Nvidia Tesla P100 or T4 x2 for up to 30 hours per week.

+- **Pre-installed Libraries**: Libraries like TensorFlow and PyTorch are pre-installed, simplifying the setup.

+- **Community Collaboration**: Engage with a vast community of data scientists and machine learning enthusiasts.

+- **Version Control**: Easily manage different versions of your notebooks and revert to previous versions if needed.

+

+For more details, visit our [Ultralytics integration guide](https://docs.ultralytics.com/integrations/).

+

+### What common issues might I encounter when using Kaggle for YOLOv8, and how can I resolve them?

+

+Common issues include:

+

+- **Access to GPUs**: Ensure you activate a GPU in your notebook settings. Kaggle allows up to 30 hours of GPU usage per week.

+- **Dataset Licenses**: Check the license of each dataset to understand usage restrictions.

+- **Saving and Committing Notebooks**: Click "Save Version" to save your notebook's state and access output files from the Output tab.

+- **Collaboration**: Kaggle supports asynchronous collaboration; multiple users cannot edit a notebook simultaneously.

+

+For more troubleshooting tips, see our [Common Issues guide](../guides/yolo-common-issues.md).

+

+### Why should I choose Kaggle over other platforms like Google Colab for training YOLOv8 models?

+

+Kaggle offers unique features that make it an excellent choice:

+

+- **Public Notebooks**: Share your work with the community for feedback and collaboration.

+- **Free Access to TPUs**: Speed up training with powerful TPUs without extra costs.

+- **Comprehensive History**: Track changes over time with a detailed history of notebook commits.

+- **Resource Availability**: Significant resources are provided for each notebook session, including 12 hours of execution time for CPU and GPU sessions.

+ For a comparison with Google Colab, refer to our [Google Colab guide](./google-colab.md).

+

+### How can I revert to a previous version of my Kaggle notebook?

+

+To revert to a previous version:

+

+1. Open the notebook and click on the three vertical dots in the top right corner.

+2. Select "View Versions."

+3. Find the version you want to revert to, click on the "..." menu next to it, and select "Revert to Version."

+4. Click "Save Version" to commit the changes.

diff --git a/docs/en/models/index.md b/docs/en/models/index.md

index d848e78920..0b294801d5 100644

--- a/docs/en/models/index.md

+++ b/docs/en/models/index.md

@@ -20,12 +20,13 @@ Here are some of the key models supported:

6. **[YOLOv8](yolov8.md) NEW 🚀**: The latest version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

7. **[YOLOv9](yolov9.md)**: An experimental model trained on the Ultralytics [YOLOv5](yolov5.md) codebase implementing Programmable Gradient Information (PGI).

8. **[YOLOv10](yolov10.md)**: By Tsinghua University, featuring NMS-free training and efficiency-accuracy driven architecture, delivering state-of-the-art performance and latency.

-9. **[Segment Anything Model (SAM)](sam.md)**: Meta's Segment Anything Model (SAM).

-10. **[Mobile Segment Anything Model (MobileSAM)](mobile-sam.md)**: MobileSAM for mobile applications, by Kyung Hee University.

-11. **[Fast Segment Anything Model (FastSAM)](fast-sam.md)**: FastSAM by Image & Video Analysis Group, Institute of Automation, Chinese Academy of Sciences.

-12. **[YOLO-NAS](yolo-nas.md)**: YOLO Neural Architecture Search (NAS) Models.

-13. **[Realtime Detection Transformers (RT-DETR)](rtdetr.md)**: Baidu's PaddlePaddle Realtime Detection Transformer (RT-DETR) models.

-14. **[YOLO-World](yolo-world.md)**: Real-time Open Vocabulary Object Detection models from Tencent AI Lab.

+9. **[Segment Anything Model (SAM)](sam.md)**: Meta's original Segment Anything Model (SAM).

+10. **[Segment Anything Model 2 (SAM2)](sam-2.md)**: The next generation of Meta's Segment Anything Model (SAM) for videos and images.

+11. **[Mobile Segment Anything Model (MobileSAM)](mobile-sam.md)**: MobileSAM for mobile applications, by Kyung Hee University.

+12. **[Fast Segment Anything Model (FastSAM)](fast-sam.md)**: FastSAM by Image & Video Analysis Group, Institute of Automation, Chinese Academy of Sciences.

+13. **[YOLO-NAS](yolo-nas.md)**: YOLO Neural Architecture Search (NAS) Models.

+14. **[Realtime Detection Transformers (RT-DETR)](rtdetr.md)**: Baidu's PaddlePaddle Realtime Detection Transformer (RT-DETR) models.

+15. **[YOLO-World](yolo-world.md)**: Real-time Open Vocabulary Object Detection models from Tencent AI Lab.

diff --git a/docs/en/models/sam-2.md b/docs/en/models/sam-2.md

new file mode 100644

index 0000000000..001bd89b60

--- /dev/null

+++ b/docs/en/models/sam-2.md

@@ -0,0 +1,349 @@

+---

+comments: true

+description: Discover SAM 2, the next generation of Meta's Segment Anything Model, supporting real-time promptable segmentation in both images and videos with state-of-the-art performance. Learn about its key features, datasets, and how to use it.

+keywords: SAM 2, Segment Anything, video segmentation, image segmentation, promptable segmentation, zero-shot performance, SA-V dataset, Ultralytics, real-time segmentation, AI, machine learning

+---

+

+# SAM 2: Segment Anything Model 2

+

+!!! Note "🚧 SAM 2 Integration In Progress 🚧"

+

+ The SAM 2 features described in this documentation are currently not enabled in the `ultralytics` package. The Ultralytics team is actively working on integrating SAM 2, and these capabilities should be available soon. We appreciate your patience as we work to implement this exciting new model.

+

+SAM 2, the successor to Meta's [Segment Anything Model (SAM)](sam.md), is a cutting-edge tool designed for comprehensive object segmentation in both images and videos. It excels in handling complex visual data through a unified, promptable model architecture that supports real-time processing and zero-shot generalization.

+

+

+

+## Key Features

+

+### Unified Model Architecture

+

+SAM 2 combines the capabilities of image and video segmentation in a single model. This unification simplifies deployment and allows for consistent performance across different media types. It leverages a flexible prompt-based interface, enabling users to specify objects of interest through various prompt types, such as points, bounding boxes, or masks.

+

+### Real-Time Performance

+

+The model achieves real-time inference speeds, processing approximately 44 frames per second. This makes SAM 2 suitable for applications requiring immediate feedback, such as video editing and augmented reality.

+

+### Zero-Shot Generalization

+

+SAM 2 can segment objects it has never encountered before, demonstrating strong zero-shot generalization. This is particularly useful in diverse or evolving visual domains where pre-defined categories may not cover all possible objects.

+

+### Interactive Refinement

+

+Users can iteratively refine the segmentation results by providing additional prompts, allowing for precise control over the output. This interactivity is essential for fine-tuning results in applications like video annotation or medical imaging.

+

+### Advanced Handling of Visual Challenges

+

+SAM 2 includes mechanisms to manage common video segmentation challenges, such as object occlusion and reappearance. It uses a sophisticated memory mechanism to keep track of objects across frames, ensuring continuity even when objects are temporarily obscured or exit and re-enter the scene.

+

+For a deeper understanding of SAM 2's architecture and capabilities, explore the [SAM 2 research paper](https://arxiv.org/abs/2401.12741).

+

+## Performance and Technical Details

+

+SAM 2 sets a new benchmark in the field, outperforming previous models on various metrics:

+

+| Metric | SAM 2 | Previous SOTA |

+| ---------------------------------- | ------------- | ------------- |

+| **Interactive Video Segmentation** | **Best** | - |

+| **Human Interactions Required** | **3x fewer** | Baseline |

+| **Image Segmentation Accuracy** | **Improved** | SAM |

+| **Inference Speed** | **6x faster** | SAM |

+

+## Model Architecture

+

+### Core Components

+

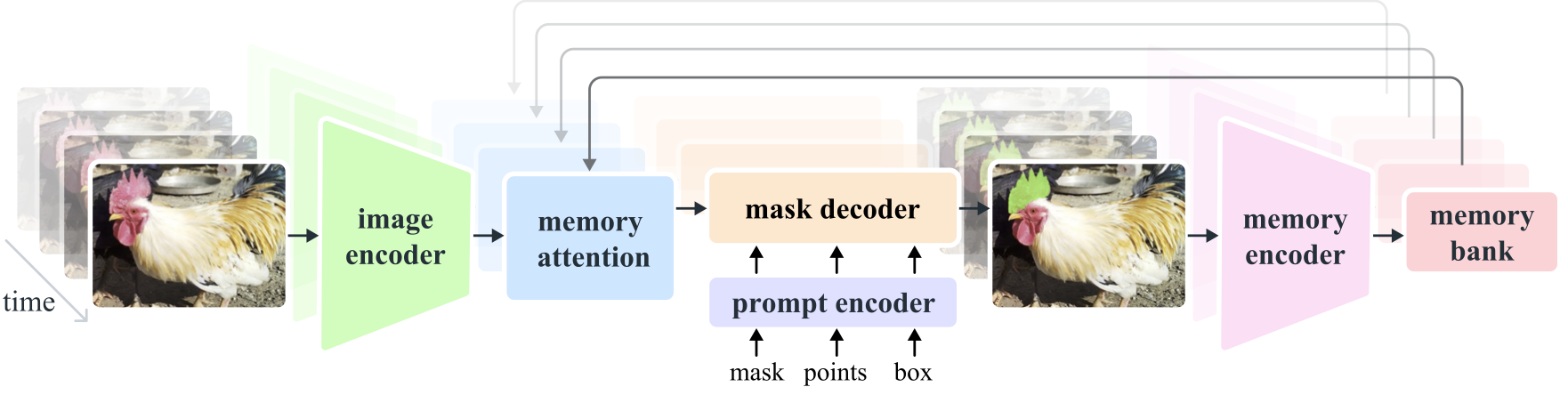

+- **Image and Video Encoder**: Utilizes a transformer-based architecture to extract high-level features from both images and video frames. This component is responsible for understanding the visual content at each timestep.

+- **Prompt Encoder**: Processes user-provided prompts (points, boxes, masks) to guide the segmentation task. This allows SAM 2 to adapt to user input and target specific objects within a scene.

+- **Memory Mechanism**: Includes a memory encoder, memory bank, and memory attention module. These components collectively store and utilize information from past frames, enabling the model to maintain consistent object tracking over time.

+- **Mask Decoder**: Generates the final segmentation masks based on the encoded image features and prompts. In video, it also uses memory context to ensure accurate tracking across frames.

+

+

+

+### Memory Mechanism and Occlusion Handling

+

+The memory mechanism allows SAM 2 to handle temporal dependencies and occlusions in video data. As objects move and interact, SAM 2 records their features in a memory bank. When an object becomes occluded, the model can rely on this memory to predict its position and appearance when it reappears. The occlusion head specifically handles scenarios where objects are not visible, predicting the likelihood of an object being occluded.

+

+### Multi-Mask Ambiguity Resolution

+

+In situations with ambiguity (e.g., overlapping objects), SAM 2 can generate multiple mask predictions. This feature is crucial for accurately representing complex scenes where a single mask might not sufficiently describe the scene's nuances.

+

+## SA-V Dataset

+

+The SA-V dataset, developed for SAM 2's training, is one of the largest and most diverse video segmentation datasets available. It includes:

+

+- **51,000+ Videos**: Captured across 47 countries, providing a wide range of real-world scenarios.

+- **600,000+ Mask Annotations**: Detailed spatio-temporal mask annotations, referred to as "masklets," covering whole objects and parts.

+- **Dataset Scale**: It features 4.5 times more videos and 53 times more annotations than previous largest datasets, offering unprecedented diversity and complexity.

+

+## Benchmarks

+

+### Video Object Segmentation

+

+SAM 2 has demonstrated superior performance across major video segmentation benchmarks:

+

+| Dataset | J&F | J | F |

+| --------------- | ---- | ---- | ---- |

+| **DAVIS 2017** | 82.5 | 79.8 | 85.2 |

+| **YouTube-VOS** | 81.2 | 78.9 | 83.5 |

+

+### Interactive Segmentation

+

+In interactive segmentation tasks, SAM 2 shows significant efficiency and accuracy:

+

+| Dataset | NoC@90 | AUC |

+| --------------------- | ------ | ----- |

+| **DAVIS Interactive** | 1.54 | 0.872 |

+

+## Installation

+

+To install SAM 2, use the following command. All SAM 2 models will automatically download on first use.

+

+```bash

+pip install ultralytics

+```

+

+## How to Use SAM 2: Versatility in Image and Video Segmentation

+

+!!! Note "🚧 SAM 2 Integration In Progress 🚧"

+

+ The SAM 2 features described in this documentation are currently not enabled in the `ultralytics` package. The Ultralytics team is actively working on integrating SAM 2, and these capabilities should be available soon. We appreciate your patience as we work to implement this exciting new model.

+

+The following table details the available SAM 2 models, their pre-trained weights, supported tasks, and compatibility with different operating modes like [Inference](../modes/predict.md), [Validation](../modes/val.md), [Training](../modes/train.md), and [Export](../modes/export.md).

+

+| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |

+| ----------- | ------------------------------------------------------------------------------------- | -------------------------------------------- | --------- | ---------- | -------- | ------ |

+| SAM 2 tiny | [sam2_t.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/sam2_t.pt) | [Instance Segmentation](../tasks/segment.md) | ✅ | ❌ | ❌ | ❌ |

+| SAM 2 small | [sam2_s.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/sam2_s.pt) | [Instance Segmentation](../tasks/segment.md) | ✅ | ❌ | ❌ | ❌ |

+| SAM 2 base | [sam2_b.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/sam2_b.pt) | [Instance Segmentation](../tasks/segment.md) | ✅ | ❌ | ❌ | ❌ |

+| SAM 2 large | [sam2_l.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/sam2_l.pt) | [Instance Segmentation](../tasks/segment.md) | ✅ | ❌ | ❌ | ❌ |

+

+### SAM 2 Prediction Examples

+

+SAM 2 can be utilized across a broad spectrum of tasks, including real-time video editing, medical imaging, and autonomous systems. Its ability to segment both static and dynamic visual data makes it a versatile tool for researchers and developers.

+

+#### Segment with Prompts

+

+!!! Example "Segment with Prompts"

+

+ Use prompts to segment specific objects in images or videos.

+

+ === "Python"

+

+ ```python

+ from ultralytics import SAM

+

+ # Load a model

+ model = SAM("sam2_b.pt")

+

+ # Display model information (optional)

+ model.info()

+

+ # Segment with bounding box prompt

+ results = model("path/to/image.jpg", bboxes=[100, 100, 200, 200])

+

+ # Segment with point prompt

+ results = model("path/to/image.jpg", points=[150, 150], labels=[1])

+ ```

+

+#### Segment Everything

+

+!!! Example "Segment Everything"

+

+ Segment the entire image or video content without specific prompts.

+

+ === "Python"

+

+ ```python

+ from ultralytics import SAM

+

+ # Load a model

+ model = SAM("sam2_b.pt")

+

+ # Display model information (optional)

+ model.info()

+

+ # Run inference

+ model("path/to/video.mp4")

+ ```

+

+ === "CLI"

+

+ ```bash

+ # Run inference with a SAM 2 model

+ yolo predict model=sam2_b.pt source=path/to/video.mp4

+ ```

+

+- This example demonstrates how SAM 2 can be used to segment the entire content of an image or video if no prompts (bboxes/points/masks) are provided.

+

+## SAM comparison vs YOLOv8

+

+Here we compare Meta's smallest SAM model, SAM-b, with Ultralytics smallest segmentation model, [YOLOv8n-seg](../tasks/segment.md):

+

+| Model | Size | Parameters | Speed (CPU) |

+| ---------------------------------------------- | -------------------------- | ---------------------- | -------------------------- |

+| Meta's SAM-b | 358 MB | 94.7 M | 51096 ms/im |

+| [MobileSAM](mobile-sam.md) | 40.7 MB | 10.1 M | 46122 ms/im |

+| [FastSAM-s](fast-sam.md) with YOLOv8 backbone | 23.7 MB | 11.8 M | 115 ms/im |

+| Ultralytics [YOLOv8n-seg](../tasks/segment.md) | **6.7 MB** (53.4x smaller) | **3.4 M** (27.9x less) | **59 ms/im** (866x faster) |

+

+This comparison shows the order-of-magnitude differences in the model sizes and speeds between models. Whereas SAM presents unique capabilities for automatic segmenting, it is not a direct competitor to YOLOv8 segment models, which are smaller, faster and more efficient.

+

+Tests run on a 2023 Apple M2 Macbook with 16GB of RAM. To reproduce this test:

+

+!!! Example

+

+ === "Python"

+

+ ```python

+ from ultralytics import SAM, YOLO, FastSAM

+

+ # Profile SAM-b

+ model = SAM("sam_b.pt")

+ model.info()

+ model("ultralytics/assets")

+

+ # Profile MobileSAM

+ model = SAM("mobile_sam.pt")

+ model.info()

+ model("ultralytics/assets")

+

+ # Profile FastSAM-s

+ model = FastSAM("FastSAM-s.pt")

+ model.info()

+ model("ultralytics/assets")

+

+ # Profile YOLOv8n-seg

+ model = YOLO("yolov8n-seg.pt")

+ model.info()

+ model("ultralytics/assets")

+ ```

+

+## Auto-Annotation: Efficient Dataset Creation

+

+Auto-annotation is a powerful feature of SAM 2, enabling users to generate segmentation datasets quickly and accurately by leveraging pre-trained models. This capability is particularly useful for creating large, high-quality datasets without extensive manual effort.

+

+### How to Auto-Annotate with SAM 2

+

+To auto-annotate your dataset using SAM 2, follow this example:

+

+!!! Example "Auto-Annotation Example"

+

+ ```python

+ from ultralytics.data.annotator import auto_annotate

+

+ auto_annotate(data="path/to/images", det_model="yolov8x.pt", sam_model="sam2_b.pt")

+ ```

+

+| Argument | Type | Description | Default |

+| ------------ | ----------------------- | ------------------------------------------------------------------------------------------------------- | -------------- |

+| `data` | `str` | Path to a folder containing images to be annotated. | |

+| `det_model` | `str`, optional | Pre-trained YOLO detection model. Defaults to 'yolov8x.pt'. | `'yolov8x.pt'` |

+| `sam_model` | `str`, optional | Pre-trained SAM 2 segmentation model. Defaults to 'sam2_b.pt'. | `'sam2_b.pt'` |

+| `device` | `str`, optional | Device to run the models on. Defaults to an empty string (CPU or GPU, if available). | |

+| `output_dir` | `str`, `None`, optional | Directory to save the annotated results. Defaults to a 'labels' folder in the same directory as 'data'. | `None` |

+

+This function facilitates the rapid creation of high-quality segmentation datasets, ideal for researchers and developers aiming to accelerate their projects.

+

+## Limitations

+

+Despite its strengths, SAM 2 has certain limitations:

+

+- **Tracking Stability**: SAM 2 may lose track of objects during extended sequences or significant viewpoint changes.

+- **Object Confusion**: The model can sometimes confuse similar-looking objects, particularly in crowded scenes.

+- **Efficiency with Multiple Objects**: Segmentation efficiency decreases when processing multiple objects simultaneously due to the lack of inter-object communication.

+- **Detail Accuracy**: May miss fine details, especially with fast-moving objects. Additional prompts can partially address this issue, but temporal smoothness is not guaranteed.

+

+## Citations and Acknowledgements

+

+If SAM 2 is a crucial part of your research or development work, please cite it using the following reference:

+

+!!! Quote ""

+

+ === "BibTeX"

+

+ ```bibtex

+ @article{ravi2024sam2,

+ title={SAM 2: Segment Anything in Images and Videos},

+ author={Ravi, Nikhila and Gabeur, Valentin and Hu, Yuan-Ting and Hu, Ronghang and Ryali, Chaitanya and Ma, Tengyu and Khedr, Haitham and R{\"a}dle, Roman and Rolland, Chloe and Gustafson, Laura and Mintun, Eric and Pan, Junting and Alwala, Kalyan Vasudev and Carion, Nicolas and Wu, Chao-Yuan and Girshick, Ross and Doll{\'a}r, Piotr and Feichtenhofer, Christoph},

+ journal={arXiv preprint},

+ year={2024}

+ }

+ ```

+

+We extend our gratitude to Meta AI for their contributions to the AI community with this groundbreaking model and dataset.

+

+## FAQ

+

+### What is SAM 2 and how does it improve upon the original Segment Anything Model (SAM)?

+

+SAM 2, the successor to Meta's [Segment Anything Model (SAM)](sam.md), is a cutting-edge tool designed for comprehensive object segmentation in both images and videos. It excels in handling complex visual data through a unified, promptable model architecture that supports real-time processing and zero-shot generalization. SAM 2 offers several improvements over the original SAM, including:

+

+- **Unified Model Architecture**: Combines image and video segmentation capabilities in a single model.

+- **Real-Time Performance**: Processes approximately 44 frames per second, making it suitable for applications requiring immediate feedback.

+- **Zero-Shot Generalization**: Segments objects it has never encountered before, useful in diverse visual domains.

+- **Interactive Refinement**: Allows users to iteratively refine segmentation results by providing additional prompts.

+- **Advanced Handling of Visual Challenges**: Manages common video segmentation challenges like object occlusion and reappearance.

+

+For more details on SAM 2's architecture and capabilities, explore the [SAM 2 research paper](https://arxiv.org/abs/2401.12741).

+

+### How can I use SAM 2 for real-time video segmentation?

+

+SAM 2 can be utilized for real-time video segmentation by leveraging its promptable interface and real-time inference capabilities. Here's a basic example:

+

+!!! Example "Segment with Prompts"

+

+ Use prompts to segment specific objects in images or videos.

+

+ === "Python"

+

+ ```python

+ from ultralytics import SAM

+

+ # Load a model

+ model = SAM("sam2_b.pt")

+

+ # Display model information (optional)

+ model.info()

+

+ # Segment with bounding box prompt

+ results = model("path/to/image.jpg", bboxes=[100, 100, 200, 200])

+

+ # Segment with point prompt

+ results = model("path/to/image.jpg", points=[150, 150], labels=[1])

+ ```

+

+For more comprehensive usage, refer to the [How to Use SAM 2](#how-to-use-sam-2-versatility-in-image-and-video-segmentation) section.

+

+### What datasets are used to train SAM 2, and how do they enhance its performance?

+

+SAM 2 is trained on the SA-V dataset, one of the largest and most diverse video segmentation datasets available. The SA-V dataset includes:

+

+- **51,000+ Videos**: Captured across 47 countries, providing a wide range of real-world scenarios.

+- **600,000+ Mask Annotations**: Detailed spatio-temporal mask annotations, referred to as "masklets," covering whole objects and parts.

+- **Dataset Scale**: Features 4.5 times more videos and 53 times more annotations than previous largest datasets, offering unprecedented diversity and complexity.

+

+This extensive dataset allows SAM 2 to achieve superior performance across major video segmentation benchmarks and enhances its zero-shot generalization capabilities. For more information, see the [SA-V Dataset](#sa-v-dataset) section.

+

+### How does SAM 2 handle occlusions and object reappearances in video segmentation?

+

+SAM 2 includes a sophisticated memory mechanism to manage temporal dependencies and occlusions in video data. The memory mechanism consists of:

+

+- **Memory Encoder and Memory Bank**: Stores features from past frames.

+- **Memory Attention Module**: Utilizes stored information to maintain consistent object tracking over time.

+- **Occlusion Head**: Specifically handles scenarios where objects are not visible, predicting the likelihood of an object being occluded.

+

+This mechanism ensures continuity even when objects are temporarily obscured or exit and re-enter the scene. For more details, refer to the [Memory Mechanism and Occlusion Handling](#memory-mechanism-and-occlusion-handling) section.

+

+### How does SAM 2 compare to other segmentation models like YOLOv8?

+

+SAM 2 and Ultralytics YOLOv8 serve different purposes and excel in different areas. While SAM 2 is designed for comprehensive object segmentation with advanced features like zero-shot generalization and real-time performance, YOLOv8 is optimized for speed and efficiency in object detection and segmentation tasks. Here's a comparison:

+

+| Model | Size | Parameters | Speed (CPU) |

+| ---------------------------------------------- | -------------------------- | ---------------------- | -------------------------- |

+| Meta's SAM-b | 358 MB | 94.7 M | 51096 ms/im |

+| [MobileSAM](mobile-sam.md) | 40.7 MB | 10.1 M | 46122 ms/im |

+| [FastSAM-s](fast-sam.md) with YOLOv8 backbone | 23.7 MB | 11.8 M | 115 ms/im |

+| Ultralytics [YOLOv8n-seg](../tasks/segment.md) | **6.7 MB** (53.4x smaller) | **3.4 M** (27.9x less) | **59 ms/im** (866x faster) |

+

+For more details, see the [SAM comparison vs YOLOv8](#sam-comparison-vs-yolov8) section.

diff --git a/docs/en/models/sam.md b/docs/en/models/sam.md

index b3301700ba..d7da2be334 100644

--- a/docs/en/models/sam.md

+++ b/docs/en/models/sam.md

@@ -195,13 +195,13 @@ To auto-annotate your dataset with the Ultralytics framework, use the `auto_anno

auto_annotate(data="path/to/images", det_model="yolov8x.pt", sam_model="sam_b.pt")

```

-| Argument | Type | Description | Default |

-| ---------- | ------------------- | ------------------------------------------------------------------------------------------------------- | ------------ |

-| data | str | Path to a folder containing images to be annotated. | |

-| det_model | str, optional | Pre-trained YOLO detection model. Defaults to 'yolov8x.pt'. | 'yolov8x.pt' |

-| sam_model | str, optional | Pre-trained SAM segmentation model. Defaults to 'sam_b.pt'. | 'sam_b.pt' |

-| device | str, optional | Device to run the models on. Defaults to an empty string (CPU or GPU, if available). | |

-| output_dir | str, None, optional | Directory to save the annotated results. Defaults to a 'labels' folder in the same directory as 'data'. | None |

+| Argument | Type | Description | Default |

+| ------------ | --------------------- | ------------------------------------------------------------------------------------------------------- | -------------- |

+| `data` | `str` | Path to a folder containing images to be annotated. | |

+| `det_model` | `str`, optional | Pre-trained YOLO detection model. Defaults to 'yolov8x.pt'. | `'yolov8x.pt'` |

+| `sam_model` | `str`, optional | Pre-trained SAM segmentation model. Defaults to 'sam_b.pt'. | `'sam_b.pt'` |

+| `device` | `str`, optional | Device to run the models on. Defaults to an empty string (CPU or GPU, if available). | |

+| `output_dir` | `str`, None, optional | Directory to save the annotated results. Defaults to a 'labels' folder in the same directory as 'data'. | `None` |

The `auto_annotate` function takes the path to your images, with optional arguments for specifying the pre-trained detection and SAM segmentation models, the device to run the models on, and the output directory for saving the annotated results.

@@ -278,7 +278,3 @@ This function takes the path to your images and optional arguments for pre-train

### What datasets are used to train the Segment Anything Model (SAM)?

SAM is trained on the extensive [SA-1B dataset](https://ai.facebook.com/datasets/segment-anything/) which comprises over 1 billion masks across 11 million images. SA-1B is the largest segmentation dataset to date, providing high-quality and diverse training data, ensuring impressive zero-shot performance in varied segmentation tasks. For more details, visit the [Dataset section](#key-features-of-the-segment-anything-model-sam).

-

----

-

-This FAQ aims to address common questions related to the Segment Anything Model (SAM) from Ultralytics, enhancing user understanding and facilitating effective use of Ultralytics products. For additional information, explore the relevant sections linked throughout.

diff --git a/docs/en/models/yolov10.md b/docs/en/models/yolov10.md

index 4646eb5d45..98a164a42e 100644

--- a/docs/en/models/yolov10.md

+++ b/docs/en/models/yolov10.md

@@ -10,6 +10,17 @@ YOLOv10, built on the [Ultralytics](https://ultralytics.com) [Python package](ht

+

+

+

+

+ Watch: How to Train YOLOv10 on SKU-110k Dataset using Ultralytics | Retail Dataset

+

+

## Overview

Real-time object detection aims to accurately predict object categories and positions in images with low latency. The YOLO series has been at the forefront of this research due to its balance between performance and efficiency. However, reliance on NMS and architectural inefficiencies have hindered optimal performance. YOLOv10 addresses these issues by introducing consistent dual assignments for NMS-free training and a holistic efficiency-accuracy driven model design strategy.

diff --git a/docs/en/reference/models/sam/modules/decoders.md b/docs/en/reference/models/sam/modules/decoders.md

index ff3aaa9e7a..a224738b70 100644

--- a/docs/en/reference/models/sam/modules/decoders.md

+++ b/docs/en/reference/models/sam/modules/decoders.md

@@ -13,8 +13,4 @@ keywords: Ultralytics, MaskDecoder, MLP, machine learning, transformer architect

## ::: ultralytics.models.sam.modules.decoders.MaskDecoder

-

diff --git a/docs/en/reference/models/sam/modules/sam.md b/docs/en/reference/models/sam/modules/sam.md

index 5a7c30e42b..a31cece155 100644

--- a/docs/en/reference/models/sam/modules/sam.md

+++ b/docs/en/reference/models/sam/modules/sam.md

@@ -1,6 +1,6 @@

---

-description: Discover the Ultralytics Sam module for object segmentation. Learn about its components, such as image encoders and mask decoders, in this comprehensive guide.

-keywords: Ultralytics, Sam Module, object segmentation, image encoder, mask decoder, prompt encoder, AI, machine learning

+description: Discover the Ultralytics SAM module for object segmentation. Learn about its components, such as image encoders and mask decoders, in this comprehensive guide.

+keywords: Ultralytics, SAM Module, object segmentation, image encoder, mask decoder, prompt encoder, AI, machine learning

---

# Reference for `ultralytics/models/sam/modules/sam.py`

@@ -11,6 +11,6 @@ keywords: Ultralytics, Sam Module, object segmentation, image encoder, mask deco

-## ::: ultralytics.models.sam.modules.sam.Sam

+## ::: ultralytics.models.sam.modules.sam.SAMModel

diff --git a/docs/en/reference/models/sam2/build.md b/docs/en/reference/models/sam2/build.md

new file mode 100644

index 0000000000..94e9f5befb

--- /dev/null

+++ b/docs/en/reference/models/sam2/build.md

@@ -0,0 +1,36 @@

+---

+description: Discover detailed instructions for building various Segment Anything Model 2 (SAM 2) architectures with Ultralytics.

+keywords: Ultralytics, SAM 2 model, Segment Anything Model 2, SAM, model building, deep learning, AI

+---

+

+# Reference for `ultralytics/models/sam2/build.py`

+

+!!! Note

+

+ This file is available at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/build.py](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/build.py). If you spot a problem please help fix it by [contributing](https://docs.ultralytics.com/help/contributing/) a [Pull Request](https://github.com/ultralytics/ultralytics/edit/main/ultralytics/models/sam2/build.py) 🛠️. Thank you 🙏!

+

+

+

+## ::: ultralytics.models.sam2.build.build_sam2_t

+

+

diff --git a/docs/en/reference/models/sam2/model.md b/docs/en/reference/models/sam2/model.md

new file mode 100644

index 0000000000..fc0d2e2502

--- /dev/null

+++ b/docs/en/reference/models/sam2/model.md

@@ -0,0 +1,16 @@

+---

+description: Explore the SAM 2 (Segment Anything Model 2) interface for real-time image segmentation. Learn about promptable segmentation and zero-shot capabilities.

+keywords: Ultralytics, SAM 2, Segment Anything Model 2, image segmentation, real-time segmentation, zero-shot performance, promptable segmentation, SA-1B dataset

+---

+

+# Reference for `ultralytics/models/sam2/model.py`

+

+!!! Note

+

+ This file is available at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/model.py](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/model.py). If you spot a problem please help fix it by [contributing](https://docs.ultralytics.com/help/contributing/) a [Pull Request](https://github.com/ultralytics/ultralytics/edit/main/ultralytics/models/sam2/model.py) 🛠️. Thank you 🙏!

+

+

+

+## ::: ultralytics.models.sam2.model.SAM2

+

+

diff --git a/docs/en/reference/models/sam2/modules/decoders.md b/docs/en/reference/models/sam2/modules/decoders.md

new file mode 100644

index 0000000000..989169a770

--- /dev/null

+++ b/docs/en/reference/models/sam2/modules/decoders.md

@@ -0,0 +1,16 @@

+---

+description: Explore the MaskDecoder and MLP modules in Ultralytics for efficient mask prediction using transformer architecture. Detailed attributes, functionalities, and implementation.

+keywords: Ultralytics, MaskDecoder, MLP, machine learning, transformer architecture, mask prediction, neural networks, PyTorch modules

+---

+

+# Reference for `ultralytics/models/sam2/modules/decoders.py`

+

+!!! Note

+

+ This file is available at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/decoders.py](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/decoders.py). If you spot a problem please help fix it by [contributing](https://docs.ultralytics.com/help/contributing/) a [Pull Request](https://github.com/ultralytics/ultralytics/edit/main/ultralytics/models/sam2/modules/decoders.py) 🛠️. Thank you 🙏!

+

+

+

+## ::: ultralytics.models.sam2.modules.decoders.MaskDecoder

+

+

diff --git a/docs/en/reference/models/sam2/modules/encoders.md b/docs/en/reference/models/sam2/modules/encoders.md

new file mode 100644

index 0000000000..a3da286e1e

--- /dev/null

+++ b/docs/en/reference/models/sam2/modules/encoders.md

@@ -0,0 +1,28 @@

+---

+description: Discover the Ultralytics SAM 2 module for object segmentation. Learn about its components, such as image encoders and mask decoders, in this comprehensive guide.

+keywords: Ultralytics, SAM 2 Module, object segmentation, image encoder, mask decoder, prompt encoder, AI, machine learning

+---

+

+# Reference for `ultralytics/models/sam2/modules/encoders.py`

+

+!!! Note

+

+ This file is available at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/encoders.py](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/encoders.py). If you spot a problem please help fix it by [contributing](https://docs.ultralytics.com/help/contributing/) a [Pull Request](https://github.com/ultralytics/ultralytics/edit/main/ultralytics/models/sam2/modules/encoders.py) 🛠️. Thank you 🙏!

+

+

+

+## ::: ultralytics.models.sam2.modules.encoders.MemoryEncoder

+

+

diff --git a/docs/en/reference/models/sam2/modules/memory_attention.md b/docs/en/reference/models/sam2/modules/memory_attention.md

new file mode 100644

index 0000000000..fef22174ee

--- /dev/null

+++ b/docs/en/reference/models/sam2/modules/memory_attention.md

@@ -0,0 +1,20 @@

+---

+description: Explore detailed documentation of various SAM 2 encoder modules such as MemoryAttentionLayer, MemoryAttention, available in Ultralytics' repository.

+keywords: Ultralytics, SAM 2 encoder, MemoryAttentionLayer, MemoryAttention

+---

+

+# Reference for `ultralytics/models/sam2/modules/memory_attention.py`

+

+!!! Note

+

+ This file is available at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/memory_attention.py](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/memory_attention.py). If you spot a problem please help fix it by [contributing](https://docs.ultralytics.com/help/contributing/) a [Pull Request](https://github.com/ultralytics/ultralytics/edit/main/ultralytics/models/sam2/modules/memory_attention.py) 🛠️. Thank you 🙏!

+

+

+

+## ::: ultralytics.models.sam2.modules.memory_attention.MemoryAttentionLayer

+

+

diff --git a/docs/en/reference/models/sam2/modules/sam2.md b/docs/en/reference/models/sam2/modules/sam2.md

new file mode 100644

index 0000000000..fcd47bbdca

--- /dev/null

+++ b/docs/en/reference/models/sam2/modules/sam2.md

@@ -0,0 +1,16 @@

+---

+description: Discover the Ultralytics SAM 2 module for object segmentation. Learn about its components, such as image encoders and mask decoders, in this comprehensive guide.

+keywords: Ultralytics, SAM 2 Module, object segmentation, image encoder, mask decoder, prompt encoder, AI, machine learning

+---

+

+# Reference for `ultralytics/models/sam2/modules/sam2.py`

+

+!!! Note

+

+ This file is available at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/sam2.py](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/sam2.py). If you spot a problem please help fix it by [contributing](https://docs.ultralytics.com/help/contributing/) a [Pull Request](https://github.com/ultralytics/ultralytics/edit/main/ultralytics/models/sam2/modules/sam2.py) 🛠️. Thank you 🙏!

+

+

+

+## ::: ultralytics.models.sam2.modules.sam2.SAM2Model

+

+

diff --git a/docs/en/reference/models/sam2/modules/sam2_blocks.md b/docs/en/reference/models/sam2/modules/sam2_blocks.md

new file mode 100644

index 0000000000..796669a03e

--- /dev/null

+++ b/docs/en/reference/models/sam2/modules/sam2_blocks.md

@@ -0,0 +1,56 @@

+---

+description: Explore detailed documentation of various SAM 2 modules such as MaskDownSampler, CXBlock, and more, available in Ultralytics' repository.

+keywords: Ultralytics, SAM 2 encoder, DropPath, MaskDownSampler, CXBlock, Fuser, TwoWayTransformer, TwoWayAttentionBlock, RoPEAttention, MultiScaleAttention, MultiScaleBlock. PositionEmbeddingSine, do_pool

+---

+

+# Reference for `ultralytics/models/sam2/modules/sam2_blocks.py`

+

+!!! Note

+

+ This file is available at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/sam2_blocks.py](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/sam2_blocks.py). If you spot a problem please help fix it by [contributing](https://docs.ultralytics.com/help/contributing/) a [Pull Request](https://github.com/ultralytics/ultralytics/edit/main/ultralytics/models/sam2/modules/sam2_blocks.py) 🛠️. Thank you 🙏!

+

+

+

+## ::: ultralytics.models.sam2.modules.sam2_blocks.DropPath

+

+

diff --git a/docs/en/reference/models/sam2/modules/utils.md b/docs/en/reference/models/sam2/modules/utils.md

new file mode 100644

index 0000000000..357cea6237

--- /dev/null

+++ b/docs/en/reference/models/sam2/modules/utils.md

@@ -0,0 +1,44 @@

+---

+description: Explore the detailed API reference for Ultralytics SAM 2 models.

+keywords: Ultralytics, SAM 2, API Reference, models, window partition, data processing, YOLO

+---

+

+# Reference for `ultralytics/models/sam2/modules/utils.py`

+

+!!! Note

+

+ This file is available at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/utils.py](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/modules/utils.py). If you spot a problem please help fix it by [contributing](https://docs.ultralytics.com/help/contributing/) a [Pull Request](https://github.com/ultralytics/ultralytics/edit/main/ultralytics/models/sam2/modules/utils.py) 🛠️. Thank you 🙏!

+

+

+

+## ::: ultralytics.models.sam2.modules.utils.select_closest_cond_frames

+

+

diff --git a/docs/en/reference/models/sam2/predict.md b/docs/en/reference/models/sam2/predict.md

new file mode 100644

index 0000000000..23b30140bc

--- /dev/null

+++ b/docs/en/reference/models/sam2/predict.md

@@ -0,0 +1,16 @@

+---

+description: Explore Ultralytics SAM 2 Predictor for advanced, real-time image segmentation using the Segment Anything Model 2 (SAM 2). Complete implementation details and auxiliary utilities.

+keywords: Ultralytics, SAM 2, Segment Anything Model 2, image segmentation, real-time, prediction, AI, machine learning, Python, torch, inference

+---

+

+# Reference for `ultralytics/models/sam2/predict.py`

+

+!!! Note

+

+ This file is available at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/predict.py](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/models/sam2/predict.py). If you spot a problem please help fix it by [contributing](https://docs.ultralytics.com/help/contributing/) a [Pull Request](https://github.com/ultralytics/ultralytics/edit/main/ultralytics/models/sam2/predict.py) 🛠️. Thank you 🙏!

+

+

+

+## ::: ultralytics.models.sam2.predict.SAM2Predictor

+

+

diff --git a/mkdocs.yml b/mkdocs.yml

index d849c01e87..fcf5516b55 100644

--- a/mkdocs.yml

+++ b/mkdocs.yml

@@ -239,6 +239,7 @@ nav:

- YOLOv9: models/yolov9.md

- YOLOv10: models/yolov10.md

- SAM (Segment Anything Model): models/sam.md

+ - SAM 2 (Segment Anything Model 2): models/sam-2.md

- MobileSAM (Mobile Segment Anything Model): models/mobile-sam.md

- FastSAM (Fast Segment Anything Model): models/fast-sam.md

- YOLO-NAS (Neural Architecture Search): models/yolo-nas.md

@@ -508,6 +509,17 @@ nav:

- tiny_encoder: reference/models/sam/modules/tiny_encoder.md

- transformer: reference/models/sam/modules/transformer.md

- predict: reference/models/sam/predict.md

+ - sam2:

+ - build: reference/models/sam2/build.md

+ - model: reference/models/sam2/model.md

+ - modules:

+ - decoders: reference/models/sam2/modules/decoders.md

+ - encoders: reference/models/sam2/modules/encoders.md

+ - memory_attention: reference/models/sam2/modules/memory_attention.md

+ - sam2: reference/models/sam2/modules/sam2.md

+ - sam2_blocks: reference/models/sam2/modules/sam2_blocks.md

+ - utils: reference/models/sam2/modules/utils.md

+ - predict: reference/models/sam2/predict.md

- utils:

- loss: reference/models/utils/loss.md

- ops: reference/models/utils/ops.md

@@ -658,6 +670,7 @@ plugins:

sdk.md: index.md

hub/inference_api.md: hub/inference-api.md

usage/hyperparameter_tuning.md: integrations/ray-tune.md

+ models/sam2.md: models/sam-2.md

reference/base_pred.md: reference/engine/predictor.md

reference/base_trainer.md: reference/engine/trainer.md

reference/exporter.md: reference/engine/exporter.md

diff --git a/ultralytics/__init__.py b/ultralytics/__init__.py

index 8c31352897..affb8e35c7 100644

--- a/ultralytics/__init__.py

+++ b/ultralytics/__init__.py

@@ -1,6 +1,6 @@

# Ultralytics YOLO 🚀, AGPL-3.0 license

-__version__ = "8.2.69"

+__version__ = "8.2.70"

import os

@@ -8,7 +8,7 @@ import os

os.environ["OMP_NUM_THREADS"] = "1" # reduce CPU utilization during training

from ultralytics.data.explorer.explorer import Explorer

-from ultralytics.models import NAS, RTDETR, SAM, YOLO, FastSAM, YOLOWorld

+from ultralytics.models import NAS, RTDETR, SAM, SAM2, YOLO, FastSAM, YOLOWorld

from ultralytics.utils import ASSETS, SETTINGS

from ultralytics.utils.checks import check_yolo as checks

from ultralytics.utils.downloads import download

@@ -21,6 +21,7 @@ __all__ = (

"YOLOWorld",

"NAS",

"SAM",

+ "SAM2",

"FastSAM",

"RTDETR",

"checks",

diff --git a/ultralytics/cfg/__init__.py b/ultralytics/cfg/__init__.py

index 4f7ad00eef..4a23ec4267 100644

--- a/ultralytics/cfg/__init__.py

+++ b/ultralytics/cfg/__init__.py

@@ -793,6 +793,10 @@ def entrypoint(debug=""):

from ultralytics import FastSAM

model = FastSAM(model)

+ elif "sam2" in stem:

+ from ultralytics import SAM2

+

+ model = SAM2(model)

elif "sam" in stem:

from ultralytics import SAM

diff --git a/ultralytics/models/__init__.py b/ultralytics/models/__init__.py

index aff620a9a9..bbade04724 100644

--- a/ultralytics/models/__init__.py

+++ b/ultralytics/models/__init__.py

@@ -4,6 +4,7 @@ from .fastsam import FastSAM

from .nas import NAS

from .rtdetr import RTDETR

from .sam import SAM

+from .sam2 import SAM2

from .yolo import YOLO, YOLOWorld

-__all__ = "YOLO", "RTDETR", "SAM", "FastSAM", "NAS", "YOLOWorld" # allow simpler import

+__all__ = "YOLO", "RTDETR", "SAM", "FastSAM", "NAS", "YOLOWorld", "SAM2" # allow simpler import

diff --git a/ultralytics/models/fastsam/predict.py b/ultralytics/models/fastsam/predict.py

index cd9b302384..2a15c3f39f 100644

--- a/ultralytics/models/fastsam/predict.py

+++ b/ultralytics/models/fastsam/predict.py

@@ -21,6 +21,7 @@ class FastSAMPredictor(SegmentationPredictor):

"""

def __init__(self, cfg=DEFAULT_CFG, overrides=None, _callbacks=None):

+ """Initializes a FastSAMPredictor for fast SAM segmentation tasks in Ultralytics YOLO framework."""

super().__init__(cfg, overrides, _callbacks)

self.prompts = {}

diff --git a/ultralytics/models/sam/build.py b/ultralytics/models/sam/build.py

index cb3a7c6867..253c0159d1 100644

--- a/ultralytics/models/sam/build.py

+++ b/ultralytics/models/sam/build.py

@@ -14,7 +14,7 @@ from ultralytics.utils.downloads import attempt_download_asset

from .modules.decoders import MaskDecoder

from .modules.encoders import ImageEncoderViT, PromptEncoder

-from .modules.sam import Sam

+from .modules.sam import SAMModel

from .modules.tiny_encoder import TinyViT

from .modules.transformer import TwoWayTransformer

@@ -105,7 +105,7 @@ def _build_sam(

out_chans=prompt_embed_dim,

)

)

- sam = Sam(

+ sam = SAMModel(

image_encoder=image_encoder,

prompt_encoder=PromptEncoder(

embed_dim=prompt_embed_dim,

diff --git a/ultralytics/models/sam/model.py b/ultralytics/models/sam/model.py

index feaeb551a7..a819b6eafd 100644

--- a/ultralytics/models/sam/model.py

+++ b/ultralytics/models/sam/model.py

@@ -44,6 +44,7 @@ class SAM(Model):

"""

if model and Path(model).suffix not in {".pt", ".pth"}:

raise NotImplementedError("SAM prediction requires pre-trained *.pt or *.pth model.")

+ self.is_sam2 = "sam2" in Path(model).stem

super().__init__(model=model, task="segment")

def _load(self, weights: str, task=None):

@@ -54,7 +55,12 @@ class SAM(Model):

weights (str): Path to the weights file.

task (str, optional): Task name. Defaults to None.

"""

- self.model = build_sam(weights)

+ if self.is_sam2:

+ from ..sam2.build import build_sam2

+

+ self.model = build_sam2(weights)

+ else:

+ self.model = build_sam(weights)

def predict(self, source, stream=False, bboxes=None, points=None, labels=None, **kwargs):

"""

@@ -112,4 +118,6 @@ class SAM(Model):

Returns:

(dict): A dictionary mapping the 'segment' task to its corresponding 'Predictor'.

"""

- return {"segment": {"predictor": Predictor}}

+ from ..sam2.predict import SAM2Predictor

+

+ return {"segment": {"predictor": SAM2Predictor if self.is_sam2 else Predictor}}

diff --git a/ultralytics/models/sam/modules/decoders.py b/ultralytics/models/sam/modules/decoders.py

index 073b1ad40c..eeaab6b453 100644

--- a/ultralytics/models/sam/modules/decoders.py

+++ b/ultralytics/models/sam/modules/decoders.py

@@ -4,9 +4,8 @@ from typing import List, Tuple, Type

import torch

from torch import nn

-from torch.nn import functional as F

-from ultralytics.nn.modules import LayerNorm2d

+from ultralytics.nn.modules import MLP, LayerNorm2d

class MaskDecoder(nn.Module):

@@ -28,7 +27,6 @@ class MaskDecoder(nn.Module):

def __init__(

self,

- *,

transformer_dim: int,

transformer: nn.Module,

num_multimask_outputs: int = 3,

@@ -149,42 +147,3 @@ class MaskDecoder(nn.Module):

iou_pred = self.iou_prediction_head(iou_token_out)

return masks, iou_pred

-

-

-class MLP(nn.Module):

- """

- MLP (Multi-Layer Perceptron) model lightly adapted from

- https://github.com/facebookresearch/MaskFormer/blob/main/mask_former/modeling/transformer/transformer_predictor.py

- """

-

- def __init__(

- self,

- input_dim: int,

- hidden_dim: int,

- output_dim: int,

- num_layers: int,

- sigmoid_output: bool = False,

- ) -> None:

- """

- Initializes the MLP (Multi-Layer Perceptron) model.

-

- Args:

- input_dim (int): The dimensionality of the input features.

- hidden_dim (int): The dimensionality of the hidden layers.

- output_dim (int): The dimensionality of the output layer.

- num_layers (int): The number of hidden layers.

- sigmoid_output (bool, optional): Apply a sigmoid activation to the output layer. Defaults to False.

- """

- super().__init__()

- self.num_layers = num_layers

- h = [hidden_dim] * (num_layers - 1)

- self.layers = nn.ModuleList(nn.Linear(n, k) for n, k in zip([input_dim] + h, h + [output_dim]))

- self.sigmoid_output = sigmoid_output

-

- def forward(self, x):

- """Executes feedforward within the neural network module and applies activation."""

- for i, layer in enumerate(self.layers):

- x = F.relu(layer(x)) if i < self.num_layers - 1 else layer(x)

- if self.sigmoid_output:

- x = torch.sigmoid(x)

- return x

diff --git a/ultralytics/models/sam/modules/encoders.py b/ultralytics/models/sam/modules/encoders.py

index a51c34721a..2bf3945dc5 100644

--- a/ultralytics/models/sam/modules/encoders.py

+++ b/ultralytics/models/sam/modules/encoders.py

@@ -211,6 +211,8 @@ class PromptEncoder(nn.Module):

point_embedding[labels == -1] += self.not_a_point_embed.weight

point_embedding[labels == 0] += self.point_embeddings[0].weight

point_embedding[labels == 1] += self.point_embeddings[1].weight

+ point_embedding[labels == 2] += self.point_embeddings[2].weight

+ point_embedding[labels == 3] += self.point_embeddings[3].weight

return point_embedding

def _embed_boxes(self, boxes: torch.Tensor) -> torch.Tensor:

@@ -226,8 +228,8 @@ class PromptEncoder(nn.Module):

"""Embeds mask inputs."""

return self.mask_downscaling(masks)

+ @staticmethod

def _get_batch_size(

- self,

points: Optional[Tuple[torch.Tensor, torch.Tensor]],

boxes: Optional[torch.Tensor],

masks: Optional[torch.Tensor],

diff --git a/ultralytics/models/sam/modules/sam.py b/ultralytics/models/sam/modules/sam.py

index 95d9bbe662..1617527f07 100644

--- a/ultralytics/models/sam/modules/sam.py

+++ b/ultralytics/models/sam/modules/sam.py

@@ -15,15 +15,14 @@ from .decoders import MaskDecoder

from .encoders import ImageEncoderViT, PromptEncoder

-class Sam(nn.Module):

+class SAMModel(nn.Module):

"""

- Sam (Segment Anything Model) is designed for object segmentation tasks. It uses image encoders to generate image

- embeddings, and prompt encoders to encode various types of input prompts. These embeddings are then used by the mask

- decoder to predict object masks.

+ SAMModel (Segment Anything Model) is designed for object segmentation tasks. It uses image encoders to generate

+ image embeddings, and prompt encoders to encode various types of input prompts. These embeddings are then used by

+ the mask decoder to predict object masks.

Attributes:

mask_threshold (float): Threshold value for mask prediction.

- image_format (str): Format of the input image, default is 'RGB'.

image_encoder (ImageEncoderViT): The backbone used to encode the image into embeddings.

prompt_encoder (PromptEncoder): Encodes various types of input prompts.

mask_decoder (MaskDecoder): Predicts object masks from the image and prompt embeddings.

@@ -32,7 +31,6 @@ class Sam(nn.Module):

"""

mask_threshold: float = 0.0

- image_format: str = "RGB"

def __init__(

self,

@@ -43,7 +41,7 @@ class Sam(nn.Module):