@@ -109,7 +109,7 @@ path = model.export(format="onnx") # export the model to ONNX format

YOLOv8 [Detect](https://docs.ultralytics.com/tasks/detect), [Segment](https://docs.ultralytics.com/tasks/segment) and [Pose](https://docs.ultralytics.com/tasks/pose) models pretrained on the [COCO](https://docs.ultralytics.com/datasets/detect/coco) dataset are available here, as well as YOLOv8 [Classify](https://docs.ultralytics.com/tasks/classify) models pretrained on the [ImageNet](https://docs.ultralytics.com/datasets/classify/imagenet) dataset. [Track](https://docs.ultralytics.com/modes/track) mode is available for all Detect, Segment and Pose models.

All [Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models) download automatically from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.

@@ -243,7 +243,7 @@ Our key integrations with leading AI platforms extend the functionality of Ultra

Experience seamless AI with [Ultralytics HUB](https://bit.ly/ultralytics_hub) ⭐, the all-in-one solution for data visualization, YOLOv5 and YOLOv8 🚀 model training and deployment, without any coding. Transform images into actionable insights and bring your AI visions to life with ease using our cutting-edge platform and user-friendly [Ultralytics App](https://ultralytics.com/app_install). Start your journey for **Free** now!

The [SKU-110k](https://github.com/eg4000/SKU110K_CVPR19) dataset is a collection of densely packed retail shelf images, designed to support research in object detection tasks. Developed by Eran Goldman et al., the dataset contains over 110,000 unique stock keeping unit (SKU) categories with densely packed objects that often look similar or even identical and are positioned in close proximity.

@@ -67,7 +67,7 @@ To train a YOLOv8n model on the SKU-110K dataset for 100 epochs with an image si

The SKU-110k dataset contains a diverse set of retail shelf images with densely packed objects, providing rich context for object detection tasks. Here are some examples of data from the dataset, along with their corresponding annotations:

- **Densely packed retail shelf image**: This image demonstrates an example of densely packed objects in a retail shelf setting. Objects are annotated with bounding boxes and SKU category labels.
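As a minimal sketch of what these bounding-box annotations look like on disk, the snippet below assumes the standard Ultralytics YOLO detection label format: one `.txt` file per image, one row per box, holding a class index followed by box center x, center y, width, and height, each normalized to [0, 1]. The label rows and the 1000x800 image size are hypothetical, and `parse_yolo_labels` is an illustrative helper, not an Ultralytics API.

```python
from __future__ import annotations


def parse_yolo_labels(
    text: str, img_w: int, img_h: int
) -> list[tuple[int, tuple[float, float, float, float]]]:
    """Convert YOLO-format label rows into (class_id, (x1, y1, x2, y2)) pixel boxes.

    Each non-empty row is assumed to be a plain 5-column detection row:
    class x_center y_center width height, normalized by image width/height.
    """
    boxes = []
    for row in text.strip().splitlines():
        if not row.strip():
            continue  # skip blank lines
        cls, cx, cy, w, h = row.split()
        # Scale normalized values back to pixels.
        cx, w = float(cx) * img_w, float(w) * img_w
        cy, h = float(cy) * img_h, float(h) * img_h
        # Convert center/size to corner coordinates.
        boxes.append((int(cls), (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)))
    return boxes


# Hypothetical label file for a 1000x800 shelf image: two products side by side.
labels = """
0 0.25 0.5 0.1 0.4
0 0.35 0.5 0.1 0.4
"""
for cls_id, (x1, y1, x2, y2) in parse_yolo_labels(labels, img_w=1000, img_h=800):
    print(cls_id, round(x1), round(y1), round(x2), round(y2))
```

In this hypothetical example the two boxes share an edge at x = 300, which is the kind of tight spacing that makes densely packed shelves hard for detectors.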

@@ -69,7 +69,7 @@ To train a model on the xView dataset for 100 epochs with an image size of 640,

The xView dataset contains high-resolution satellite images with a diverse set of objects annotated using bounding boxes. Here are some examples of data from the dataset, along with their corresponding annotations:

- **Overhead Imagery**: This image demonstrates an example of object detection in overhead imagery, where objects are annotated with bounding boxes. The dataset provides high-resolution satellite images to facilitate the development of models for this task.
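xView's labels ship as GeoJSON rather than YOLO text files, so boxes typically need converting before training. The sketch below assumes each feature carries a pixel-space box string `bounds_imcoords` of the form `xmin,ymin,xmax,ymax` and a class field `type_id` (both field names are assumptions made for illustration) and shows how such a box could become a normalized YOLO label row. The helper `xyxy_to_yolo`, the clipping step, and the sample values are hypothetical; a real pipeline would likely also remap class IDs to a contiguous 0-based range, which is omitted here.

```python
from __future__ import annotations


def xyxy_to_yolo(cls_id: int, box: tuple[float, float, float, float],
                 img_w: int, img_h: int) -> str | None:
    """Convert a pixel-space (x1, y1, x2, y2) box to a YOLO label row.

    Returns None if the box has no area after clipping to the image.
    """
    x1, y1, x2, y2 = box
    # Clip to the image so normalized values stay within [0, 1].
    x1, x2 = max(0.0, min(x1, img_w)), max(0.0, min(x2, img_w))
    y1, y2 = max(0.0, min(y1, img_h)), max(0.0, min(y2, img_h))
    w, h = x2 - x1, y2 - y1
    if w <= 0 or h <= 0:
        return None  # degenerate box, nothing to label
    cx, cy = x1 + w / 2, y1 + h / 2
    return f"{cls_id} {cx / img_w:.6f} {cy / img_h:.6f} {w / img_w:.6f} {h / img_h:.6f}"


# Hypothetical feature from a GeoJSON label file (field names assumed).
feature = {"properties": {"type_id": 3, "bounds_imcoords": "100,200,150,260"}}
box = tuple(float(v) for v in feature["properties"]["bounds_imcoords"].split(","))
print(xyxy_to_yolo(feature["properties"]["type_id"], box, img_w=1000, img_h=1000))
```

Clipping rather than discarding boxes that cross an image edge keeps partially visible objects, which matters when large satellite scenes are cut into tiles.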

@@ -8,7 +8,7 @@ keywords: Ultralytics YOLO, COCO-Pose Dataset, Deep Learning, Pose Estimation, T

The [COCO-Pose](https://cocodataset.org/#keypoints-2017) dataset is a specialized version of the COCO (Common Objects in Context) dataset, designed for pose estimation tasks. It uses the COCO Keypoints 2017 images and labels to enable training models such as YOLO for pose estimation.
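To make the keypoint labels concrete, the sketch below assumes the layout Ultralytics uses for COCO-Pose (`kpt_shape: [17, 3]`): each label row holds a class index and a normalized box, followed by 17 keypoints stored as (x, y, visibility). In COCO, the visibility flag is 0 for unlabeled, 1 for labeled but not visible, and 2 for labeled and visible. `parse_pose_row` and the sample row are hypothetical.

```python
from __future__ import annotations

NUM_KPTS = 17  # COCO person keypoints, starting with the nose


def parse_pose_row(row: str, num_kpts: int = NUM_KPTS):
    """Split a pose label row into (class_id, box, keypoints).

    box is (x_center, y_center, width, height); keypoints is a list of
    (x, y, visibility) tuples, with coordinates normalized to [0, 1].
    """
    vals = row.split()
    expected = 5 + 3 * num_kpts
    if len(vals) != expected:
        raise ValueError(f"expected {expected} values, got {len(vals)}")
    cls_id = int(vals[0])
    box = tuple(float(v) for v in vals[1:5])
    # Group the remaining values into (x, y, visibility) triples.
    kpts = [
        (float(vals[i]), float(vals[i + 1]), int(float(vals[i + 2])))
        for i in range(5, expected, 3)
    ]
    return cls_id, box, kpts


# Hypothetical row: one person, only the first keypoint (nose) labeled and visible.
row = "0 0.5 0.5 0.2 0.6 0.5 0.3 2 " + " ".join(["0 0 0"] * (NUM_KPTS - 1))
cls_id, box, kpts = parse_pose_row(row)
visible = sum(1 for _, _, v in kpts if v == 2)
print(cls_id, box, len(kpts), visible)
```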

@@ -174,7 +174,7 @@ Now, anyone who has the direct link to your model can view it.

??? tip "Tip"

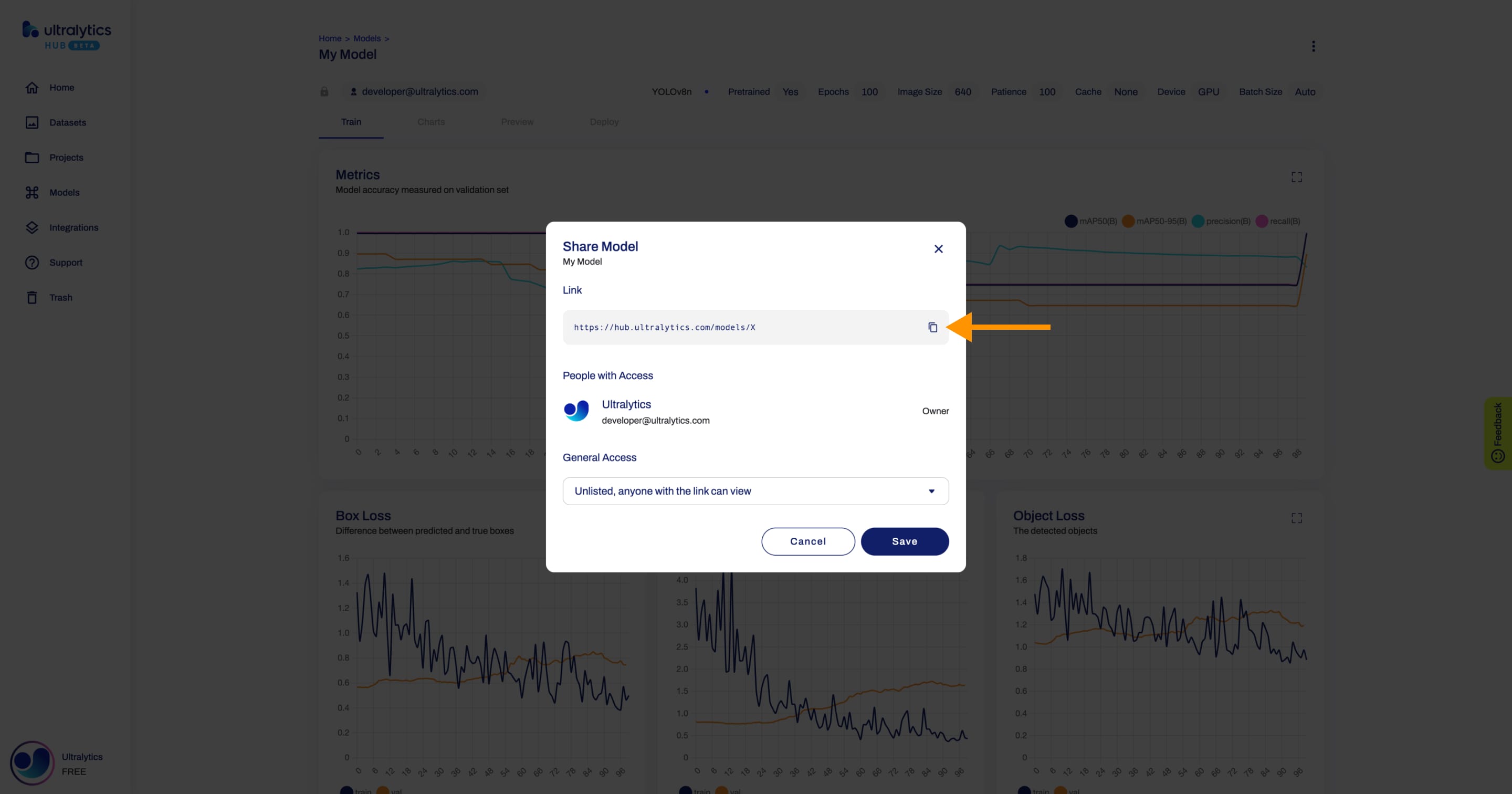

You can easily click on the model's link shown in the **Share Model** dialog to copy it.

@@ -38,7 +38,7 @@ Explore the YOLOv8 Docs, a comprehensive resource designed to help you understan

allowfullscreen>

</iframe>

<br>

<strong>Watch:</strong> How to Train a YOLOv8 model on Your Custom Dataset in <a href="https://colab.research.google.com/github/ultralytics/ultralytics/blob/main/examples/tutorial.ipynb" target="_blank">Google Colab</a>.

Welcome to the Ultralytics Integrations page! This page provides an overview of our partnerships with various tools and platforms, designed to streamline your machine learning workflows, enhance dataset management, simplify model training, and facilitate efficient deployment.

@@ -120,11 +120,11 @@ In this example, we demonstrate how to use a custom search space for hyperparame

In the code snippet above, we create a YOLO model with the `yolov8n.pt` pretrained weights. Then, we call the `tune()` method, specifying the dataset configuration with `coco128.yaml`. We provide a custom search space for the initial learning rate `lr0` using a dictionary with the key `"lr0"` and the value `tune.uniform(1e-5, 1e-1)`. Finally, we pass additional training arguments, such as the number of epochs, directly to the `tune()` method as `epochs=50`.
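Ray Tune's `tune.uniform(low, high)` draws each trial's value uniformly from the given interval. The plain-Python sketch below mimics that idea with a simple random search over a search-space dictionary. It does not use Ray at all, and `random_search` and `toy_objective` are hypothetical stand-ins; the objective replaces what would really be a YOLO training run.

```python
import random


def toy_objective(config: dict) -> float:
    """Hypothetical stand-in for a training run's fitness score (higher is better)."""
    return -abs(config["lr0"] - 0.01)


def random_search(space: dict, objective, num_samples: int = 20, seed: int = 0):
    """Sample configs from `space`, score each with `objective`, and keep the best."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_config, best_score = None, float("-inf")
    for _ in range(num_samples):
        # Draw one value per hyperparameter from its sampler.
        config = {name: sample(rng) for name, sample in space.items()}
        score = objective(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Search space in the spirit of {"lr0": tune.uniform(1e-5, 1e-1)}:
# each key maps a hyperparameter name to a sampler function.
space = {"lr0": lambda rng: rng.uniform(1e-5, 1e-1)}

best, score = random_search(space, toy_objective, num_samples=30)
print(f"best lr0={best['lr0']:.5f} (score {score:.5f})")
```

One consequence worth checking: with a uniform range of [1e-5, 1e-1], roughly 99% of draws land above 1e-3, so small learning rates are rarely explored. Ray Tune also provides `tune.loguniform` for sampling evenly across orders of magnitude.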

## Processing Ray Tune Results

After running a hyperparameter tuning experiment with Ray Tune, you might want to perform various analyses on the obtained results. This guide will take you through common workflows for processing and analyzing these results.

### Loading Tune Experiment Results from a Directory

After running the tuning experiment with `tuner.fit()`, you can load the results from a directory. This is especially useful if you're performing the analysis after the initial training script has exited.

YOLOv8 is an AI framework that supports multiple computer vision **tasks**. The framework can be used to perform [detection](detect.md), [segmentation](segment.md), [classification](classify.md), and [pose](pose.md) estimation. Each of these tasks has a different objective and use case.