Add `instance segmentation` and `vision-eye mapping` in Docs + Fix minor code bug in other `real-world-projects` (#6972)

Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>
Co-authored-by: Glenn Jocher <glenn.jocher@ultralytics.com>

pull/7045/head^2

parent e9def85f1f
commit 34b10b2db3

10 changed files with 385 additions and 56 deletions

@@ -0,0 +1,127 @@

---
comments: true
description: Instance Segmentation with Object Tracking using Ultralytics YOLOv8
keywords: Ultralytics, YOLOv8, Instance Segmentation, Object Detection, Object Tracking, Segbbox, Computer Vision, Notebook, IPython Kernel, CLI, Python SDK
---

# Instance Segmentation and Tracking using Ultralytics YOLOv8 🚀

## What is Instance Segmentation?

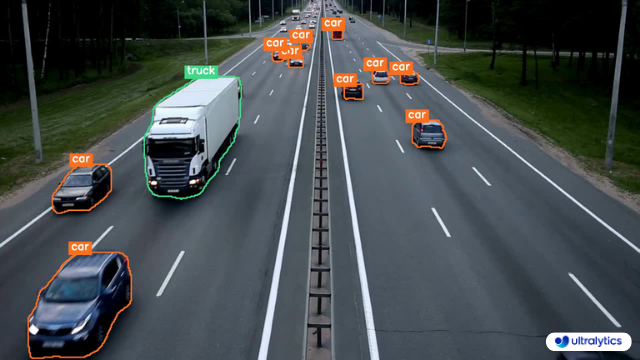

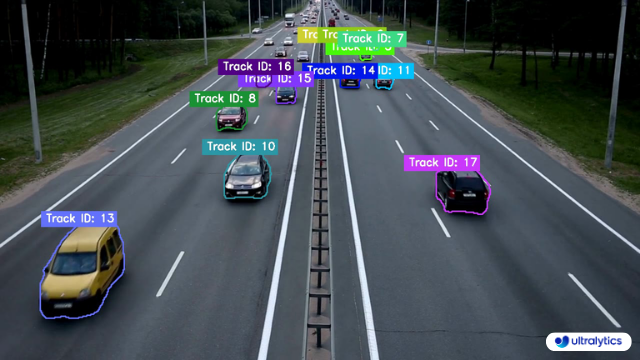

[Ultralytics YOLOv8](https://github.com/ultralytics/ultralytics/) instance segmentation identifies and outlines individual objects in an image, giving a detailed picture of how objects are distributed spatially. Unlike semantic segmentation, which assigns one label to every pixel of a class, instance segmentation labels and delineates each object separately, which is crucial for tasks like object detection and medical imaging.

Two types of instance segmentation are available with Ultralytics YOLOv8:

- **Instance Segmentation with Class Objects:** Each object class is assigned its own color for clear visual separation.
- **Instance Segmentation with Object Tracks:** Every track is represented by a distinct color, facilitating easy identification and tracking.
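The difference between the two modes comes down to which integer picks the color: the class index or the track ID. The sketch below illustrates that idea only. The `palette_color` helper and the four-color palette are hypothetical and not part of Ultralytics; the examples in this document use `colors` from `ultralytics.utils.plotting` instead.

```python
# Hypothetical palette and helper, for illustration only (not Ultralytics API).
PALETTE = [(255, 56, 56), (72, 249, 10), (0, 194, 255), (255, 0, 255)]


def palette_color(index: int) -> tuple:
    """Map an integer (class index or track ID) to a palette entry, wrapping around."""
    return PALETTE[index % len(PALETTE)]


# Two detections of the same class (index 0) that belong to different tracks.
detections = [
    {"cls": 0, "track_id": 1},
    {"cls": 0, "track_id": 2},
]

# Mode 1: color by class, so both objects get the same color.
by_class = [palette_color(d["cls"]) for d in detections]

# Mode 2: color by track, so each object gets its own color.
by_track = [palette_color(d["track_id"]) for d in detections]

print(by_class)
print(by_track)
```

Coloring by class makes categories easy to tell apart, while coloring by track makes it easier to follow one particular object from frame to frame.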

## Samples

| Instance Segmentation | Instance Segmentation + Object Tracking |
|:---------------------:|:---------------------------------------:|
| Ultralytics Instance Segmentation 😍 | Ultralytics Instance Segmentation with Object Tracking 🔥 |

!!! Example "Instance Segmentation and Tracking"

    === "Instance Segmentation"

        ```python
        import cv2

        from ultralytics import YOLO
        from ultralytics.utils.plotting import Annotator, colors

        model = YOLO("yolov8n-seg.pt")  # segmentation model
        names = model.model.names  # class index -> class name
        cap = cv2.VideoCapture("path/to/video/file.mp4")

        # Write annotated frames at the source frame size, 30 FPS
        out = cv2.VideoWriter(
            "instance-segmentation.avi",
            cv2.VideoWriter_fourcc(*"MJPG"),
            30,
            (int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)), int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))),
        )

        while True:
            ret, im0 = cap.read()
            if not ret:
                print("Video frame is empty or video processing has been successfully completed.")
                break

            results = model.predict(im0)
            annotator = Annotator(im0, line_width=2)

            # masks is None when nothing is detected in the frame
            if results[0].masks is not None:
                clss = results[0].boxes.cls.cpu().tolist()
                masks = results[0].masks.xy

                # One color per class
                for mask, cls in zip(masks, clss):
                    annotator.seg_bbox(mask=mask, mask_color=colors(int(cls), True), det_label=names[int(cls)])

            out.write(im0)
            cv2.imshow("instance-segmentation", im0)

            if cv2.waitKey(1) & 0xFF == ord("q"):
                break

        out.release()
        cap.release()
        cv2.destroyAllWindows()
        ```

    === "Instance Segmentation with Object Tracking"

        ```python
        from collections import defaultdict

        import cv2

        from ultralytics import YOLO
        from ultralytics.utils.plotting import Annotator, colors

        track_history = defaultdict(lambda: [])  # per-track store (not used further in this example)

        model = YOLO("yolov8n-seg.pt")  # segmentation model
        cap = cv2.VideoCapture("path/to/video/file.mp4")

        # Write annotated frames at the source frame size, 30 FPS
        out = cv2.VideoWriter(
            "instance-segmentation-object-tracking.avi",
            cv2.VideoWriter_fourcc(*"MJPG"),
            30,
            (int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)), int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))),
        )

        while True:
            ret, im0 = cap.read()
            if not ret:
                print("Video frame is empty or video processing has been successfully completed.")
                break

            results = model.track(im0, persist=True)
            annotator = Annotator(im0, line_width=2)

            # masks and track IDs are None when nothing is detected or tracked
            if results[0].masks is not None and results[0].boxes.id is not None:
                masks = results[0].masks.xy
                track_ids = results[0].boxes.id.int().cpu().tolist()

                # One color per track
                for mask, track_id in zip(masks, track_ids):
                    annotator.seg_bbox(mask=mask, mask_color=colors(track_id, True), track_label=str(track_id))

            out.write(im0)
            cv2.imshow("instance-segmentation-object-tracking", im0)

            if cv2.waitKey(1) & 0xFF == ord("q"):
                break

        out.release()
        cap.release()
        cv2.destroyAllWindows()
        ```
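Each entry of `results[0].masks.xy` is a polygon given as a sequence of (x, y) pixel coordinates. As a rough illustration of what can be derived from such a polygon, the sketch below computes its bounding box and area in plain Python. The helper names `polygon_bbox` and `polygon_area` and the sample square are hypothetical and not part of Ultralytics.

```python
def polygon_bbox(points):
    """Return (x_min, y_min, x_max, y_max) for a list of (x, y) points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return min(xs), min(ys), max(xs), max(ys)


def polygon_area(points):
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula."""
    total = 0.0
    # Pair each vertex with the next one, wrapping the last back to the first.
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


# Made-up 20 x 20 pixel square standing in for one mask polygon.
square = [(10.0, 10.0), (30.0, 10.0), (30.0, 30.0), (10.0, 30.0)]

print(polygon_bbox(square))  # (10.0, 10.0, 30.0, 30.0)
print(polygon_area(square))  # 400.0
```

The helpers expect a plain list of points; a NumPy array would need to be converted first, for example with `.tolist()`.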

### `seg_bbox` Arguments

| Name          | Type    | Default         | Description                            |
|---------------|---------|-----------------|----------------------------------------|
| `mask`        | `array` | `None`          | Segmentation mask coordinates          |
| `mask_color`  | `tuple` | `(255, 0, 255)` | Mask color for every segmented box     |
| `det_label`   | `str`   | `None`          | Label for segmented object             |
| `track_label` | `str`   | `None`          | Label for segmented and tracked object |

## Note

For any inquiries, feel free to post your questions in the [Ultralytics Issue Section](https://github.com/ultralytics/ultralytics/issues/new/choose) or the discussion section mentioned below.

@@ -0,0 +1,127 @@

---
comments: true
description: VisionEye View Object Mapping using Ultralytics YOLOv8
keywords: Ultralytics, YOLOv8, Object Detection, Object Tracking, IDetection, VisionEye, Computer Vision, Notebook, IPython Kernel, CLI, Python SDK
---

# VisionEye View Object Mapping using Ultralytics YOLOv8 🚀

## What is VisionEye Object Mapping?

[Ultralytics YOLOv8](https://github.com/ultralytics/ultralytics/) VisionEye lets computers identify and pinpoint objects, simulating the observational precision of the human eye. It enables a computer to discern and focus on specific objects, much as the human eye observes details from a particular viewpoint.

<p align="center">
  <br>
  <iframe width="720" height="405" src="https://www.youtube.com/embed/in6xF7KgF7Q"
    title="YouTube video player" frameborder="0"
    allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share"
    allowfullscreen>
  </iframe>
  <br>
  <strong>Watch:</strong> VisionEye Mapping using Ultralytics YOLOv8
</p>

## Samples

| VisionEye View | VisionEye View With Object Tracking |
|:--------------:|:-----------------------------------:|
| VisionEye View Object Mapping using Ultralytics YOLOv8 | VisionEye View Object Mapping with Object Tracking using Ultralytics YOLOv8 |

!!! Example "VisionEye Object Mapping using YOLOv8"

    === "VisionEye Object Mapping"

        ```python
        import cv2

        from ultralytics import YOLO
        from ultralytics.utils.plotting import Annotator, colors

        model = YOLO("yolov8n.pt")  # detection model
        names = model.model.names  # class index -> class name
        cap = cv2.VideoCapture("path/to/video/file.mp4")

        # Write annotated frames at the source frame size, 30 FPS
        out = cv2.VideoWriter(
            "visioneye-pinpoint.avi",
            cv2.VideoWriter_fourcc(*"MJPG"),
            30,
            (int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)), int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))),
        )

        # VisionEye pinpoint: 10 px left of the frame, at the bottom edge
        center_point = (-10, int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)))

        while True:
            ret, im0 = cap.read()
            if not ret:
                print("Video frame is empty or video processing has been successfully completed.")
                break

            results = model.predict(im0)
            boxes = results[0].boxes.xyxy.cpu()
            clss = results[0].boxes.cls.cpu().tolist()

            annotator = Annotator(im0, line_width=2)

            # Label each box by class, then link it to the pinpoint
            for box, cls in zip(boxes, clss):
                annotator.box_label(box, label=names[int(cls)], color=colors(int(cls)))
                annotator.visioneye(box, center_point)

            out.write(im0)
            cv2.imshow("visioneye-pinpoint", im0)

            if cv2.waitKey(1) & 0xFF == ord("q"):
                break

        out.release()
        cap.release()
        cv2.destroyAllWindows()
        ```

    === "VisionEye Object Mapping with Object Tracking"

        ```python
        import cv2

        from ultralytics import YOLO
        from ultralytics.utils.plotting import Annotator, colors

        model = YOLO("yolov8n.pt")  # detection model
        cap = cv2.VideoCapture("path/to/video/file.mp4")

        # Write annotated frames at the source frame size, 30 FPS
        out = cv2.VideoWriter(
            "visioneye-pinpoint.avi",
            cv2.VideoWriter_fourcc(*"MJPG"),
            30,
            (int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)), int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))),
        )

        # VisionEye pinpoint: 10 px left of the frame, at the bottom edge
        center_point = (-10, int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)))

        while True:
            ret, im0 = cap.read()
            if not ret:
                print("Video frame is empty or video processing has been successfully completed.")
                break

            results = model.track(im0, persist=True)
            annotator = Annotator(im0, line_width=2)

            # boxes.id is None when no object is being tracked in the frame
            if results[0].boxes.id is not None:
                boxes = results[0].boxes.xyxy.cpu()
                track_ids = results[0].boxes.id.int().cpu().tolist()

                # Label each box by track ID, then link it to the pinpoint
                for box, track_id in zip(boxes, track_ids):
                    annotator.box_label(box, label=str(track_id), color=colors(int(track_id)))
                    annotator.visioneye(box, center_point)

            out.write(im0)
            cv2.imshow("visioneye-pinpoint", im0)

            if cv2.waitKey(1) & 0xFF == ord("q"):
                break

        out.release()
        cap.release()
        cv2.destroyAllWindows()
        ```

### `visioneye` Arguments

| Name          | Type    | Default          | Description                                      |
|---------------|---------|------------------|--------------------------------------------------|
| `color`       | `tuple` | `(235, 219, 11)` | Line and object centroid color                   |
| `pin_color`   | `tuple` | `(255, 0, 255)`  | VisionEye pinpoint color                         |
| `thickness`   | `int`   | `2`              | Pinpoint-to-object line thickness                |
| `pins_radius` | `int`   | `10`             | Pinpoint and object centroid point circle radius |
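Going by the argument descriptions above, the drawing links a fixed pinpoint to the centroid of each detected box. The sketch below shows that geometry only, assuming boxes in `(x1, y1, x2, y2)` format as in the examples. The helpers `box_centroid` and `pin_distance` and the frame height of 480 are hypothetical and not part of Ultralytics.

```python
import math


def box_centroid(box):
    """Integer pixel centroid of an (x1, y1, x2, y2) box."""
    x1, y1, x2, y2 = box
    return int((x1 + x2) / 2), int((y1 + y2) / 2)


def pin_distance(box, center_point):
    """Euclidean distance in pixels from the pinpoint to the box centroid."""
    cx, cy = box_centroid(box)
    return math.hypot(cx - center_point[0], cy - center_point[1])


frame_height = 480  # hypothetical frame height
center_point = (-10, frame_height)  # same placement rule as in the examples
box = (100, 200, 300, 400)

print(box_centroid(box))  # (200, 300)
print(round(pin_distance(box, center_point), 1))
```

A distance like this could be used, for example, to sort objects by how close they are to the viewpoint; that use is a suggestion and not something the original examples do.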

## Note

For any inquiries, feel free to post your questions in the [Ultralytics Issue Section](https://github.com/ultralytics/ultralytics/issues/new/choose) or the discussion section mentioned below.
