---
comments: true
description: Efficiently manage and compare AI models with Ultralytics HUB Projects. Create, share, and edit projects for streamlined model development.
keywords: Ultralytics HUB projects, model management, model comparison, create project, share project, edit project, delete project, compare models
---


# Ultralytics HUB Projects

Ultralytics HUB projects let you consolidate and manage your models. If you are working with several models that perform similar tasks or have related purposes, you can group them together in a project.

Grouping models gives you a single, organized workspace for managing, comparing, and developing them. With similar models or successive iterations side by side, you can benchmark them quickly and refine them faster.

## Create Project |

|

|

|

|

|

|

|

|

|

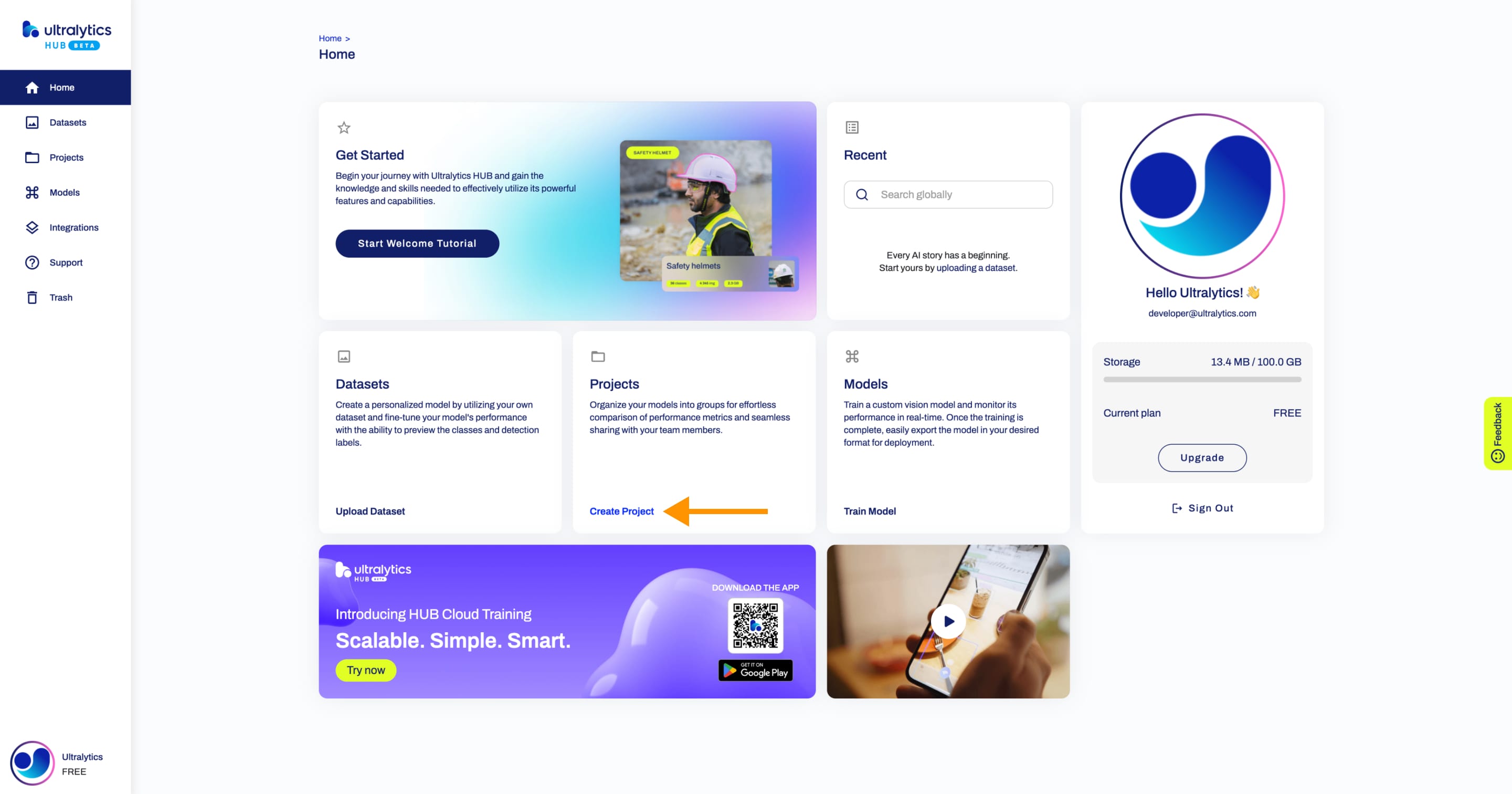

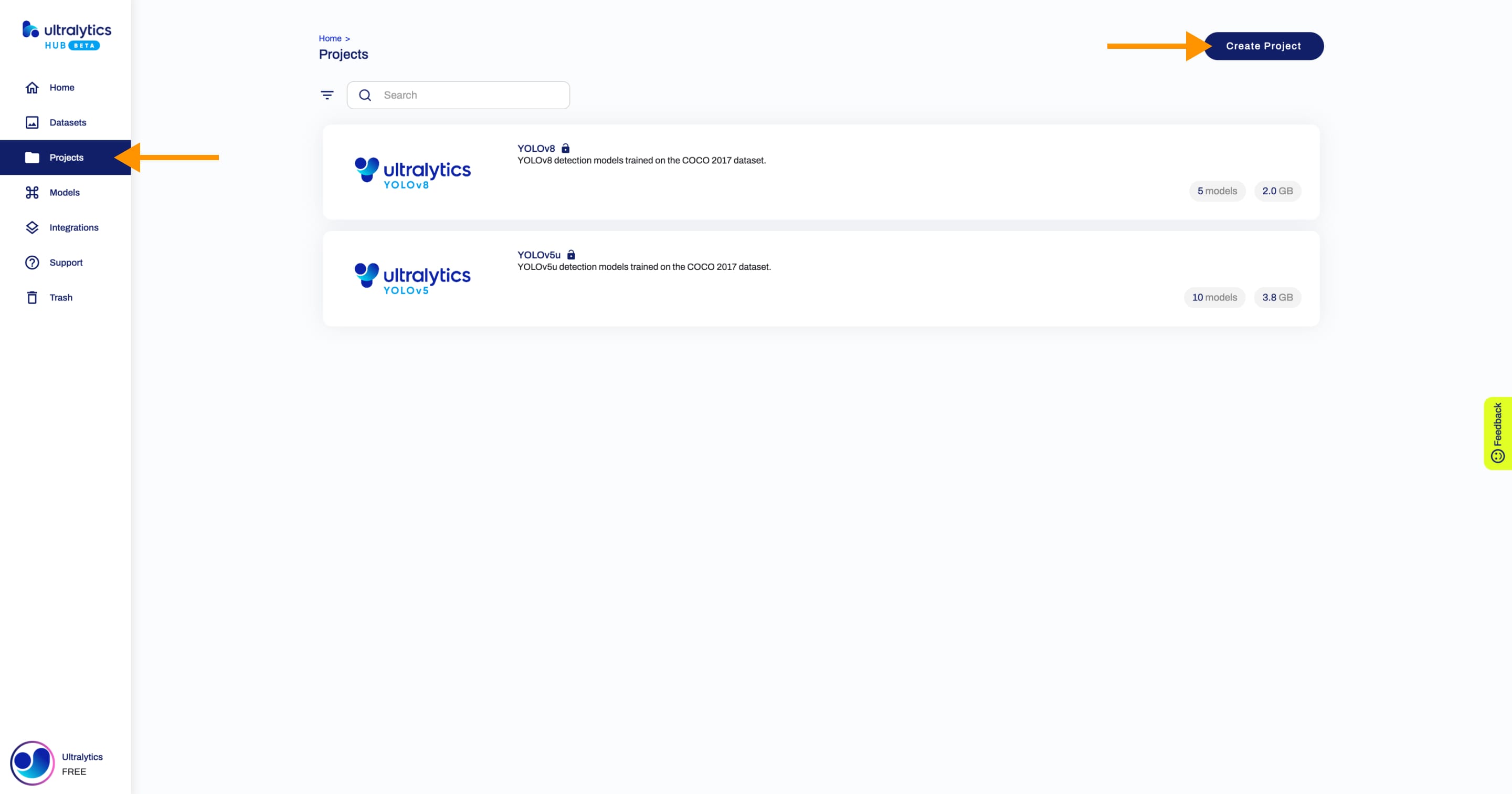

Navigate to the [Projects](https://hub.ultralytics.com/projects) page by clicking on the **Projects** button in the sidebar. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

??? tip "Tip"

    You can also create a project directly from the [Home](https://hub.ultralytics.com/home) page.

Click on the **Create Project** button at the top right of the page. This opens the **Create Project** dialog, where you can configure your project.

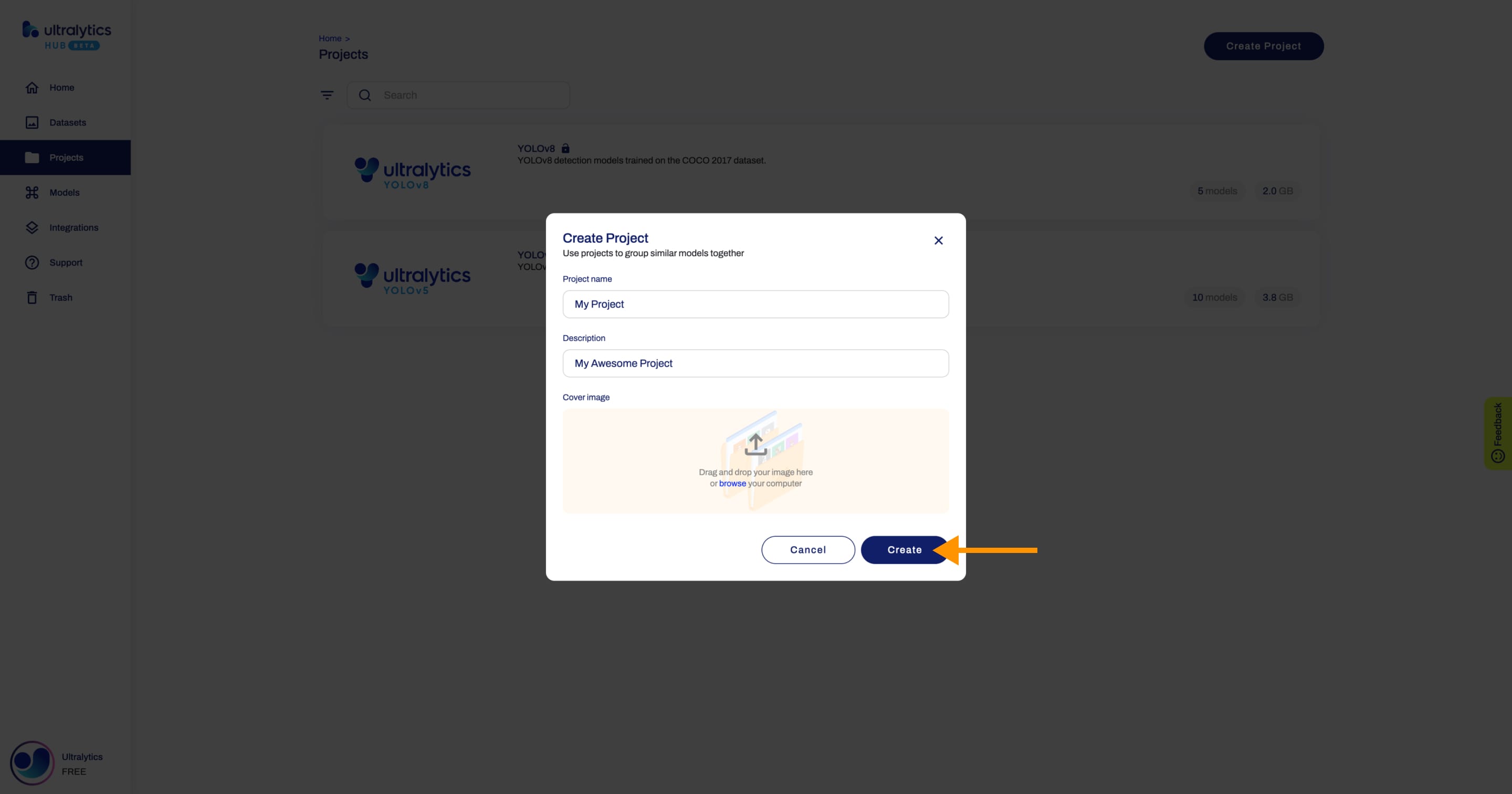

Type the name of your project in the *Project name* field, or keep the default name and finalize the project creation with a single click.

Optionally, add a description and a unique image to make the project easier to recognize on the Projects page.

When you're happy with your project configuration, click **Create**.

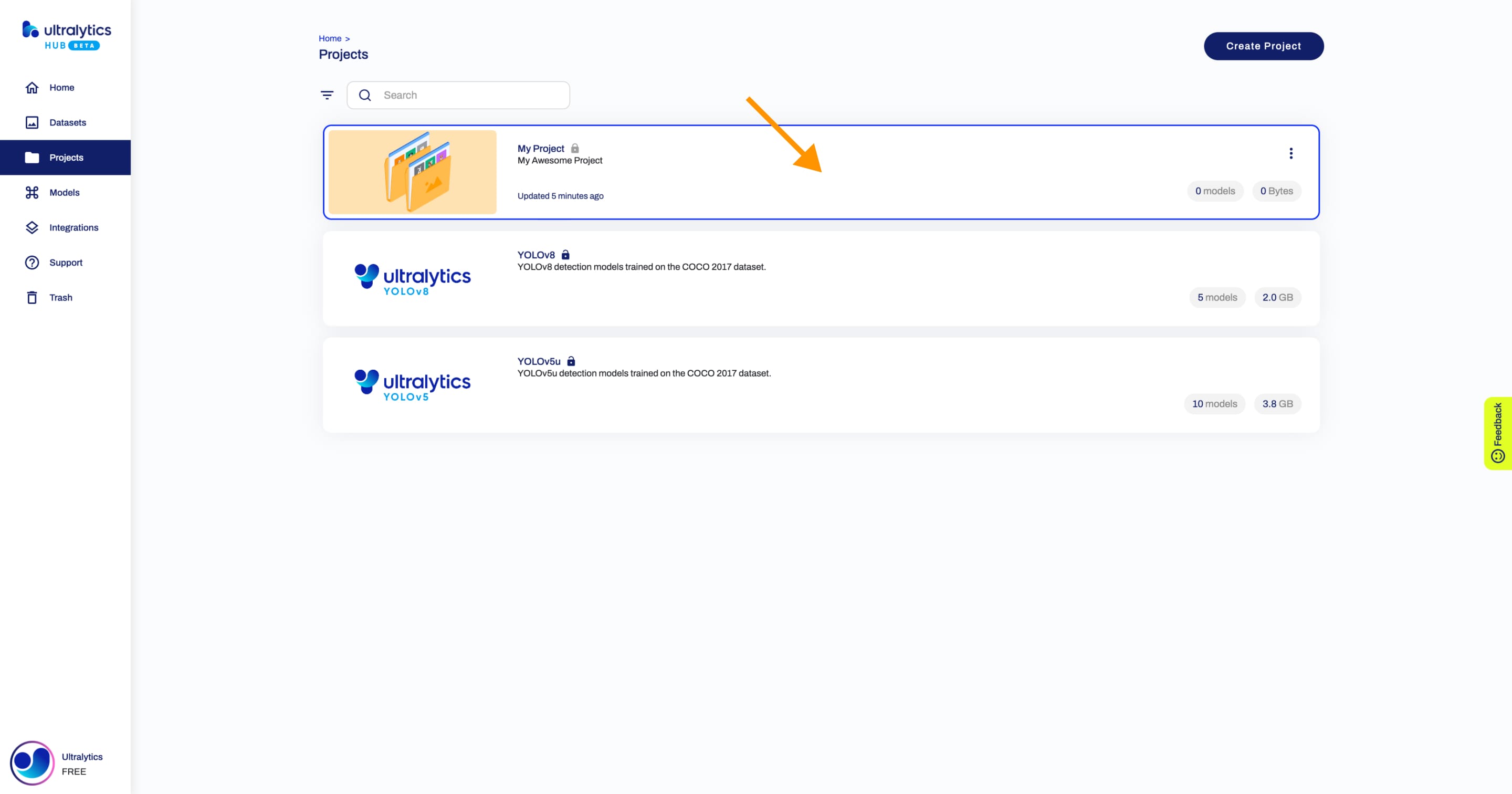

After your project is created, you will be able to access it from the Projects page.

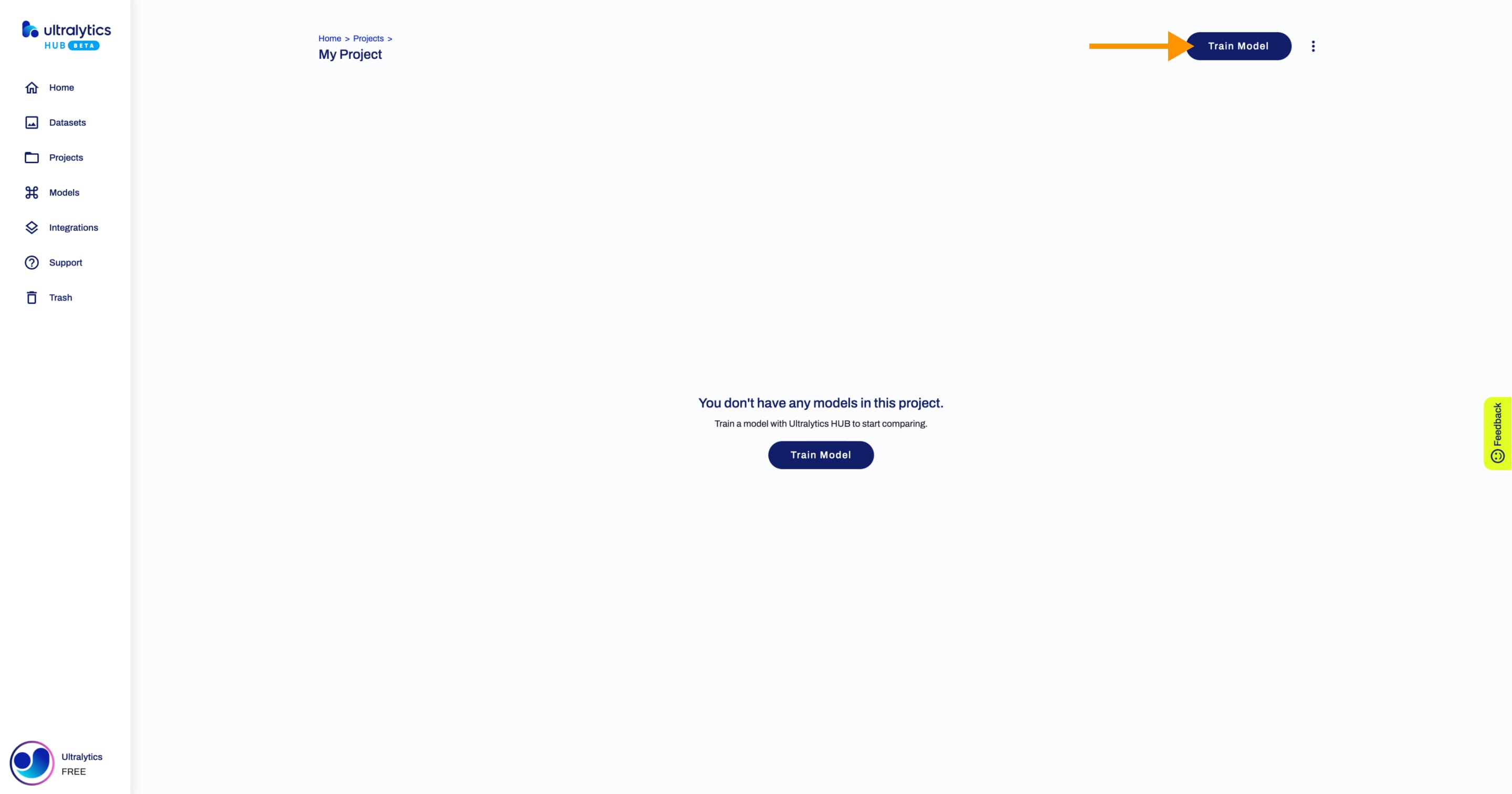

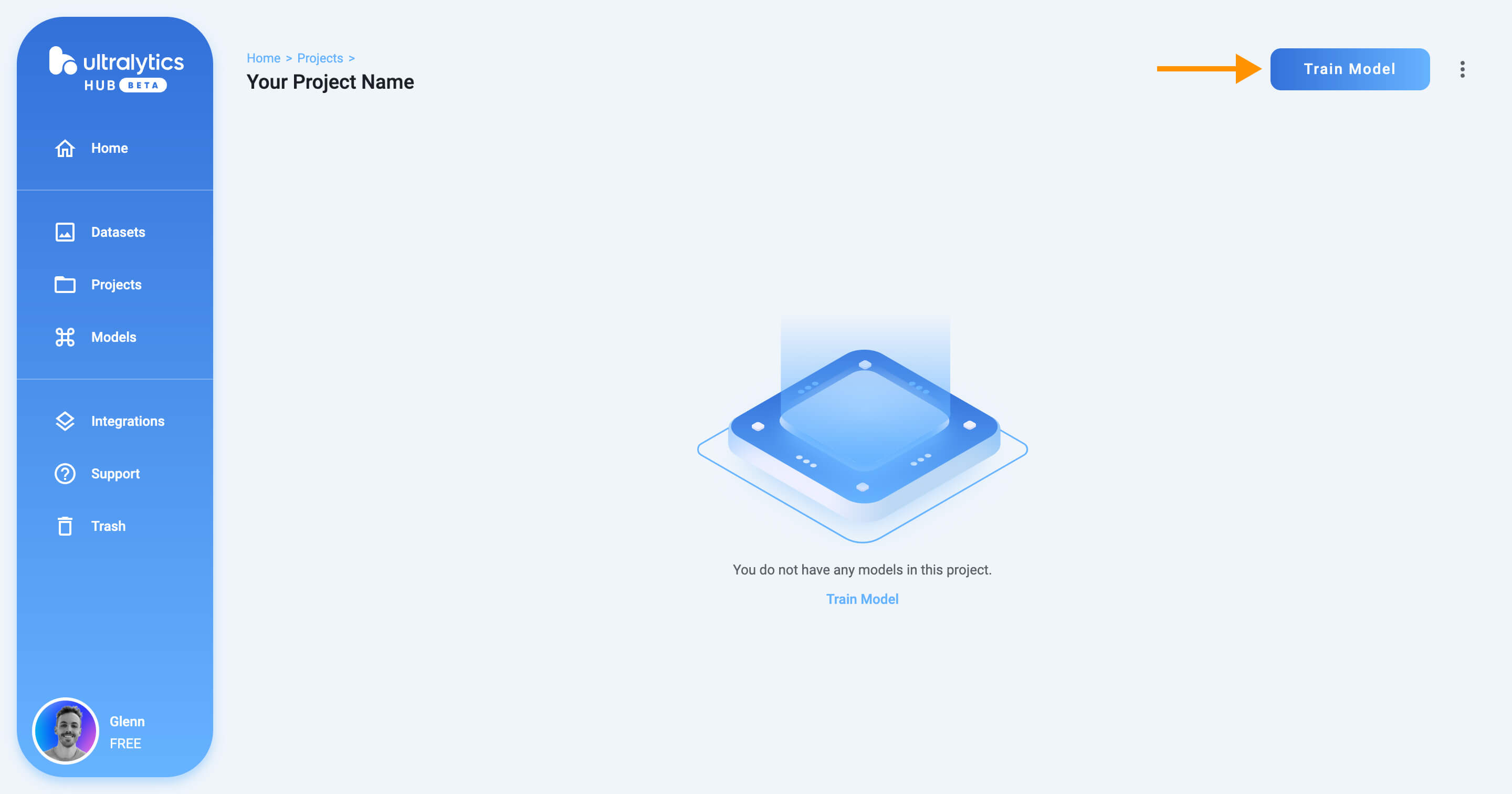

Next, [create a model](./models.md) inside your project.

## Share Project

!!! info "Info"

    Ultralytics HUB's sharing functionality provides a convenient way to share projects with others. This feature is designed to accommodate both existing Ultralytics HUB users and those who have yet to create an account.

??? note "Note"

    You have control over the general access of your projects.

    Set the general access to "Private" so that only you can access the project, or to "Unlisted" to grant viewing access to anyone who has the direct link to the project, whether or not they have an Ultralytics HUB account.

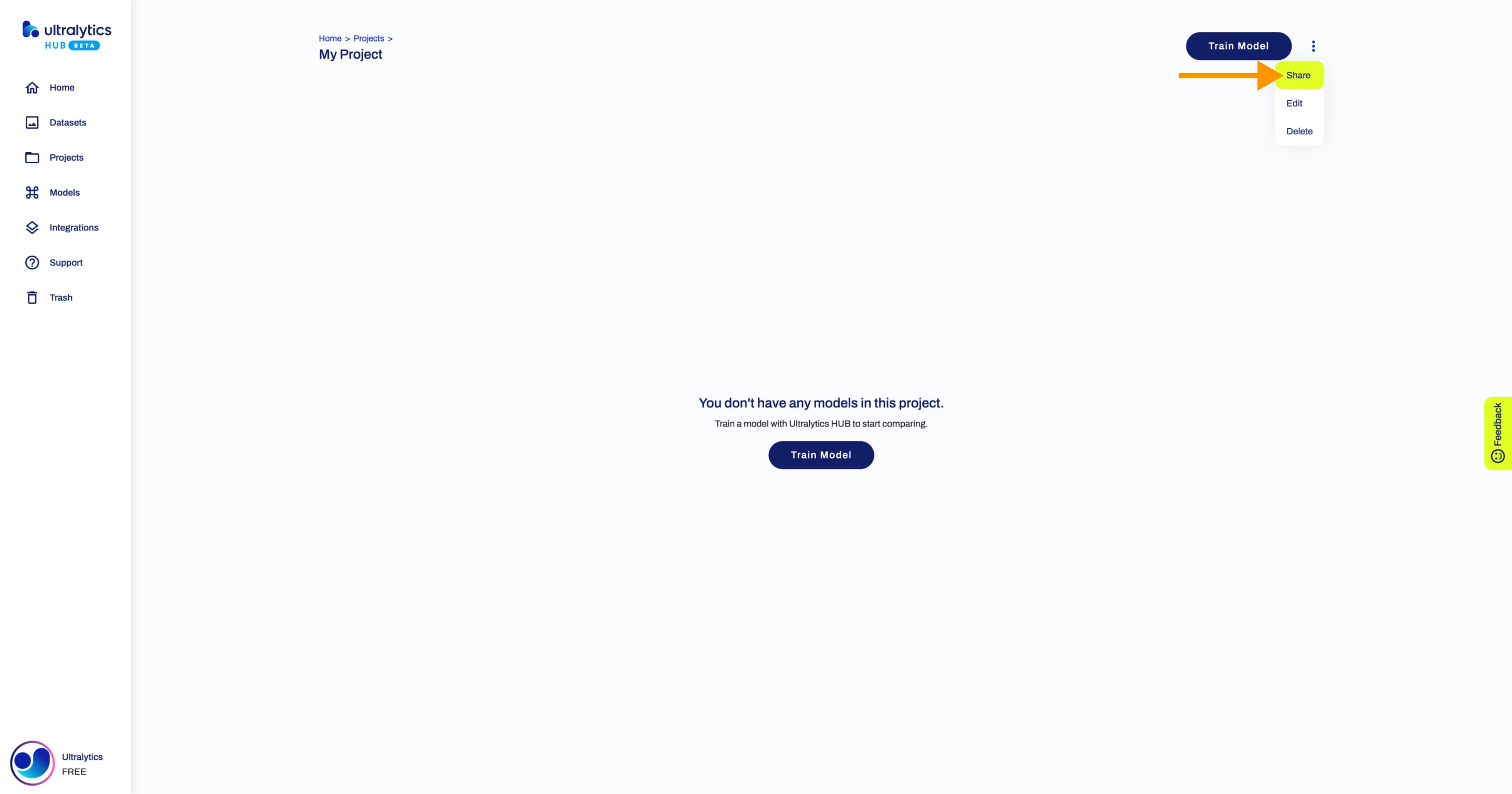

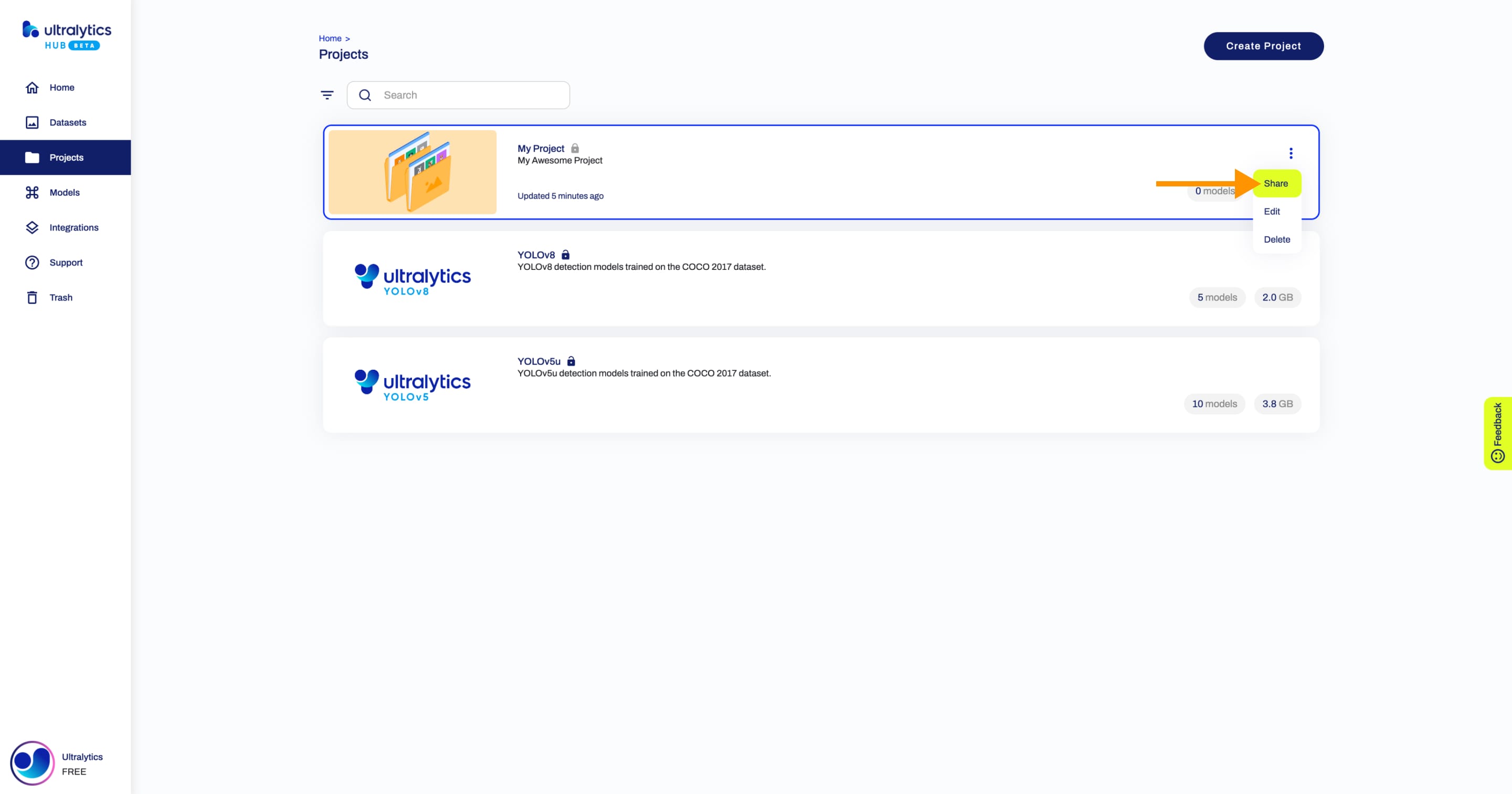

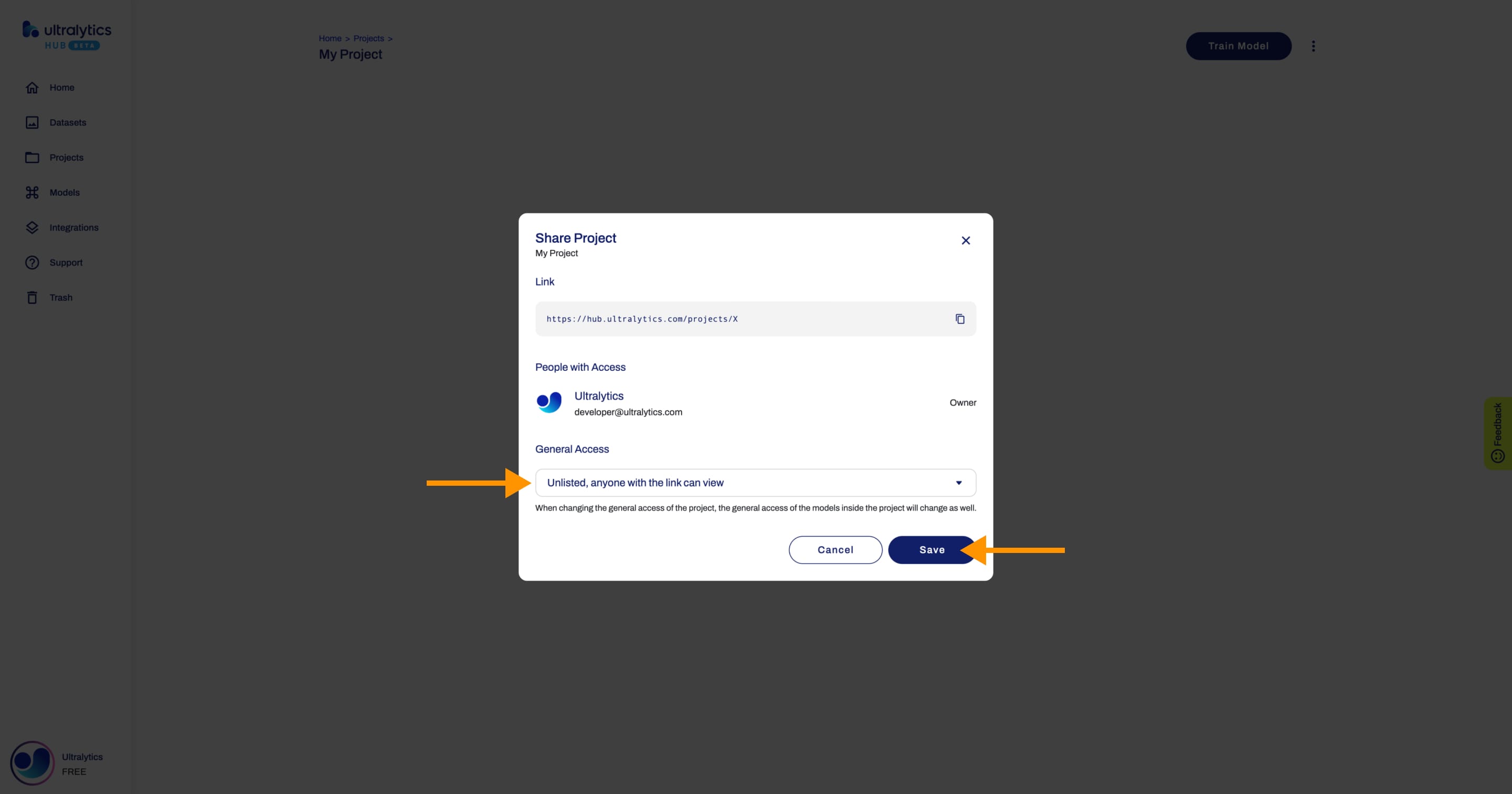

Navigate to the Project page of the project you want to share, open the project actions dropdown, and click on the **Share** option. This action will trigger the **Share Project** dialog.

??? tip "Tip"

    You can also share a project directly from the [Projects](https://hub.ultralytics.com/projects) page.

Set the general access to "Unlisted" and click **Save**.

!!! warning "Warning"

    When changing the general access of a project, the general access of the models inside the project will be changed as well.

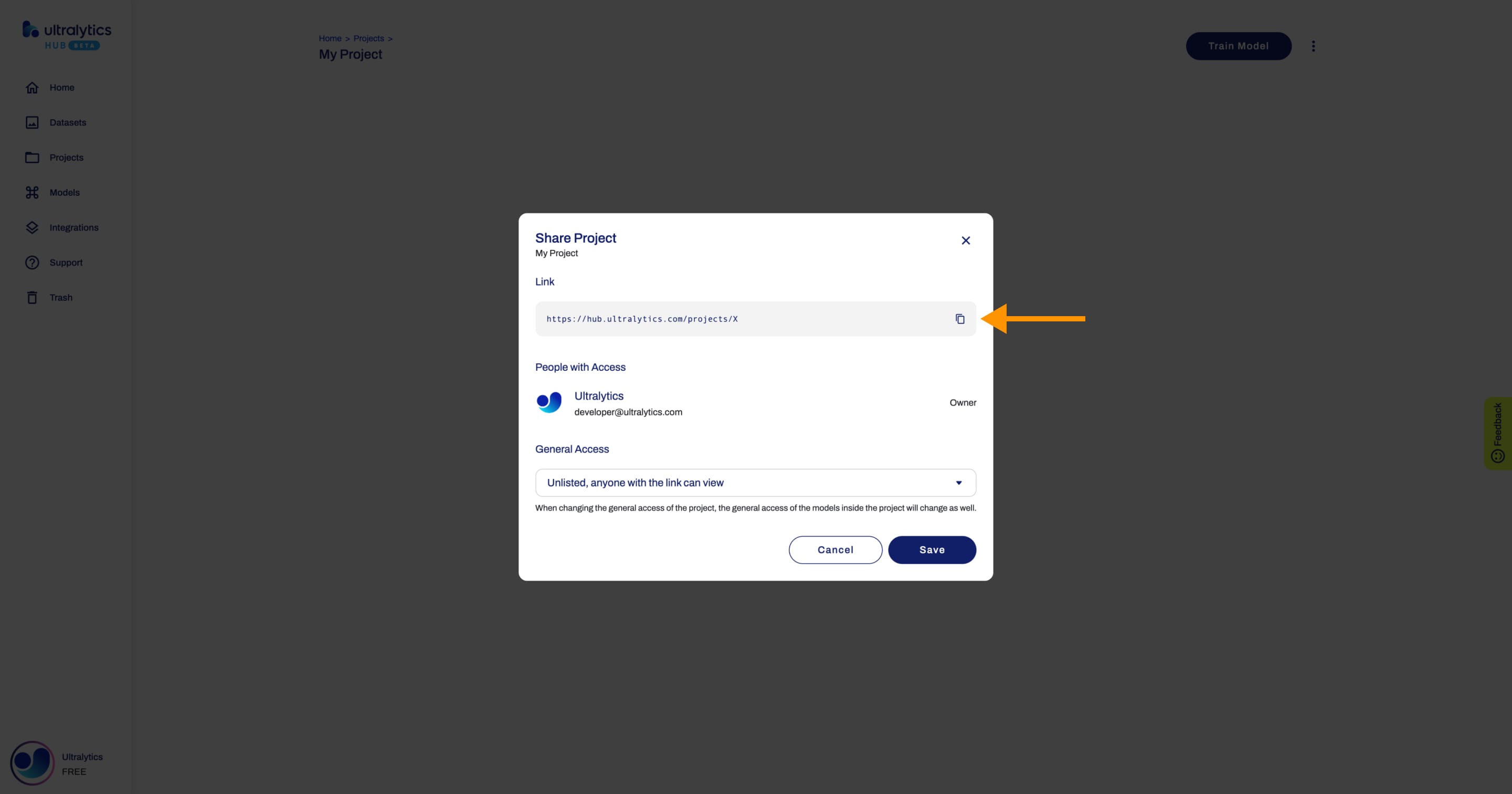

Now, anyone who has the direct link to your project can view it.

??? tip "Tip"

    Click on the project's link shown in the **Share Project** dialog to copy it.
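
The access rules described in this section can be summarized in a small sketch. This is not the Ultralytics HUB API: `Access`, `Model`, `Project`, `set_access`, and `can_view` are hypothetical names, used only to illustrate that changing a project's general access also changes the access of its models, and that an "Unlisted" project is viewable by anyone with the direct link.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Access(Enum):
    PRIVATE = "Private"    # only the owner can view
    UNLISTED = "Unlisted"  # anyone with the direct link can view


@dataclass
class Model:  # hypothetical stand-in for a HUB model
    name: str
    access: Access = Access.PRIVATE


@dataclass
class Project:  # hypothetical stand-in for a HUB project
    name: str
    owner: str
    access: Access = Access.PRIVATE
    models: list[Model] = field(default_factory=list)

    def set_access(self, access: Access) -> None:
        """Change the project's general access and propagate it to its models."""
        self.access = access
        for model in self.models:
            model.access = access

    def can_view(self, user: str | None, has_link: bool = False) -> bool:
        """The owner can always view; anyone else needs the link to an unlisted project."""
        if user == self.owner:
            return True
        return has_link and self.access is Access.UNLISTED


project = Project("demo", owner="alice", models=[Model("model-a"), Model("model-b")])
project.set_access(Access.UNLISTED)
```

In this sketch, a visitor without an account is modeled as `user=None`; with the link they can view the unlisted project, and setting the access back to `Access.PRIVATE` locks out everyone except the owner.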


## Edit Project |

|

|

|

|

|

|

|

|

|

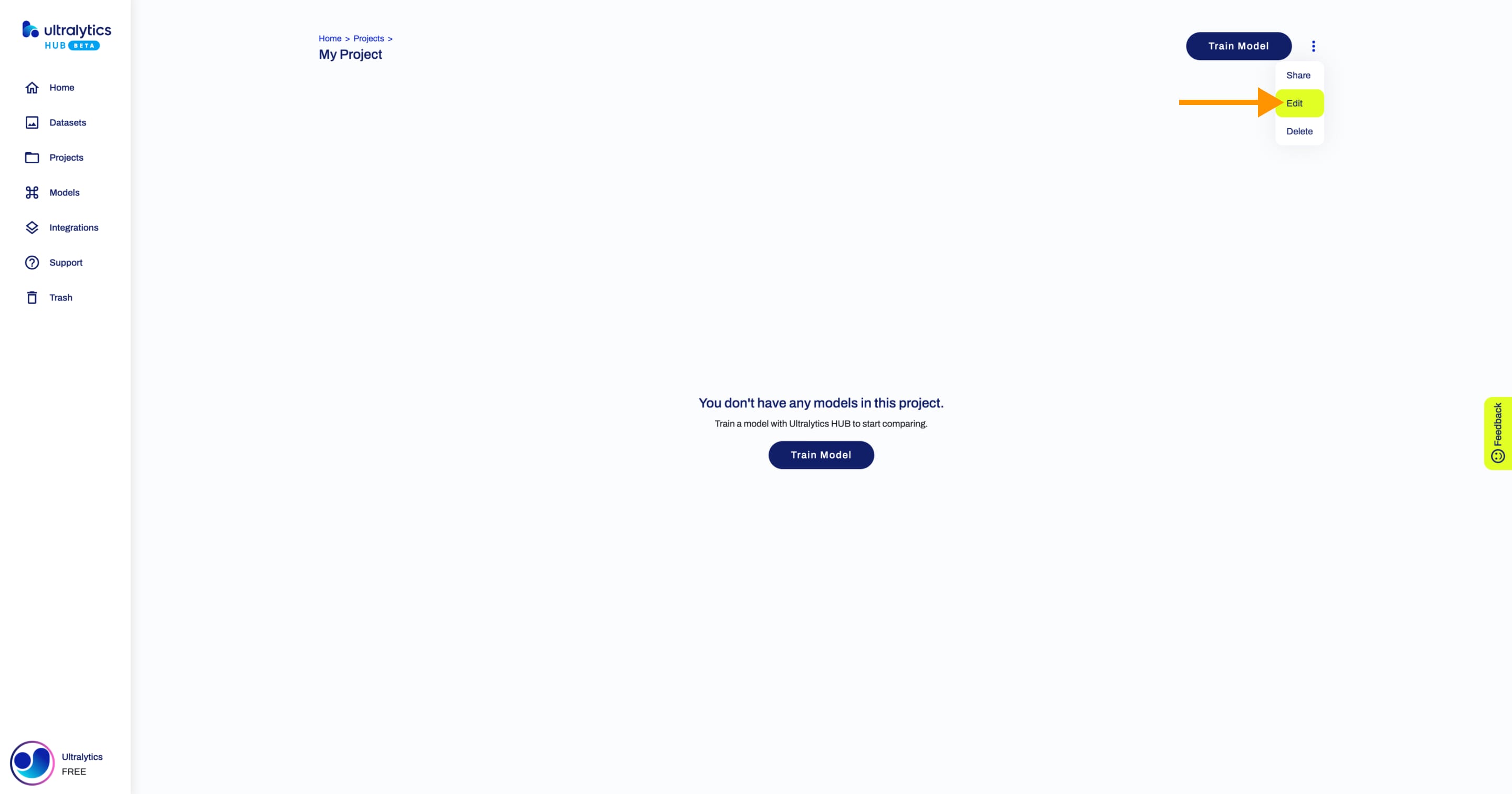

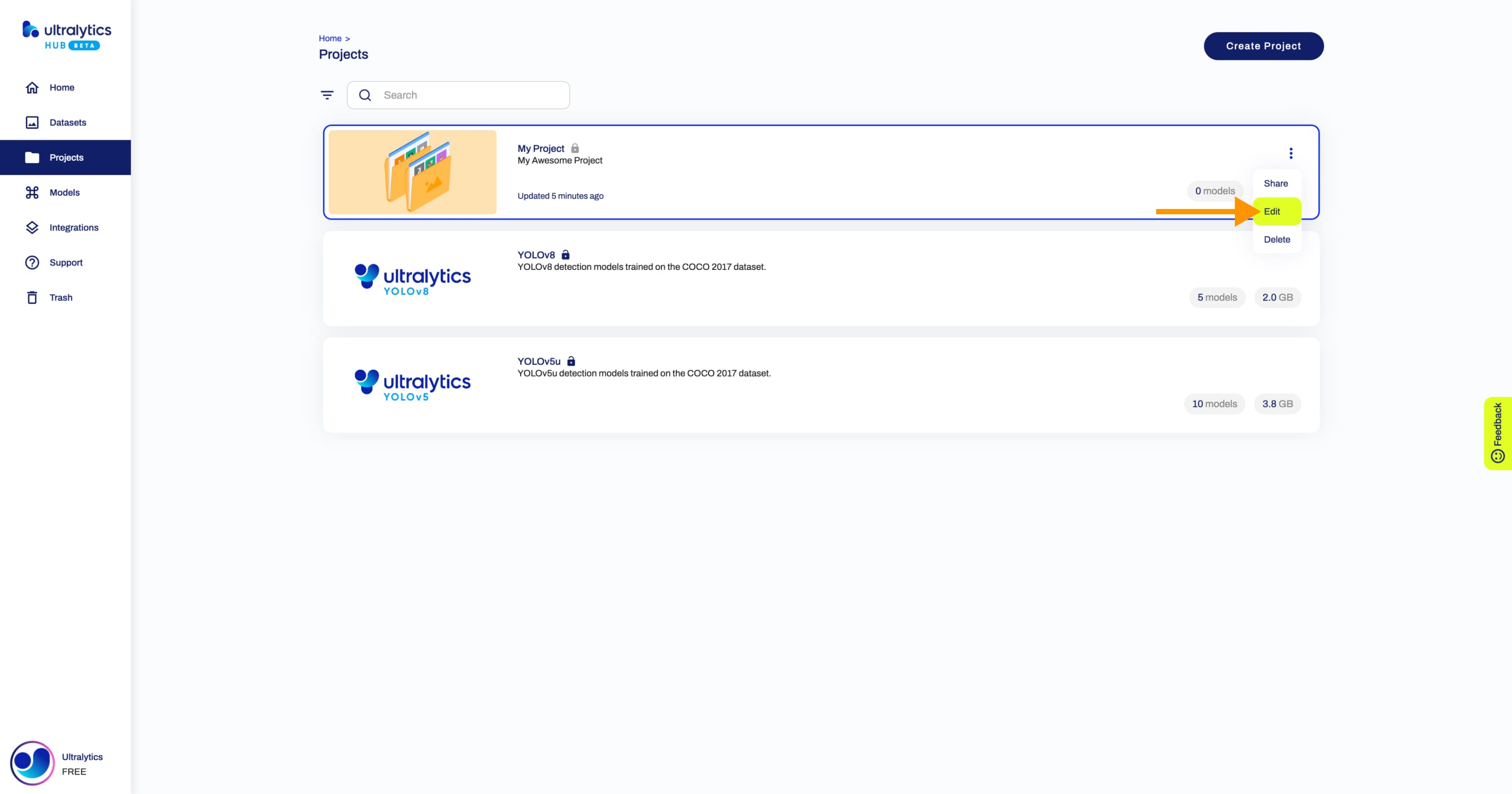

Navigate to the Project page of the project you want to edit, open the project actions dropdown and click on the **Edit** option. This action will trigger the **Update Project** dialog. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

??? tip "Tip"

    You can also edit a project directly from the [Projects](https://hub.ultralytics.com/projects) page.

Apply the desired modifications to your project and then confirm the changes by clicking **Save**.

## Delete Project |

|

|

|

|

|

|

|

|

|

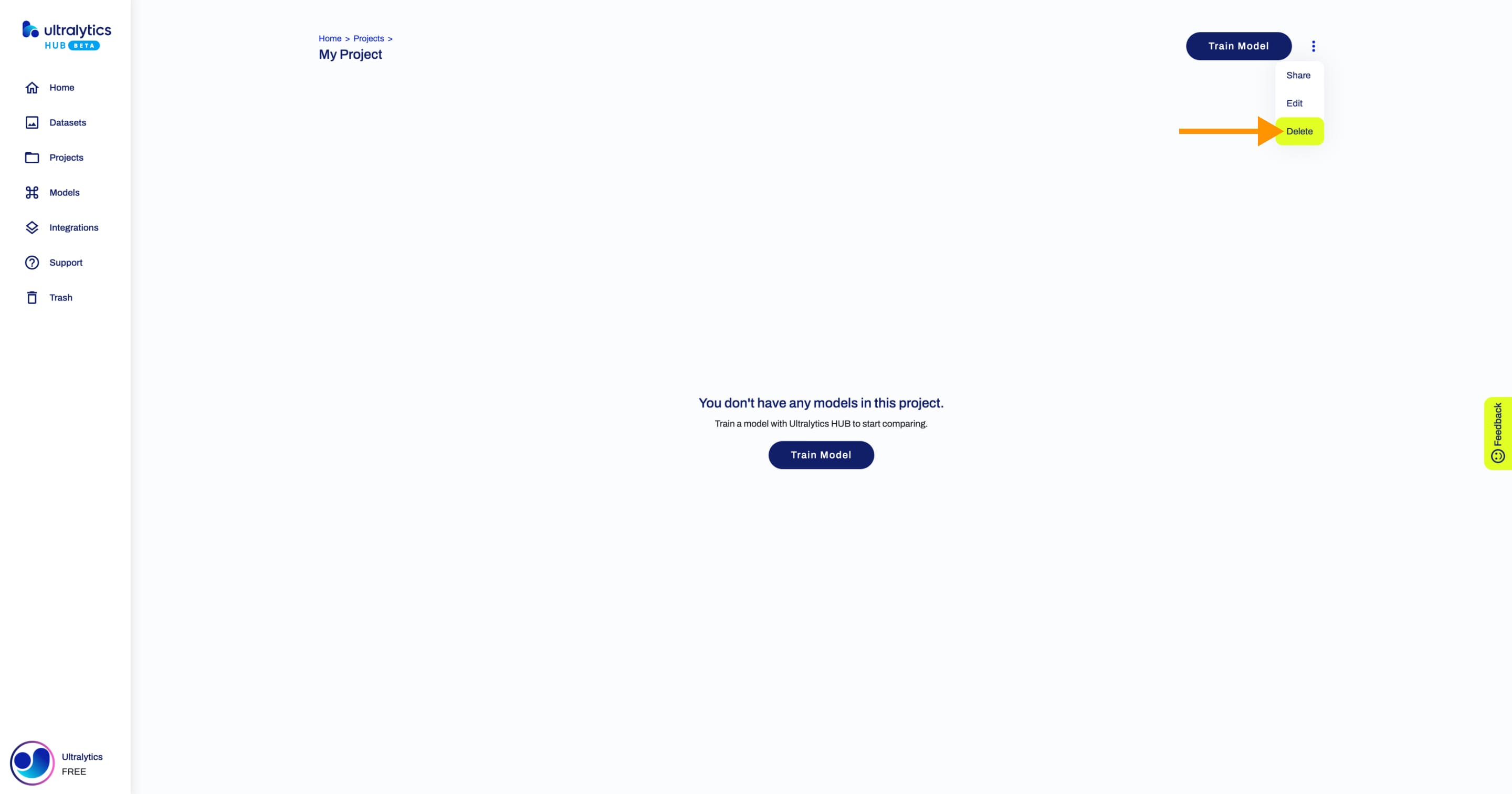

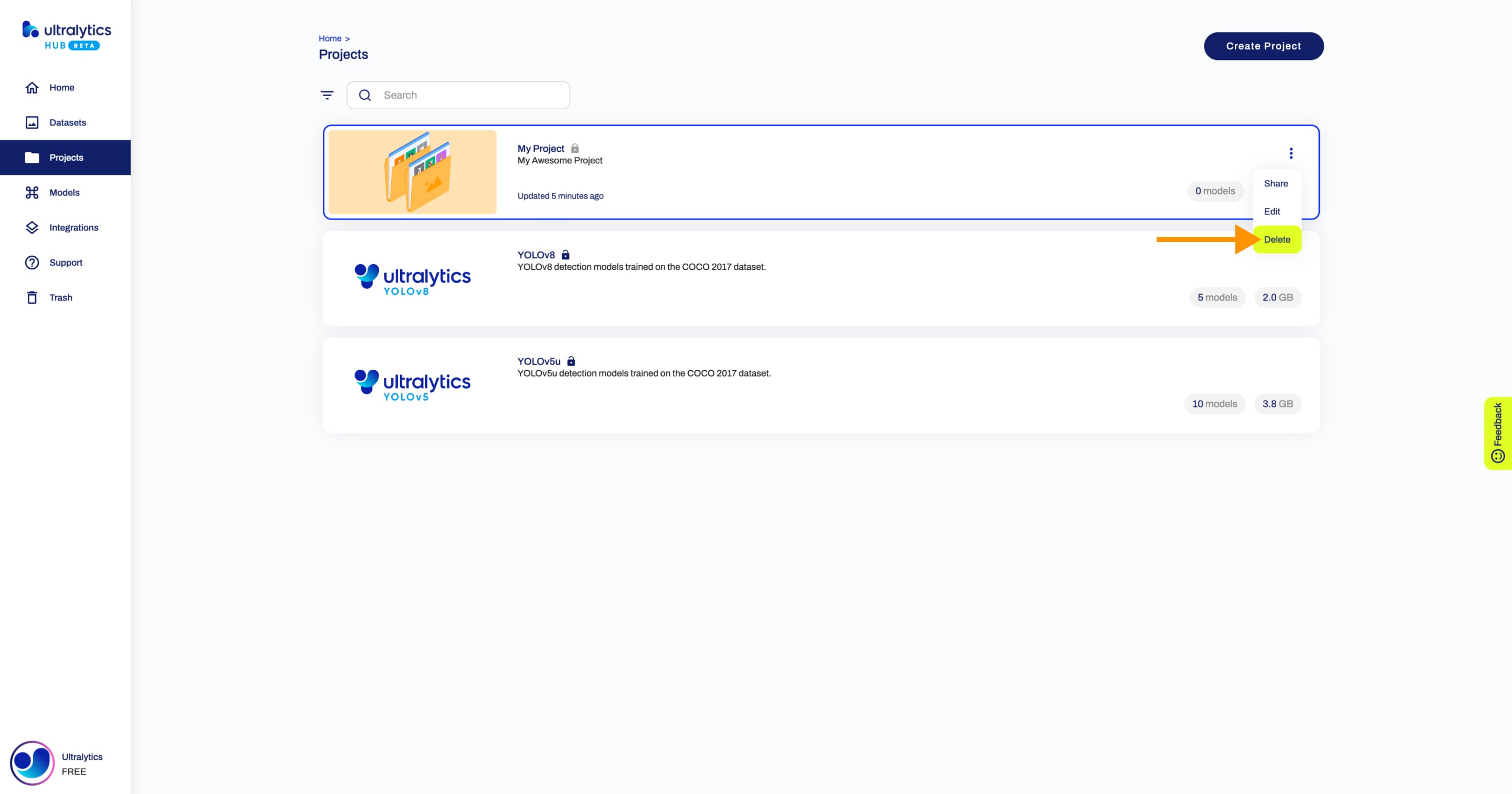

Navigate to the Project page of the project you want to delete, open the project actions dropdown and click on the **Delete** option. This action will delete the project. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

??? tip "Tip"

    You can also delete a project directly from the [Projects](https://hub.ultralytics.com/projects) page.

!!! warning "Warning"

    When deleting a project, the models inside the project will be deleted as well.

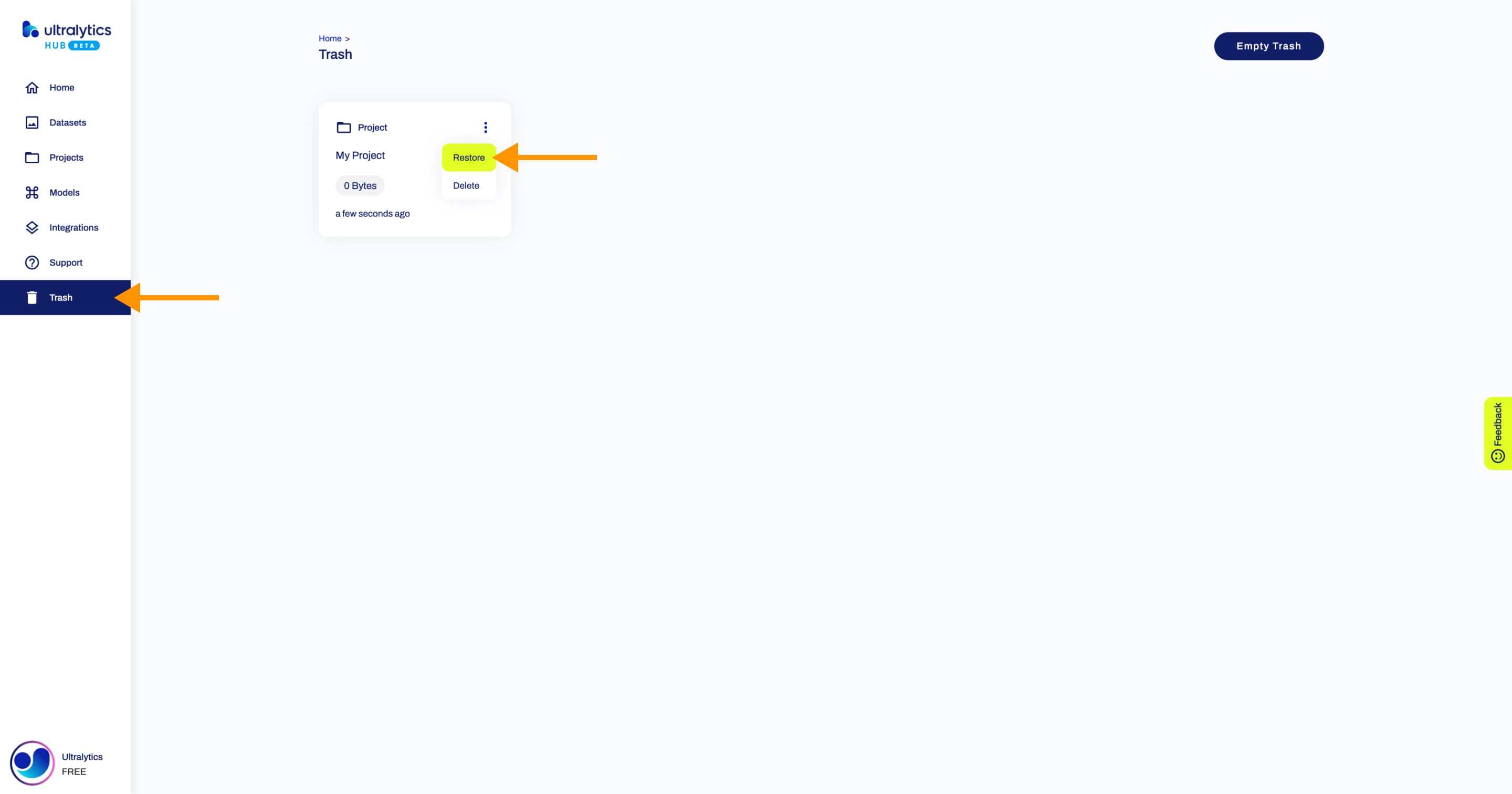

??? note "Note"

    If you change your mind, you can restore the project from the [Trash](https://hub.ultralytics.com/trash) page.
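
The delete and restore behavior can be pictured with a minimal sketch. It is not the Ultralytics HUB API: `Project`, `Workspace`, `delete_project`, and `restore_project` are hypothetical names, and the sketch assumes that restoring a project from the trash also brings back the models it contained.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Project:  # hypothetical stand-in for a HUB project
    name: str
    models: list[str] = field(default_factory=list)


@dataclass
class Workspace:  # hypothetical container for active and trashed projects
    projects: dict[str, Project] = field(default_factory=dict)
    trash: dict[str, Project] = field(default_factory=dict)

    def delete_project(self, name: str) -> None:
        """Move a project, together with its models, to the trash."""
        self.trash[name] = self.projects.pop(name)

    def restore_project(self, name: str) -> None:
        """Bring a trashed project (and, by assumption, its models) back."""
        self.projects[name] = self.trash.pop(name)


workspace = Workspace(projects={"demo": Project("demo", ["model-a", "model-b"])})
workspace.delete_project("demo")
deleted = "demo" not in workspace.projects and "demo" in workspace.trash
workspace.restore_project("demo")
```

Because the models live inside the project object here, deleting the project removes them from the active workspace in one step, which mirrors the warning above.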


## Compare Models |

|

|

|

|

|

|

|

|

|

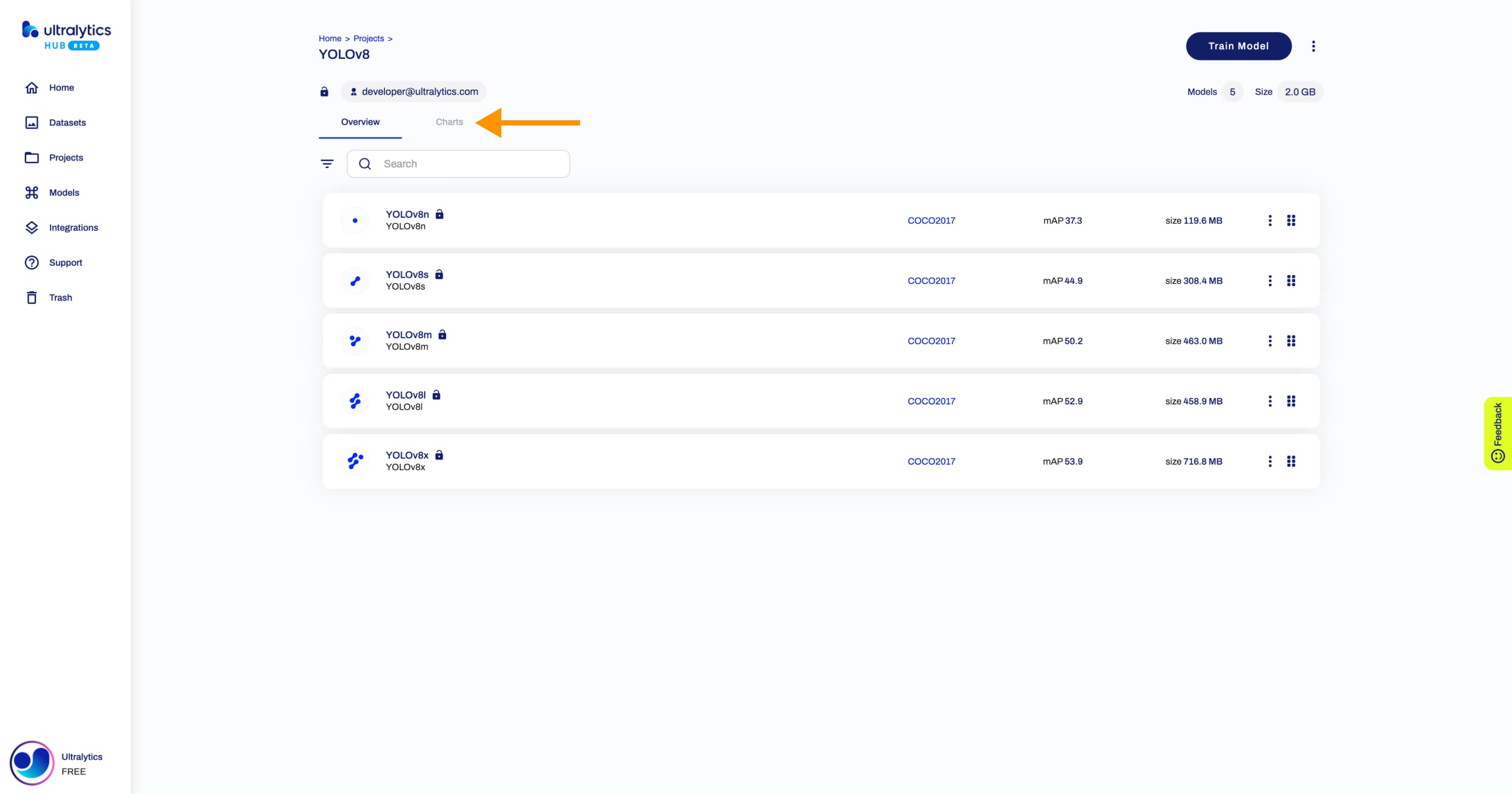

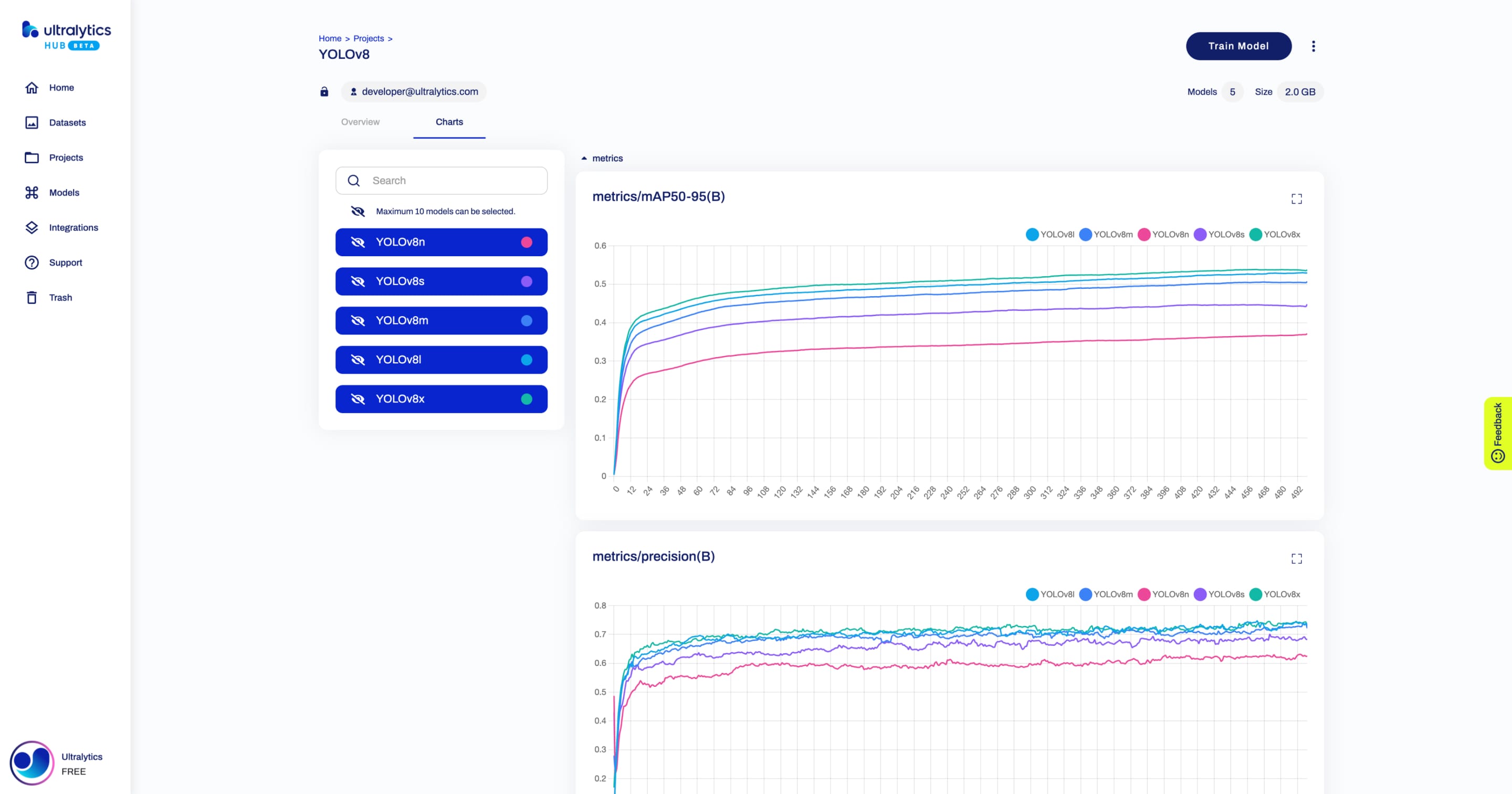

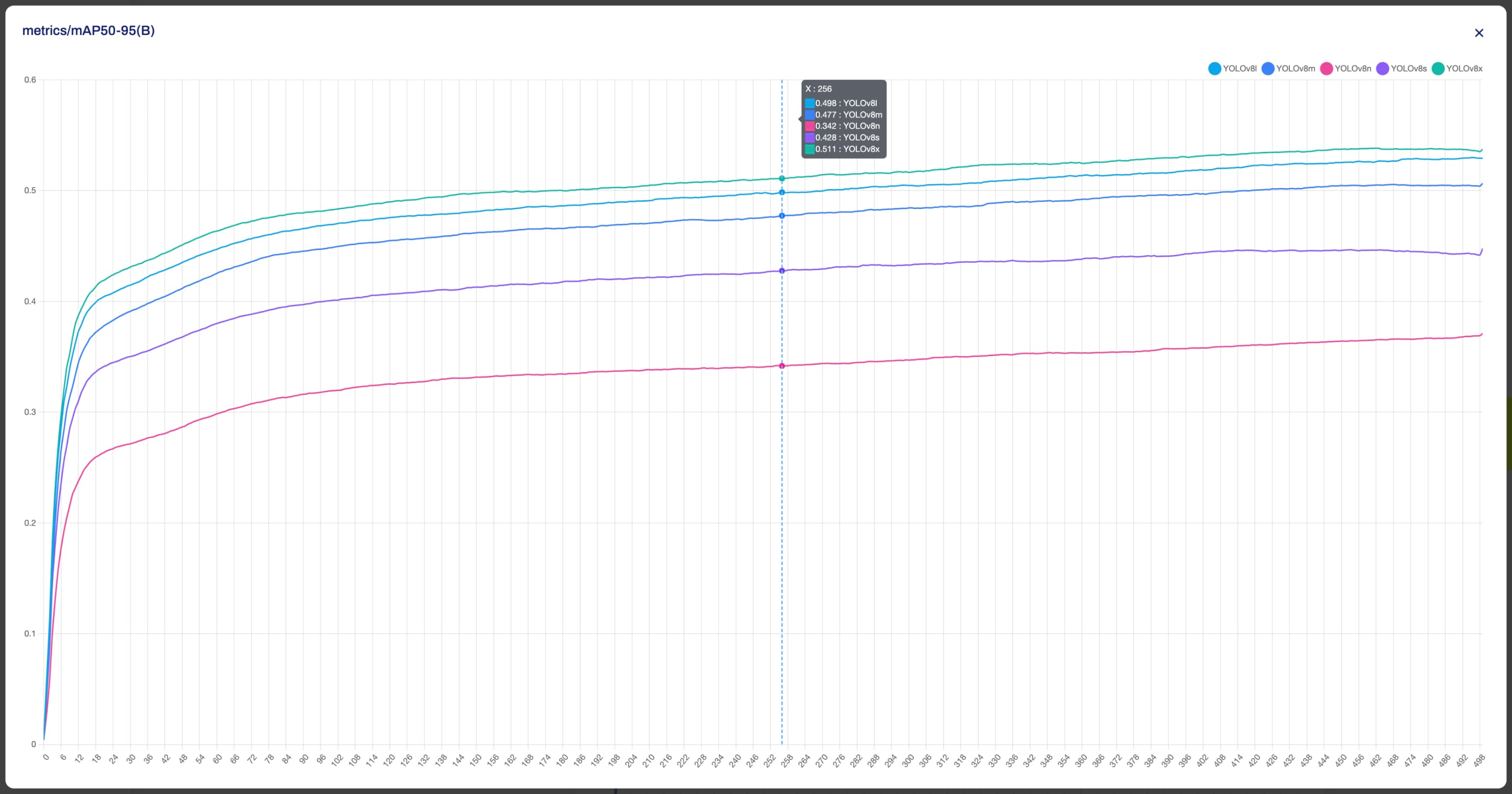

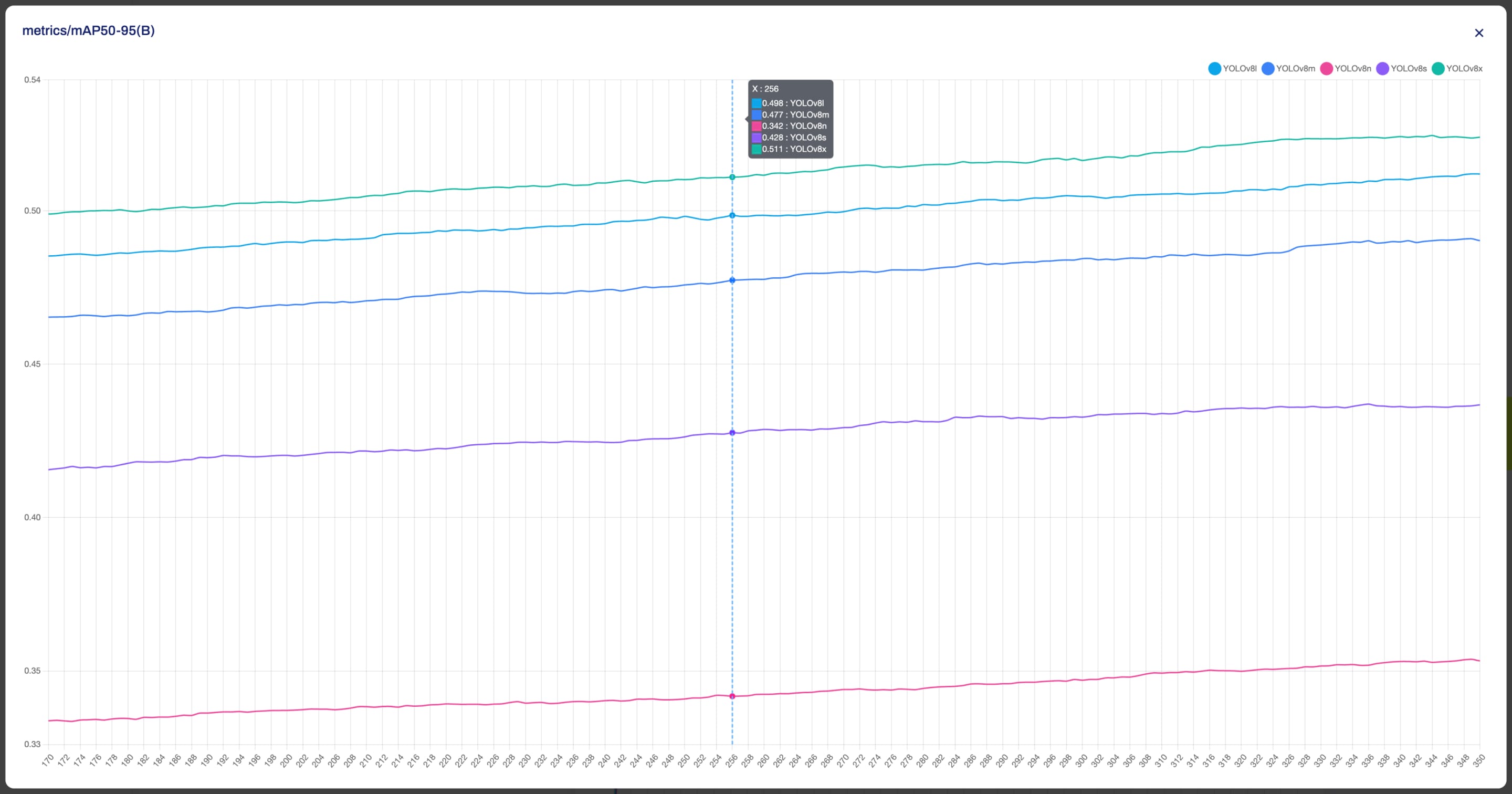

Navigate to the Project page of the project where the models you want to compare are located. To use the model comparison feature, click on the **Charts** tab. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

This will display all the relevant charts. Each chart corresponds to a different metric and contains the performance of each model for that metric. The models are represented by different colors and you can hover over each data point to get more information. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

??? tip "Tip" |

|

|

|

|

|

|

|

|

|

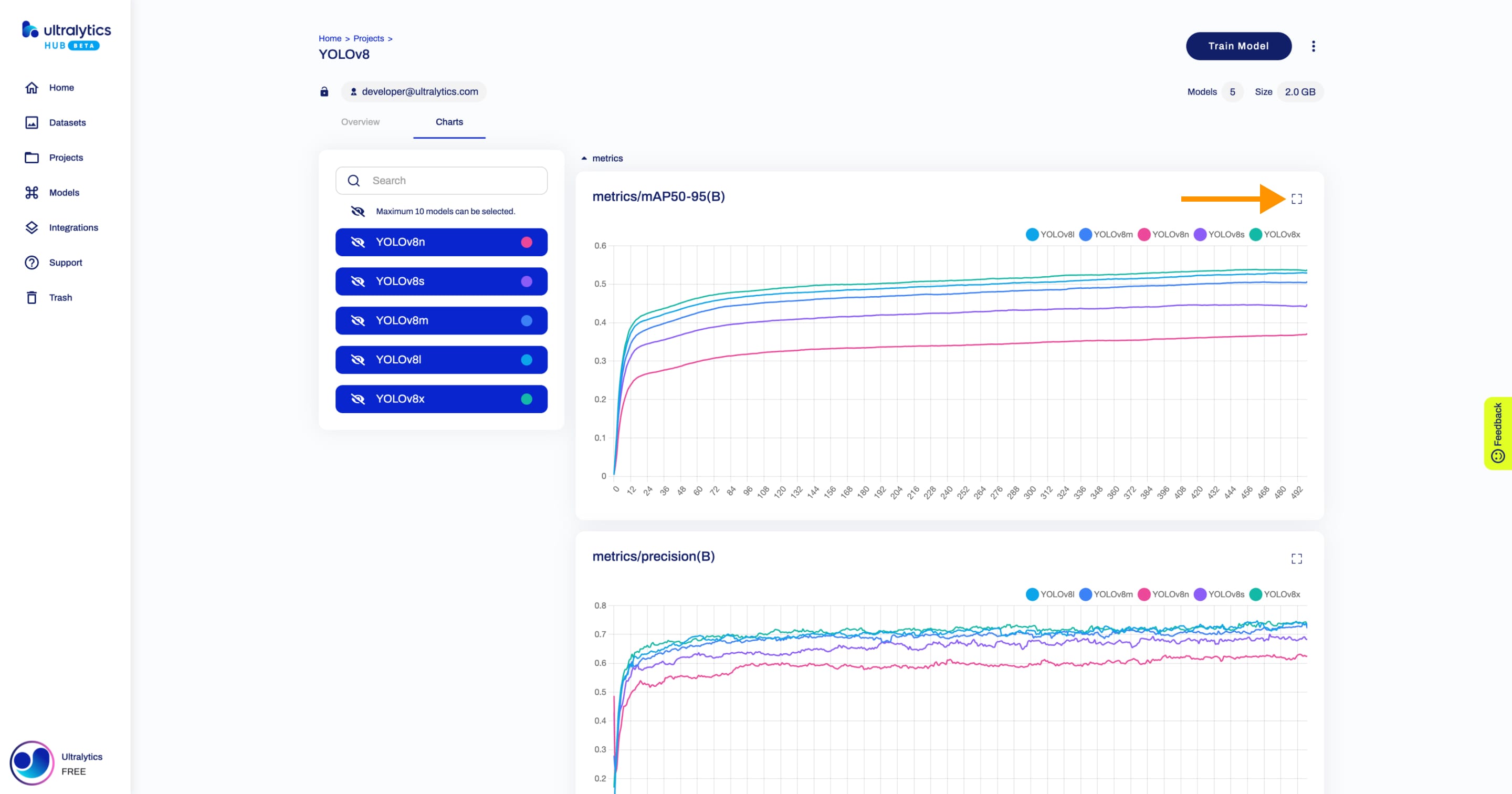

Each chart can be enlarged for better visualization. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

??? tip "Tip"

    You can customize your view by hiding individual models, which lets you concentrate on the models of interest.
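
To make the idea of "one chart per metric, one series per model" concrete, here is a minimal sketch. It does not call Ultralytics HUB: `chart_series`, the metric names, and the model names are hypothetical, and the numbers are placeholders, not real training results.

```python
def chart_series(results, metric, hidden=frozenset()):
    """Return {model_name: values} for one metric, skipping hidden models."""
    return {
        name: metrics[metric]
        for name, metrics in results.items()
        if name not in hidden and metric in metrics
    }


# Placeholder per-epoch values for two hypothetical models.
results = {
    "model-a": {"mAP50": [0.41, 0.55, 0.62], "box_loss": [1.9, 1.4, 1.1]},
    "model-b": {"mAP50": [0.38, 0.57, 0.66], "box_loss": [2.0, 1.3, 1.0]},
}

# One chart per metric; hiding a model removes its series from the chart.
visible = chart_series(results, "mAP50", hidden={"model-a"})

# Pick the model with the highest final value for a metric.
best = max(chart_series(results, "mAP50").items(), key=lambda kv: kv[1][-1])[0]
```

Each call to `chart_series` corresponds to one chart in the **Charts** tab, and the `hidden` set plays the role of the models you have chosen to hide.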


## Reorder Models

??? note "Note"

    Ultralytics HUB's reordering functionality works only inside projects you own.

Navigate to the Project page of the project where the models you want to reorder are located. Click on the designated reorder icon of the model you want to move and drag it to the desired location.
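
Conceptually, dragging a model to a new position moves one item within an ordered list. The sketch below illustrates that operation only; `move_item` and the model names are hypothetical and not part of Ultralytics HUB.

```python
def move_item(items, old_index, new_index):
    """Return a new list with the item at old_index moved to new_index."""
    reordered = list(items)  # copy so the original order is left untouched
    reordered.insert(new_index, reordered.pop(old_index))
    return reordered


models = ["model-a", "model-b", "model-c"]
reordered = move_item(models, 2, 0)  # drag the last model to the top
```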


## Transfer Models |

|

|

|

|

|

|

|

|

|

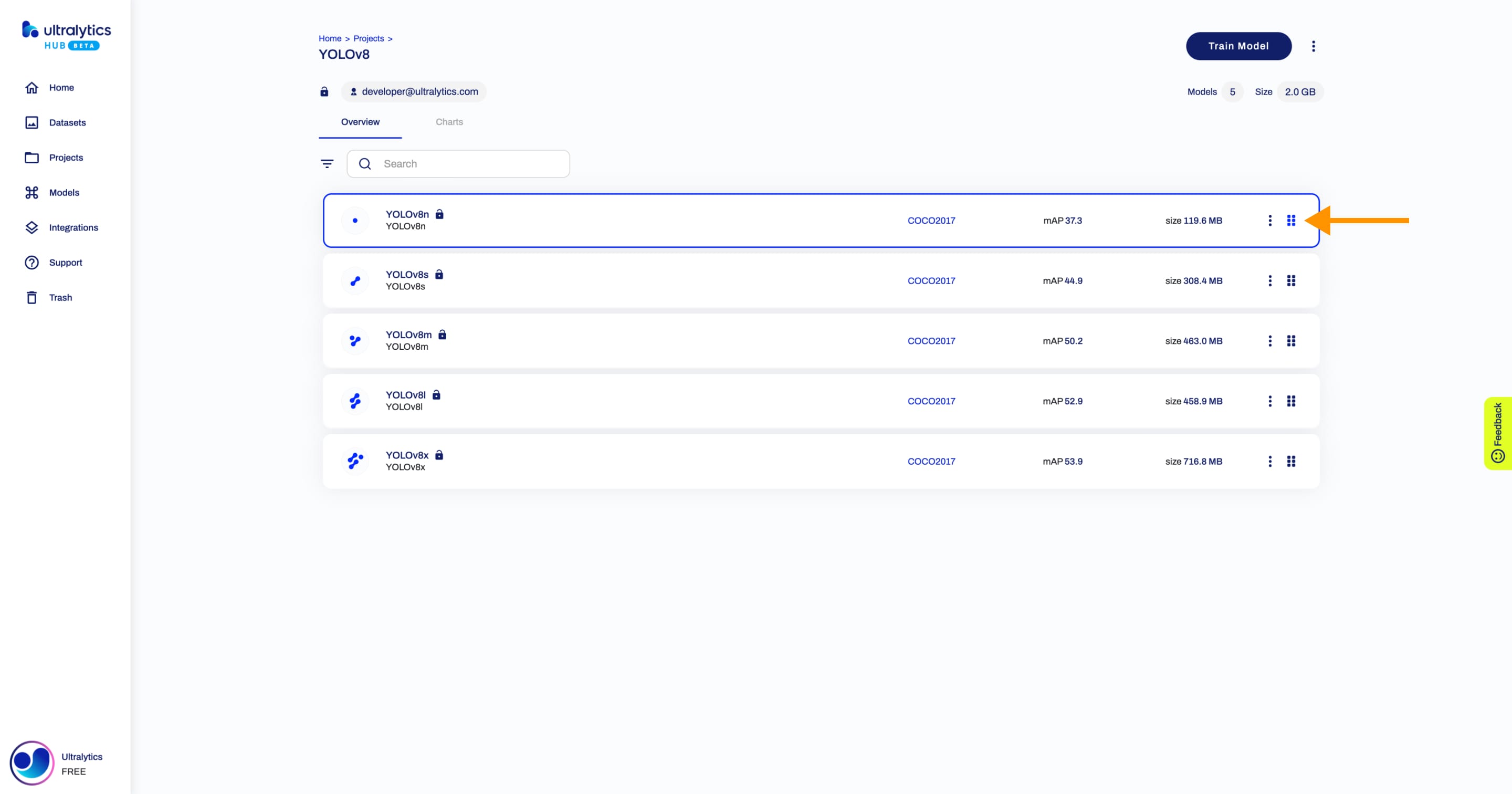

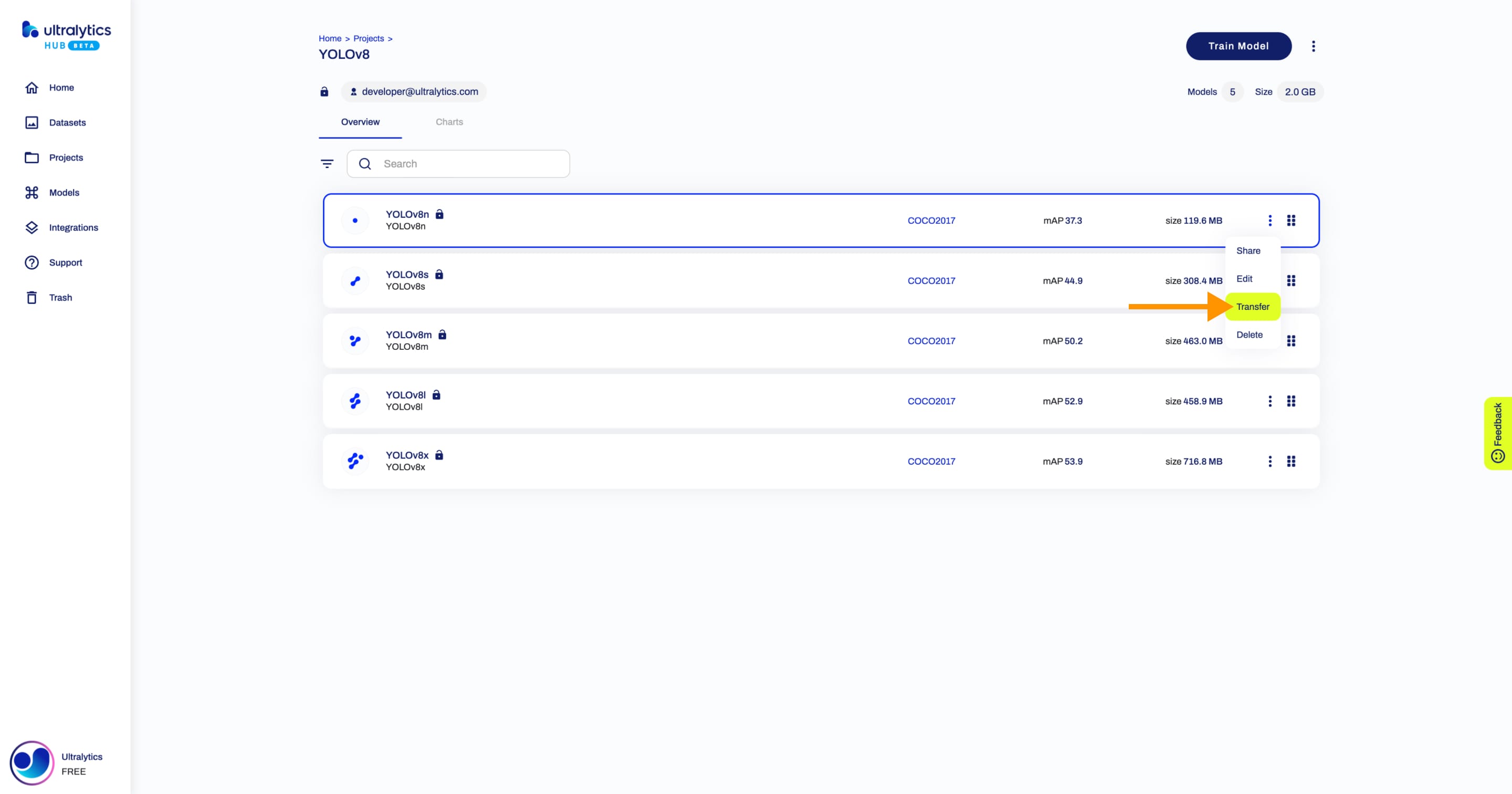

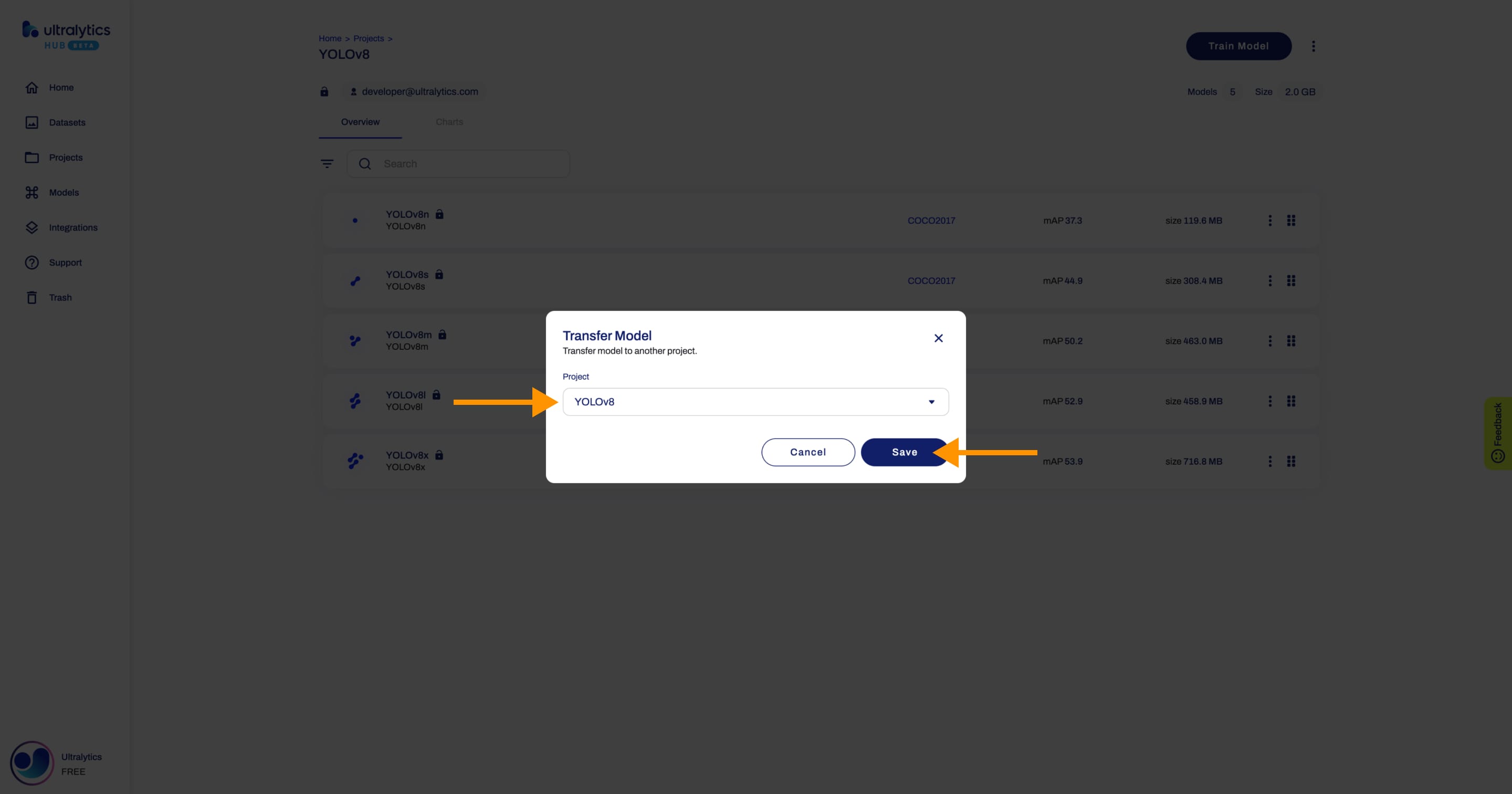

Navigate to the Project page of the project where the model you want to mode is located, open the project actions dropdown and click on the **Transfer** option. This action will trigger the **Transfer Model** dialog. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

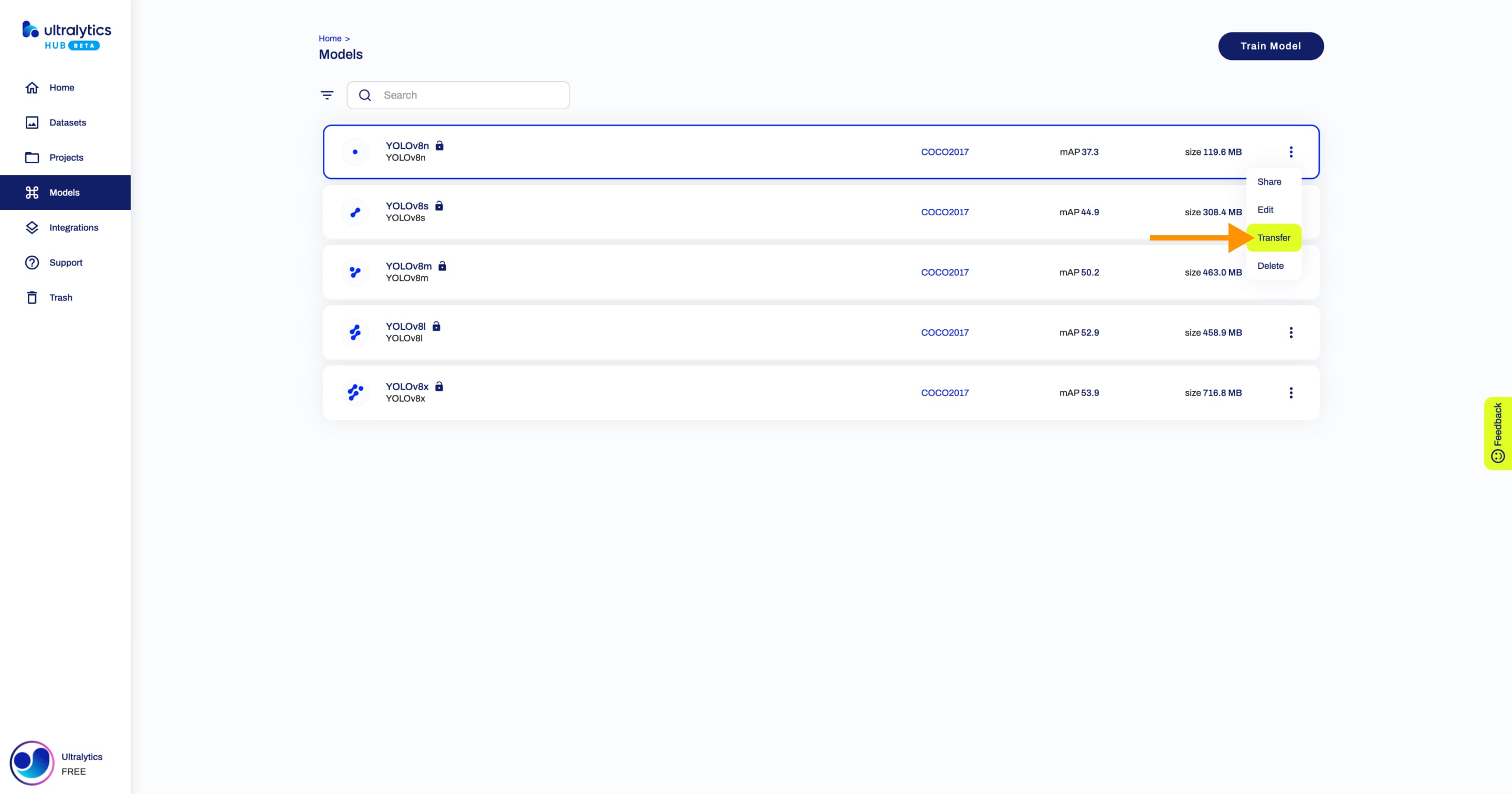

??? tip "Tip"

    You can also transfer a model directly from the [Models](https://hub.ultralytics.com/models) page.

Select the project you want to transfer the model to and click **Save**.
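
The effect of a transfer can be sketched as moving a model from one project's list to another's. This is an illustration only, not the Ultralytics HUB API; `transfer_model` and the project and model names are hypothetical.

```python
def transfer_model(projects, model, source, target):
    """Move `model` from the `source` project to the `target` project."""
    if model not in projects[source]:
        raise ValueError(f"{model!r} is not in project {source!r}")
    projects[source].remove(model)
    projects[target].append(model)


projects = {"detection": ["model-a", "model-b"], "experiments": []}
transfer_model(projects, "model-b", "detection", "experiments")
```

After the call, the model belongs to the target project only, which matches what you see on the Project pages once the transfer is saved.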
