description: Learn how to use Ultralytics HUB for efficient and user-friendly AI model training in the cloud. Follow our detailed guide for easy model creation, training, evaluation, and deployment.

keywords: Ultralytics, HUB Models, AI model training, model creation, model training, model evaluation, model deployment

---

# Cloud Training

[Ultralytics HUB](https://hub.ultralytics.com/) provides a powerful and user-friendly cloud platform to train custom object detection models. Easily select your dataset and the desired training method, then kick off the process with just a few clicks. Ultralytics HUB offers pre-built options and various model architectures to streamline your workflow.

## Selecting an Instance

For details on picking a model and the instances available for it, see our [Instances guide](models.md).

## Steps to Train the Model

Once the instance has been selected, training a model with Ultralytics HUB is a three-step process:

1. Picking a Dataset - Read more about datasets, including how to add and remove them, on the [Dataset page](datasets.md).

2. Picking a Model - Read more about models, including how to create, share, and manage them, on the [HUB Models page](models.md).

3. Training the Model on the Chosen Dataset

Ultralytics HUB offers three training options:

- **Ultralytics Cloud** - Explained on this page.

- **Google Colab** - Train on Google's popular Colab notebooks. Read more about training via Google Colab on the [HUB Models page](models.md).

- **Bring your own agent** - Train models locally on your own hardware or on-premise GPU servers. Read more about training via your own agent on the [HUB Models page](models.md).

To start training your model, follow the steps shown in the screenshots below.

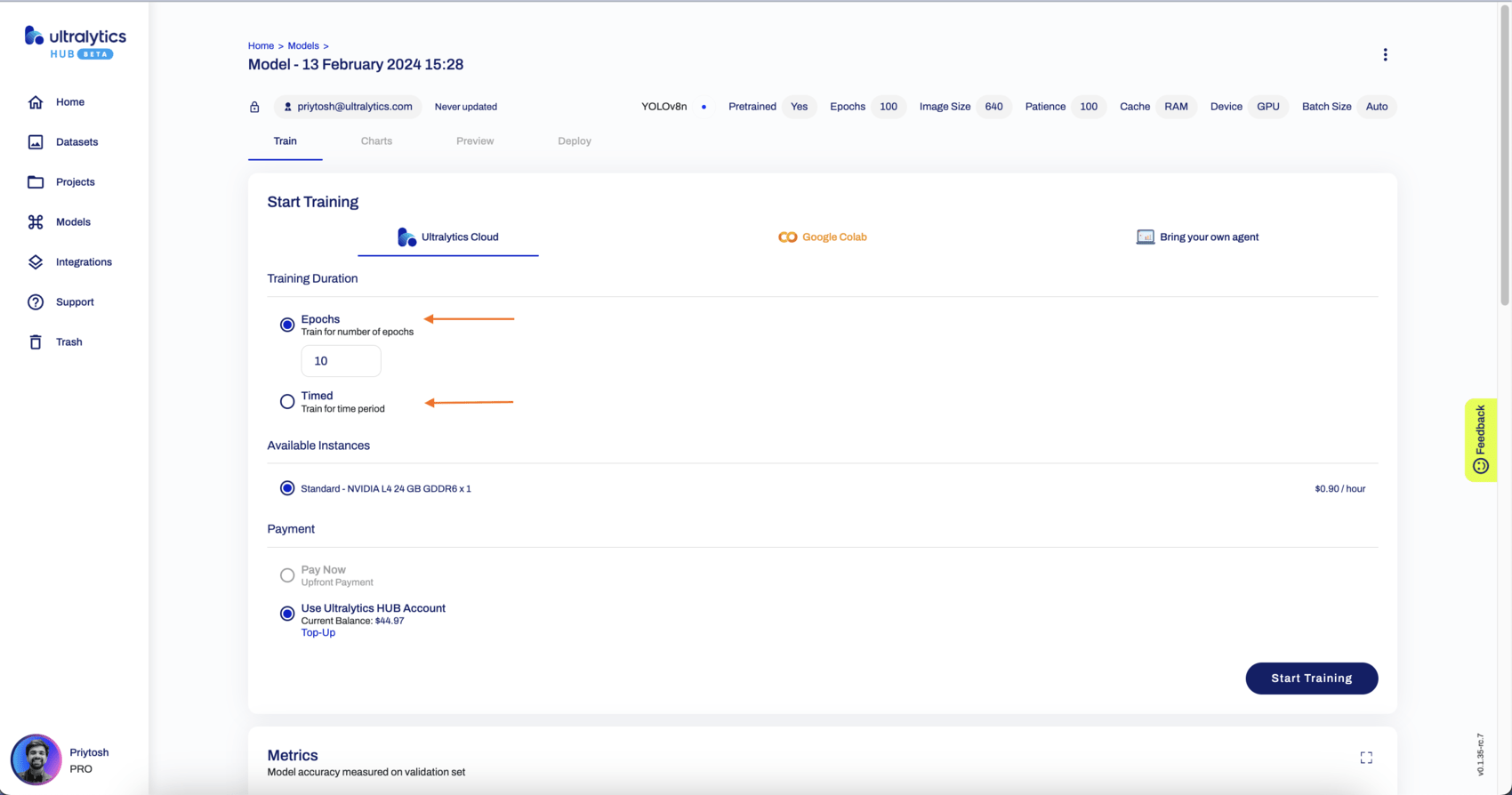

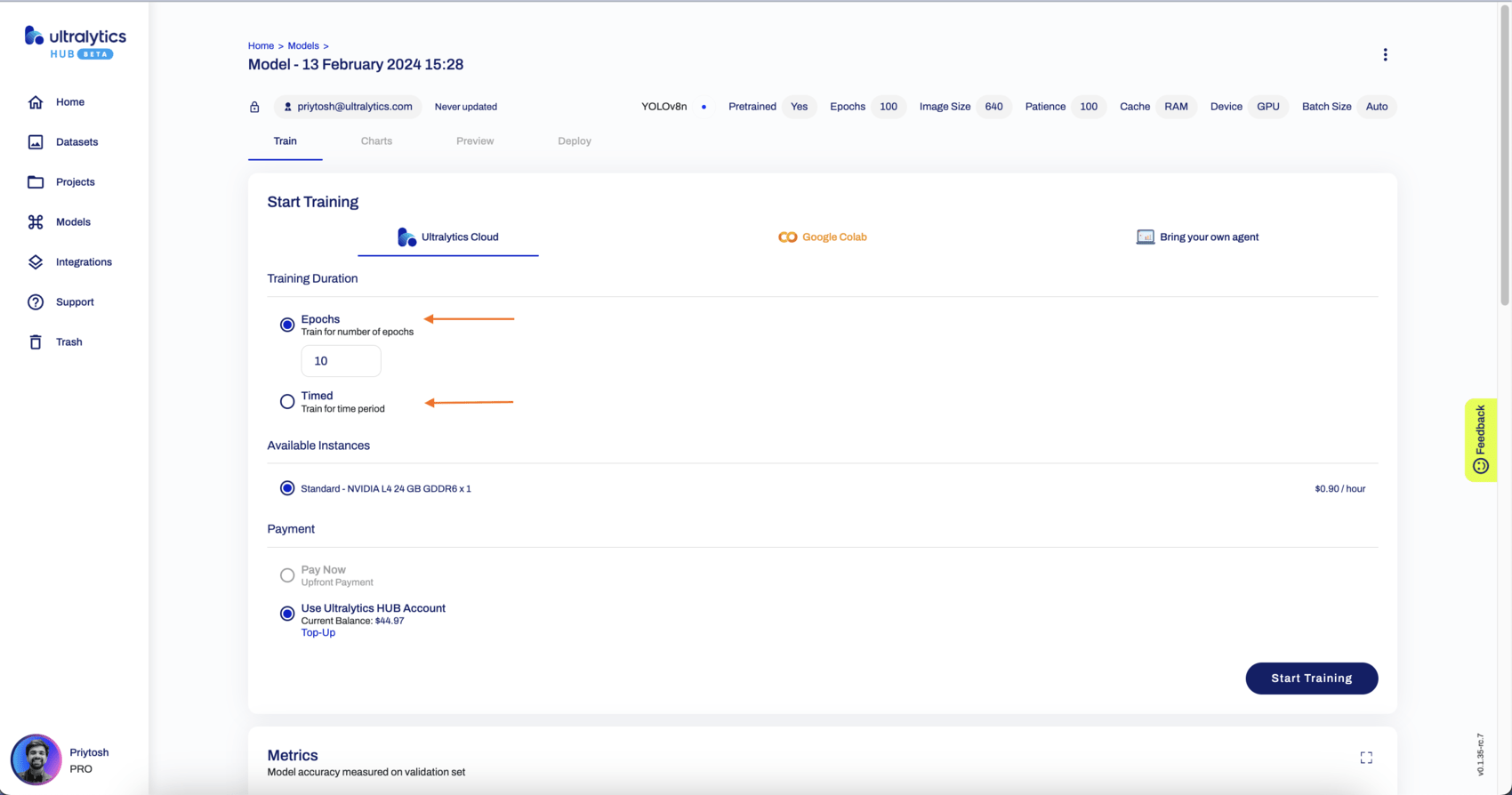

## Training via Ultralytics Cloud

To start training your model using Ultralytics Cloud, simply select the Training Duration, Available Instances, and Payment options.

**Training Duration** - Ultralytics offers two kinds of training durations:

1. Training based on `Epochs`: This option trains your model for a set number of epochs, where one epoch is one complete pass over the training dataset. The exact cost of a given number of epochs is hard to determine in advance. If your credit runs out before the intended number of epochs is reached, training pauses and you are prompted to top up and resume training.

2. Timed Training: This option lets you fix the duration of the entire training process, and the estimated cost is shown before training starts.
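The minimal Python sketch below shows why one option can be priced up front while the other can only be estimated. The instance name, hourly price, and per-epoch duration are hypothetical values chosen to make the arithmetic clear. They are not Ultralytics HUB prices, and HUB's actual billing logic may differ.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    """A cloud training instance with an assumed hourly price (illustrative only)."""

    name: str
    usd_per_hour: float


def timed_training_cost(instance: Instance, hours: float) -> float:
    """Timed training: the duration is fixed, so the cost is known before training starts."""
    return round(instance.usd_per_hour * hours, 2)


def epoch_training_estimate(instance: Instance, epochs: int, seconds_per_epoch: float) -> float:
    """Epoch-based training: only an estimate, because seconds_per_epoch depends on
    dataset size, image size, architecture, and hardware."""
    hours = epochs * seconds_per_epoch / 3600
    return round(instance.usd_per_hour * hours, 2)


def epochs_covered(instance: Instance, credit_usd: float, seconds_per_epoch: float) -> int:
    """Whole epochs that a credit balance pays for; beyond this, training would pause."""
    return int((credit_usd * 3600) // (instance.usd_per_hour * seconds_per_epoch))


gpu = Instance("example-gpu", usd_per_hour=2.0)  # hypothetical instance and price
print(timed_training_cost(gpu, hours=3))  # 6.0
print(epoch_training_estimate(gpu, epochs=100, seconds_per_epoch=72))  # 4.0
print(epochs_covered(gpu, credit_usd=3.0, seconds_per_epoch=72))  # 75
```

The unknown here is `seconds_per_epoch`. Timed training removes it by fixing the duration instead of the number of passes over the data.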

When the training starts, you can click **Done** and monitor the training progress on the Model page.

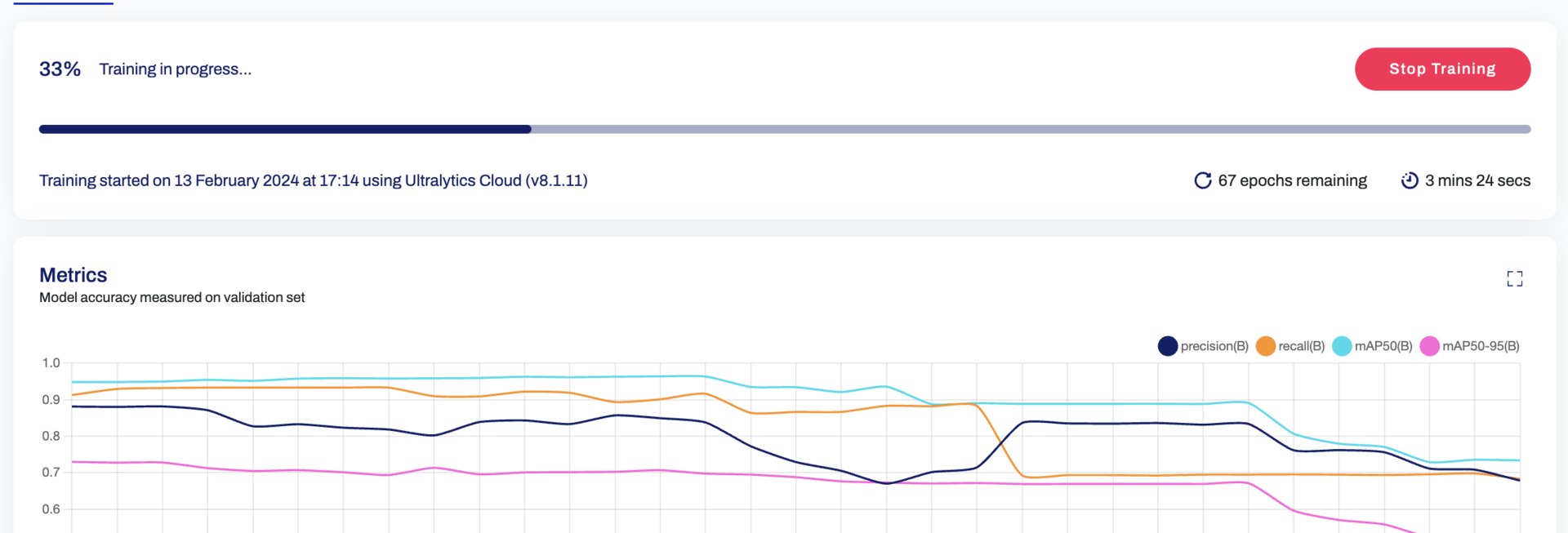

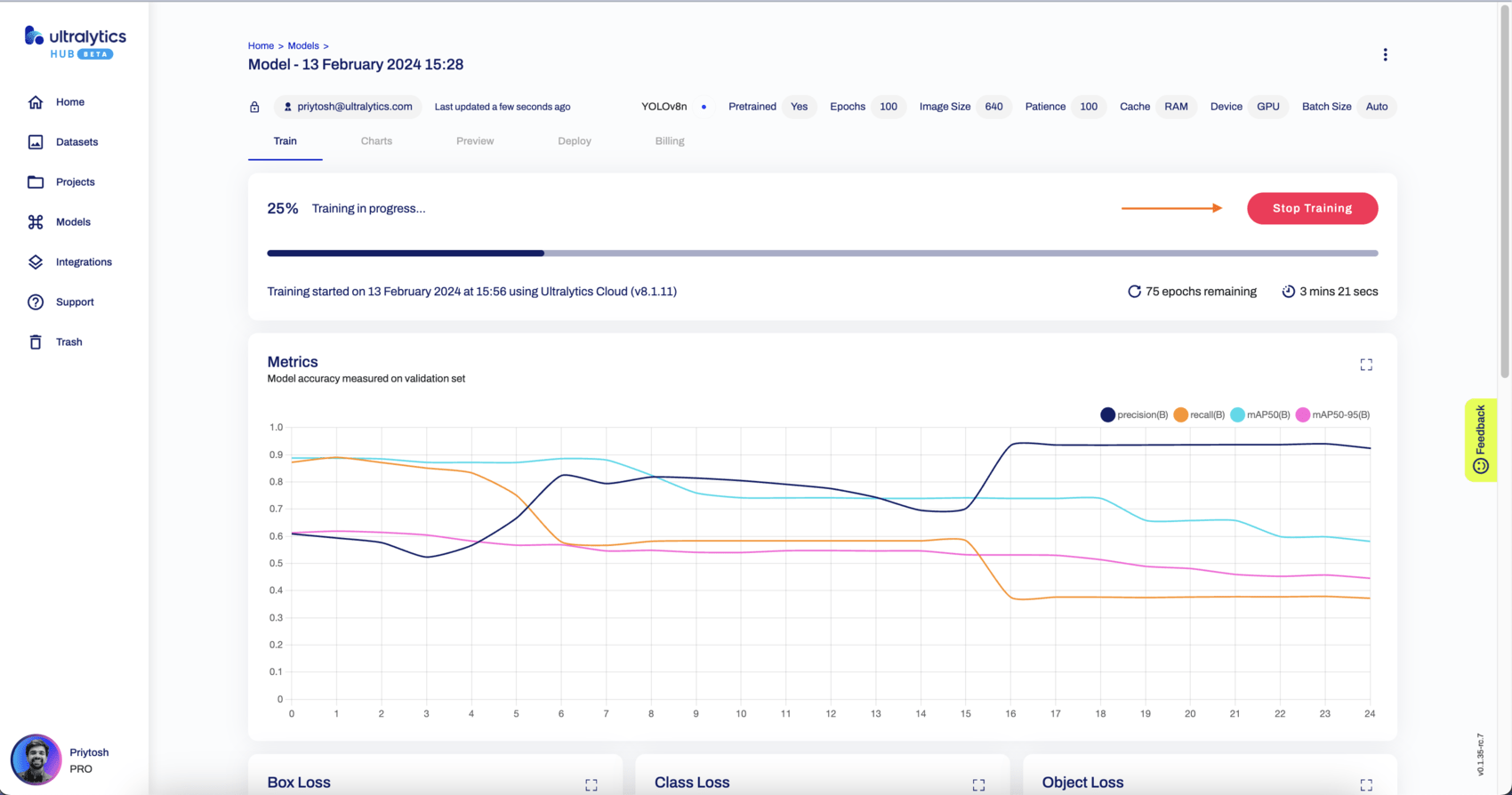

## Monitor Your Training

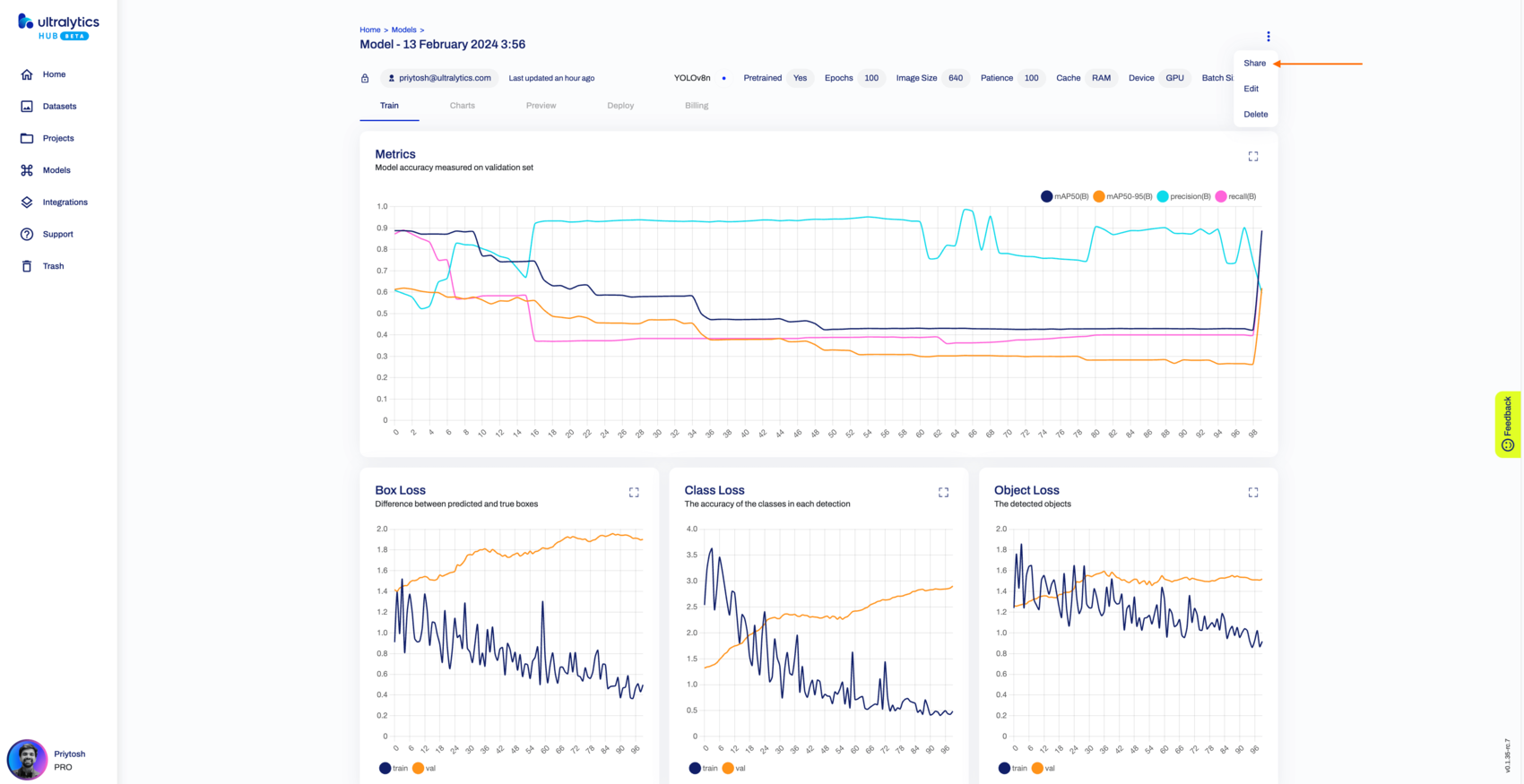

Once you have selected the model and training mode, you can monitor training progress in the `Train` section. Use either the link printed in the terminal (when training on your own agent or in Google Colab) or the button in Ultralytics Cloud.

## Stopping and Resuming Your Training

Once the training has started, you can `Stop` the training, which will also correspondingly pause the credit usage. You can then `Resume` the training from the point where it stopped.
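As a rough mental model, stopping and resuming works like a meter that only runs while training is active. The `CreditMeter` class below is a hypothetical sketch with an assumed per-second rate. It is not the HUB's billing implementation.

```python
from typing import Optional


class CreditMeter:
    """Hypothetical meter that bills only while training is running."""

    def __init__(self, usd_per_second: float):
        self.usd_per_second = usd_per_second  # assumed rate, for illustration
        self.billed_seconds = 0.0
        self._running_since: Optional[float] = None  # None means training is stopped

    def resume(self, now: float) -> None:
        """Start or resume training at timestamp `now` (in seconds)."""
        if self._running_since is None:
            self._running_since = now

    def stop(self, now: float) -> None:
        """Stop training and add the elapsed running time to the bill."""
        if self._running_since is not None:
            self.billed_seconds += now - self._running_since
            self._running_since = None

    @property
    def charged_usd(self) -> float:
        return self.billed_seconds * self.usd_per_second


meter = CreditMeter(usd_per_second=0.001)
meter.resume(now=0)      # training starts
meter.stop(now=600)      # stopped after 10 minutes
meter.resume(now=1800)   # the 20-minute pause is not billed
meter.stop(now=2400)
print(meter.billed_seconds)  # 1200.0
```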

## Payments and Billing Options

Ultralytics HUB lets you pay upfront with `Pay Now` and/or use your `Ultralytics HUB Account` as a wallet that you top up to cover billing. You can choose between two account types: `Free` and `Pro`.

To access your profile, click your profile picture in the bottom-left corner.

!!! tip "Tip"

    Each image can be enlarged for better visualization.

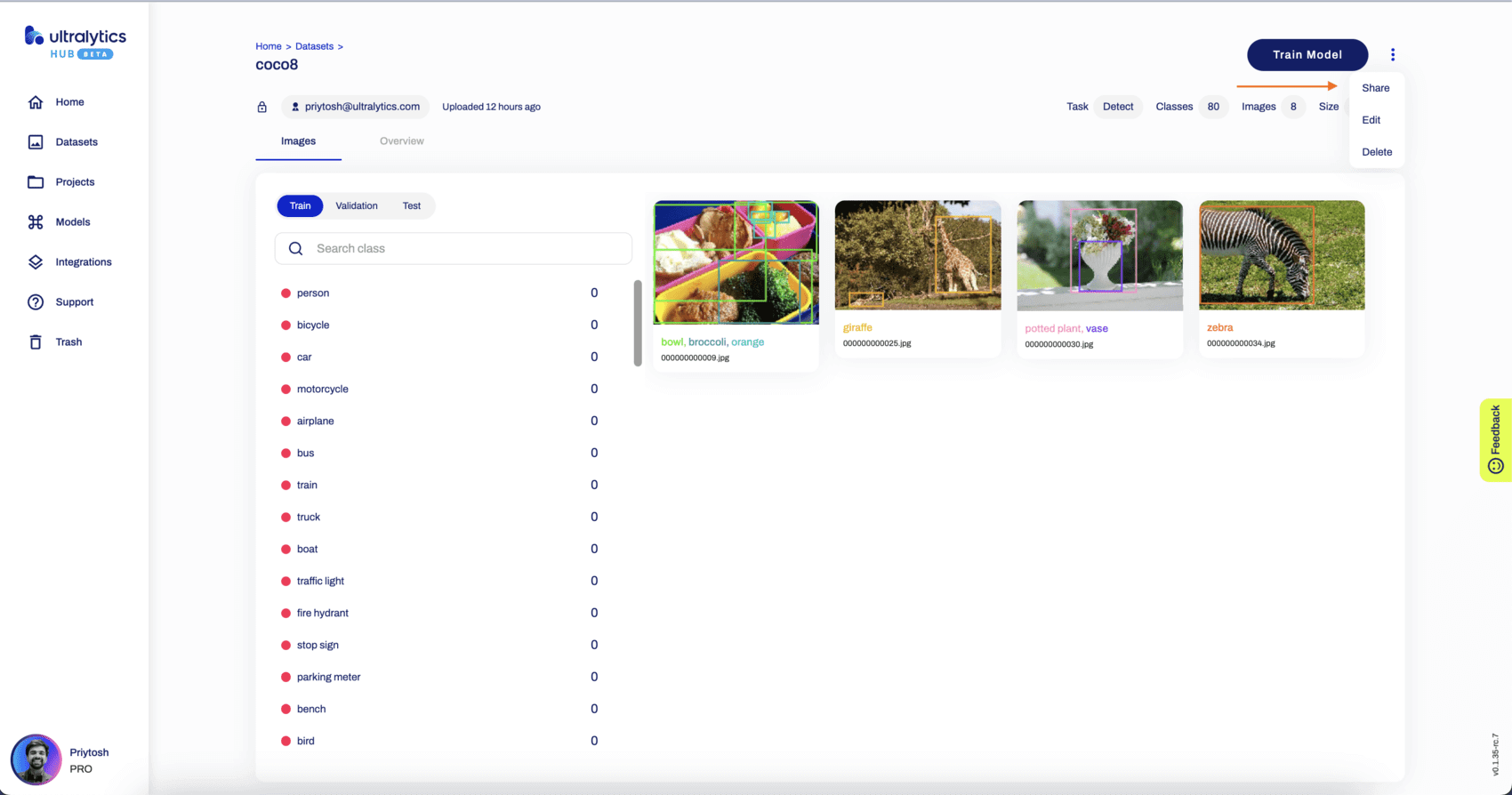

Ultralytics HUB's sharing functionality provides a convenient way to share datasets with others. This feature is designed to accommodate both existing Ultralytics HUB users and those who have yet to create an account.

!!! note "Note"

    You have control over the general access of your datasets.

!!! tip "Tip"

    You can also share a dataset directly from the [Datasets](https://hub.ultralytics.com/datasets) page.

Now, anyone who has the direct link to your dataset can view it.

!!! tip "Tip"

    Click the dataset's link shown in the **Share Dataset** dialog to copy it.

Navigate to the Dataset page of the dataset you want to delete, open the dataset actions dropdown and click on the **Delete** option. This action will delete the dataset.

!!! note "Note"

    If you change your mind, you can restore the dataset from the [Trash](https://hub.ultralytics.com/trash) page.

description: Learn how to efficiently train AI models using Ultralytics HUB, a streamlined solution for model creation, training, evaluation, and deployment.

keywords: Ultralytics, HUB Models, AI model training, model creation, model training, model evaluation, model deployment

---

# Ultralytics HUB Models

[Ultralytics HUB](https://hub.ultralytics.com/) models provide a streamlined solution for training vision AI models on custom datasets.

The process is user-friendly and efficient. Model creation takes three simple steps, and training is accelerated by Ultralytics YOLOv8. Real-time updates on model metrics are available during training, so you can monitor progress at each step. Once training is complete, you can preview your model and easily deploy it to real-world applications. Ultralytics HUB therefore offers a comprehensive yet straightforward system for model creation, training, evaluation, and deployment.

The entire process of training a model is detailed on our [Cloud Training Page](cloud-training.md).

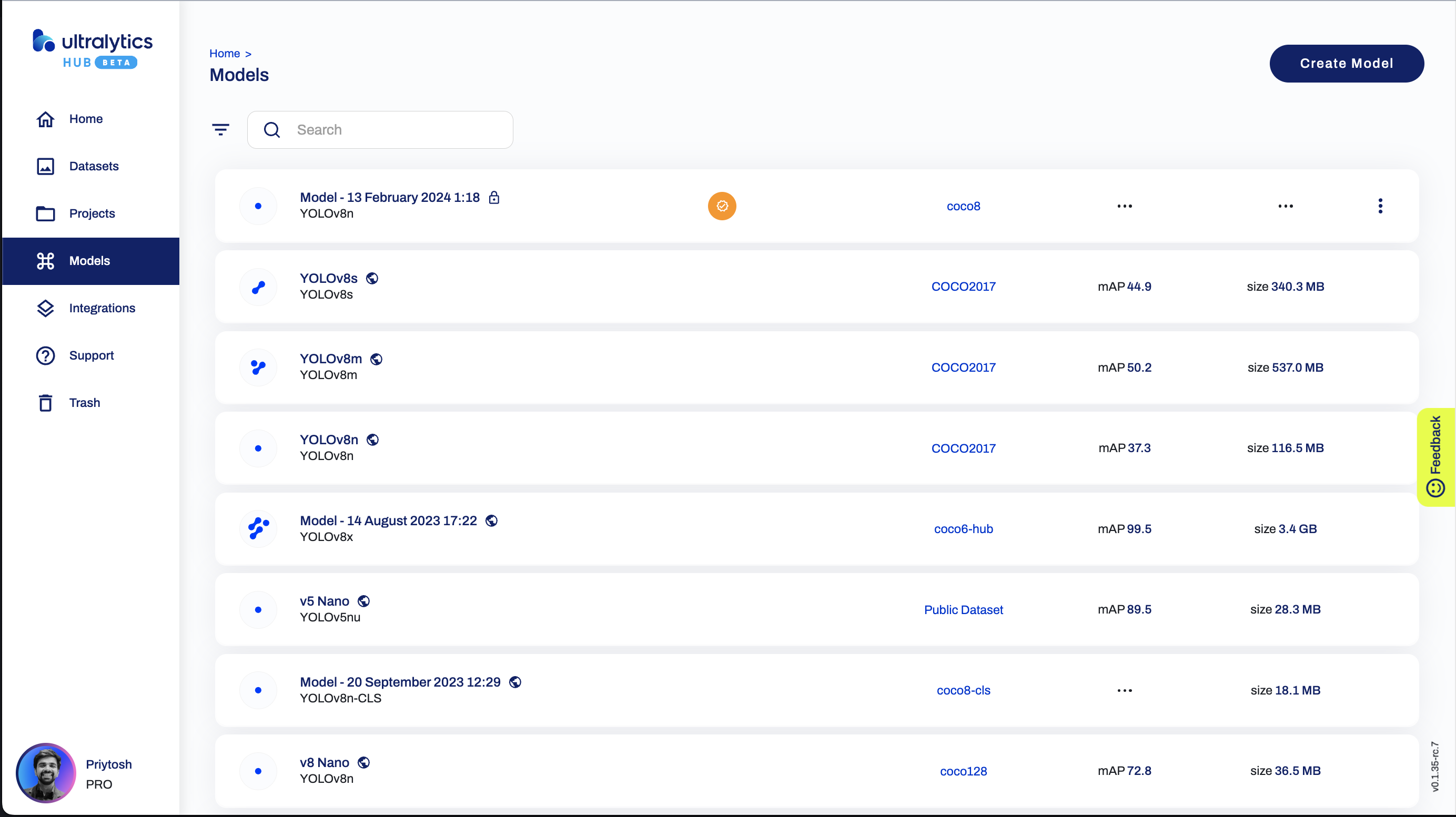

## Train Model

Navigate to the [Models](https://hub.ultralytics.com/models) page by clicking on the **Models** button in the sidebar.

Training a model using HUB is a four-step process:

- **Execute the prerequisites script**: Run the provided script to prepare the virtual environment.

- **Provide the API key and start training**: Once the environment is prepared, copy the code block containing your API key as instructed and execute it.

- **Check the results and metrics**: After successful execution, a link to the Metrics page is shown. This page offers comprehensive details on the trained model, including its specifications, loss metrics (box, class, and object loss), dataset information, and image distributions. The **Deploy** tab also provides access to the trained model's documentation and license details.

- **Test your model**: Ultralytics HUB lets you test the model with custom images, your device's camera, or links for testing on `iPhone` or `Android` devices.

!!! tip "Tip"

    You can also train a model directly from the [Home](https://hub.ultralytics.com/home) page.

Click on the **Train Model** button on the top right of the page to trigger the **Train Model** dialog.

The **Train Model** dialog has three simple steps:

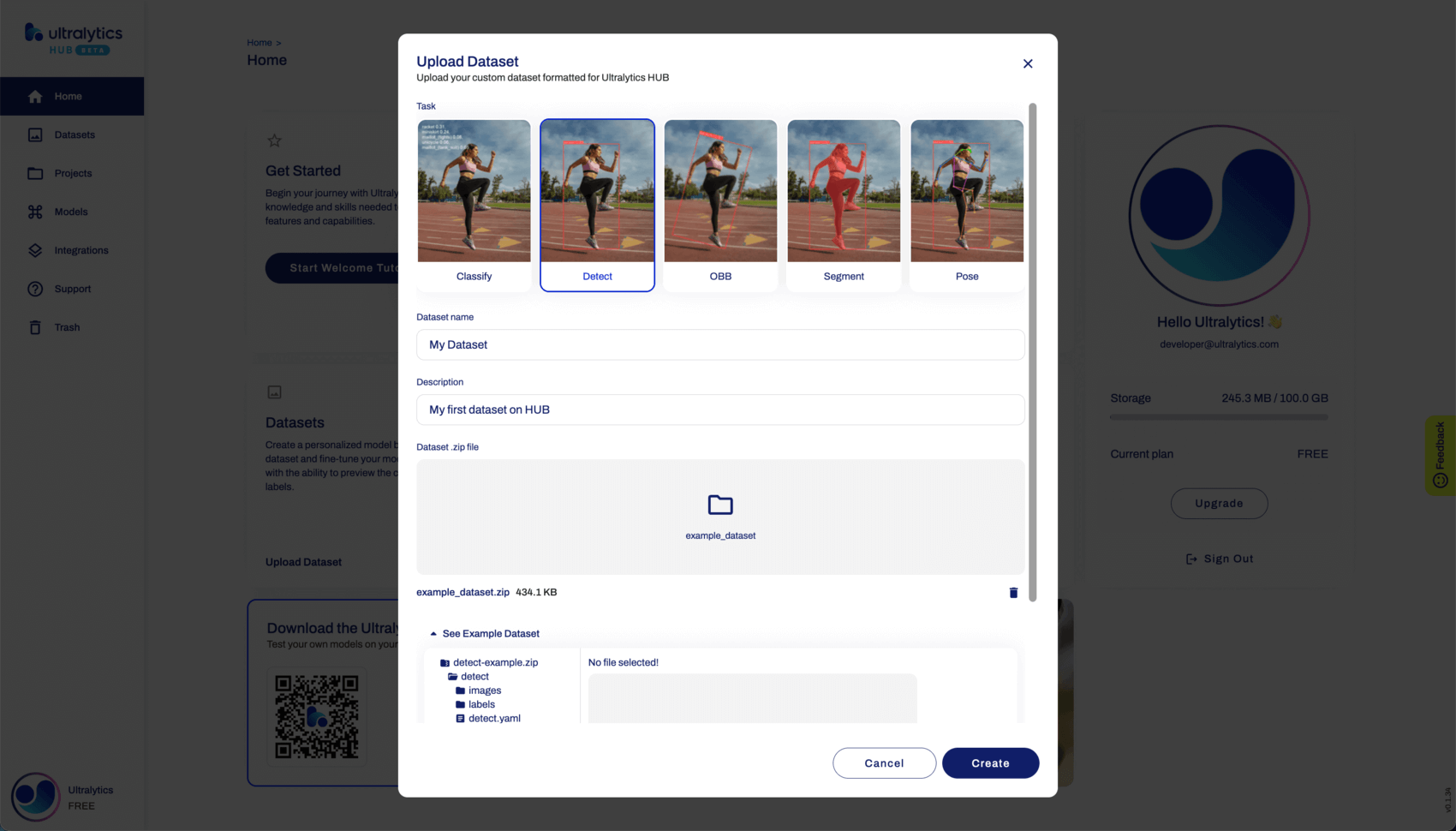

### 1. Dataset

Select the dataset for training and click **Continue**.

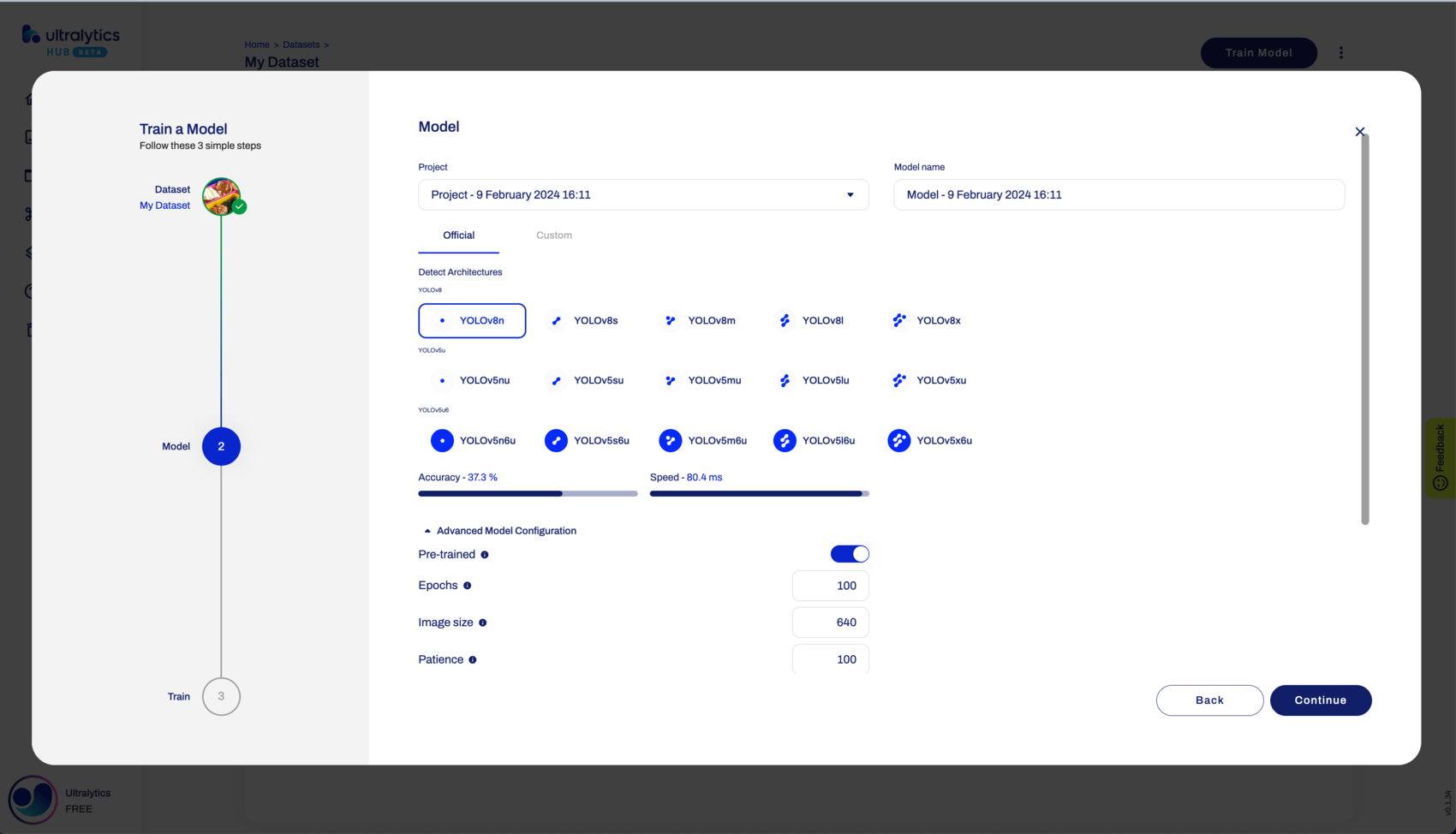

### 2. Model

Choose the project in which to create your model, the model's name, and its architecture. Read more about the available architectures in our [YOLOv8](https://docs.ultralytics.com/models/yolov8) (and [YOLOv5](https://docs.ultralytics.com/models/yolov5)) documentation.

Click **Continue** when you are satisfied with the configuration.

!!! note "Note"

    By default, your model will use a pre-trained model (trained on the [COCO](https://docs.ultralytics.com/datasets/detect/coco) dataset) to reduce training time. You can change this behavior by opening the **Advanced Options** accordion.

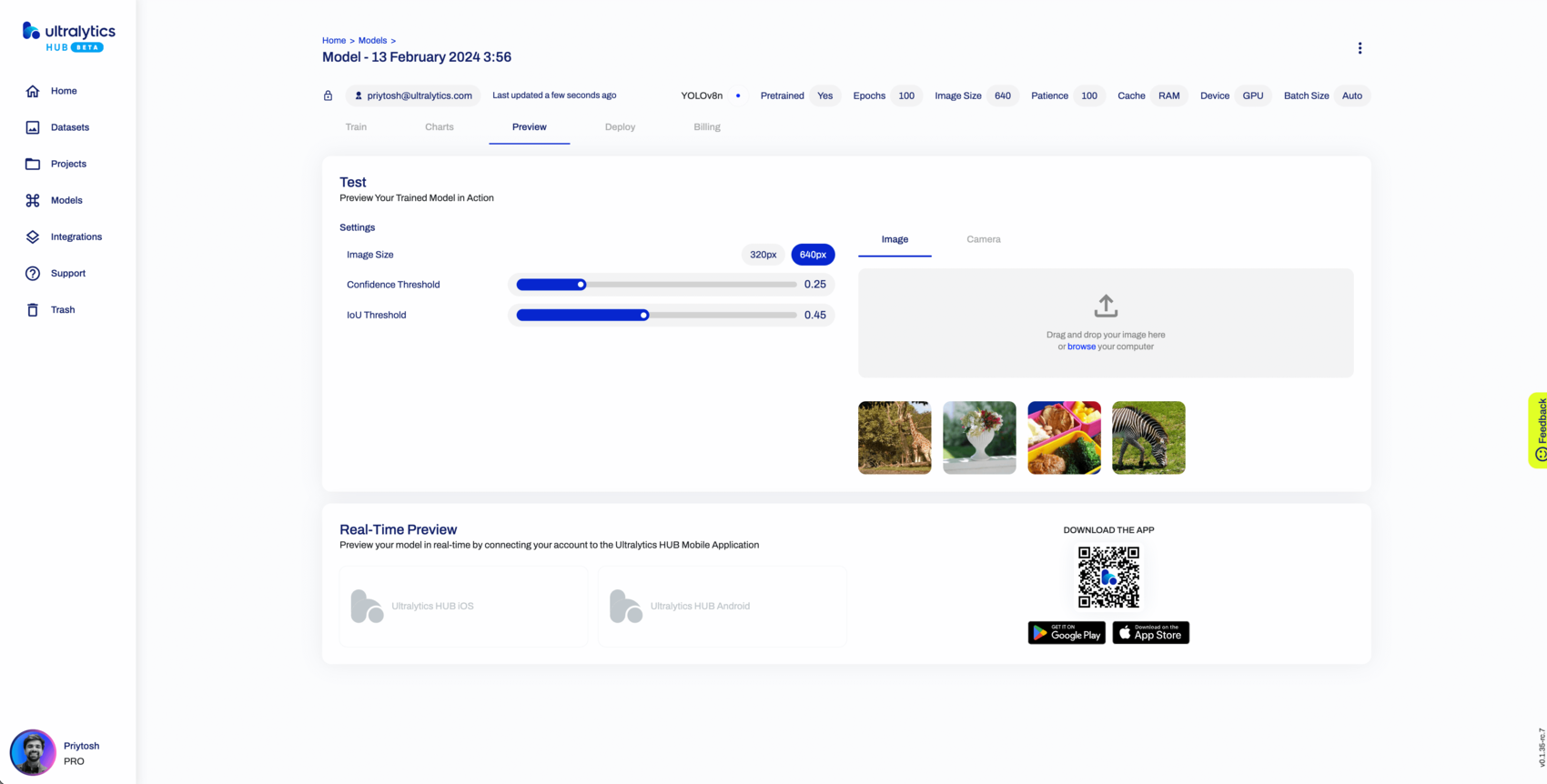

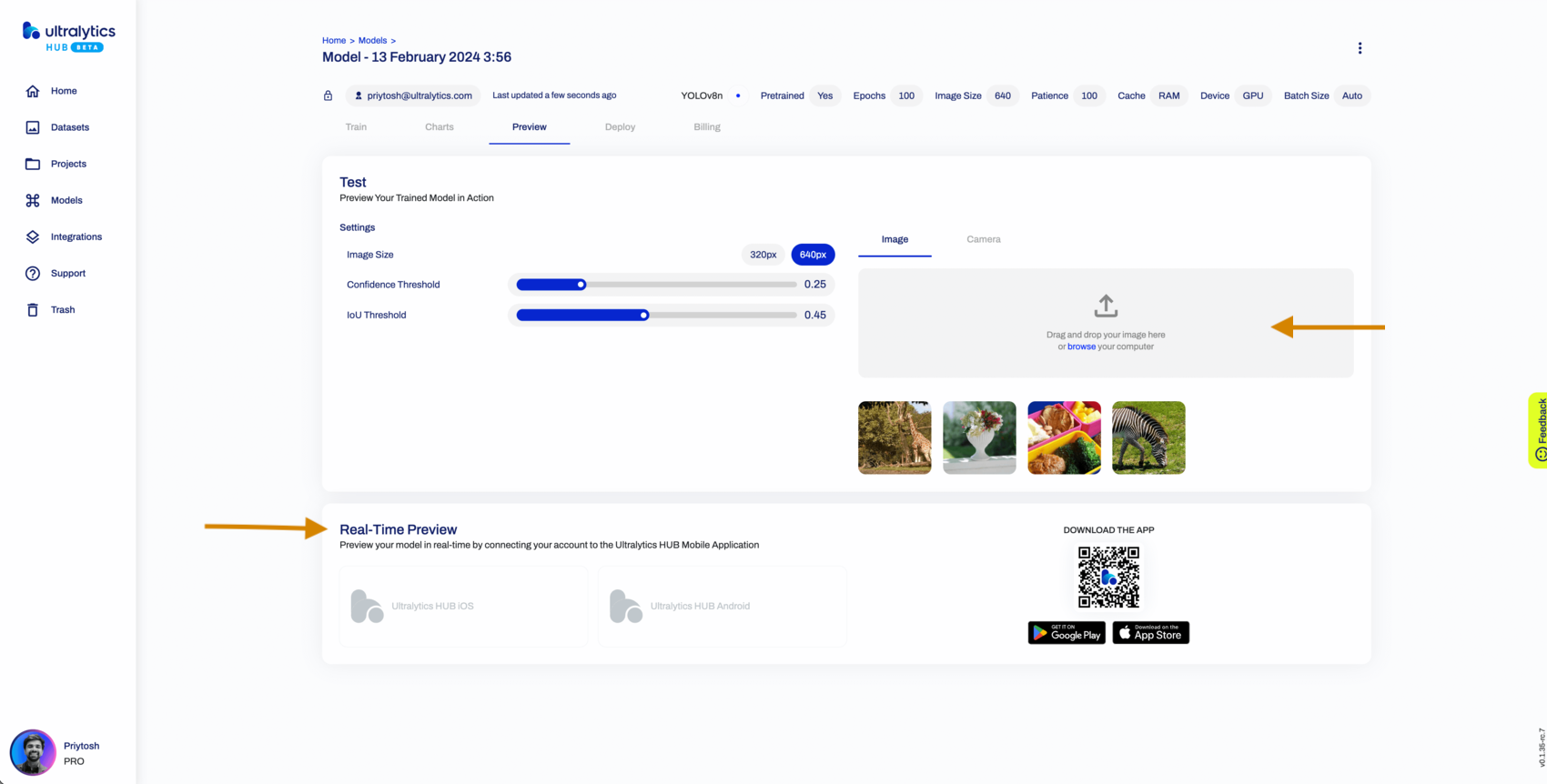

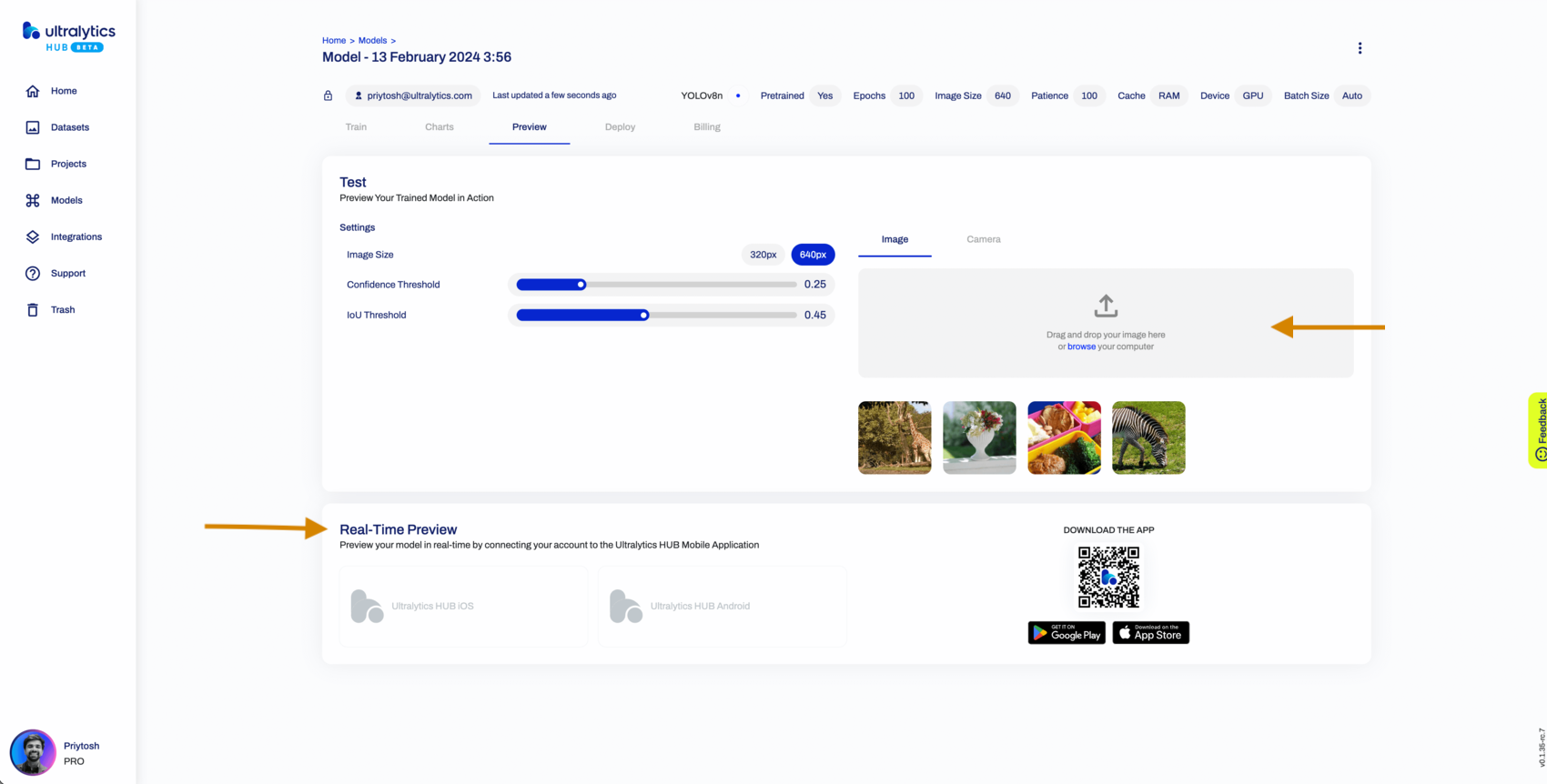

## Preview Model

Ultralytics HUB offers various ways to preview trained models.

You can upload an image in the **Test** card under the **Preview** tab to preview your model.

Use our Ultralytics Cloud API to effortlessly [run inference](inference-api.md) with your custom model.

Preview your model in real-time on your [iOS](https://apps.apple.com/xk/app/ultralytics/id1583935240) or [Android](https://play.google.com/store/apps/details?id=com.ultralytics.ultralytics_app) device by [downloading](https://ultralytics.com/app_install) our [Ultralytics HUB Mobile Application](app/index.md).

## Train the model

Ultralytics HUB offers three training options:

- **Ultralytics Cloud** - Learn more on the [Cloud Training Page](cloud-training.md).

- **Google Colab**

- **Bring your own agent**

## Training the Model on Google Colab

To start training using Google Colab, follow the instructions on the Google Colab notebook.

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab">

## Bring your own Agent

You can create an API endpoint through Ultralytics HUB and use your own agent to train the model locally. Follow the provided steps, then open the link printed in the agent's terminal to see the training details. The link leads to the model's metrics and deployment page, where you can learn more about the model and deploy or share it.

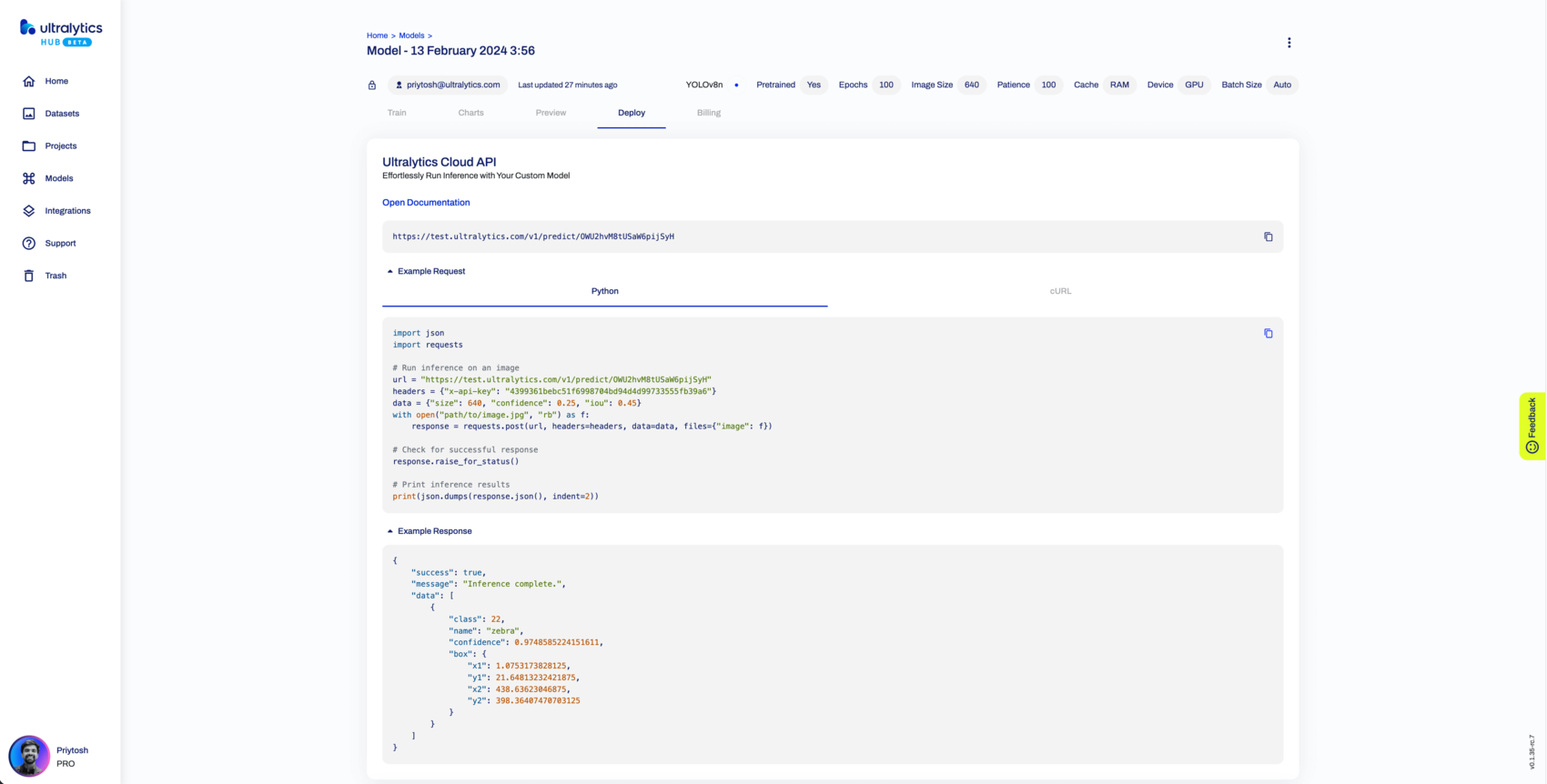

## Deploy Model

Export your model to 13 different formats, including ONNX, OpenVINO, CoreML, TensorFlow, Paddle, and more.

## Share Model

!!! Info "Info"

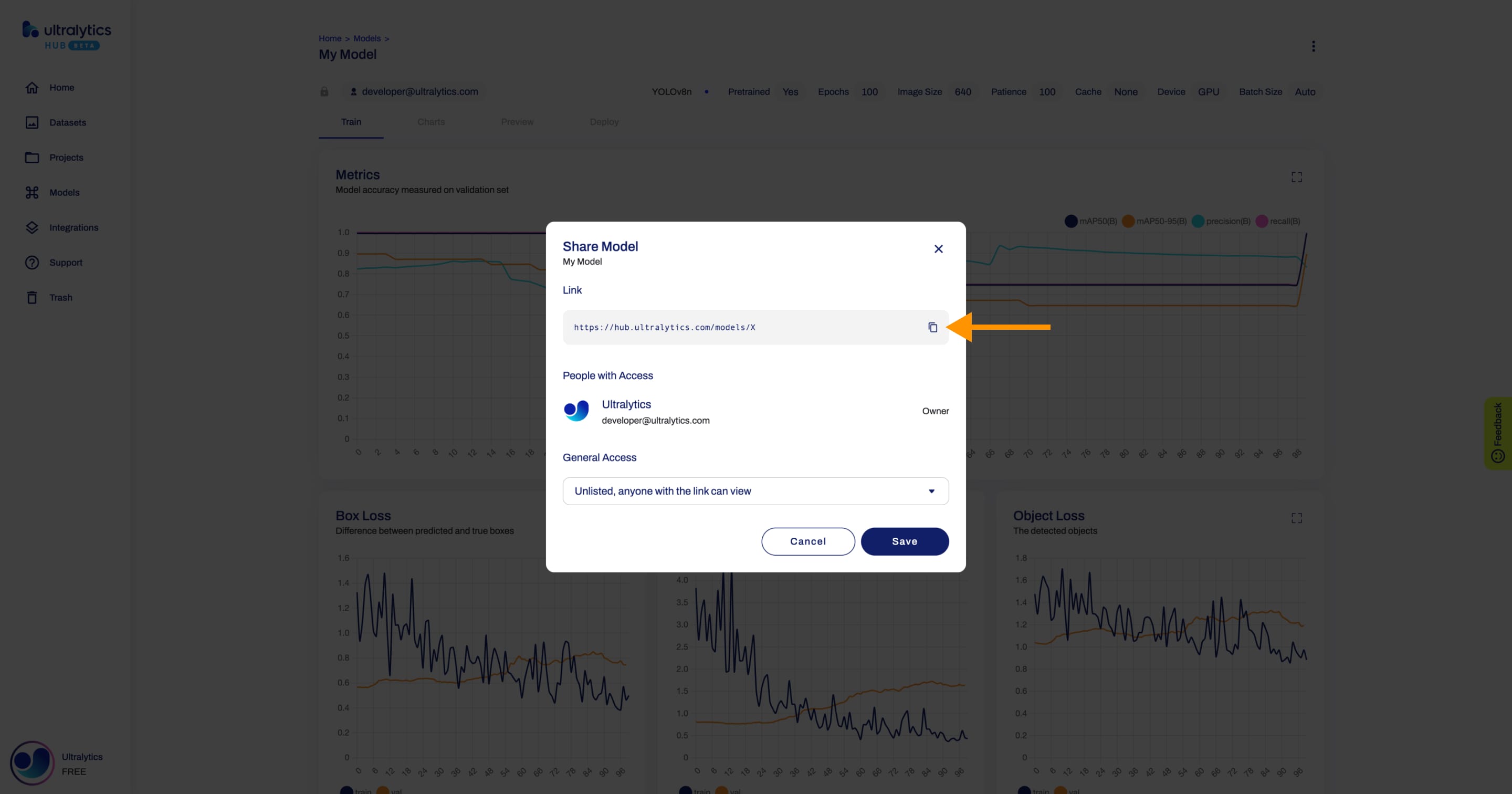

Ultralytics HUB's sharing functionality provides a convenient way to share models with others. This feature is designed to accommodate both existing Ultralytics HUB users and those who have yet to create an account.

Ultralytics HUB's sharing functionality provides a convenient way to share models. Control the general access of your models, setting them to "Private" or "Unlisted".

??? note "Note"

You have control over the general access of your models.

You can choose to set the general access to "Private", in which case, only you will have access to it. Alternatively, you can set the general access to "Unlisted" which grants viewing access to anyone who has the direct link to the model, regardless of whether they have an Ultralytics HUB account or not.

Navigate to the Model page of the model you want to share, open the model actions dropdown, and click the **Share** option. This opens the **Share Model** dialog.

Set the general access to "Unlisted" and click **Save**.

Now, anyone with the direct link can view your model.

!!! tip "Tip"

    Click the model's link shown in the **Share Model** dialog to copy it.
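The two general-access settings reduce to a simple visibility rule. The sketch below expresses that rule in Python. `Access`, `can_view`, and its parameters are hypothetical names for illustration and are not part of any Ultralytics API.

```python
from enum import Enum
from typing import Optional


class Access(Enum):
    PRIVATE = "Private"
    UNLISTED = "Unlisted"


def can_view(access: Access, owner: str, viewer: Optional[str], has_direct_link: bool) -> bool:
    """Return True if `viewer` may see a shared model or dataset.

    `viewer` is None for a visitor without an Ultralytics HUB account.
    """
    if viewer is not None and viewer == owner:
        return True  # the owner always has access
    if access is Access.UNLISTED:
        return has_direct_link  # only the link matters, not an account
    return False  # Private: nobody except the owner


print(can_view(Access.PRIVATE, "alice", "bob", has_direct_link=True))  # False
print(can_view(Access.UNLISTED, "alice", None, has_direct_link=True))  # True
```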

## Edit and Delete Model

Navigate to the Model page, open the model actions dropdown, and click the **Edit** option to open the **Update Model** dialog. To delete the model, click the **Delete** option instead.

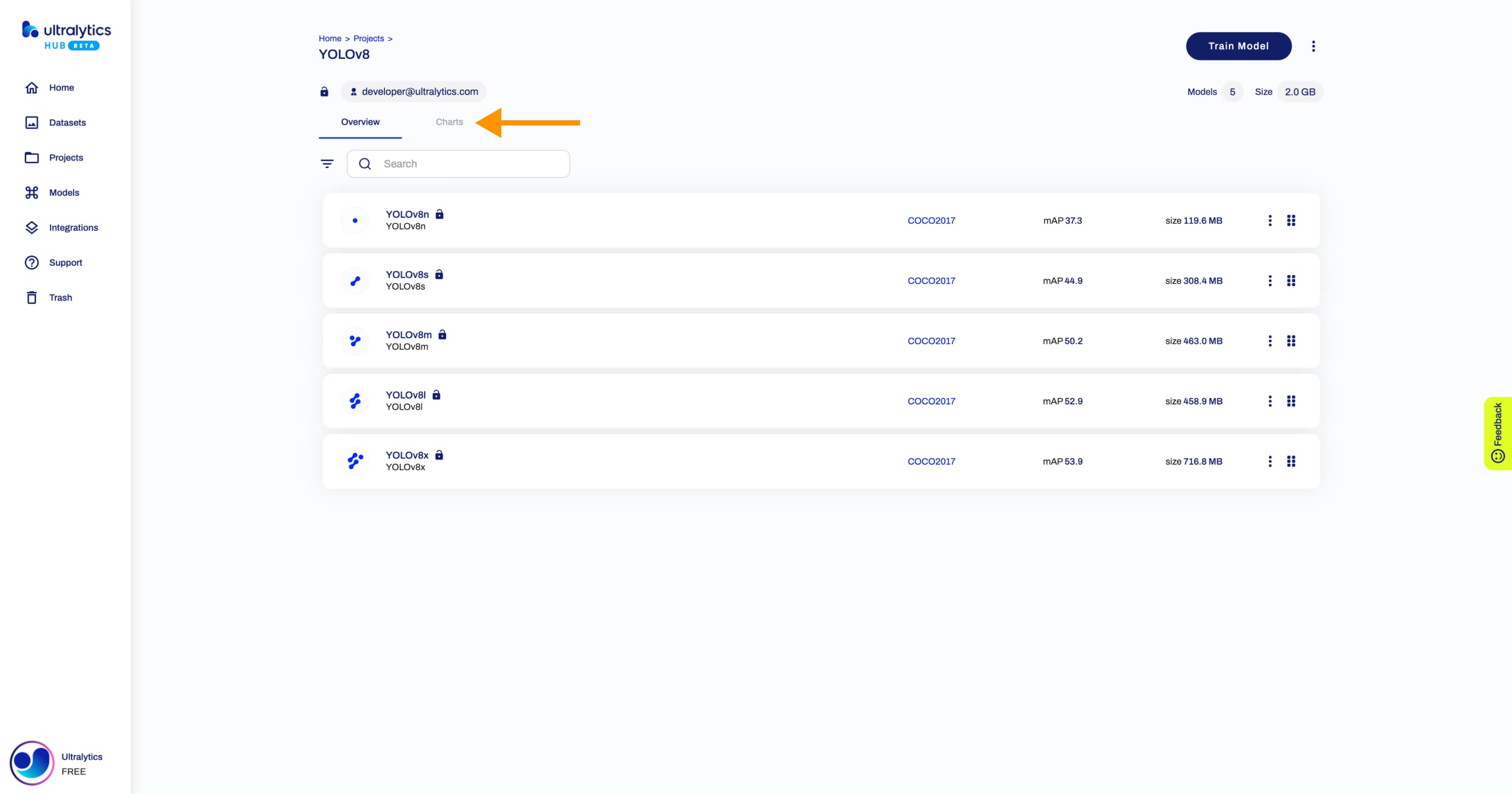

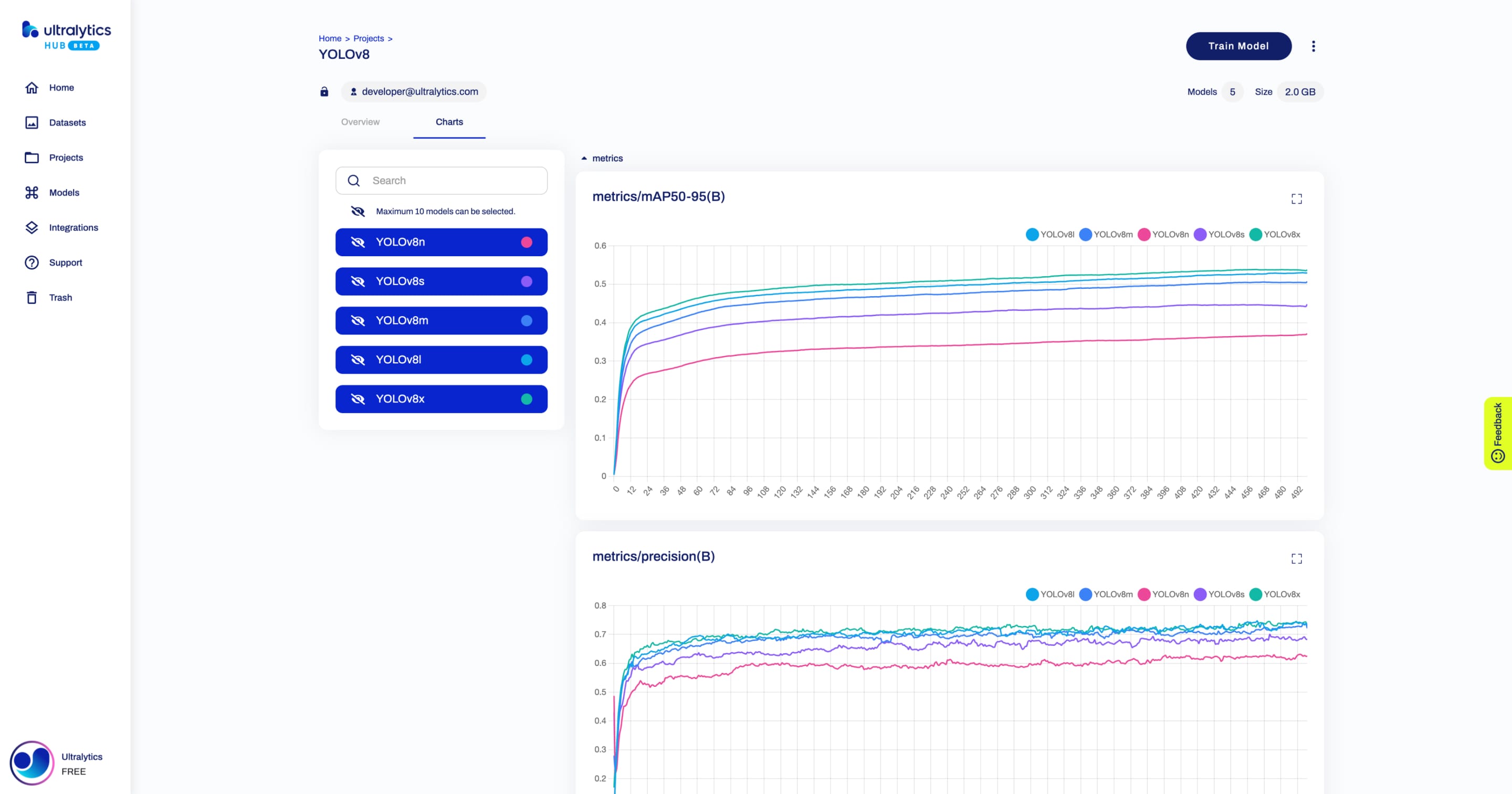

This will display all the relevant charts. Each chart corresponds to a different metric and contains the performance of each model for that metric. The models are represented by different colors, and you can hover over each data point to get more information.

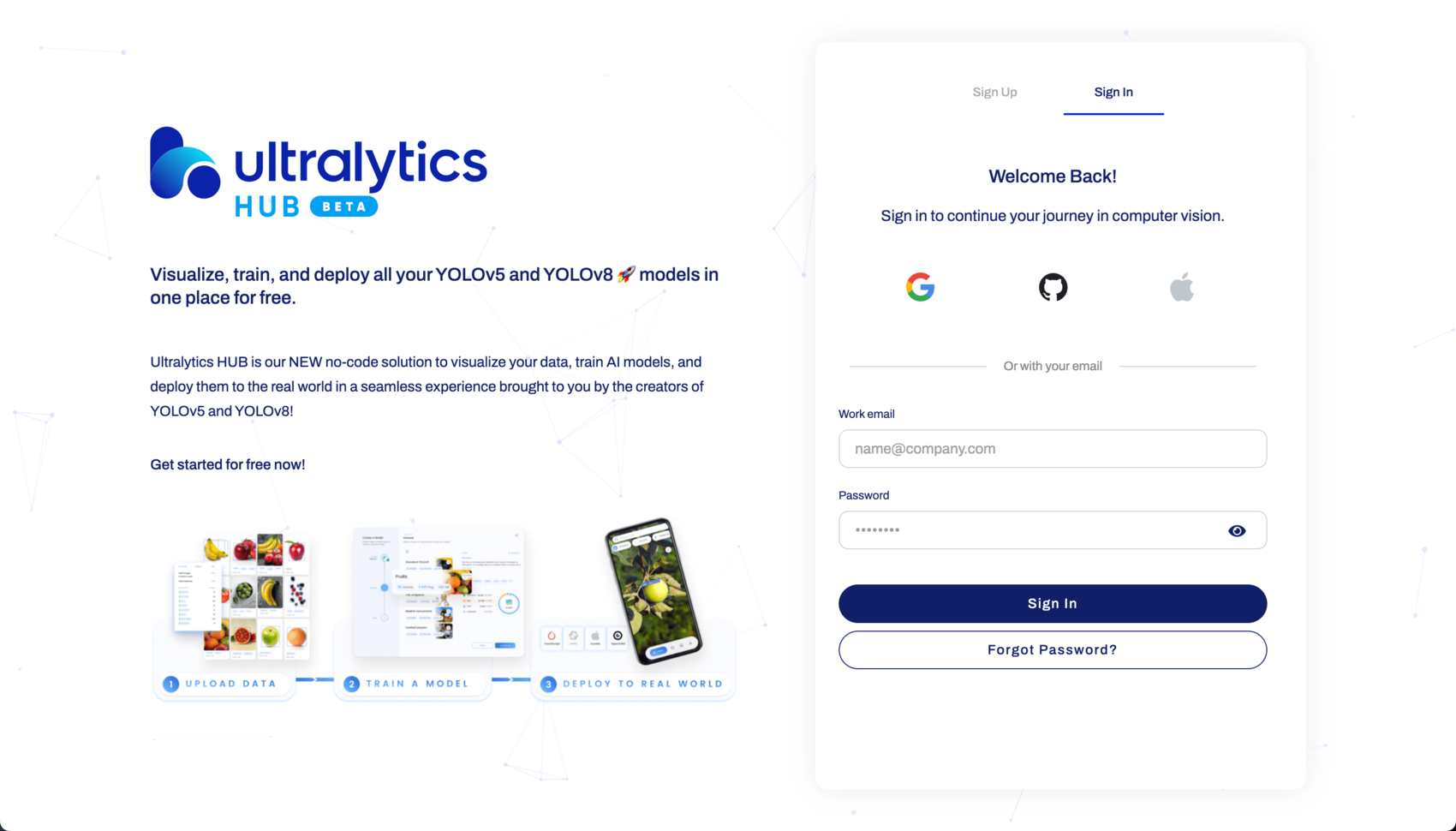

## Creating an Account

[Ultralytics HUB](https://hub.ultralytics.com/) offers several easy account creation options. You can register and sign in with a Google, Apple, or GitHub account, or with a work email address (preferably for corporate users).

## The Dashboard

After logging in, you are directed to the HUB dashboard, which provides a comprehensive overview and displays the Welcome tutorial. The left pane offers links for tasks such as Uploading Datasets, Creating Projects, Training Models, Integrating Third-party Applications, Accessing Support, and Managing Trash.

## Train the Model

Once you have chosen a dataset, train a model by selecting the project name, model name, and architecture. Ultralytics offers a range of YOLOv8, YOLOv5, and YOLOv5u6 architectures, and you can also start from a previously trained or custom model. The advanced options let you fine-tune settings such as Pre-trained, Epochs, Image Size, Caching Strategy, Device Type, Number of GPUs, Batch Size, AMP status, and Freeze. Read more about models on the [HUB Models page](models.md).

There are three ways to train your model: using Google Colab, training locally on your own agent, or through Ultralytics Cloud. Learn more about the training options on the [Cloud Training Page](cloud-training.md).
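The advanced training options (Pre-trained, Epochs, Image Size, Caching Strategy, Device, Number of GPUs, Batch Size, AMP, Freeze) map naturally onto a small configuration object. In the hypothetical `TrainOptions` dataclass below, the field names match arguments accepted by `model.train()` in the `ultralytics` Python package: `pretrained`, `epochs`, `imgsz`, `cache`, `device`, `batch`, `amp`, and `freeze`. The values are examples only, and how the HUB itself stores these settings is not documented here. As in `ultralytics`, the number of GPUs is expressed through the `device` string.

```python
from dataclasses import asdict, dataclass
from typing import Optional, Union


@dataclass
class TrainOptions:
    """Hypothetical container for the advanced training options.

    Field names mirror `model.train()` arguments in the `ultralytics` package;
    the values are examples, not HUB defaults.
    """

    pretrained: bool = True  # start from pre-trained (e.g. COCO) weights
    epochs: int = 100
    imgsz: int = 640  # training image size in pixels
    cache: Union[bool, str] = False  # caching strategy: False, "ram", or "disk"
    device: str = "0"  # "cpu", a single GPU "0", or several GPUs such as "0,1"
    batch: int = 16
    amp: bool = True  # automatic mixed precision
    freeze: Optional[int] = None  # freeze the first N layers; None trains all layers

    def num_gpus(self) -> int:
        """Count the GPUs selected by the device string ("cpu" means none)."""
        if self.device.strip().lower() == "cpu":
            return 0
        return len([d for d in self.device.split(",") if d.strip()])


opts = TrainOptions(epochs=50, device="0,1", freeze=10)
kwargs = asdict(opts)
print(opts.num_gpus())  # 2
# With the ultralytics package installed, these could be passed on, for example:
# model.train(data="your-dataset.yaml", **kwargs)  # "your-dataset.yaml" is a placeholder
```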

## Integrating the Model

Integrate your trained model with third-party applications or connect HUB from an external agent. Ultralytics HUB currently supports simple one-click API Integration with Roboflow. Read more about integration on the [Integration Page](integrations.md).

## Need Help?

If you encounter any issues or have questions, we're here to assist you. You can report a bug, request a feature, or ask a question.

# Process results list
for result in results:
    boxes = result.boxes  # Boxes object for bounding box outputs
    masks = result.masks  # Masks object for segmentation masks outputs
    keypoints = result.keypoints  # Keypoints object for pose outputs
    probs = result.probs  # Probs object for classification outputs
    result.show()  # display to screen
    result.save(filename='result.jpg')  # save to disk

```

=== "Return a generator with `stream=True`"

# Process results generator
for result in results:
    boxes = result.boxes  # Boxes object for bounding box outputs
    masks = result.masks  # Masks object for segmentation masks outputs
    keypoints = result.keypoints  # Keypoints object for pose outputs
    probs = result.probs  # Probs object for classification outputs
    result.show()  # display to screen
    result.save(filename='result.jpg')  # save to disk

```
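The two tabs differ only in how results are delivered. A list holds every `Results` object in memory at once, while `stream=True` returns a generator that produces one result per iteration. The library-free analogy below uses a hypothetical `fake_predict` function in place of a YOLO model to show that behavior.

```python
from typing import Iterator, List


def fake_predict(sources: List[str]) -> Iterator[dict]:
    """Hypothetical stand-in for a model call: yields one small result per source."""
    for src in sources:
        # A real Results object also carries the image, boxes, masks, and so on.
        yield {"path": src}


frames = [f"frame_{i:04d}.jpg" for i in range(1000)]

# Like the default call: all 1000 results exist in memory at the same time.
results_list = list(fake_predict(frames))

# Like stream=True: each result is created only when the loop asks for it.
results_gen = fake_predict(frames)
for result in results_gen:
    print(result["path"])  # process one result, then let it be garbage-collected
    break  # stop early for this demo; later results are not created unless iterated
```

For long videos or many images, the generator form keeps memory use roughly constant instead of growing with the number of frames.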

## Inference Sources

## Image and Video Formats

YOLOv8 supports various image and video formats, as specified in [ultralytics/data/utils.py](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/data/utils.py). See the tables below for the valid suffixes and example predict commands.
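Whether a source is handled as an image or a video depends on its file suffix. The sketch below mimics that check with small, illustrative suffix sets. The authoritative lists are the `IMG_FORMATS` and `VID_FORMATS` sets in the linked `utils.py`, which contain more entries. The `source_kind` helper is hypothetical.

```python
from pathlib import Path

# Illustrative subsets only; see ultralytics/data/utils.py for the full lists.
IMAGE_SUFFIXES = {"bmp", "jpeg", "jpg", "png", "tif", "tiff", "webp"}
VIDEO_SUFFIXES = {"avi", "mkv", "mov", "mp4", "webm"}


def source_kind(path: str) -> str:
    """Classify a file path as 'image', 'video', or 'unsupported' by its suffix."""
    suffix = Path(path).suffix.lower().lstrip(".")
    if suffix in IMAGE_SUFFIXES:
        return "image"
    if suffix in VIDEO_SUFFIXES:
        return "video"
    return "unsupported"


print(source_kind("bus.JPG"))         # image
print(source_kind("clips/demo.mp4"))  # video
print(source_kind("notes.txt"))       # unsupported
```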

### Images

| `masks` | `Masks, optional` | A Masks object containing the detection masks. |
| `probs` | `Probs, optional` | A Probs object containing probabilities of each class for the classification task. |
| `keypoints` | `Keypoints, optional` | A Keypoints object containing detected keypoints for each object. |
| `obb` | `OBB, optional` | An OBB object containing the oriented detection bounding boxes. |
| `update()` | `None` | Update the boxes, masks, and probs attributes of the Results object. |
| `cpu()` | `Results` | Return a copy of the Results object with all tensors on CPU memory. |
| `numpy()` | `Results` | Return a copy of the Results object with all tensors as numpy arrays. |
| `cuda()` | `Results` | Return a copy of the Results object with all tensors on GPU memory. |
| `to()` | `Results` | Return a copy of the Results object with tensors on the specified device and dtype. |
| `new()` | `Results` | Return a new Results object with the same image, path, and names. |
| `plot()` | `numpy.ndarray` | Plots the detection results. Returns a numpy array of the annotated image. |
| `show()` | `None` | Show annotated results to screen. |
| `save()` | `None` | Save annotated results to file. |
| `verbose()` | `str` | Return log string for each task. |
| `save_txt()` | `None` | Save predictions into a txt file. |
| `save_crop()` | `None` | Save cropped predictions to `save_dir/cls/file_name.jpg`. |
| `tojson()` | `str` | Convert the object to JSON format. |

For more details see the [`Results` class documentation](../reference/engine/results.md).

### Boxes

| `xyxyn` | Property (`torch.Tensor`) | Return the boxes in xyxy format normalized by original image size. |
| `xywhn` | Property (`torch.Tensor`) | Return the boxes in xywh format normalized by original image size. |

For more details see the [`Boxes` class documentation](../reference/engine/results.md#ultralytics.engine.results.Boxes).
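The plain-Python sketch below shows the arithmetic behind the `xywh`, `xyxyn`, and `xywhn` properties for a single hypothetical box. It assumes, as in Ultralytics, that `xywh` means center x, center y, width, height, and that `orig_shape` is ordered (height, width). The real properties operate on batched `torch.Tensor`s.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]


def xyxy_to_xywh(box: Box) -> Box:
    """(x1, y1, x2, y2) corners -> (x_center, y_center, width, height)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1)


def normalize(box: Box, orig_shape: Tuple[int, int]) -> Box:
    """Divide x values by the image width and y values by the image height."""
    h, w = orig_shape
    a, b, c, d = box
    return (a / w, b / h, c / w, d / h)


xyxy = (100.0, 50.0, 300.0, 250.0)
shape = (500, 400)  # image height, width
print(xyxy_to_xywh(xyxy))                    # (200.0, 150.0, 200.0, 200.0)
print(normalize(xyxy, shape))                # xyxyn: (0.25, 0.1, 0.75, 0.5)
print(normalize(xyxy_to_xywh(xyxy), shape))  # xywhn: (0.5, 0.3, 0.5, 0.4)
```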

### Masks

| `xyn` | Property (`torch.Tensor`) | A list of normalized segments represented as tensors. |
| `xy` | Property (`torch.Tensor`) | A list of segments in pixel coordinates represented as tensors. |

For more details see the [`Masks` class documentation](../reference/engine/results.md#ultralytics.engine.results.Masks).
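The `xy` and `xyn` properties describe the same mask outlines in two coordinate systems. The plain-Python sketch below converts one hypothetical polygon between them, assuming `orig_shape` is (height, width). The library applies the same scaling to every segment.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def to_normalized(segment: List[Point], orig_shape: Tuple[int, int]) -> List[Point]:
    """Pixel polygon (as in `xy`) -> coordinates in [0, 1] (as in `xyn`)."""
    h, w = orig_shape
    return [(x / w, y / h) for x, y in segment]


def to_pixels(segment: List[Point], orig_shape: Tuple[int, int]) -> List[Point]:
    """Inverse mapping: normalized polygon -> pixel coordinates."""
    h, w = orig_shape
    return [(x * w, y * h) for x, y in segment]


polygon = [(40.0, 20.0), (120.0, 20.0), (120.0, 100.0)]  # hypothetical mask outline
shape = (200, 160)  # image height, width
norm = to_normalized(polygon, shape)
print(norm)  # [(0.25, 0.1), (0.75, 0.1), (0.75, 0.5)]
```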

### Keypoints

| `xy` | Property (`torch.Tensor`) | A list of keypoints in pixel coordinates represented as tensors. |
| `conf` | Property (`torch.Tensor`) | Returns confidence values of keypoints if available, else None. |

For more details see the [`Keypoints` class documentation](../reference/engine/results.md#ultralytics.engine.results.Keypoints).
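A common use of the `conf` property is to drop low-confidence keypoints before drawing or analysis. The sketch below does this in plain Python for one hypothetical person. The 0.5 threshold is an arbitrary assumption, and the `None` branch mirrors the case where confidence values are unavailable.

```python
from typing import List, Optional, Tuple

Keypoint = Tuple[float, float]


def visible_keypoints(
    xy: List[Keypoint], conf: Optional[List[float]], threshold: float = 0.5
) -> List[Keypoint]:
    """Keep keypoints whose confidence is at least `threshold`.

    If `conf` is None (no confidence values available), all keypoints are kept.
    """
    if conf is None:
        return list(xy)
    return [pt for pt, c in zip(xy, conf) if c >= threshold]


xy = [(10.0, 20.0), (15.0, 25.0), (0.0, 0.0)]  # hypothetical keypoints for one person
conf = [0.9, 0.6, 0.1]
print(visible_keypoints(xy, conf))  # [(10.0, 20.0), (15.0, 25.0)]
print(visible_keypoints(xy, None))  # all three keypoints
```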

### Probs

| `top1conf` | Property (`torch.Tensor`) | Confidence of the top 1 class. |
| `top5conf` | Property (`torch.Tensor`) | Confidences of the top 5 classes. |

For more details see the [`Probs` class documentation](../reference/engine/results.md#ultralytics.engine.results.Probs).
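The top-1 and top-5 confidences come from ranking the class probabilities. The plain-Python sketch below reproduces that ranking for a hypothetical six-class output. In the library, the matching class indices are available through the `top1` and `top5` properties.

```python
from typing import List, Tuple


def top_k(probs: List[float], k: int) -> Tuple[List[int], List[float]]:
    """Return the indices of the k most probable classes and their confidences."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return order, [probs[i] for i in order]


probs = [0.05, 0.6, 0.1, 0.02, 0.15, 0.08]  # hypothetical 6-class output
top5, top5conf = top_k(probs, 5)
top1, top1conf = top5[0], top5conf[0]
print(top1, top1conf)  # 1 0.6
print(top5)            # [1, 4, 2, 5, 0]
```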

### OBB

| `xyxyxyxy` | Property (`torch.Tensor`) | Return the rotated boxes in xyxyxyxy format. |
| `xyxyxyxyn` | Property (`torch.Tensor`) | Return the rotated boxes in xyxyxyxy format normalized by image size. |

For more details see the [`OBB` class documentation](../reference/engine/results.md#ultralytics.engine.results.OBB).
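The rotated corner format relates to a center-size-angle description (the `xywhr` property) through a standard 2-D rotation. The sketch below uses that rotation matrix to compute the four corners of one box. The corner order and the sign convention for the angle are assumptions here, so compare them against the library's own conversion before relying on them.

```python
import math
from typing import List, Tuple


def xywhr_to_corners(
    cx: float, cy: float, w: float, h: float, r: float
) -> List[Tuple[float, float]]:
    """Return the 4 corners of a w x h box centered at (cx, cy), rotated by r radians.

    Uses the standard rotation matrix; in image coordinates (y pointing down),
    a positive r appears as a clockwise rotation.
    """
    cos_r, sin_r = math.cos(r), math.sin(r)
    corners = []
    for dx, dy in ((w / 2, h / 2), (w / 2, -h / 2), (-w / 2, -h / 2), (-w / 2, h / 2)):
        corners.append((cx + dx * cos_r - dy * sin_r, cy + dx * sin_r + dy * cos_r))
    return corners


print(xywhr_to_corners(50, 40, 20, 10, 0.0))  # [(60.0, 45.0), (60.0, 35.0), (40.0, 35.0), (40.0, 45.0)]
```

Dividing each x by the image width and each y by the image height gives the normalized `xyxyxyxyn` form.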

## Plotting Results

The `plot()` method of `Results` objects visualizes predictions by overlaying the detected objects (such as bounding boxes, masks, keypoints, and probabilities) onto the original image. It returns the annotated image as a NumPy array in BGR channel order, ready to be displayed or saved.
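`plot()` returns BGR data, but PIL expects RGB. The example that follows therefore reverses the last axis with `im_array[..., ::-1]`. The dependency-free sketch below performs the same per-pixel channel reversal on a tiny hypothetical image stored as nested lists.

```python
from typing import List

Pixel = List[int]


def bgr_to_rgb(image: List[List[Pixel]]) -> List[List[Pixel]]:
    """Reverse the channel order of every pixel, which is what
    `im_array[..., ::-1]` does for a NumPy array of shape (H, W, 3)."""
    return [[pixel[::-1] for pixel in row] for row in image]


bgr = [[[255, 0, 0], [0, 0, 255]]]  # 1x2 image in BGR order: a blue pixel, then a red pixel
print(bgr_to_rgb(bgr))  # [[[0, 0, 255], [255, 0, 0]]]
```

Applying the reversal twice restores the original order, so the same operation also converts RGB back to BGR.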

!!! Example "Plotting"

model = YOLO('yolov8n.pt')

# Run inference on 'bus.jpg'
results = model('bus.jpg')  # results list

# Show the results
for r in results:
    im_array = r.plot()  # plot a BGR numpy array of predictions
    im = Image.fromarray(im_array[..., ::-1])  # RGB PIL image
    im.show()  # show image
    im.save('results.jpg')  # save image

results = model(['bus.jpg', 'zidane.jpg'])  # results list