The [Ultralytics HUB](https://ultralytics.com/hub) Inference API allows you to run inference through our REST API without the need to install and set up the Ultralytics YOLO environment locally.

After you [train a model](./models.md#train-model), you can use the [Shared Inference API](#shared-inference-api) for free. If you are a [Pro](./pro.md) user, you can access the [Dedicated Inference API](#dedicated-inference-api). The [Ultralytics HUB](https://ultralytics.com/hub) Inference API allows you to run inference through our REST API without the need to install and set up the Ultralytics YOLO environment locally.

@ -20,6 +20,40 @@ The [Ultralytics HUB](https://ultralytics.com/hub) Inference API allows you to r

<strong>Watch:</strong> Ultralytics HUB Inference API Walkthrough

</p>

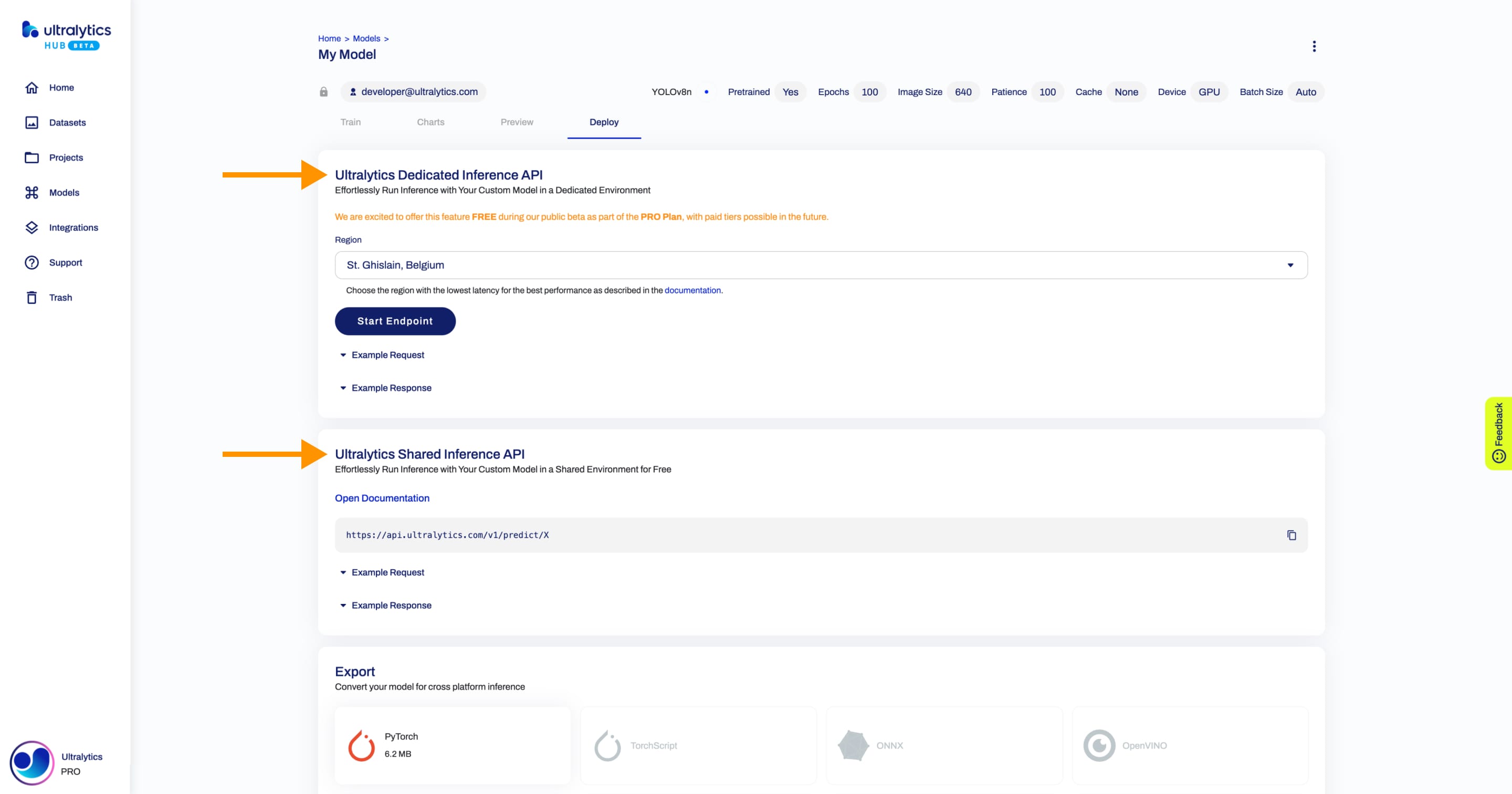

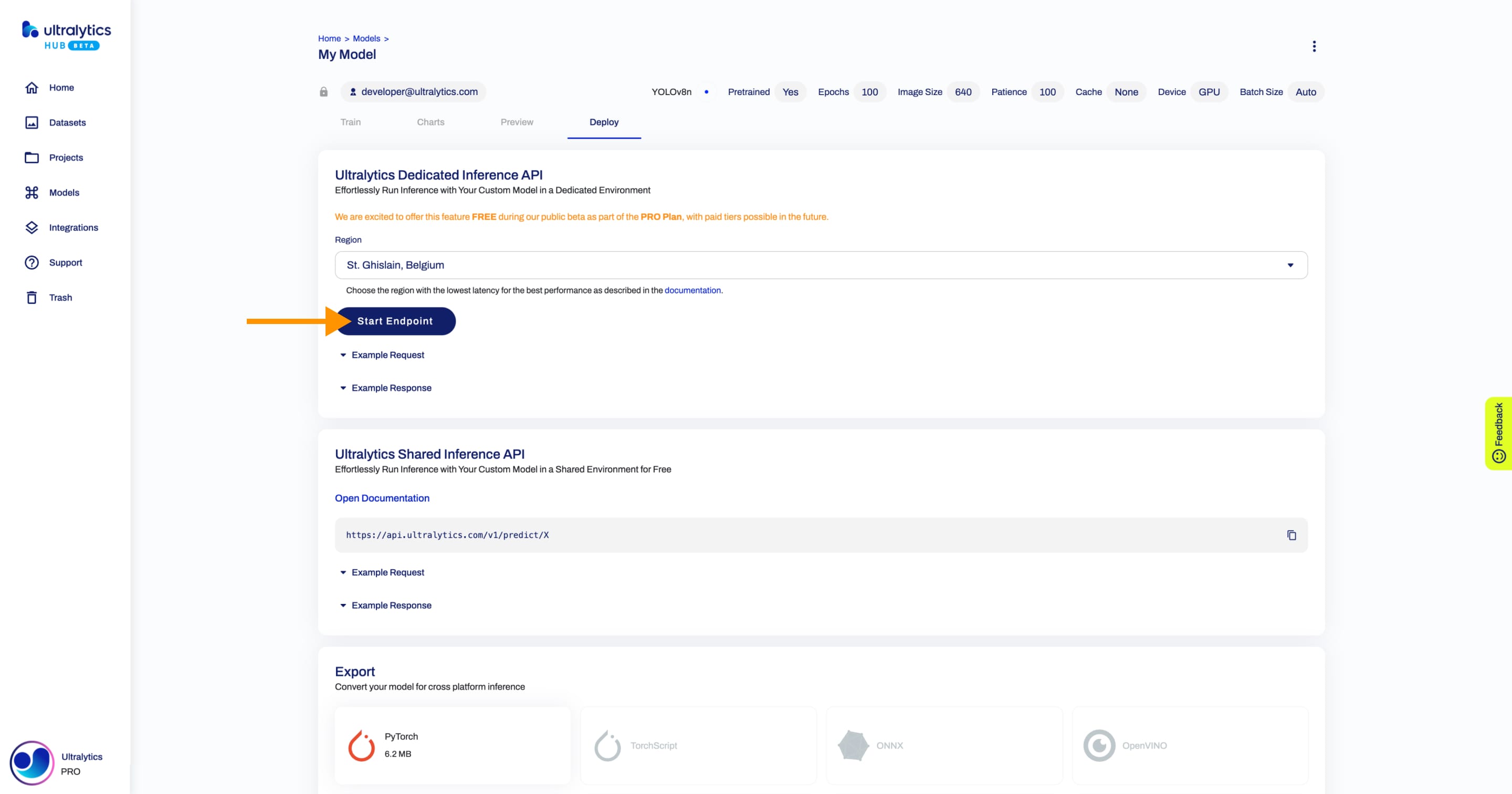

## Dedicated Inference API

In response to high demand and widespread interest, we are thrilled to unveil the [Ultralytics HUB](https://ultralytics.com/hub) Dedicated Inference API, offering single-click deployment in a dedicated environment for our [Pro](./pro.md) users!

!!! note "Note"

We are excited to offer this feature FREE during our public beta as part of the [Pro Plan](./pro.md), with paid tiers possible in the future.

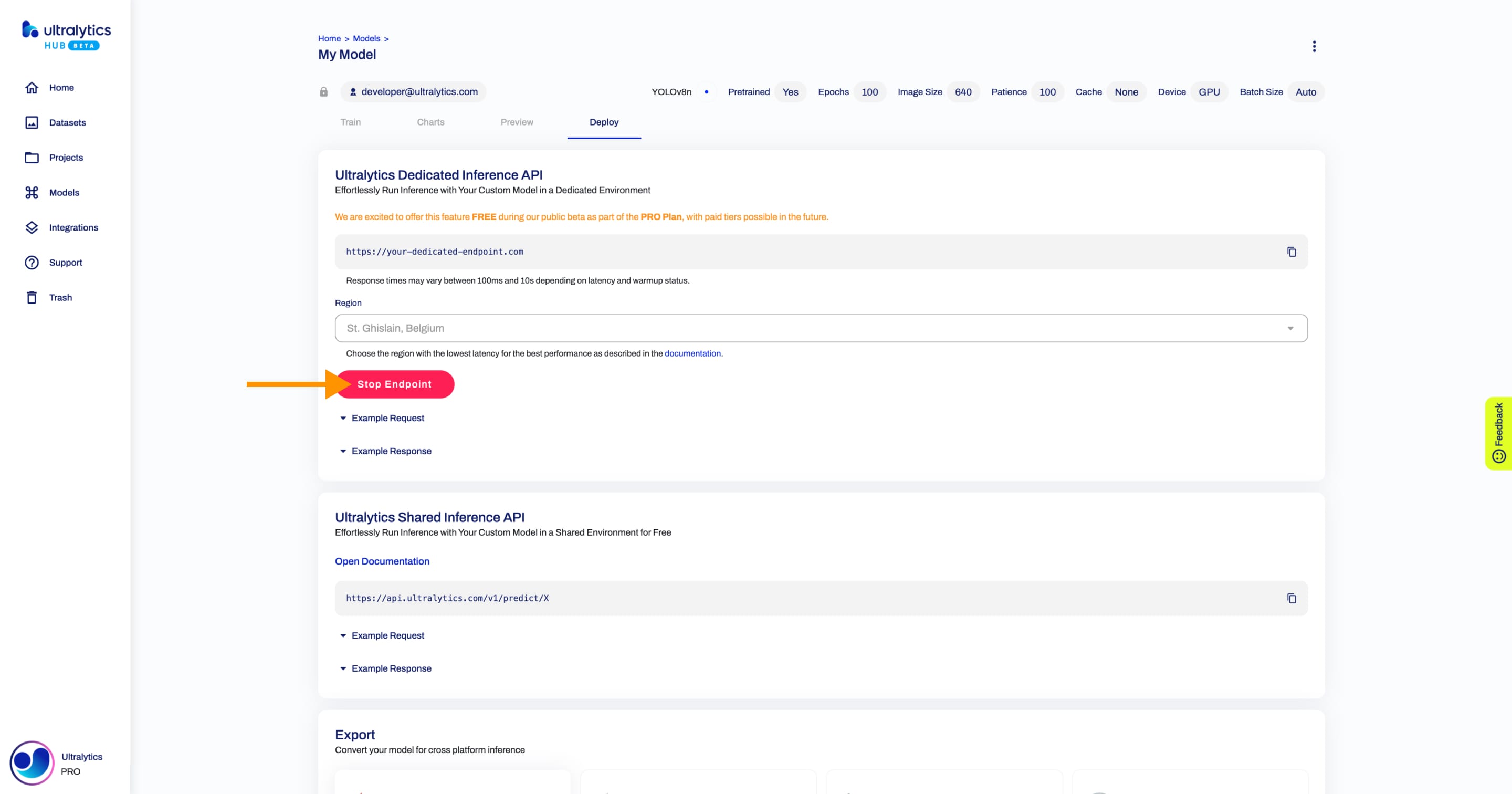

To use the [Ultralytics HUB](https://ultralytics.com/hub) Dedicated Inference API, click on the **Start Endpoint** button. Next, use the unique endpoint URL as described in the guides below.

!!! tip "Tip"

Choose the region with the lowest latency for the best performance as described in the [documentation](https://docs.ultralytics.com/reference/hub/google/__init__).

To shut down the dedicated endpoint, click on the **Stop Endpoint** button.

## Shared Inference API

To use the [Ultralytics HUB](https://ultralytics.com/hub) Shared Inference API, follow the guides below.

Free users have the following usage limits:

- 100 calls / hour

- 1000 calls / month

[Pro](./pro.md) users have the following usage limits:

- 1000 calls / hour

- 10000 calls / month

## Python

To access the [Ultralytics HUB](https://ultralytics.com/hub) Inference API using Python, use the following code:

data = {"size": 640, "confidence": 0.25, "iou": 0.45}

data = {"imgsz": 640, "conf": 0.25, "iou": 0.45}

# Load image and send request

with open("path/to/image.jpg", "rb") as image_file:

@ -48,6 +82,8 @@ print(response.json())

Replace `MODEL_ID` with the desired model ID, `API_KEY` with your actual API key, and `path/to/image.jpg` with the path to the image you want to run inference on.

If you are using our [Dedicated Inference API](#dedicated-inference-api), replace the `url` as well.

## cURL

To access the [Ultralytics HUB](https://ultralytics.com/hub) Inference API using cURL, use the following code:

@ -56,8 +92,8 @@ To access the [Ultralytics HUB](https://ultralytics.com/hub) Inference API using

curl -X POST "https://api.ultralytics.com/v1/predict/MODEL_ID" \

-H "x-api-key: API_KEY" \

-F "image=@/path/to/image.jpg" \

-F "size=640" \

-F "confidence=0.25" \

-F "imgsz=640" \

-F "conf=0.25" \

-F "iou=0.45"

```

@ -65,17 +101,18 @@ curl -X POST "https://api.ultralytics.com/v1/predict/MODEL_ID" \

Replace `MODEL_ID` with the desired model ID, `API_KEY` with your actual API key, and `path/to/image.jpg` with the path to the image you want to run inference on.

If you are using our [Dedicated Inference API](#dedicated-inference-api), replace the `url` as well.

## Arguments

See the table below for a full list of available inference arguments.

@ -104,7 +104,7 @@ YOLOv8 can process different types of input sources for inference, as shown in t

Use `stream=True` for processing long videos or large datasets to efficiently manage memory. When `stream=False`, the results for all frames or data points are stored in memory, which can quickly add up and cause out-of-memory errors for large inputs. In contrast, `stream=True` utilizes a generator, which only keeps the results of the current frame or data point in memory, significantly reducing memory consumption and preventing out-of-memory issues.