
Polling Engines

Author: Sree Kuchibhotla (@sreecha) - Sep 2018

Why do we need a 'polling engine'?

The polling engine component was created for the following reasons:

- gRPC code deals with a bunch of file descriptors on which events (the descriptor becoming readable or writable, or hitting an error) have to be monitored

- gRPC code knows the actions to perform when such events happen

- For example:

  - `grpc_endpoint` code calls `recvmsg` when the fd is readable and `sendmsg` when the fd is writable
  - `tcp_client` connect code issues an async `connect` and finishes creating the client once the fd is writable (i.e. when the `connect` has actually finished)

- gRPC needed some component that can "efficiently" do the above operations using the threads provided by the applications (i.e., not create any new threads). Also by "efficiently" we mean optimized for latency and throughput

Polling Engine Implementations in gRPC

There are multiple polling engine implementations depending on the OS and the OS version. Fortunately all of them expose the same interface

- Linux:

  - `epollex` (default, but requires kernel version >= 4.5)
  - `epoll1` (if `epollex` is not available and glibc version >= 2.9)
  - `poll` (if the kernel does not have epoll support)
  - `poll-cv` (if explicitly configured)

- Mac: `poll` (default), `poll-cv` (if explicitly configured)

- Windows: (no name)

- One-off polling engines:

  - AppEngine platform: `poll-cv` (default)
  - NodeJS: `libuv` polling engine implementation (requires different compile `#define`s)

Polling Engine Interface

Opaque Structures exposed by the polling engine

The following are the opaque structures exposed by the polling engine interface (NOTE: Different polling engine implementations have different definitions of these structures)

- grpc_fd: Structure representing a file descriptor

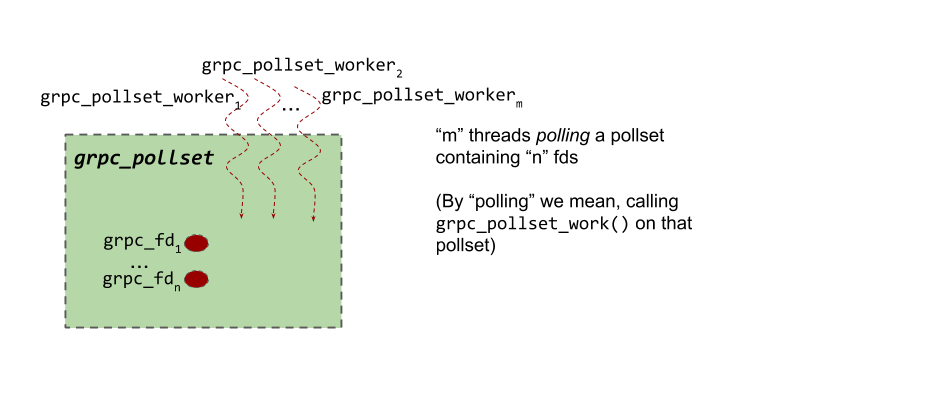

- grpc_pollset: A set of one or more grpc_fds that are ‘polled’ for readable/writable/error events. One grpc_fd can be in multiple

grpc_pollsets - grpc_pollset_worker: Structure representing a ‘polling thread’ - more specifically, the thread that calls

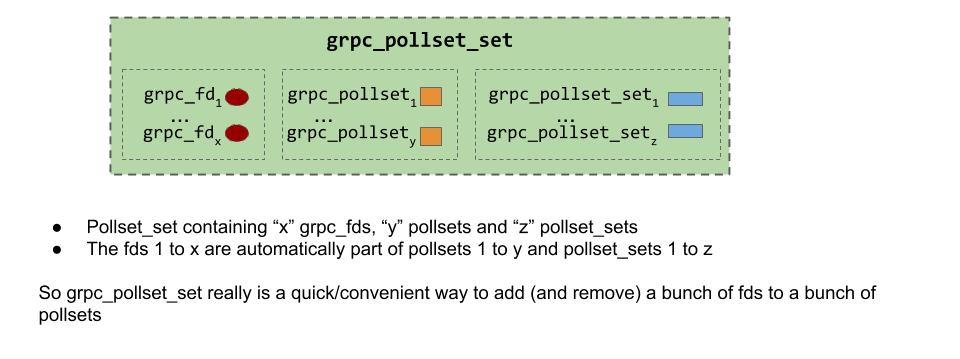

grpc_pollset_work()API - grpc_pollset_set: A group of

grpc_fds,grpc_pollsetsandgrpc_pollset_sets(yes, agrpc_pollset_setcan contain othergrpc_pollset_sets)

Polling engine API

grpc_fd

- `grpc_fd_notify_on_[read|write|error]`

  - Signature: `grpc_fd_notify_on_[read|write|error](grpc_fd* fd, grpc_closure* closure)`
  - Register a closure to be called when the fd becomes readable/writable or has an error (in grpc parlance, we refer to this act as “arming the fd”)
  - The closure is called exactly once per event. I.e. once the fd becomes readable (or writable or error), the closure is fired and the fd is ‘unarmed’. To be notified again, the fd has to be armed again.

- `grpc_fd_shutdown`

  - Signature: `grpc_fd_shutdown(grpc_fd* fd)`
  - Any current (or future) closures registered for readable/writable/error events are scheduled immediately with an error

- `grpc_fd_orphan`

  - Signature: `grpc_fd_orphan(grpc_fd* fd, grpc_closure* on_done, int* release_fd, char* reason)`
  - Release the `grpc_fd` structure and call the `on_done` closure when the operation is complete
  - If `release_fd` is set to `nullptr`, then `close()` the underlying fd as well. If not, put the underlying fd in `release_fd` (and do not call `close()`)
    - `release_fd` is set to non-null in cases where the underlying fd is NOT owned by grpc core (for example, the fds used by the C-Ares DNS resolver)
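The one-shot "arming" behaviour described above can be sketched with a toy class. This is not gRPC code: `ToyFd`, `NotifyOnRead`, `SetReadable` and `Shutdown` are hypothetical names, only the read side is modelled, and the choice to remember an event that arrives while the fd is unarmed is an assumption of this sketch.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>

// Hypothetical stand-in for grpc_fd; models only the "readable" event.
class ToyFd {
 public:
  // The closure receives an empty string on success, or an error description.
  using Closure = std::function<void(const std::string& error)>;

  // "Arm" the fd: the closure runs at most once, for the next readable event.
  void NotifyOnRead(Closure closure) {
    if (shutdown_) {
      closure("fd shutdown");  // closures registered after shutdown fail at once
      return;
    }
    if (pending_readable_) {   // assumption: an unconsumed event is remembered
      pending_readable_ = false;
      closure("");
      return;
    }
    armed_ = std::move(closure);
  }

  // Called by an (imaginary) poller when the descriptor becomes readable.
  void SetReadable() {
    if (armed_) {
      Closure c = std::move(armed_);
      armed_ = nullptr;        // unarm before running the closure
      c("");
    } else {
      pending_readable_ = true;
    }
  }

  // Any currently armed closure is run with an error.
  void Shutdown() {
    shutdown_ = true;
    if (armed_) {
      Closure c = std::move(armed_);
      armed_ = nullptr;
      c("fd shutdown");
    }
  }

 private:
  Closure armed_;
  bool pending_readable_ = false;
  bool shutdown_ = false;
};
```

A caller that wants a notification for every event has to call `NotifyOnRead` again from inside (or after) the closure, which is the "arm again" step the text refers to.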

grpc_pollset

- `grpc_pollset_add_fd`

  - Signature: `grpc_pollset_add_fd(grpc_pollset* ps, grpc_fd *fd)`
  - Add fd to pollset
    - NOTE: There is no `grpc_pollset_remove_fd`. This is because calling `grpc_fd_orphan()` will effectively remove the fd from all the pollsets it’s a part of

- `grpc_pollset_work`

  - Signature: `grpc_pollset_work(grpc_pollset* ps, grpc_pollset_worker** worker, grpc_millis deadline)`
    - NOTE: `grpc_pollset_work()` requires the pollset mutex to be locked before calling it. Shortly after calling `grpc_pollset_work()`, the function populates the `*worker` pointer (among other things) and releases the mutex. Once `grpc_pollset_work()` returns, the `*worker` pointer is invalid and should not be used anymore. See the code in `completion_queue.cc` to see how this is used.
  - Poll the fds in the pollset for events AND return when ANY of the following is true:
    - Deadline expired
    - Some fds in the pollset were found to be readable/writable/error and those associated closures were ‘scheduled’ (but not necessarily executed)
    - worker is “kicked” (see `grpc_pollset_kick` for more details)

- `grpc_pollset_kick`

  - Signature: `grpc_pollset_kick(grpc_pollset* ps, grpc_pollset_worker* worker)`
  - “Kick the worker”, i.e. force the worker to return from `grpc_pollset_work()`
  - If `worker == nullptr`, kick ANY worker active on that pollset
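The three return conditions of `grpc_pollset_work()` (deadline, fd events, kick) can be illustrated with a small POSIX sketch. It is not how any gRPC engine is implemented: `ToyPollset`, `WorkResult`, `Work` and `Kick` are hypothetical names, the kick is modelled with a self-pipe, and the sketch leaves out the pollset mutex, per-worker kicking and closure scheduling.

```cpp
#include <poll.h>
#include <unistd.h>

#include <cassert>
#include <vector>

enum class WorkResult { kDeadline, kEvents, kKicked };

// Hypothetical toy pollset: polls its fds plus an internal wakeup pipe.
class ToyPollset {
 public:
  ToyPollset() {
    int fds[2] = {-1, -1};
    int rc = pipe(fds);
    assert(rc == 0);
    (void)rc;
    wakeup_read_ = fds[0];
    wakeup_write_ = fds[1];
  }
  ~ToyPollset() {
    close(wakeup_read_);
    close(wakeup_write_);
  }
  ToyPollset(const ToyPollset&) = delete;
  ToyPollset& operator=(const ToyPollset&) = delete;

  void AddFd(int fd) { fds_.push_back(fd); }

  // Force the next (or a currently blocked) Work() call to return.
  void Kick() {
    char byte = 'k';
    ssize_t n = write(wakeup_write_, &byte, 1);
    (void)n;
  }

  // Blocks until the timeout expires, a kick arrives, or an fd is readable.
  WorkResult Work(int timeout_ms) {
    std::vector<pollfd> pfds;
    pfds.push_back(MakePollFd(wakeup_read_));
    for (int fd : fds_) pfds.push_back(MakePollFd(fd));
    int n = poll(pfds.data(), pfds.size(), timeout_ms);
    if (n <= 0) return WorkResult::kDeadline;  // errors folded in for brevity
    if (pfds[0].revents & POLLIN) {
      char byte;
      ssize_t r = read(wakeup_read_, &byte, 1);  // consume one kick
      (void)r;
      return WorkResult::kKicked;
    }
    return WorkResult::kEvents;
  }

 private:
  static pollfd MakePollFd(int fd) {
    pollfd p{};
    p.fd = fd;
    p.events = POLLIN;
    return p;
  }

  int wakeup_read_ = -1;
  int wakeup_write_ = -1;
  std::vector<int> fds_;
};
```

In the real API the caller also receives a `grpc_pollset_worker*` while it is inside `grpc_pollset_work()`, which is what lets another thread kick that specific worker; the toy only models the "kick any worker" case.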

grpc_pollset_set

- `grpc_pollset_set_[add|del]_fd`

  - Signature: `grpc_pollset_set_[add|del]_fd(grpc_pollset_set* pss, grpc_fd *fd)`
  - Add/Remove fd to/from the `grpc_pollset_set`

- `grpc_pollset_set_[add|del]_pollset`

  - Signature: `grpc_pollset_set_[add|del]_pollset(grpc_pollset_set* pss, grpc_pollset* ps)`
  - What does adding a pollset to a pollset_set mean?
    - It means that calling `grpc_pollset_work()` on the pollset will also poll all the fds in the pollset_set, i.e. semantically, it is similar to adding all the fds inside the pollset_set to the pollset.
    - This guarantee is no longer true once the pollset is removed from the pollset_set

- `grpc_pollset_set_[add|del]_pollset_set`

  - Signature: `grpc_pollset_set_[add|del]_pollset_set(grpc_pollset_set* bag, grpc_pollset_set* item)`
  - Semantically, this is similar to adding all the fds in the ‘bag’ pollset_set to the ‘item’ pollset_set
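The "add" semantics above can be modelled with plain sets. This is a minimal sketch under simplifying assumptions, not the gRPC data structure: `ToyPollset` and `ToyPollsetSet` are hypothetical, fds are plain integers, the `del` operations are not modelled, and cycles between pollset_sets are assumed not to occur.

```cpp
#include <cassert>
#include <set>
#include <vector>

// Hypothetical toy pollset: just the set of fds it would poll.
struct ToyPollset {
  std::set<int> fds;
};

// Hypothetical toy pollset_set: fds propagate to member pollsets and to
// nested pollset_sets ("items") for which this object is the "bag".
class ToyPollsetSet {
 public:
  void AddFd(int fd) {
    if (!fds_.insert(fd).second) return;  // already known, nothing to do
    for (ToyPollset* ps : pollsets_) ps->fds.insert(fd);
    for (ToyPollsetSet* item : items_) item->AddFd(fd);
  }

  // The pollset now polls every fd currently in this pollset_set,
  // and any fd added to it later.
  void AddPollset(ToyPollset* ps) {
    pollsets_.push_back(ps);
    ps->fds.insert(fds_.begin(), fds_.end());
  }

  // *this plays the role of 'bag': its fds are added to 'item'.
  void AddPollsetSet(ToyPollsetSet* item) {
    items_.push_back(item);
    for (int fd : fds_) item->AddFd(fd);
  }

 private:
  std::set<int> fds_;
  std::vector<ToyPollset*> pollsets_;
  std::vector<ToyPollsetSet*> items_;
};
```

With this model, an fd added to a 'bag' ends up in every pollset that belongs to any 'item' nested under it, which matches the "similar to adding all the fds" wording used above.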

Recap:

Relation between grpc_pollset_worker, grpc_pollset and grpc_fd:

grpc_pollset_set

Polling Engine Implementations

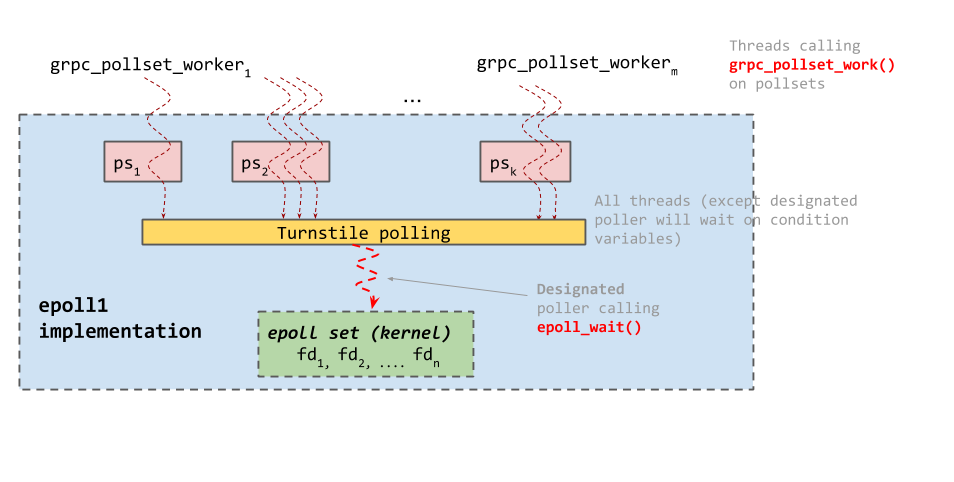

epoll1

Code at src/core/lib/iomgr/ev_epoll1_posix.cc

- The logic to choose a designated poller is quite complicated. Pollsets are internally sharded into what are called `pollset_neighborhood`s (a structure internal to the `epoll1` polling engine implementation). `grpc_pollset_worker`s that call `grpc_pollset_work` on a given pollset are all queued in a linked list against the `grpc_pollset`. The head of the linked list is called the "root worker"

- There are as many neighborhoods as the number of cores. A pollset is put in a neighborhood based on the CPU core of the root worker thread. When picking the next designated poller, we always try to find another worker on the current pollset. If there are no more workers in the current pollset, the `pollset_neighborhood` list is scanned to pick the next pollset and worker that could be the new designated poller.

  - NOTE: There is room to tune this implementation. All we really need is a good way to maintain a list of `grpc_pollset_worker`s with a way to group them per-pollset (needed to implement `grpc_pollset_kick` semantics) and a way to randomly select a new designated poller

- See the `begin_worker()` function to see how a designated poller is chosen. Similarly, the `end_worker()` function is called by the worker that has just come out of `epoll_wait()` and has to choose a new designated poller
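The selection order described above (first another worker on the current pollset, then the rest of the neighborhood) can be sketched as follows. This is a deliberately simplified illustration, not the logic in `ev_epoll1_posix.cc`: all type and function names are hypothetical, there is no locking, and the modulo mapping from CPU core to neighborhood is an assumption made only for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <vector>

struct ToyWorker {
  int id;
};

struct ToyPollset {
  // Workers currently inside "pollset work"; front() is the "root worker".
  std::list<ToyWorker*> workers;
};

struct ToyNeighborhood {
  std::vector<ToyPollset*> pollsets;
};

// Assumption for this sketch: one neighborhood per core, chosen by modulo.
std::size_t NeighborhoodIndex(unsigned cpu, std::size_t num_neighborhoods) {
  return cpu % num_neighborhoods;
}

// Picks the next designated poller when `leaving` stops polling.
// Returns nullptr if no other worker is available.
ToyWorker* PickNextDesignatedPoller(const ToyNeighborhood& hood,
                                    const ToyPollset& current,
                                    const ToyWorker* leaving) {
  // 1. Prefer another worker on the current pollset.
  for (ToyWorker* w : current.workers) {
    if (w != leaving) return w;
  }
  // 2. Otherwise scan the neighborhood for a pollset that has a worker.
  for (ToyPollset* ps : hood.pollsets) {
    if (ps == &current) continue;
    if (!ps->workers.empty()) return ps->workers.front();
  }
  return nullptr;
}
```

The sketch stops at a single neighborhood; what happens when the whole neighborhood has no worker is left out because the text above does not describe it.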

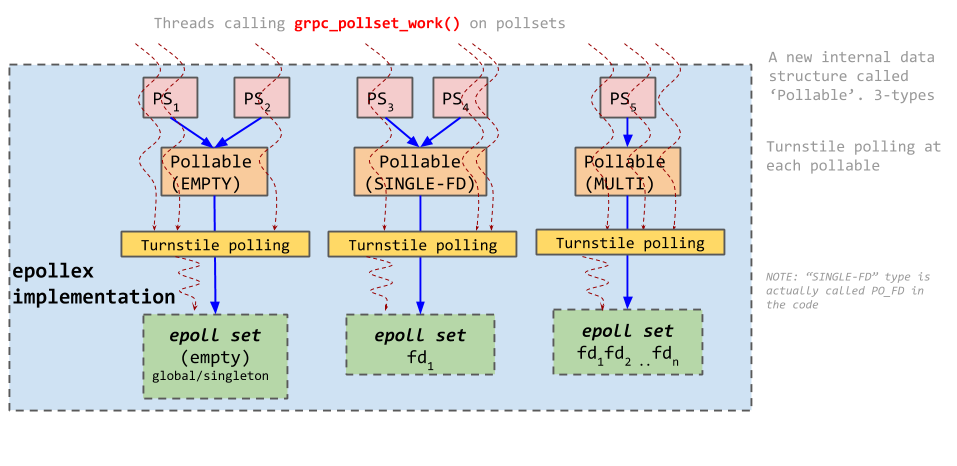

epollex

Code at src/core/lib/iomgr/ev_epollex_posix.cc

- FDs are added to multiple epollsets with the EPOLLEXCLUSIVE flag. This prevents multiple worker threads from waking up from polling whenever the fd is readable/writable

- A few observations:

  - If multiple pollsets are pointing to the same `Pollable`, then the `pollable` MUST be either empty or of type `PO_FD` (i.e. single-fd)
  - A multi-pollable has one-and-only-one incoming link from a pollset
  - The same FD can be in multiple `Pollable`s (even if one of the `Pollable`s is of type PO_FD)
  - There cannot be two `Pollable`s of type PO_FD for the same fd

- Why do we need `Pollable`s of type PO_FD and PO_EMPTY?

  - The main reason is the Sync client API
    - We create one new completion queue per call. If we didn’t have PO_EMPTY and PO_FD type pollables, then every call on a given channel would effectively have to create a `Pollable` and hence an epollset. This is because every completion queue automatically creates a pollset and the channel fd has to be put in that pollset. This clearly requires an epollset to put that fd in. Creating an epollset per call (even if we delete the epollset once the call is completed) would mean a lot of sys calls to create/delete epoll fds. This is clearly not a good idea.
    - With these new types of `Pollable`s, all pollsets (corresponding to the new per-call completion queues) will initially point to the PO_EMPTY global epollset. Then once the channel fd is added to the pollset, the pollset will point to the `Pollable` of type PO_FD containing just that fd (i.e. it will reuse the existing `Pollable`). This way, the epoll fd creation/deletion churn is avoided.
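The sharing rules listed above can be made concrete with a toy model. It is a sketch under stated assumptions, not the `epollex` implementation: `ToyEngine`, `ToyPollable` and `ToyPollset` are hypothetical, no epoll fds are created, and the step where a pollset that already points at a single-fd pollable switches to a private multi-fd pollable when a second fd is added is an assumption made to satisfy the "one incoming link" observation.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <set>

enum class PollableType { kEmpty, kFd, kMulti };

struct ToyPollable {
  PollableType type;
  std::set<int> fds;
};

// Owns the single global empty pollable and one shared pollable per fd.
class ToyEngine {
 public:
  ToyEngine()
      : empty_(std::make_shared<ToyPollable>(
            ToyPollable{PollableType::kEmpty, {}})) {}

  std::shared_ptr<ToyPollable> Empty() const { return empty_; }

  // At most one single-fd pollable exists per fd; later callers reuse it.
  std::shared_ptr<ToyPollable> SingleFd(int fd) {
    std::shared_ptr<ToyPollable>& slot = single_[fd];
    if (!slot) {
      slot = std::make_shared<ToyPollable>(
          ToyPollable{PollableType::kFd, {fd}});
    }
    return slot;
  }

 private:
  std::shared_ptr<ToyPollable> empty_;
  std::map<int, std::shared_ptr<ToyPollable>> single_;
};

class ToyPollset {
 public:
  explicit ToyPollset(ToyEngine* engine)
      : engine_(engine), pollable_(engine->Empty()) {}

  void AddFd(int fd) {
    switch (pollable_->type) {
      case PollableType::kEmpty:
        pollable_ = engine_->SingleFd(fd);  // reuse, no new "epollset"
        break;
      case PollableType::kFd: {
        if (pollable_->fds.count(fd) != 0) break;
        // Assumed transition: build a private multi-fd pollable.
        auto multi = std::make_shared<ToyPollable>(
            ToyPollable{PollableType::kMulti, pollable_->fds});
        multi->fds.insert(fd);
        pollable_ = multi;
        break;
      }
      case PollableType::kMulti:
        pollable_->fds.insert(fd);
        break;
    }
  }

  const std::shared_ptr<ToyPollable>& pollable() const { return pollable_; }

 private:
  ToyEngine* engine_;
  std::shared_ptr<ToyPollable> pollable_;
};
```

In the per-call completion queue scenario, every new pollset that only ever sees the channel fd ends up pointing at the same single-fd pollable, so nothing new has to be created per call.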

Other polling engine implementations (poll and windows polling engine)

- poll polling engine: gRPC's `poll` polling engine is quite complicated. It uses the `poll()` function to do the polling (and hence it is for platforms like macOS where epoll is not available)

  - The implementation is further complicated by the fact that `poll()` is level triggered (just keep this in mind in case you wonder why the code at `src/core/lib/iomgr/ev_poll_posix.cc` is written in a certain, seemingly complicated, way :)

- Polling engine on Windows: The Windows polling engine looks nothing like the other polling engines

  - Unlike the grpc polling engines for Unix systems (epollex, epoll1 and poll), the Windows endpoint implementation and polling engine implementation are very closely tied together
  - Windows endpoint read/write API implementations use the Windows IO API, which requires specifying an I/O completion port
  - In the Windows polling engine’s `grpc_pollset_work()` implementation, ONE of the threads is chosen to wait on the I/O completion port while other threads wait on a condition variable (much like the turnstile polling in epollex/epoll1)
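To make the "level triggered" remark about `poll()` concrete, the following POSIX sketch shows that `poll()` keeps reporting a descriptor as readable for as long as unread data remains, not just once when the data arrives. The helper names `IsReadableNow`, `Observations` and `DemoLevelTriggered` are made up for this example and have nothing to do with the gRPC sources.

```cpp
#include <poll.h>
#include <unistd.h>

#include <cassert>

// Returns true if a zero-timeout poll() reports `fd` as readable.
bool IsReadableNow(int fd) {
  pollfd p{};
  p.fd = fd;
  p.events = POLLIN;
  int n = poll(&p, 1, 0);
  return n == 1 && (p.revents & POLLIN) != 0;
}

struct Observations {
  bool first_poll;
  bool second_poll;
  bool after_read;
};

// Writes one byte to a pipe, polls twice without reading, then drains the
// byte and polls once more.
Observations DemoLevelTriggered() {
  Observations o{false, false, false};
  int fds[2] = {-1, -1};
  if (pipe(fds) != 0) return o;
  char byte = 'x';
  ssize_t w = write(fds[1], &byte, 1);
  (void)w;
  o.first_poll = IsReadableNow(fds[0]);
  o.second_poll = IsReadableNow(fds[0]);  // still readable: nothing was read
  ssize_t r = read(fds[0], &byte, 1);
  (void)r;
  o.after_read = IsReadableNow(fds[0]);   // drained, so no longer readable
  close(fds[0]);
  close(fds[1]);
  return o;
}
```

Because readiness is reported again on every call until the data is consumed, an engine with several threads polling the same fd needs extra bookkeeping to avoid waking all of them for one event.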