More work on the dualstack backend design:

- Change ring_hash policy to delegate to pick_first instead of creating
  subchannels directly.
- Note that, as mentioned in the WIP gRFC, because we lazily create the
  pick_first child policies, there's no need to swap over to a new list
  as an atomic whole. As a result, we don't use the endpoint_list library
  in this policy; instead, we just update a map in-place.
- Remove now-unused subchannel_list library.
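To illustrate the in-place map update described above, here is a minimal Python sketch. It is not the C++ ring_hash code: `LazyChild`, `get_or_create_policy`, and `update_endpoints` are hypothetical names, and the pick_first child is simulated with a plain string.

```python
class LazyChild:
    """Hypothetical map entry whose pick_first child is created lazily."""

    def __init__(self, address):
        self.address = address
        self.policy = None  # no child policy exists until it is first needed

    def get_or_create_policy(self):
        # Create the (simulated) pick_first child on first use only.
        if self.policy is None:
            self.policy = f"pick_first({self.address})"
        return self.policy


def update_endpoints(endpoint_map, new_addresses):
    """Update endpoint_map in place so its keys match new_addresses."""
    wanted = set(new_addresses)
    # Drop entries for addresses that are no longer present.
    for address in list(endpoint_map):
        if address not in wanted:
            del endpoint_map[address]
    # Add entries for new addresses. Existing entries, and any child they
    # have already created, are left untouched.
    for address in new_addresses:
        if address not in endpoint_map:
            endpoint_map[address] = LazyChild(address)


endpoints = {}
update_endpoints(endpoints, ["10.0.0.1:443", "10.0.0.2:443"])
first = endpoints["10.0.0.1:443"]
first.get_or_create_policy()
update_endpoints(endpoints, ["10.0.0.1:443", "10.0.0.3:443"])
```

Because entries that survive an update keep their identity, nothing needs to be swapped over as a whole; only the added and removed addresses are touched.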

More work on the dualstack backend design:

- Change round_robin to delegate to pick_first instead of creating
  subchannels directly.
- Change pick_first such that when it is the child of a petiole policy,
  it will unconditionally start a health watch.
- Change the client-side health checking code such that if client-side
  health checking is not enabled, it will return the subchannel's raw
  connectivity state.
- As part of this, we introduce a new endpoint_list library to be used
  by petiole policies, which is intended to replace the existing
  subchannel_list library. The only policy that will still directly
  interact with subchannels is pick_first, so the relevant parts of the
  subchannel_list functionality have been copied directly into that
  policy. The subchannel_list library will be removed after all petiole
  policies are updated to delegate to pick_first.

The address attribute interface was intended to provide a mechanism to

pass attributes separately from channel args, for values that do not

affect subchannel behavior and therefore do not need to be present in

the subchannel key, which does include channel args. However, the

mechanism as currently designed is fairly clunky and is probably not the

direction we will want to go in the long term.

Eventually, we will want some mechanism for registering channel args,

which would provide a cleaner way to indicate that a given channel arg

should not be used in the subchannel key, so that we don't need a

completely different mechanism. For now, this PR is just doing an

interim step, which is to establish a special channel arg key prefix to

indicate that an arg is not needed in the subchannel key.
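The idea of a key prefix that keeps an arg out of the subchannel key can be sketched as follows. This is a Python illustration only, not the C++ implementation: the prefix string `example.no_subchannel.` and the function `subchannel_key` are hypothetical, since the text above does not spell out the actual prefix.

```python
# Hypothetical prefix, chosen for illustration; the real prefix is not
# given in the description above.
NO_SUBCHANNEL_PREFIX = "example.no_subchannel."


def subchannel_key(address, channel_args):
    """Build a hashable key from the address and the args that matter.

    Args whose name starts with NO_SUBCHANNEL_PREFIX are skipped, so they
    can differ between two uses of the same subchannel.
    """
    relevant = sorted(
        (name, value)
        for name, value in channel_args.items()
        if not name.startswith(NO_SUBCHANNEL_PREFIX)
    )
    return (address, tuple(relevant))


args_a = {"grpc.keepalive_time_ms": 1000, "example.no_subchannel.weight": 3}
args_b = {"grpc.keepalive_time_ms": 1000, "example.no_subchannel.weight": 7}
args_c = {"grpc.keepalive_time_ms": 2000, "example.no_subchannel.weight": 3}

key_a = subchannel_key("10.0.0.1:443", args_a)
key_b = subchannel_key("10.0.0.1:443", args_b)
key_c = subchannel_key("10.0.0.1:443", args_c)
```

Here `key_a` and `key_b` are equal because they differ only in a prefixed arg, while `key_c` differs because an ordinary channel arg changed.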

Revert "Revert "[core] Add support for vsock transport" (https://github.com/grpc/grpc/pull/33276)"

This reverts commit c5ade3011a and fixes the issue that broke the Python
build.

@markdroth @drfloob please review this PR. Thank you very much.

---------

Co-authored-by: AJ Heller <hork@google.com>

The approach of doing a recursive function call to expand the if checks

for known metadata names was tripping up an optimization clang has to

collapse that if/then tree into an optimized tree search over the set of

known strings. By unrolling that loop (with a code generator) we start

to present a pattern that clang *can* recognize, and hopefully get some

more stable and faster code generation as a benefit.

<!--
If you know who should review your pull request, please assign it to that
person, otherwise the pull request would get assigned randomly.

If your pull request is for a specific language, please add the appropriate
lang label.
-->

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

This is another attempt to add support for vsock in gRPC, since the
previous PRs (#24551, #21745) were all closed without merging.

The VSOCK address family facilitates communication between virtual
machines and the host they are running on. This patch introduces a new
URI scheme, `vsock:cid:port`, to support the VSOCK address family.

Fixes #32738.
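For illustration, a target in the `vsock:cid:port` form could be parsed roughly like this. This is a minimal Python sketch, not the parser added by this patch; `parse_vsock_uri` is a hypothetical name, and the range check assumes that both fields are unsigned 32-bit values, as in Linux `struct sockaddr_vm`.

```python
import re

# Matches "vsock:<cid>:<port>" with decimal cid and port.
_VSOCK_RE = re.compile(r"vsock:([0-9]+):([0-9]+)")
_U32_MAX = 2**32 - 1  # assumption: cid and port are unsigned 32-bit


def parse_vsock_uri(uri):
    """Return (cid, port) for a 'vsock:cid:port' string, else raise ValueError."""
    match = _VSOCK_RE.fullmatch(uri)
    if match is None:
        raise ValueError(f"not a vsock URI: {uri!r}")
    cid, port = int(match.group(1)), int(match.group(2))
    if cid > _U32_MAX or port > _U32_MAX:
        raise ValueError(f"cid or port out of range: {uri!r}")
    return cid, port


cid, port = parse_vsock_uri("vsock:2:1234")
```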

---------

Signed-off-by: Yadong Qi <yadong.qi@intel.com>

Co-authored-by: AJ Heller <hork@google.com>

Co-authored-by: YadongQi <YadongQi@users.noreply.github.com>

Most of these data structures need to scale roughly with the number of
CPUs, but not strictly per-CPU. In most cases more than one CPU can hit
the same instance, and we probably want to cap the shard count well
before the hundreds of shards that a per-CPU scheme would produce on
some platforms.
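One way to express "scale with CPUs, but cap out" is sketched below in Python. The function names and the constants (`cpus_per_shard=4`, `max_shards=32`) are hypothetical and chosen only for illustration; they are not taken from this change.

```python
import os


def shard_count(cpu_count=None, cpus_per_shard=4, max_shards=32):
    """Pick a shard count that grows with CPUs but is capped.

    Several CPUs share one shard (cpus_per_shard), and the result never
    exceeds max_shards no matter how many CPUs the machine has.
    """
    if cpu_count is None:
        cpu_count = os.cpu_count() or 1
    wanted = -(-cpu_count // cpus_per_shard)  # ceiling division
    return max(1, min(max_shards, wanted))


def shard_for_cpu(cpu_id, shards):
    """Map a CPU index onto one of the available shards."""
    return cpu_id % shards


small = shard_count(cpu_count=16)   # 4 shards
large = shard_count(cpu_count=512)  # capped at 32 shards
```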

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

Upgrade the Apple platform deployment_target versions to fix the CocoaPods
push of BoringSSL-GRPC, which fails with the following error:

```
ld: file not found: /Applications/Xcode.app/Contents/Developer/Toolchains/XcodeDefault.xctoolchain/usr/lib/arc/libarclite_macosx.a
clang: error: linker command failed with exit code 1 (use -v to see invocation)
```

ref: https://developer.apple.com/forums/thread/725300

This also aligns with the versions required by
[protobuf](https://github.com/protocolbuffers/protobuf/pull/10652):

```
ios.deployment_target = '10.0'
osx.deployment_target = '10.12'
tvos.deployment_target = '12.0'
watchos.deployment_target = '6.0'
```

This test mode tries to create threads wherever it legally can to

maximize the chances of TSAN finding errors in our codebase.

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

The logger uses `absl::FPrintF` to write to stdout. After reading a
number of sources online, I got the impression that `std::fwrite`, which
is used by `absl::FPrintF`, is atomic, so no locking is required here.

---------

Co-authored-by: rockspore <rockspore@users.noreply.github.com>

This makes the JSON API visible as part of the C-core API, but in the

`experimental` namespace. It will be used as part of various

experimental APIs that we will be introducing in the near future, such

as the audit logging API.

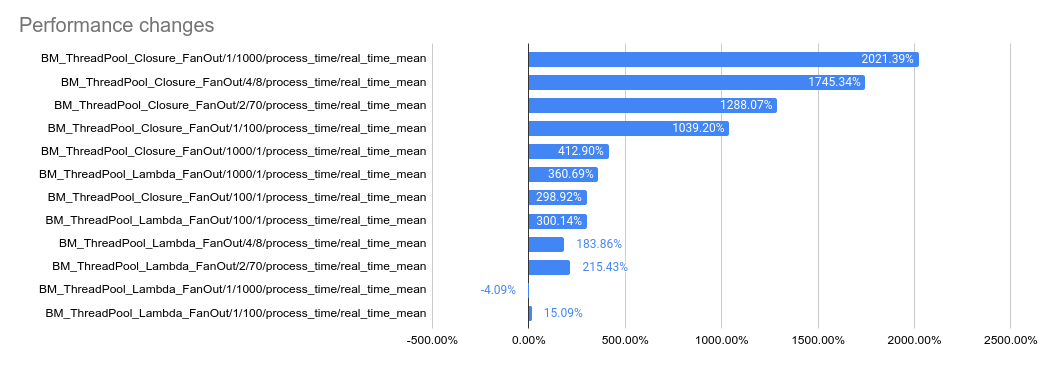

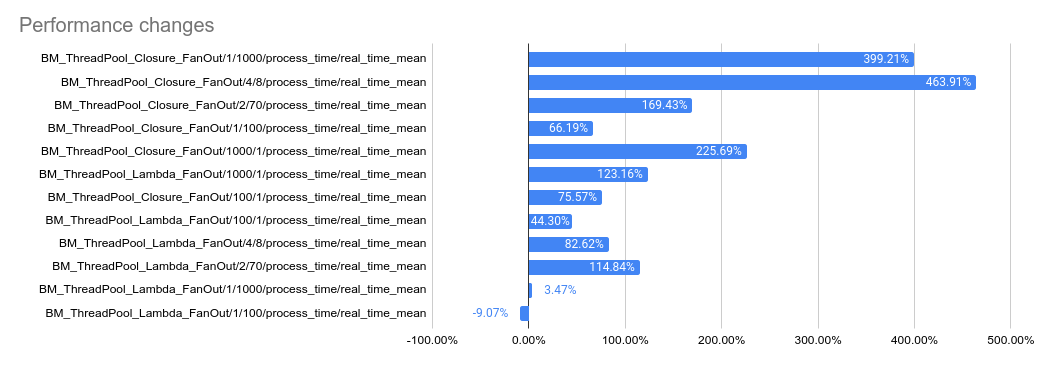

This PR implements a work-stealing thread pool for use inside

EventEngine implementations. Because of historical risks here, I've

guarded the new implementation behind an experiment flag:

`GRPC_EXPERIMENTS=work_stealing`. Current default behavior is the

original thread pool implementation.
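For readers unfamiliar with the technique, the core idea of work stealing can be sketched in a few lines of Python. This is a generic, single-threaded illustration under simplifying assumptions (no locking, no global queue), not the design of the thread pool in this PR; `Worker` and `next_task` are hypothetical names.

```python
from collections import deque


class Worker:
    """Hypothetical worker that owns a local double-ended task queue."""

    def __init__(self, name):
        self.name = name
        self.queue = deque()

    def push(self, task):
        self.queue.append(task)

    def pop_local(self):
        # The owner takes the newest task from its own end of the queue.
        return self.queue.pop() if self.queue else None

    def steal(self):
        # A thief takes the oldest task from the opposite end.
        return self.queue.popleft() if self.queue else None


def next_task(worker, all_workers):
    """Prefer local work; if there is none, try to steal from another worker."""
    task = worker.pop_local()
    if task is not None:
        return task
    for victim in all_workers:
        if victim is not worker:
            task = victim.steal()
            if task is not None:
                return task
    return None


busy, idle = Worker("busy"), Worker("idle")
for task in ("t1", "t2", "t3"):
    busy.push(task)

stolen = next_task(idle, [busy, idle])  # idle has no work, so it steals "t1"
local = next_task(busy, [busy, idle])   # busy takes its newest task, "t3"
```

Taking local work from one end and stolen work from the other keeps the owner and the thief away from each other in the common case.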

Benchmarks look very promising:

```
bazel test \
  --test_timeout=300 \
  --config=opt -c opt \
  --test_output=streamed \
  --test_arg='--benchmark_format=csv' \
  --test_arg='--benchmark_min_time=0.15' \
  --test_arg='--benchmark_filter=_FanOut' \
  --test_arg='--benchmark_repetitions=15' \
  --test_arg='--benchmark_report_aggregates_only=true' \
  test/cpp/microbenchmarks:bm_thread_pool
```

2023-05-04: `bm_thread_pool` benchmark results on my local machine (64

core ThreadRipper PRO 3995WX, 256GB memory), comparing this PR to

master:

2023-05-04: `bm_thread_pool` benchmark results in the Linux RBE

environment (unsure of machine configuration, likely small), comparing

this PR to master.

---------

Co-authored-by: drfloob <drfloob@users.noreply.github.com>

Reverts grpc/grpc#32924. This breaks the build again, unfortunately.

From `test/core/event_engine/cf:cf_engine_test`:

```
error: module .../grpc/test/core/event_engine/cf:cf_engine_test does not depend on a module exporting 'grpc/support/port_platform.h'
```

@sampajano I recommend looking into CI tests to catch iOS problems

before merging. We can enable EventEngine experiments in the CI

generally once this PR lands, but this broken test is not one of those

experiments. A normal build should have caught this.

cc @HannahShiSFB

Makes some awkward fixes to the compression filter, call, and connected
channel code to preserve the semantics that we now uphold in tests.

Once the fixes described at
https://github.com/grpc/grpc/blob/master/src/core/lib/channel/connected_channel.cc#L636
are in, this gets a lot less ad hoc, but that will likely happen only
after promises have landed on both the client and server side.

We specifically need special handling for server-side cancellation in
response to reads with respect to the inproc transport, which doesn't
track cancellation thoroughly enough itself.

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

1. `GrpcAuthorizationEngine` creates the logger from the given config in
   its ctor.
2. `Evaluate()` invokes audit logging when needed.

---------

Co-authored-by: rockspore <rockspore@users.noreply.github.com>

Audit logging APIs for both built-in loggers and third-party logger
implementations.

The C++ API uses `using` declarations that refer to the C-core APIs.

---------

Co-authored-by: rockspore <rockspore@users.noreply.github.com>

Third-party loggers will be added in subsequent PRs once the logger

factory APIs are available to validate the configs here.

This registry is used in `xds_http_rbac_filter.cc` to generate service

config json.

This paves the way for making pick_first the universal leaf policy (see

#32692), which will be needed for the dualstack design. That change will

require changing pick_first to see both the raw connectivity state and

the health-checking connectivity state of a subchannel, so that we can

enable health checking when pick_first is used underneath round_robin

without actually changing the pick_first connectivity logic (currently,

pick_first always disables health checking). To make it possible to do

that, this PR moves the health checking code out of the subchannel and

into a separate API using the same data-watcher mechanism that was added

for ORCA OOB calls.

The PR also creates separate BUILD targets for:

- chttp2 context list
- iomgr buffer_list
- iomgr internal errqueue

This allows the context list to be included as a standalone dependency
for EventEngine implementations.

@sampajano

The very non-trivial upgrade of third_party/protobuf to 22.x

This PR strives to be as small as possible and many changes that were

compatible with protobuf 21.x and didn't have to be merged atomically

with the upgrade were already merged.

Due to the complexity of the upgrade, this PR was created manually
rather than automatically by a tool. Subsequent upgrades of
third_party/protobuf with our OSS release script should work again once
this change is merged.

This is best reviewed commit-by-commit; I tried to group changes into
logical areas.

Notable changes:

- the upgrade of the third_party/protobuf submodule and of the bazel
  protobuf dependency itself
- upgrade of the UPB dependency to 22.x (in the past, we always upgraded
  upb to "main", but upb now has release branches as well). UPB needs to
  be upgraded atomically with protobuf since there's a de facto circular
  dependency (new protobuf depends on new upb, which depends on new
  protobuf for codegen).
- some protobuf and upb bazel rules are now aliases, so
  `extract_metadata_from_bazel_xml.py` and `gen_upb_api_from_bazel_xml.py`
  had to be modified to follow aliases and reach the actual aliased
  targets.
- some protobuf public headers were renamed, so `src/compiler` in
  particular needed to be updated to use the new headers.
- protobuf and upb now both depend on the utf8_range project, so since we
  bundle upb with grpc in some languages, we now have to bundle
  utf8_range as well (hence the build changes for Python, PHP, ObjC,
  cmake, etc.).
- protoc now depends on absl and utf8_range (previously protobuf had an
  absl dependency, but not for the codegen part), so Python's
  make_grpcio_tools.py required a partial rewrite to handle those
  dependencies in the grpcio_tools build.
- many updates and fixes required for the C++ distribtests (currently
  they all pass, but we'll probably need to follow up, make protobuf's
  and grpc's handling of dependencies more aligned, and revisit the
  distribtests)
- a bunch of other changes, mostly due to the overhaul of protobuf's and
  upb's internal build layout.

TODOs:

- [DONE] make sure IWYU and clang_tidy_code pass
- create a list of followups (e.g. work to re-enable the few tests I had
  to disable and to remove workarounds I had to use)
- [DONE in cl/523706129] figure out problem(s) with internal import

---------

Co-authored-by: Craig Tiller <ctiller@google.com>

Notes:

- `+trace` fixtures haven't run since 2016, so they're disabled for now
  (7ad2d0b463 (diff-780fce7267c34170c1d0ea15cc9f65a7f4b79fefe955d185c44e8b3251cf9e38R76))
- all current fixtures define `FEATURE_MASK_SUPPORTS_AUTHORITY_HEADER`,
  and hence `authority_not_supported` has not been run in years, so
  deleted
- bad_hostname similarly hasn't been triggered in a long while, so
  deleted
- load_reporting_hook has never been enabled, so deleted
  (f23fb4cf31/test/core/end2end/generate_tests.bzl (L145-L148))
- filter_latency & filter_status_code rely on global variables and so
  don't convert particularly cleanly, and their value seems marginal, so
  deleted

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

When using this internally, we noticed that it's impossible to use

custom_metadata.h without creating a dependency cycle between

:custom_metadata and :grpc_base.

A full build refactoring is too large right now, so merge that header

into :grpc_base for the time being.

Also, separate `SimpleSliceBasedMetadata` into its own file, so that it

can be reused in custom_metadata.h.

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

This PR also centralizes the client channel resolver selection. Resolver

selection is still done using the plugin system, but when the Ares and

native client channel resolvers go away, we can consider bootstrapping

this differently.

The aim here is to allow adding custom metadata types to the internal build.

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

(hopefully last try)

Add a new channel arg, GRPC_ARG_ABSOLUTE_MAX_METADATA_SIZE, as a hard
limit for metadata. Change GRPC_ARG_MAX_METADATA_SIZE to be a soft limit.

Behavior is as follows:

Hard limit

(1) If the hard limit is explicitly set, it will be used.

(2) If the hard limit is not explicitly set, the maximum of the default
and soft limit * 1.25 (if the soft limit is set) will be used.

Soft limit

(1) If the soft limit is explicitly set, it will be used.

(2) If the soft limit is not explicitly set, the maximum of the default
and hard limit * 0.8 (if the hard limit is set) will be used.

Requests between the soft and hard limits will be rejected randomly;
requests above the hard limit will always be rejected.
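The rules above can be sketched in Python as follows. This is an illustration, not the C++ implementation: the default values (8 KiB soft, 16 KiB hard) are assumptions made for the example, and so is the linear rejection probability between the two limits, since the description only says that such requests are rejected randomly. The names `resolve_limits` and `should_reject` are hypothetical.

```python
import random

DEFAULT_SOFT_LIMIT = 8 * 1024   # assumed default, for illustration only
DEFAULT_HARD_LIMIT = 16 * 1024  # assumed default, for illustration only


def resolve_limits(soft=None, hard=None):
    """Return (soft, hard) following the rules listed above."""
    resolved_soft = soft
    resolved_hard = hard
    if resolved_hard is None:
        resolved_hard = DEFAULT_HARD_LIMIT
        if soft is not None:
            # max(default, soft limit * 1.25), in integer arithmetic
            resolved_hard = max(DEFAULT_HARD_LIMIT, soft * 5 // 4)
    if resolved_soft is None:
        resolved_soft = DEFAULT_SOFT_LIMIT
        if hard is not None:
            # max(default, hard limit * 0.8), in integer arithmetic
            resolved_soft = max(DEFAULT_SOFT_LIMIT, hard * 4 // 5)
    return resolved_soft, resolved_hard


def should_reject(size, soft, hard, rand=random.random):
    """Decide whether metadata of the given size is rejected."""
    if size > hard:
        return True   # above the hard limit: always rejected
    if size <= soft:
        return False  # at or below the soft limit: always accepted
    # Assumption: the rejection probability rises linearly from 0 at the
    # soft limit to 1 at the hard limit.
    return rand() < (size - soft) / (hard - soft)


soft_limit, hard_limit = resolve_limits(soft=20000)  # (20000, 25000)
```

Passing `rand` explicitly makes the random branch deterministic in tests, for example `should_reject(22500, 20000, 25000, rand=lambda: 0.4)`.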

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>