Support Python 3.12.

### Testing

* Passed all Distribution Tests.

* Also tested locally by installing the 3.12 artifact.

<!--

If you know who should review your pull request, please assign it to

that

person, otherwise the pull request would get assigned randomly.

If your pull request is for a specific language, please add the

appropriate

lang label.

-->

The deleted code here was overriding the [intended](866fc41067/tools/run_tests/run_tests.py (L62)) default test env of `GRPC_VERBOSITY=DEBUG`.

I'm just deleting it because it looks like `GRPC_TRACE=api` is not having any effect anyway, since it relies on `GRPC_VERBOSITY=DEBUG`, which this code happens to be unsetting.

Added a separate distribtest for the gRPC C++ DLL build on Windows. The DLL build is community-supported, so it should run independently of the existing Windows distribtests. The actual DLL test will be added later.

Working towards testing against CSM Observability. Added the ability to register a Prometheus exporter with our OpenTelemetry plugin. This will make our metrics available at the standard Prometheus port `:9464`.

Since many tests now run reliably as bazelified tests on RBE, we can remove them from presubmit runs to speed up testing of PRs (for now, these jobs will still run on master; they can be removed from master as a follow-up).

- linux/grpc_distribtests_standalone is now fully covered by the bazel test suite (a3b4c797a7/tools/bazelify_tests/test/BUILD (L202)); setting them to `presubmit=False` will stop these tests from running on PRs.

- Stop running tests from grpc_bazel_distribtest on PRs; instead, rely on the bazel distribtests running as bazelified tests.

This is just an initial scope of tests. Much of this code was written by @ginayeh; I just did the final polish/integration step.

There are 3 main tests included:

1. The GAMMA baseline test, including the [actual GAMMA API](https://gateway-api.sigs.k8s.io/geps/gep-1426/) rather than vendor extensions.

2. Kubernetes-based stateful session affinity tests, where the mesh (including SSA configuration) is configured using CRDs.

3. GCP-based stateful session affinity tests, where the mesh is configured using the networkservices APIs directly.

Tests 1 and 2 will run in both prod and GKE staging, i.e. `container.googleapis.com` and `staging-container.sandbox.googleapis.com`. The latter will act as an early detection mechanism for regressions in the controller that translates Gateway resources into networkservices resources.

Test 3 will run against `staging-networkservices.sandbox.googleapis.com` to act as an early detection mechanism for regressions in the control plane SSA implementation.

The scope of the SSA tests is still fairly minimal. Session drain testing is in progress but not included in this PR, though several elements required for it are (grace period, pre-stop hook, and the ability to kill a single pod in a deployment).

---------

Co-authored-by: Jung-Yu (Gina) Yeh <ginayeh@google.com>

Co-authored-by: Sergii Tkachenko <sergiitk@google.com>

Distribtests is failing with the following error:

```
Collecting twine<=2.0
Downloading twine-2.0.0-py3-none-any.whl (34 kB)
Collecting pkginfo>=1.4.2 (from twine<=2.0)
Downloading pkginfo-1.9.6-py3-none-any.whl (30 kB)
Collecting readme-renderer>=21.0 (from twine<=2.0)
Obtaining dependency information for readme-renderer>=21.0 from 992e0e21b36c98bc06a55e514cb323/readme_renderer-42.0-py3-none-any.whl.metadata
Downloading readme_renderer-42.0-py3-none-any.whl.metadata (2.8 kB)
Collecting requests>=2.20 (from twine<=2.0)
Obtaining dependency information for requests>=2.20 from 0e2d847013cd6965bf26b47bc0bf44/requests-2.31.0-py3-none-any.whl.metadata
Downloading requests-2.31.0-py3-none-any.whl.metadata (4.6 kB)
Collecting requests-toolbelt!=0.9.0,>=0.8.0 (from twine<=2.0)
Downloading requests_toolbelt-1.0.0-py2.py3-none-any.whl (54 kB)
━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 54.5/54.5 kB 5.1 MB/s eta 0:00:00
Requirement already satisfied: setuptools>=0.7.0 in ./venv/lib/python3.9/site-packages (from twine<=2.0) (68.2.0)
Collecting tqdm>=4.14 (from twine<=2.0)
Obtaining dependency information for tqdm>=4.14 from f12a80907dc3ae54c5e962cc83037e/tqdm-4.66.1-py3-none-any.whl.metadata
Downloading tqdm-4.66.1-py3-none-any.whl.metadata (57 kB)
━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 57.6/57.6 kB 7.6 MB/s eta 0:00:00
Collecting nh3>=0.2.14 (from readme-renderer>=21.0->twine<=2.0)
Downloading nh3-0.2.14.tar.gz (14 kB)
Installing build dependencies: started
Installing build dependencies: finished with status 'done'
Getting requirements to build wheel: started
Getting requirements to build wheel: finished with status 'done'
Preparing metadata (pyproject.toml): started
Preparing metadata (pyproject.toml): finished with status 'error'
error: subprocess-exited-with-error
× Preparing metadata (pyproject.toml) did not run successfully.
│ exit code: 1
╰─> [6 lines of output]
Cargo, the Rust package manager, is not installed or is not on PATH.
This package requires Rust and Cargo to compile extensions. Install it through
the system's package manager or via https://rustup.rs/
Checking for Rust toolchain....
[end of output]
note: This error originates from a subprocess, and is likely not a problem with pip.
error: metadata-generation-failed
× Encountered error while generating package metadata.
╰─> See above for output.
```

### Why

* We're pulling readme_renderer 42.0 from twine; since 42.0 requires nh3 and nh3 requires Rust, the test is failing.

### Fix

* Pinned readme_renderer to `<40.0`, since any version greater than or equal to 40.0 requires Python 3.8.

### Testing

* Passed manual run: http://sponge/57d815a7-629f-455f-b710-5b80369206cd


* Remove `fetch_build_eggs`, since it is deprecated and those deps will be installed by `setuptools`.

* Fix indentation on `run_test` so we don't miss `native` test cases.


### Background

* `distutils` is deprecated, with removal planned for Python 3.12 ([PEP 632](https://peps.python.org/pep-0632/)), so we're replacing all `distutils` usage with `setuptools`.

* Please note that users still have access to `distutils` if setuptools is installed and `SETUPTOOLS_USE_DISTUTILS` is set to `local` (the default in setuptools; more details can be found [in this discussion](https://github.com/pypa/setuptools/issues/2806#issuecomment-1193336591)).

### How we decide the replacement

* We're following the setuptools [Porting from Distutils guide](https://setuptools.pypa.io/en/latest/deprecated/distutils-legacy.html#porting-from-distutils) when deciding the replacement.

#### Replacement not mentioned in the guide

* Replaced `distutils.util.get_platform()` with `sysconfig.get_platform()`.

* Based on the [answer here](https://stackoverflow.com/questions/71664875/what-is-the-replacement-for-distutils-util-get-platform), and after checking the documentation, `sysconfig.get_platform()` is good enough for our use cases.

* Replaced `DistutilsOptionError` with `OptionError`.

* `setuptools.errors` exports it as `OptionError` [in the code](https://github.com/pypa/setuptools/blob/v59.6.0/setuptools/errors.py).

* Upgraded `setuptools` in `test_packages.sh` and changed the version pin to `59.6.0` in `build_artifact_python.bat`.

* `distutils.errors.*` is not fully re-exported until `59.0.0` (see [this issue](https://github.com/pypa/setuptools/issues/2698) for more details).
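A minimal sketch of what these replacements look like at a call site, assuming setuptools 59.x or newer is installed. The `check_option` helper is hypothetical, and the fallback `OptionError` class exists only so the snippet still runs without setuptools:

```python
import sysconfig

# Before (distutils is removed in Python 3.12):
#   from distutils.util import get_platform
#   from distutils.errors import DistutilsOptionError
try:
    # setuptools.errors re-exports DistutilsOptionError as OptionError.
    from setuptools.errors import OptionError
except ImportError:
    # Assumption for this sketch only: stand-in when setuptools is absent.
    class OptionError(Exception):
        """Placeholder for setuptools.errors.OptionError."""


def platform_tag() -> str:
    """Build platform string, e.g. 'linux-x86_64' (was distutils.util.get_platform())."""
    return sysconfig.get_platform()


def check_option(name: str, value: object) -> None:
    """Hypothetical option check that used to raise DistutilsOptionError."""
    if value is None:
        raise OptionError(f"option --{name} must be set")
```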

### Changes not included in this PR

* We're patching some compiler-related functions provided by distutils in our code ([example](ee4efc31c1/src/python/grpcio/_spawn_patch.py (L30))), but since `setuptools` doesn't have a similar interface (see [this issue for more details](https://github.com/pypa/setuptools/issues/2806)), we don't have a clear path to replace them yet.


This change updates the TD bootstrap generator for prod tests, as part of the TD release process. The new image has already been merged to staging and tested locally in google3.

cc: @sergiitk PTAL.

Pipe-like type (has a send end, a receive end, and a closing mechanism) for cross-activity transfers.
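To make the send/receive/close contract concrete, here is a deliberately simplified, single-threaded Python analogue. It is not the gRPC C++ type: the names `make_pipe`, `PENDING`, and `CLOSED` are hypothetical, and where a real activity-based pipe would suspend the reader, this sketch just returns `PENDING`:

```python
from collections import deque
from typing import Any, Tuple

PENDING = object()  # sentinel: nothing to read yet, pipe still open
CLOSED = object()   # sentinel: sender closed and every value was drained


class _PipeState:
    """State shared by both ends of one pipe."""

    def __init__(self) -> None:
        self.values: deque = deque()
        self.closed = False


class PipeSender:
    def __init__(self, state: _PipeState) -> None:
        self._state = state

    def send(self, value: Any) -> bool:
        """Queue a value; returns False if the pipe was already closed."""
        if self._state.closed:
            return False
        self._state.values.append(value)
        return True

    def close(self) -> None:
        """No further sends; the receiver may still drain queued values."""
        self._state.closed = True


class PipeReceiver:
    def __init__(self, state: _PipeState) -> None:
        self._state = state

    def poll(self) -> Any:
        """Return the next value, PENDING, or CLOSED."""
        if self._state.values:
            return self._state.values.popleft()
        return CLOSED if self._state.closed else PENDING


def make_pipe() -> Tuple[PipeSender, PipeReceiver]:
    state = _PipeState()
    return PipeSender(state), PipeReceiver(state)
```

Keeping the two ends as separate objects means each activity only holds the end it needs.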

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

Building out a new framing layer for chttp2.

The central idea here is to make the framing layer solely responsible for the serialization and deserialization of frames. The framing layer can reject frames that have invalid syntax, but enacting what a frame means is left to a higher layer.

This class will become foundational for the promise conversion of chttp2: by eliminating action from the parsing of frames, we can reuse this sensitive code.

Right now the new layer is inactive: there's a test that exercises it relatively well, and not much more. In the next PRs I'll add an experiment to enable using either this layer or the existing code in the writing and reading paths.
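The split between syntax and semantics can be illustrated with the HTTP/2 frame header from RFC 9113, section 4.1 (24-bit length, 8-bit type, 8-bit flags, 1 reserved bit, 31-bit stream identifier). This Python sketch is illustrative only and is not the chttp2 C++ code; `FrameHeader` and `FrameSyntaxError` are hypothetical names. It rejects malformed input but never acts on a frame (for example, it does not answer a PING):

```python
import struct
from dataclasses import dataclass

FRAME_HEADER_LEN = 9
DEFAULT_MAX_FRAME_SIZE = 16_384  # initial SETTINGS_MAX_FRAME_SIZE (RFC 9113)
PING = 0x6
# Length (24 bits, split as 8 + 16), type, flags, reserved bit + stream id.
_HEADER = struct.Struct(">BHBBI")


class FrameSyntaxError(ValueError):
    """Raised for frames that are malformed, independent of connection state."""


@dataclass(frozen=True)
class FrameHeader:
    length: int
    type: int
    flags: int
    stream_id: int

    def serialize(self) -> bytes:
        if not 0 <= self.length < 1 << 24:
            raise FrameSyntaxError("length does not fit in 24 bits")
        if not 0 <= self.stream_id < 1 << 31:
            raise FrameSyntaxError("stream id does not fit in 31 bits")
        return _HEADER.pack(self.length >> 16, self.length & 0xFFFF,
                            self.type, self.flags, self.stream_id)

    @classmethod
    def parse(cls, data: bytes,
              max_frame_size: int = DEFAULT_MAX_FRAME_SIZE) -> "FrameHeader":
        if len(data) < FRAME_HEADER_LEN:
            raise FrameSyntaxError("truncated frame header")
        hi, lo, ftype, flags, raw_sid = _HEADER.unpack_from(data)
        length = (hi << 16) | lo
        if length > max_frame_size:
            raise FrameSyntaxError("payload exceeds max frame size")
        if ftype == PING and length != 8:
            raise FrameSyntaxError("PING payload must be exactly 8 bytes")
        # The reserved high bit must be ignored when receiving.
        return cls(length, ftype, flags, raw_sid & 0x7FFF_FFFF)
```

A higher layer would then decide what a syntactically valid frame means, for example whether its stream ID refers to an open stream.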

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

This is the initial implementation of the chaotic-good client transport write path. A follow-up PR will implement the read path.


Previously `black` wouldn't install, as it required a newer `packaging` package.

This fixes `pip install -r requirements-dev.txt`. In addition, `black` in the dev dependencies file is changed to `black[d]`, which bundles the `blackd` binary (["black as a server"](https://black.readthedocs.io/en/stable/usage_and_configuration/black_as_a_server.html)).

Fixes an issue where the active context was automatically picked up as the context for `secondary_k8s_api_manager`.

This was introducing an error in the GAMMA Baseline PoC:

```
sys:1: ResourceWarning: unclosed <ssl.SSLSocket fd=4, family=AddressFamily.AF_INET, type=SocketKind.SOCK_STREAM, proto=0, laddr=('100.71.2.143', 56723), raddr=('35.199.174.232', 443)>
```

Here's how the secondary context incorrectly falls back to the default context when `--secondary_kube_context` is not set:

```
k8s.py:142] Using kubernetes context "gke_grpc-testing_us-central1-a_psm-interop-security", active host: https://35.202.85.90
k8s.py:142] Using kubernetes context "None", active host: https://35.202.85.90
```
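A minimal sketch of the guard, with hypothetical names (`make_secondary_api_manager`, `factory`) rather than the driver's real API: build the secondary manager only when a context is explicitly provided, instead of passing `None` down to a loader that treats it as "use the active context":

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def make_secondary_api_manager(
    secondary_context: Optional[str],
    factory: Callable[[str], T],
) -> Optional[T]:
    """Build the secondary k8s API manager only for an explicit context.

    Forwarding None would let the kube config loader fall back to the
    active context, which is the bug described above.
    """
    if not secondary_context:
        return None
    return factory(secondary_context)
```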

- Add a GitHub Action to conditionally run PSM Interop unit tests:
  - Only run when changes are detected in `tools/run_tests/xds_k8s_test_driver` or any of the proto files used by the driver
  - Only run against PRs and pushes to `master` and `v1.*.*` branches
  - Run using `python3.9` and `python3.10`
  - Ready to be added to the list of required GitHub checks

- Add a `tools/run_tests/xds_k8s_test_driver/tests/unit/__main__.py` test loader that recursively discovers all unit tests in `tools/run_tests/xds_k8s_test_driver/tests/unit`

- Add basic coverage for `XdsTestClient` and `XdsTestServer` to verify the test loader picks up all folders

Related:

- First unit tests without automated CI added in #34097

The tests are skipped incorrectly because `config.server_lang` is compared with the string value "java" instead of `skips.Lang.JAVA`.

This has been broken since #26998.

```
xds_url_map_testcase.py:372] ----- Testing TestTimeoutInRouteRule -----
xds_url_map_testcase.py:373] Logs timezone: UTC
skips.py:121] Skipping TestConfig(client_lang='java', server_lang='java', version='v1.57.x')
[ SKIPPED ] setUpClass (timeout_test.TestTimeoutInRouteRule)
xds_url_map_testcase.py:372] ----- Testing TestTimeoutInApplication -----
xds_url_map_testcase.py:373] Logs timezone: UTC
skips.py:121] Skipping TestConfig(client_lang='java', server_lang='java', version='v1.57.x')
[ SKIPPED ] setUpClass (timeout_test.TestTimeoutInApplication)
```
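The failure mode is easy to reproduce in isolation: a plain `enum.Enum` member never compares equal to a string, even when its value is that string, so a string comparison silently evaluates to `False`. `Lang` below is a hypothetical stand-in for `skips.Lang`, not its real definition:

```python
import enum


class Lang(enum.Enum):
    """Hypothetical stand-in for skips.Lang."""
    CPP = "cpp"
    JAVA = "java"
    PYTHON = "python"


def is_java_buggy(server_lang: Lang) -> bool:
    # Bug: Enum equality is identity-based, so this is always False.
    return server_lang == "java"


def is_java_fixed(server_lang: Lang) -> bool:
    # Fix: compare the enum member with another enum member.
    return server_lang == Lang.JAVA
```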

This is to make sure upgrading the `packaging` module won't break our logic for version-based test skipping.

This also fixes a small issue with the `dev-` prefix: it should only be allowed on the left side of the comparison.

Context: the `packaging` module needs to be upgraded to be compatible with `blackd`.
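The intended semantics can be sketched with plain tuple comparison. This is a hypothetical stand-in, not the driver's code (which relies on the `packaging` module): the `version_gte` name, the accepted `vX.Y.x` format, and the rule that `master`/`dev` on the left always compare as newest are assumptions made for illustration. What it shows is that a `dev-` prefix is stripped only from the left operand and rejected on the right:

```python
import re
from typing import Tuple

# Assumed format: optional "v", major.minor, optional ".patch" or ".x".
_VERSION_RE = re.compile(r"^v?(\d+)\.(\d+)(?:\.(?:\d+|x))?$")


def _parse(version: str) -> Tuple[int, int]:
    m = _VERSION_RE.match(version)
    if not m:
        raise ValueError(f"unparseable version: {version!r}")
    return int(m.group(1)), int(m.group(2))


def version_gte(left: str, right: str) -> bool:
    """Hypothetical: is the tested `left` version >= the `right` threshold?"""
    if left in ("master", "dev", "dev-master"):
        return True  # assumption: development builds are always newest
    if right.startswith("dev"):
        raise ValueError("'dev' versions are only allowed on the left side")
    if right == "master":
        return False
    if left.startswith("dev-"):
        left = left[len("dev-"):]  # "dev-v1.55.x" compares like "v1.55.x"
    return _parse(left) >= _parse(right)
```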

Update from gtcooke94:

This PR adds support for building gRPC and its tests with OpenSSL 3. There were some hiccups with the tests, since the OpenSSL tests haven't been built or exercised in a few months, so they needed some work.

Right now I expect all test files to pass except the following:

- h2_ssl_cert_test

- ssl_transport_security_utils_test

I confirmed locally that these tests fail with OpenSSL 1.1.1 as well, so we are at least not introducing regressions. I've therefore added compiler directives around these tests so they only build when using BoringSSL.

---------

Co-authored-by: Gregory Cooke <gregorycooke@google.com>

Co-authored-by: Esun Kim <veblush@google.com>

- Add a debug-only `WorkSerializer::IsRunningInWorkSerializer()` method and use it in client_channel to verify that subchannels are destroyed in the `WorkSerializer`
  - Note: this mechanism uses `std::thread::id`, so I had to exclude work_serializer.cc from the core_banned_constructs check

- Fix `WorkSerializer::Run()` to unref the callback before releasing ownership of the `WorkSerializer`, so that any refs captured by the `std::function<>` are released before ownership is released

- Fix the WRR timer callback to hop into the `WorkSerializer` to release its ref to the picker, since that transitively releases refs to subchannels

- Fix subchannel connectivity state notifications to unref the watcher inside the `WorkSerializer`, since the watcher often transitively holds refs to subchannels
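The ordering rules above can be illustrated with a toy Python analogue. This is not gRPC's C++ `WorkSerializer`: `threading.get_ident()` stands in for `std::thread::id`, and the class and method names are hypothetical. It runs queued callbacks one at a time on whichever thread acquired ownership, and drops each callback (and anything it captured) before ownership can be released:

```python
import threading
from collections import deque
from typing import Callable, Optional


class WorkSerializer:
    """Toy analogue: callbacks run one at a time, in FIFO order."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._queue: deque = deque()
        self._owner: Optional[int] = None  # ident of the draining thread

    def is_running_in_work_serializer(self) -> bool:
        """Debug-style check, analogous to IsRunningInWorkSerializer()."""
        return self._owner == threading.get_ident()

    def run(self, callback: Callable[[], None]) -> None:
        with self._lock:
            self._queue.append(callback)
            if self._owner is not None:
                return  # the current owner will run it later
            self._owner = threading.get_ident()
        del callback  # this frame no longer needs its own reference
        while True:
            with self._lock:
                if not self._queue:
                    self._owner = None  # release ownership last
                    return
                cb = self._queue.popleft()
            cb()
            # Drop the callback, and whatever it captured, before the next
            # loop iteration can release ownership.
            del cb
```

Callbacks scheduled from inside a running callback are queued rather than run inline, so they still execute with `is_running_in_work_serializer()` returning `True`.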

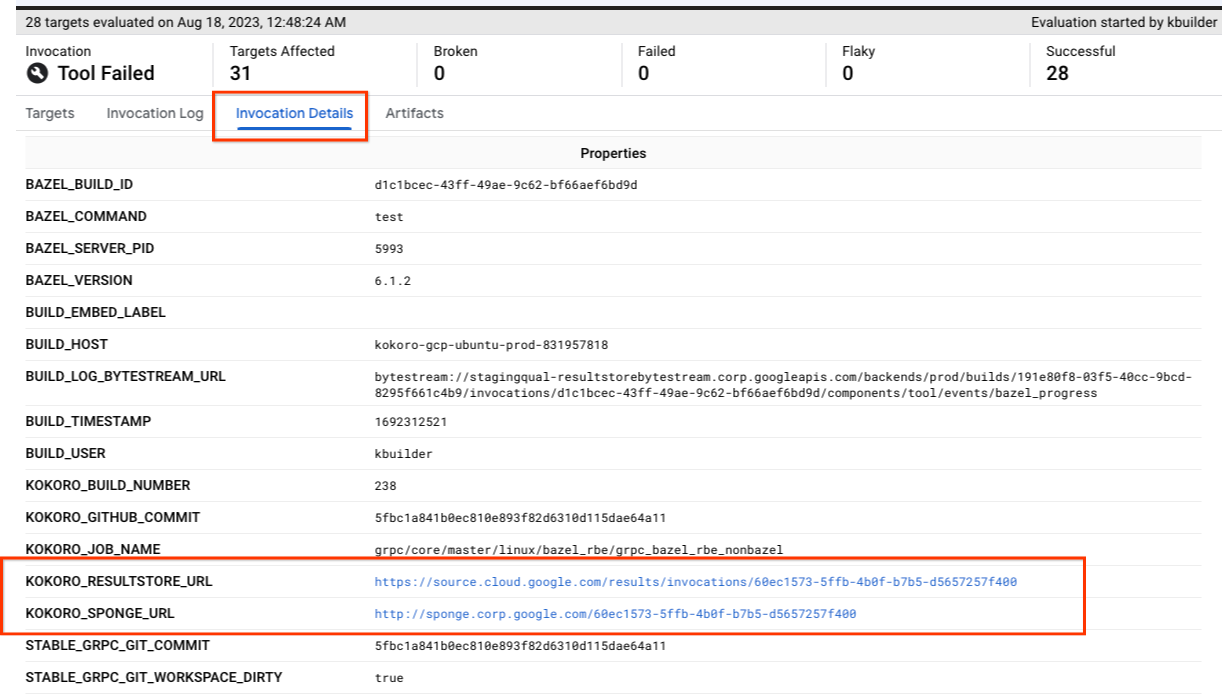

Currently the bazel invocations provide a link back to the original kokoro jobs for the resultstore and sponge UIs.

This adds another backlink to the Fusion UI, which has the advantages of:

- being able to navigate to the kokoro job overview

- a button to trigger a new build (in case the job needs to be re-run).


Reintroduce https://github.com/grpc/grpc/pull/33959.

I added a fix for the Python arm64 build (which is the reason the change was reverted earlier).


Disables the warning produced by kubernetes/client/rest.py calling the deprecated `urllib3.response.HTTPResponse.getheaders` and `urllib3.response.HTTPResponse.getheader` methods:

```
venv-test/lib/python3.9/site-packages/kubernetes/client/rest.py:44: DeprecationWarning: HTTPResponse.getheaders() is deprecated and will be removed in urllib3 v2.1.0. Instead access HTTPResponse.headers directly.
return self.urllib3_response.getheaders()
```

This issue was introduced by openapi-generator and fixed in `v6.4.0`. To fix the issue properly, the kubernetes/python maintainers need to regenerate the library using a newer openapi-generator. The most recent release, `v27.2.0`, still uses openapi-generator [`v4.3.0`](https://github.com/kubernetes-client/python/blob/v27.2.0/kubernetes/.openapi-generator/VERSION). Since they release twice a year, and their openapi-generator is two major versions behind, the fix may take a while.

Created an issue in their repo: https://github.com/kubernetes-client/python/issues/2101.
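The text does not show where the warning is disabled, so the following is only one possible approach using the standard-library `warnings` module. The function name is hypothetical, and the message regex assumes urllib3's wording for both `getheaders()` and `getheader()`. Scoping the filter with `module=` keeps the same deprecation visible when it is triggered by any other caller:

```python
import warnings


def silence_kubernetes_getheaders_warning() -> None:
    """Ignore urllib3's HTTPResponse.getheader(s)() DeprecationWarning,
    but only when the warning is attributed to kubernetes.client.rest."""
    warnings.filterwarnings(
        "ignore",
        message=r"HTTPResponse\.getheaders?\(\) is deprecated",
        category=DeprecationWarning,
        module=r"kubernetes\.client\.rest",
    )
```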

Not adding CMake support yet


Addresses some issues of the initial triage hint PR: https://github.com/grpc/grpc/pull/33898.

1. Print the unhealthy backend name before the health info; previously it was unclear which backend's health status was being dumped.

2. Add missing `retry_err.add_note(note)` calls.

3. Turn off the highlighter in triager hints, since it isn't rendered properly in the stack trace saved to junit.xml.
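Item 2 relies on PEP 678 exception notes. A minimal sketch with hypothetical names (`add_note_compat`, `RetryError`, `raise_if_unhealthy`): `BaseException.add_note()` exists only on Python 3.11+, so the helper falls back to appending to `__notes__` directly, which older interpreters store but do not print. Each note leads with the backend name, in the spirit of item 1:

```python
from typing import Dict


class RetryError(Exception):
    """Hypothetical stand-in for the driver's retry exception."""


def add_note_compat(err: BaseException, note: str) -> None:
    if hasattr(err, "add_note"):
        err.add_note(note)  # Python 3.11+ (PEP 678)
    else:
        # Older Pythons: keep the same attribute; tracebacks won't show it.
        if not hasattr(err, "__notes__"):
            err.__notes__ = []
        err.__notes__.append(note)


def raise_if_unhealthy(health: Dict[str, str]) -> None:
    """health maps backend name -> health status string."""
    unhealthy = {name: status for name, status in health.items()
                 if status != "HEALTHY"}
    if not unhealthy:
        return
    err = RetryError(f"{len(unhealthy)} backend(s) not healthy")
    for name, status in unhealthy.items():
        # Name first, so it is clear whose health info follows.
        add_note_compat(err, f"Backend {name}: {status}")
    raise err
```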
