This fixes the following error:

```
test/core/security/grpc_authorization_engine_test.cc:88:32: error: unknown type name 'Json'; did you mean 'experimental::Json'?
  ParseAuditLoggerConfig(const Json&) override {
                               ^~~~
                               experimental::Json
```

This check compares the host portion of the target name to the authority header, but in common use cases (e.g. GCS) they may not coincide. Additionally, this check does not happen in the Go and Java ALTS stacks.

This makes the JSON API visible as part of the C-core API, but in the `experimental` namespace. It will be used as part of various experimental APIs that we will be introducing in the near future, such as the audit logging API.

The WireWriter implementation schedules actions to be run by `ExecCtx`. We should flush pending actions before destructing `end2end_testing::g_transaction_processor`, which needs to be alive to handle the scheduled actions. Otherwise, we get a heap-use-after-free error because the testing fixture (`end2end_testing::g_transaction_processor`) is destructed before all the scheduled actions are run.

This lowers the end2end binder transport test failure rate from 0.23% to 0.15%, according to an internal tool that runs the test 15,000 times under various configurations.

This file does not contain a shebang, and whenever I try to run it, it wedges my console into some weird state.

There's a .sh file with the same name that should be run instead. Remove the executable bit of the thing we shouldn't run directly so we, like, don't.
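For reference, here is a minimal Python sketch of clearing the execute bits on a file. The function name is hypothetical and the file path is up to the caller. In a git checkout, `git update-index --chmod=-x <file>` records the same mode change in the index.

```python
import os
import stat


def clear_executable_bits(path):
    """Remove user, group, and other execute permission from `path`."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    exec_bits = stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH
    os.chmod(path, mode & ~exec_bits)
```

Read and write permissions are left untouched, so a file that was `0o755` becomes `0o644`.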

<!--

If you know who should review your pull request, please assign it to

that

person, otherwise the pull request would get assigned randomly.

If your pull request is for a specific language, please add the

appropriate

lang label.

-->

Previously, the error message didn't provide much context. For example:

```py
Traceback (most recent call last):
  File "/tmpfs/tmp/tmp.BqlenMyXyk/grpc/tools/run_tests/xds_k8s_test_driver/tests/affinity_test.py", line 127, in test_affinity
    self.assertLen(
AssertionError: [] has length of 0, expected 1.
```

ref b/279990584.
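The general fix is to pass a `msg` describing the observed state, so that a failure explains itself. A minimal sketch with the standard-library `unittest` follows; `describe_backends` and the backend names are hypothetical. absltest's `assertLen` also accepts a `msg` argument.

```python
import unittest


def describe_backends(backends):
    """Hypothetical helper: render the state we want in a failure message."""
    return f"backends seen: {sorted(backends)!r}"


class AffinityExample(unittest.TestCase):
    def assert_single_backend(self, backends):
        # Without `msg`, a failure only says "0 != 1".
        self.assertEqual(len(backends), 1, msg=describe_backends(backends))

    def test_affinity(self):
        self.assert_single_backend({"backend-0"})
```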

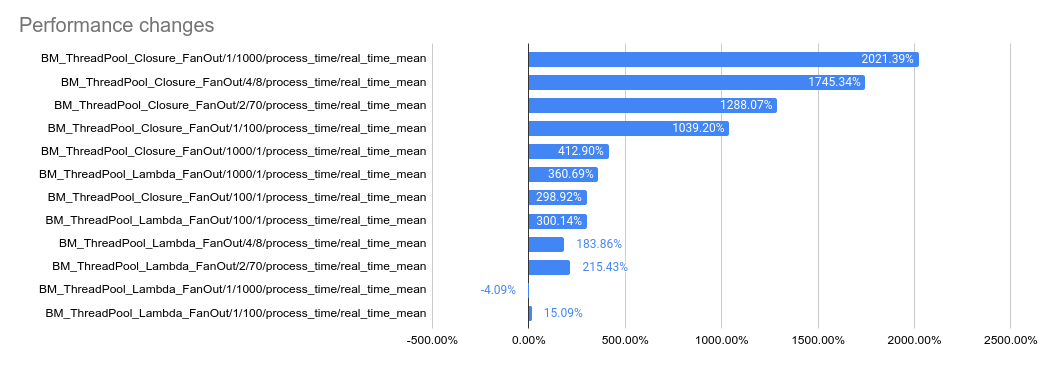

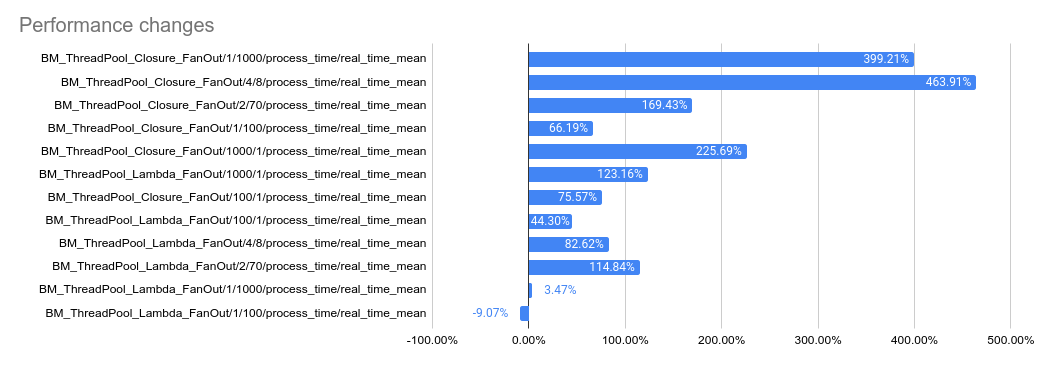

This PR implements a work-stealing thread pool for use inside EventEngine implementations. Because of historical risks here, I've guarded the new implementation behind an experiment flag: `GRPC_EXPERIMENTS=work_stealing`. The current default behavior remains the original thread pool implementation.
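Work stealing can be illustrated with a minimal, single-threaded Python simulation. This is not the gRPC C++ implementation behind the experiment flag. The class and method names are hypothetical, and a real pool needs threads plus synchronized (often lock-free) deques. The simulation shows only the core idea: each worker pops its own newest task, and an idle worker steals the oldest task from another worker's queue.

```python
from collections import deque


class WorkStealingPool:
    """Deterministic simulation of a work-stealing scheduler."""

    def __init__(self, num_workers):
        self.queues = [deque() for _ in range(num_workers)]
        self.log = []  # (worker_id, task, stolen) in execution order

    def submit(self, worker_id, task):
        self.queues[worker_id].append(task)

    def _next_task(self, worker_id):
        own = self.queues[worker_id]
        if own:
            # Owner takes from the back (LIFO): recently pushed, cache-warm work.
            return own.pop(), False
        n = len(self.queues)
        for offset in range(1, n):
            victim = self.queues[(worker_id + offset) % n]
            if victim:
                # Thief takes from the front (FIFO): the victim's oldest task.
                return victim.popleft(), True
        return None, False

    def run_round(self):
        """Give each worker one chance to run a task; return tasks run."""
        ran = 0
        for worker_id in range(len(self.queues)):
            task, stolen = self._next_task(worker_id)
            if task is not None:
                self.log.append((worker_id, task, stolen))
                ran += 1
        return ran

    def run_all(self):
        while self.run_round():
            pass
```

If all tasks are submitted to worker 0, worker 1 still does useful work by stealing. With tasks `a`, `b`, `c` on worker 0, the log is `(0, "c", False)`, `(1, "a", True)`, `(0, "b", False)`.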

Benchmarks look very promising:

```
bazel test \
  --test_timeout=300 \
  --config=opt -c opt \
  --test_output=streamed \
  --test_arg='--benchmark_format=csv' \
  --test_arg='--benchmark_min_time=0.15' \
  --test_arg='--benchmark_filter=_FanOut' \
  --test_arg='--benchmark_repetitions=15' \
  --test_arg='--benchmark_report_aggregates_only=true' \
  test/cpp/microbenchmarks:bm_thread_pool
```

2023-05-04: `bm_thread_pool` benchmark results on my local machine (64-core Threadripper PRO 3995WX, 256GB memory), comparing this PR to master:

2023-05-04: `bm_thread_pool` benchmark results in the Linux RBE environment (unsure of machine configuration, likely small), comparing this PR to master.

---------

Co-authored-by: drfloob <drfloob@users.noreply.github.com>

One TXT lookup query can return multiple TXT records (see the following example). `EventEngine::DNSResolver` should return all of them to let the caller (e.g. `event_engine_client_channel_resolver`) decide which one to use.

```
$ dig TXT wikipedia.org
; <<>> DiG 9.18.12-1+build1-Debian <<>> TXT wikipedia.org
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 49626
;; flags: qr rd ra; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 1
;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 512
;; QUESTION SECTION:
;wikipedia.org. IN TXT
;; ANSWER SECTION:
wikipedia.org. 600 IN TXT "google-site-verification=AMHkgs-4ViEvIJf5znZle-BSE2EPNFqM1nDJGRyn2qk"
wikipedia.org. 600 IN TXT "yandex-verification: 35c08d23099dc863"
wikipedia.org. 600 IN TXT "v=spf1 include:wikimedia.org ~all"
```

Note that this change also makes us deviate from iomgr's DNSResolver API, which uses `std::string` as the result type.
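The caller-side benefit can be sketched in Python. It assumes the resolver hands back every TXT record as a list of strings. It also assumes the caller looks for a record carrying the `grpc_config=` prefix, the convention gRPC uses for service config in DNS. The function name is hypothetical.

```python
from typing import List, Optional

# Assumed prefix for service-config TXT records.
GRPC_CONFIG_PREFIX = "grpc_config="


def select_service_config(txt_records: List[str]) -> Optional[str]:
    """Return the payload of the first service-config record, if any."""
    for record in txt_records:
        if record.startswith(GRPC_CONFIG_PREFIX):
            return record[len(GRPC_CONFIG_PREFIX):]
    # None of the records (e.g. site-verification or SPF) is a service config.
    return None
```

If the resolver returned only a single string, the caller could not skip unrelated records like the three in the `dig` output above.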


---------

Co-authored-by: Sergii Tkachenko <hi@sergii.org>

Reverts grpc/grpc#32924. This breaks the build again, unfortunately.

From `test/core/event_engine/cf:cf_engine_test`:

```
error: module .../grpc/test/core/event_engine/cf:cf_engine_test does not depend on a module exporting 'grpc/support/port_platform.h'
```

@sampajano I recommend looking into CI tests to catch iOS problems before merging. We can enable EventEngine experiments in the CI generally once this PR lands, but this broken test is not one of those experiments. A normal build should have caught this.

cc @HannahShiSFB


The job's run time was creeping up to the 2h timeout. Let's bump it to 3h.

Note that this is the `master` branch job, so it also includes the build time every time we commit to grpc/grpc.

ref b/280784903

This PR tries to correct a confusing message. As far as I understand, if the code reaches that point, it is not correct to say that setting TCP_USER_TIMEOUT failed. The call actually succeeded (setsockopt returned 0), but the resulting value differs from the requested one.

I couldn't find the `gpr_log` description, so I hope the PR is correct.
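The distinction can be modeled in Python. This is not the gRPC C++ code, and both helper names are hypothetical. `socket.TCP_USER_TIMEOUT` is Linux-only and its value is in milliseconds. The sketch separates a real `setsockopt` failure from the case where the call succeeded but the value read back differs.

```python
import socket
from typing import Optional


def user_timeout_log_message(requested_ms: int, read_back_ms: int) -> Optional[str]:
    """Message to log after setsockopt() succeeded; None if nothing to report."""
    if read_back_ms == requested_ms:
        return None
    # setsockopt() returned 0 here, so "failed to set" would be misleading.
    return (f"TCP_USER_TIMEOUT was set, but the value read back "
            f"({read_back_ms} ms) differs from the requested {requested_ms} ms")


def apply_user_timeout(sock: socket.socket, timeout_ms: int) -> Optional[str]:
    """Set TCP_USER_TIMEOUT and return a message describing anything unusual."""
    if not hasattr(socket, "TCP_USER_TIMEOUT"):
        return "TCP_USER_TIMEOUT is not available on this platform"
    try:
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_USER_TIMEOUT, timeout_ms)
    except OSError as err:
        # Only this branch is a genuine failure to set the option.
        return f"setsockopt(TCP_USER_TIMEOUT) failed: {err}"
    actual = sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_USER_TIMEOUT)
    return user_timeout_log_message(timeout_ms, actual)
```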

---------

Signed-off-by: Joan Fontanals Martinez <joan.martinez@jina.ai>

### Description

Fix https://github.com/grpc/grpc/issues/24470.

Adds an example that demonstrates the following use cases:

* Generating an RPC ID on the client side and propagating it to the server.
* Context propagation from client to server.
* Context propagation between different server interceptors and the server handler.

### Usage

1. Start server: `python3 -m async_greeter_server_with_interceptor`
2. Start client: `python3 -m async_greeter_client`

### Expected Logs

* On client side:

```
Sending request with rpc id: 73bb98beff10c2dd7b9f2252a1e2039e
Greeter client received: Hello, you!
```

* On server side:

```
INFO:root:Starting server on [::]:50051
INFO:root:Interceptor1 called with rpc_id: default
INFO:root:Interceptor2 called with rpc_id: Interceptor1-default
INFO:root:Handle rpc with id Interceptor2-Interceptor1-73bb98beff10c2dd7b9f2252a1e2039e in server handler.
```
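The propagation mechanism can be sketched with the standard-library `contextvars` module, independent of gRPC. The interceptor and handler functions below are plain hypothetical callables, not the `grpc.aio` interceptor API. The sketch is synchronous, whereas the example in this PR is async; asyncio tasks also run in their own copy of the context, so the same idea applies there.

```python
import contextvars

# Hypothetical per-RPC context variable.
rpc_id_var = contextvars.ContextVar("rpc_id", default="default")


def make_interceptor(name, log):
    def wrap(next_handler):
        def intercepted(request):
            log.append(f"{name} called with rpc_id: {rpc_id_var.get()}")
            # Tag the ID so later stages can see which interceptors ran.
            token = rpc_id_var.set(f"{name}-{rpc_id_var.get()}")
            try:
                return next_handler(request)
            finally:
                rpc_id_var.reset(token)  # restore the value for outer stages
        return intercepted
    return wrap


def make_handler(log):
    def handler(request):
        log.append(f"Handle rpc with id {rpc_id_var.get()} in server handler.")
        return f"Hello, {request}!"
    return handler


def serve_one(request, client_rpc_id, log):
    chain = make_interceptor("Interceptor1", log)(
        make_interceptor("Interceptor2", log)(make_handler(log)))

    def run():
        rpc_id_var.set(client_rpc_id)  # e.g. taken from request metadata
        return chain(request)

    # Each call runs in a fresh Context, so nothing leaks between RPCs.
    return contextvars.Context().run(run)
```

Calling `serve_one("you", "abc", log)` returns `"Hello, you!"`. The handler sees the ID `Interceptor2-Interceptor1-abc`, while `rpc_id_var.get()` outside the call is still `"default"`.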


Reverts grpc/grpc#33002. Breaks internal builds:

`.../privacy_context:filters does not depend on a module exporting '.../src/core/lib/channel/context.h'`

Change call attributes to be stored in a `ChunkedVector` instead of `std::map<>`, so that the storage can be allocated on the arena. This means that we're now doing a linear search instead of a map lookup, but the total number of attributes is expected to be low enough that this should be okay.
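The trade-off can be sketched in Python with a toy `ChunkedVector` and a hypothetical `CallAttributes` wrapper. The C++ arena allocation has no Python equivalent. The sketch models two points: growth appends a new fixed-size chunk instead of moving existing elements, and lookup is a linear scan.

```python
class ChunkedVector:
    """Toy append-only container made of fixed-size chunks."""

    def __init__(self, chunk_size=4):
        self._chunk_size = chunk_size
        self._chunks = []

    def append(self, item):
        # Growing adds a new chunk; elements already stored are never copied.
        if not self._chunks or len(self._chunks[-1]) == self._chunk_size:
            self._chunks.append([])
        self._chunks[-1].append(item)

    def __iter__(self):
        for chunk in self._chunks:
            yield from chunk

    def num_chunks(self):
        return len(self._chunks)


class CallAttributes:
    """Hypothetical key/value store backed by a ChunkedVector."""

    def __init__(self):
        self._entries = ChunkedVector()  # each entry is a [key, value] pair

    def set(self, key, value):
        for entry in self._entries:
            if entry[0] == key:
                entry[1] = value  # overwrite in place
                return
        self._entries.append([key, value])

    def get(self, key):
        # Linear scan: O(n), acceptable while the number of attributes is small.
        for entry_key, value in self._entries:
            if entry_key == key:
                return value
        return None
```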

Also, we now hide the actual data structure inside of the `ServiceConfigCallData` object, which required some changes to the `ConfigSelector` API. Previously, the `ConfigSelector` would return a `CallConfig` struct, and the client channel would then use the data in that struct to populate the `ServiceConfigCallData`. This PR changes that such that the client channel creates the `ServiceConfigCallData` before invoking the `ConfigSelector`, and it passes the `ServiceConfigCallData` into the `ConfigSelector` so that the `ConfigSelector` can populate it directly.
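The new call flow can be sketched in Python. The method names (`populate_call_data`, `set_call_attribute`, `get_call_attribute`), the `StaticConfigSelector` subclass, and `start_call` are hypothetical and do not mirror the real C++ signatures. What the sketch shows is the ordering: the channel creates the call data first and hands it to the selector, which fills it in directly.

```python
class ServiceConfigCallData:
    """Hypothetical per-call data object; its storage stays private."""

    def __init__(self):
        self._attributes = {}  # a dict for brevity; the PR uses a ChunkedVector
        self.method_config = None

    def set_call_attribute(self, key, value):
        self._attributes[key] = value

    def get_call_attribute(self, key):
        return self._attributes.get(key)


class ConfigSelector:
    """Hypothetical interface: fill in call data instead of returning a struct."""

    def populate_call_data(self, method, call_data):
        raise NotImplementedError


class StaticConfigSelector(ConfigSelector):
    def __init__(self, method_configs, cluster):
        self._method_configs = method_configs
        self._cluster = cluster

    def populate_call_data(self, method, call_data):
        call_data.method_config = self._method_configs.get(method)
        call_data.set_call_attribute("cluster", self._cluster)


def start_call(selector, method):
    # The channel creates the call data before invoking the selector...
    call_data = ServiceConfigCallData()
    # ...and the selector populates it directly.
    selector.populate_call_data(method, call_data)
    return call_data
```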

The protection is added in `xds_http_rbac_filter.cc`, where we read the new field. With this protection disabling the feature, nothing from files like `xds_audit_logger_registry.cc` should be invoked.

Makes some awkward fixes to the compression filter, call, and connected channel to hold the semantics we currently uphold in tests.

Once the fixes described at https://github.com/grpc/grpc/blob/master/src/core/lib/channel/connected_channel.cc#L636 are in, this gets a lot less ad hoc, but that's likely going to happen after promises land on both the client and server side.

We specifically need special handling for server-side cancellation in response to reads with respect to the inproc transport, which doesn't track cancellation thoroughly enough itself.


---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>


1. `GrpcAuthorizationEngine` creates the logger from the given config in its ctor.
2. `Evaluate()` invokes audit logging when needed.
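The two steps above can be modeled in Python. `AuthorizationEngine`, `RecordingAuditLogger`, the policy-as-predicate representation, and the log entry shape are all hypothetical. The `AuditCondition` names mirror the xDS RBAC audit conditions, including the `ON_DENY` value used in authz policies. The logger is built once in the constructor, and `evaluate` logs only when the condition matches the decision.

```python
import enum


class AuditCondition(enum.Enum):
    # Names mirror the xDS RBAC audit conditions.
    NONE = 0
    ON_DENY = 1
    ON_ALLOW = 2
    ON_DENY_AND_ALLOW = 3


class Action(enum.Enum):
    ALLOW = "ALLOW"
    DENY = "DENY"


class RecordingAuditLogger:
    """Hypothetical logger that just remembers what it was asked to log."""

    def __init__(self):
        self.entries = []

    def log(self, entry):
        self.entries.append(entry)


def should_audit(condition, authorized):
    """Decide whether a decision must be audited under `condition`."""
    if condition is AuditCondition.NONE:
        return False
    if condition is AuditCondition.ON_DENY_AND_ALLOW:
        return True
    if condition is AuditCondition.ON_ALLOW:
        return authorized
    return not authorized  # ON_DENY


class AuthorizationEngine:
    """Hypothetical engine: policies map a name to a predicate on the request."""

    def __init__(self, action, policies, audit_condition, logger_factory,
                 logger_config):
        self._action = action
        self._policies = policies
        self._audit_condition = audit_condition
        # Step 1: build the logger once, from its config, in the constructor.
        self._logger = (None if audit_condition is AuditCondition.NONE
                        else logger_factory(logger_config))

    def evaluate(self, request):
        matched = next((name for name, matches in self._policies.items()
                        if matches(request)), None)
        if self._action is Action.ALLOW:
            authorized = matched is not None
        else:  # DENY engine: a matching policy denies the request
            authorized = matched is None
        # Step 2: audit only when the configured condition asks for it.
        if self._logger is not None and should_audit(self._audit_condition,
                                                     authorized):
            self._logger.log({"policy": matched, "authorized": authorized,
                              "path": request["path"]})
        return authorized
```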

---------

Co-authored-by: rockspore <rockspore@users.noreply.github.com>

See `event_engine.h` for the contract change. All other changes are cleanup.

I confirmed that both the Posix and Windows implementations already comply with this.

On Windows, the `WindowsEventEngineListener` will only call `on_shutdown` after all `SinglePortSocketListener`s have been destroyed, which ensures that no `on_accept` callback will be executed, even if there is still trailing overlapped activity on the listening socket.

On Posix, the `PosixEngineListenerImpl` will only call `on_shutdown` after all `AsyncConnectionAcceptor`s have been destroyed, which ensures that `EventHandle::OrphanHandle` has been called. The `OrphanHandle` contract indicates that all existing notify closures must have already run. The implementation appears to comply, so if it does not, that's a bug.

3aae08d25e/src/core/lib/event_engine/posix_engine/event_poller.h (L48-L50)
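The guarantee described above can be modeled in Python: `on_shutdown` fires only after the last acceptor is destroyed, so no `on_accept` can follow it. `ListenerModel` and its methods are hypothetical stand-ins for the per-platform listener and acceptor objects. The sketch is not the EventEngine API.

```python
class ListenerModel:
    """Hypothetical model: on_shutdown runs only after every acceptor is gone."""

    def __init__(self, on_accept, on_shutdown):
        self._on_accept = on_accept
        self._on_shutdown = on_shutdown
        self._acceptors = set()
        self._shutting_down = False
        self._shutdown_called = False

    def add_acceptor(self, acceptor_id):
        self._acceptors.add(acceptor_id)

    def deliver_accept(self, acceptor_id, conn):
        # A destroyed acceptor can no longer deliver callbacks.
        if acceptor_id in self._acceptors:
            self._on_accept(conn)

    def shutdown(self):
        self._shutting_down = True
        self._maybe_finish_shutdown()

    def acceptor_destroyed(self, acceptor_id):
        self._acceptors.discard(acceptor_id)
        self._maybe_finish_shutdown()

    def _maybe_finish_shutdown(self):
        # Fire on_shutdown exactly once, after the last acceptor is gone.
        if self._shutting_down and not self._acceptors and not self._shutdown_called:
            self._shutdown_called = True
            self._on_shutdown()
```

Accepts may still arrive between `shutdown()` and the destruction of the last acceptor. Once `on_shutdown` has run, none can.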


Whilst the per-CPU counters probably help with single-channel contention, we think it's likely that they're a pessimization when taken fleetwide.


Spin off from https://github.com/grpc/grpc/pull/32701.



Add audit condition and audit logger config into `grpc_core::Rbac`. Support translation of audit logging options from authz policy to it.

Audit logging options in authz policy look like:

```json
{
  "audit_logging_options": {
    "audit_condition": "ON_DENY",
    "audit_loggers": [
      {
        "name": "logger",
        "config": {},
        "is_optional": false
      }
    ]
  }
}
```

which is consistent with what's in the xDS RBAC proto but a little flattened.
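Translating that JSON can be sketched in Python. The dataclasses and function names are hypothetical, not `grpc_core::Rbac`. The defaults are also assumptions of this sketch: `NONE` when no condition is given, an empty `config`, and `is_optional` false. The sketch rejects unknown conditions and loggers without a name.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Dict, List

VALID_CONDITIONS = {"NONE", "ON_DENY", "ON_ALLOW", "ON_DENY_AND_ALLOW"}


@dataclass
class AuditLoggerConfig:
    name: str
    config: Dict[str, Any]
    is_optional: bool = False


@dataclass
class AuditLoggingOptions:
    audit_condition: str = "NONE"
    audit_loggers: List[AuditLoggerConfig] = field(default_factory=list)


def parse_audit_logging_options(policy: Dict[str, Any]) -> AuditLoggingOptions:
    raw = policy.get("audit_logging_options")
    if raw is None:
        return AuditLoggingOptions()  # auditing disabled by default
    condition = raw.get("audit_condition", "NONE")
    if condition not in VALID_CONDITIONS:
        raise ValueError(f"unknown audit_condition: {condition!r}")
    loggers = []
    for entry in raw.get("audit_loggers", []):
        if "name" not in entry:
            raise ValueError("audit logger is missing 'name'")
        loggers.append(AuditLoggerConfig(
            name=entry["name"],
            config=entry.get("config", {}),
            is_optional=entry.get("is_optional", False)))
    return AuditLoggingOptions(condition, loggers)


def load_audit_logging_options(policy_json: str) -> AuditLoggingOptions:
    """Parse a policy given as JSON text."""
    return parse_audit_logging_options(json.loads(policy_json))
```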

---------

Co-authored-by: rockspore <rockspore@users.noreply.github.com>

Without this change, new users get this warning:

```
CMake Warning (dev) at $MY_INSTALL_DIR/lib64/cmake/protobuf/protobuf-options.cmake:6 (option):
  Policy CMP0077 is not set: option() honors normal variables. Run "cmake
  --help-policy CMP0077" for policy details. Use the cmake_policy command to
  set the policy and suppress this warning.

  For compatibility with older versions of CMake, option is clearing the
  normal variable 'protobuf_MODULE_COMPATIBLE'.
Call Stack (most recent call first):
  $MY_INSTALL_DIR/lib64/cmake/protobuf/protobuf-config.cmake:2 (include)
  $MY_SRC_PATH/examples/cpp/cmake/common.cmake:99 (find_package)
  CMakeLists.txt:24 (include)
This warning is for project developers. Use -Wno-dev to suppress it.
```

release notes: no


- Add a new docker image "rbe_ubuntu2004" that is built in a way that's analogous to how our other testing docker images are built (this gives us control over exactly what is contained in the docker image and the ability to fine-tune our RBE configuration).
- Switch RBE on Linux to the new image (which gives us Ubuntu 20.04-based builds).

For some reason, RBE seems to have trouble pulling the docker image from Google Artifact Registry (GAR), which is where our public testing images normally live. For now, as a workaround, I also upload a copy of the rbe_ubuntu2004 docker image to GCR, and that makes RBE work just fine (see the comment in the `renerate_linux_rbe_configs.sh` script).

More follow-up items (config cleanup, getting local sanitizer builds working, etc.) are in go/grpc-rbe-tech-debt-2023.