Add a new binary that runs all core end2end tests in fuzzing mode.

In this mode, FuzzingEventEngine (FEE) is substituted for the default
event engine, so both time and IO are simulated. The FEE also controls
callback delays.

In our tests the `Step()` function becomes, instead of a single call to

`completion_queue_next`, a series of calls to that function and

`FuzzingEventEngine::Tick`, driving forward the event loop until

progress can be made.
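The polling loop described above can be sketched roughly as follows. This is an illustrative model only, not the actual test code: `FakeEngine`, `run_after`, `tick`, `step`, and `max_ticks` are hypothetical names standing in for `FuzzingEventEngine::Tick` and the non-blocking `completion_queue_next` call.

```python
class FakeEngine:
    """Hypothetical stand-in for an event engine with simulated time."""

    def __init__(self):
        self.now = 0
        self.timers = []  # list of (deadline, callback) pairs

    def run_after(self, delay, fn):
        self.timers.append((self.now + delay, fn))

    def tick(self):
        # Advance simulated time by one unit and run every timer that is due.
        self.now += 1
        due = [t for t in self.timers if t[0] <= self.now]
        self.timers = [t for t in self.timers if t[0] > self.now]
        for _, fn in due:
            fn()


def step(engine, poll, max_ticks=1000):
    """Poll without blocking, ticking the engine between polls."""
    for _ in range(max_ticks):
        event = poll()
        if event is not None:
            return event
        engine.tick()
    return None  # no progress within the tick budget


engine = FakeEngine()
events = []
engine.run_after(5, lambda: events.append("op_complete"))
result = step(engine, lambda: events.pop(0) if events else None)
```

Here the poll function returns `None` until the simulated timer fires, so `step` ticks the engine five times before it sees the event.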

PR guide:

---

**New binaries**

`core_end2end_test_fuzzer` - the new fuzzer itself

`seed_end2end_corpus` - a tool that produces an interesting seed corpus

**Config changes for safe fuzzing**

The implementation tries to use the config fuzzing work we've previously

deployed in api_fuzzer to fuzz across experiments. Since some

experiments are far too experimental to be safe in such fuzzing (and

this will always be the case):

- a new flag is added to experiments to opt-out of this fuzzing

- a new hook is added to the config system to allow variables to re-write their inputs before setting them during the fuzz

**Event manager/IO changes**

Changes are made to the event engine shims so that tcp_server_posix can

run with a non-FD carrying EventEngine. These are in my mind a bit

clunky, but they work and they're in code that we expect to delete in

the medium term, so I think overall the approach is good.

**Changes to time**

A small tweak in time.cc fixes a bug in initializing time for fuzzers:
we were previously failing to initialize `g_process_epoch_cycles`.

**Changes to `Crash`**

A version that prints to stdio is added so that we can reliably print a

crash from the fuzzer.

**Changes to CqVerifier**

Hooks are added to allow the top level loop to hook the verification

functions with a function that steps time between CQ polls.

**Changes to end2end fixtures**

State machinery moves from the fixture to the test infra, to keep the

customizations for fuzzing or not in one place. This means that fixtures

are now just client/server factories, which is overall nice.

It did necessitate moving some bespoke machinery into

h2_ssl_cert_test.cc - this file is beginning to be problematic in

borrowing parts but not all of the e2e test machinery. Some future PR

needs to solve this.

A cq arg is added to the Make functions since the cq is now owned by the

test and not the fixture.

**Changes to test registration**

`TEST_P` is replaced by `CORE_END2END_TEST` and our own test registry is

used as a first depot for test information.

The gtest version of these tests queries that registry to manually
register tests with gtest. This ultimately changes the name of our tests
again (I think for the last time) - the new names are shorter and more
readable, so I don't count this as a regression.

The fuzzer version of these tests constructs a database of fuzzable
tests that it can consult to look up a particular suite/test/config
combination specified by the fuzzer to fuzz against. This gives us a
single fuzzer that can test all 3k-ish fuzzing-ready tests and
cross-pollinate configuration between them.
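As a rough illustration of the registry-then-database idea, here is a minimal sketch. All names (`Registry`, `register`, `fuzzable_database`, `lookup`, and the suite, test, and config strings) are hypothetical and do not mirror the real `CORE_END2END_TEST` machinery.

```python
import itertools


class Registry:
    """Hypothetical first depot for test information."""

    def __init__(self):
        self._tests = {}

    def register(self, suite, name, fn):
        self._tests[(suite, name)] = fn

    def fuzzable_database(self, configs):
        # Cross every registered test with every fuzzing-compatible config.
        return {
            (suite, name, config): fn
            for ((suite, name), fn), config in itertools.product(
                self._tests.items(), configs
            )
        }


def lookup(database, suite, name, config):
    """Return the test for a fuzzer-chosen combination, or None."""
    return database.get((suite, name, config))


registry = Registry()
registry.register("CoreEnd2endTest", "SimpleRequest", lambda: "ran")
database = registry.fuzzable_database(["ConfigA", "ConfigB"])
test = lookup(database, "CoreEnd2endTest", "SimpleRequest", "ConfigB")
```

A combination the fuzzer names that is not in the database simply yields `None`, which the caller can treat as an uninteresting input.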

**Changes to test config**

The zero size registry stuff was causing some problems with the event

engine feature macros, so instead I've removed those and used GTEST_SKIP

in the problematic tests. I think that's the approach we move towards in

the future.

**Which tests are included**

Only compatible configs are included: those that do not do fd
manipulation directly (these are incompatible with FuzzingEventEngine),
and those that do not join threads on their shutdown path (as these are
incompatible with our cq wait methodology). We can talk about each in
the future: fd manipulation would be a significant expansion of
FuzzingEventEngine and is probably not worth it, whereas many uses of
background threads should probably evolve to be EventEngine::Run calls,
and would then be trivially enabled in the fuzzers.

Some tests currently fail in the fuzzing environment; a
`SKIP_IF_FUZZING` macro is used to disable these few when running in
the fuzzing environment. We'll burn these down in the future.

**Changes to fuzzing_event_engine**

Changes are made to time: an exponential sweep forward is used now -

this catches small time precision things early, but makes decade long

timers (we have them) able to be used right now. In the future we'll

just skip time forward to the next scheduled timer, but that approach

doesn't yet work due to legacy timer system interactions.
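To see why an exponential sweep handles both ends of the scale, consider this small sketch. The starting step of one microsecond and the doubling factor are assumptions chosen for illustration; the actual increments used by the FuzzingEventEngine may differ.

```python
# Ten years expressed in microseconds (ignoring leap days).
DECADE_US = 10 * 365 * 24 * 3600 * 1_000_000


def ticks_to_reach(target_us, first_step_us=1, factor=2):
    """Count ticks needed to reach target_us when each step grows by factor."""
    now = 0
    step = first_step_us
    ticks = 0
    while now < target_us:
        now += step      # early ticks advance time by tiny amounts
        step *= factor   # later ticks cover ever larger spans
        ticks += 1
    return ticks


decade_ticks = ticks_to_reach(DECADE_US)
```

Under these assumptions the first few ticks probe microsecond-scale behaviour, while a timer set a decade out is still reached after 49 ticks.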

Changes to port assignment: we ensure that ports are legal numbers

before assigning them via `grpc_pick_port_or_die`.

A race condition between time checking and io is fixed.

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

Need to do the channelz bit prior to finishing the op bit.

<!--

If you know who should review your pull request, please assign it to

that

person, otherwise the pull request would get assigned randomly.

If your pull request is for a specific language, please add the

appropriate

lang label.

-->

Resolve `TESTING_VERSION` to `dev-VERSION` when the job is initiated by
a user, and not the CI. Override this behavior by setting
`FORCE_TESTING_VERSION`.

This solves the problem of manual job runs executed against a WIP
branch (e.g. a PR) overriding the tag of the CI-built image we use for
daily testing.

The `dev` and `dev-VERSION` "magic" values supported by the

`--testing_version` flag:

- `dev` and `dev-master` are treated as `master`: all `config.version_gte` checks resolve to `True`.

- `dev-VERSION` is treated as `VERSION`: `dev-v1.55.x` is treated as simply `v1.55.x`. We do this so that when manually running jobs for old branches the feature skip check still works, and unsupported tests are skipped.
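The mapping of the "magic" values can be sketched as below. `effective_version` is a hypothetical helper written for illustration; it is not the function used by the test driver, and it covers only the normalization rules listed above.

```python
def effective_version(testing_version: str) -> str:
    """Map a --testing_version value to the version used for skip checks."""
    if testing_version in ("dev", "dev-master"):
        return "master"
    if testing_version.startswith("dev-"):
        # dev-VERSION behaves like VERSION, e.g. dev-v1.55.x -> v1.55.x
        return testing_version[len("dev-"):]
    return testing_version


resolved = effective_version("dev-v1.55.x")
```

Values without the `dev` prefix pass through unchanged, so CI-supplied versions are unaffected in this sketch.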

These changes take care of all langs/branches; no backports needed.

ref b/256845629

Currently, we are not very consistent in what we assume the initial

state of an LB policy will be and whether or not we assume that it will

immediately report a new picker when it gets its initial address update;

different parts of our code make different assumptions. This PR

establishes the convention that LB policies will be assumed to start in

state CONNECTING and will *not* be assumed to report a new picker

immediately upon getting their initial address update, and we now assume

that convention everywhere consistently.

This is a preparatory step for changing policies like round_robin to

delegate to pick_first, which I'm working on in #32692. As part of that

change, we need pick_first to not report a connectivity state until it

actually sees the connectivity state of the underlying subchannels, so

that round_robin knows when to swap over to a new child list without

reintroducing the problem fixed in #31939.

Fixes this error:

```
test/core/security/grpc_authorization_engine_test.cc:88:32: error: unknown type name 'Json'; did you mean 'experimental::Json'?
  ParseAuditLoggerConfig(const Json&) override {
                               ^~~~
                               experimental::Json
```

This check compares the host portion of the target name to the authority

header, but in common use cases (e.g. GCS) they may not coincide.

Additionally, this check does not happen in the Go and Java ALTS stacks.

This makes the JSON API visible as part of the C-core API, but in the

`experimental` namespace. It will be used as part of various

experimental APIs that we will be introducing in the near future, such

as the audit logging API.

The WireWriter implementation schedules actions to be run by `ExecCtx`.
We should flush pending actions before destructing
`end2end_testing::g_transaction_processor`, which needs to be alive to
handle the scheduled actions. Otherwise, we get a heap-use-after-free
error because the testing fixture
(`end2end_testing::g_transaction_processor`) is destructed before all
the scheduled actions are run.

This lowers the end2end binder transport test failure rate from 0.23%
to 0.15%, according to an internal tool that runs the test 15000 times
under various configurations.

This file does not contain a shebang, and whenever I try and run it it

wedges my console into some weird state.

There's a .sh file with the same name that should be run instead. Remove

the executable bit of the thing we shouldn't run directly so we, like,

don't.


Previously the error message didn't provide much context, for example:

```py
Traceback (most recent call last):
  File "/tmpfs/tmp/tmp.BqlenMyXyk/grpc/tools/run_tests/xds_k8s_test_driver/tests/affinity_test.py", line 127, in test_affinity
    self.assertLen(
AssertionError: [] has length of 0, expected 1.
```

ref b/279990584.

This PR implements a work-stealing thread pool for use inside

EventEngine implementations. Because of historical risks here, I've

guarded the new implementation behind an experiment flag:

`GRPC_EXPERIMENTS=work_stealing`. Current default behavior is the

original thread pool implementation.

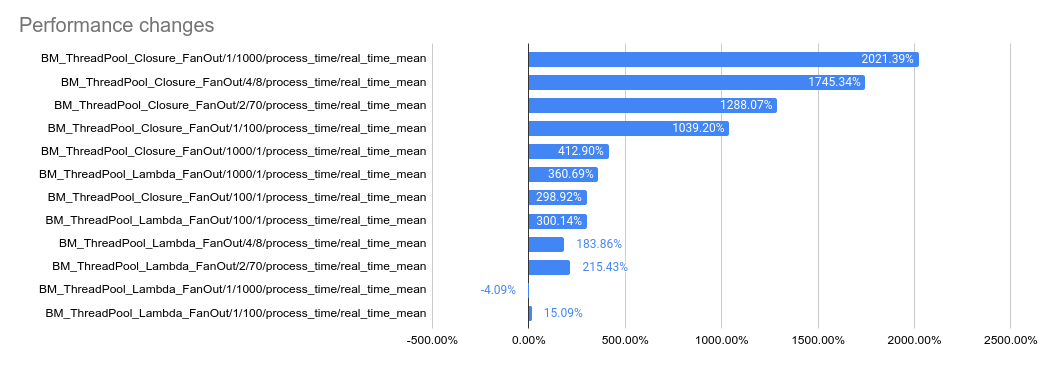

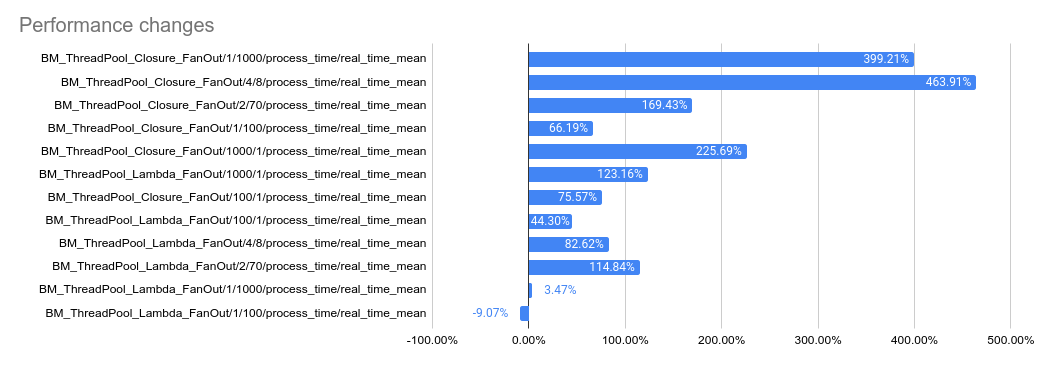

Benchmarks look very promising:

```
bazel test \
  --test_timeout=300 \
  --config=opt -c opt \
  --test_output=streamed \
  --test_arg='--benchmark_format=csv' \
  --test_arg='--benchmark_min_time=0.15' \
  --test_arg='--benchmark_filter=_FanOut' \
  --test_arg='--benchmark_repetitions=15' \
  --test_arg='--benchmark_report_aggregates_only=true' \
  test/cpp/microbenchmarks:bm_thread_pool
```

2023-05-04: `bm_thread_pool` benchmark results on my local machine (64

core ThreadRipper PRO 3995WX, 256GB memory), comparing this PR to

master:

2023-05-04: `bm_thread_pool` benchmark results in the Linux RBE

environment (unsure of machine configuration, likely small), comparing

this PR to master.

---------

Co-authored-by: drfloob <drfloob@users.noreply.github.com>

One TXT lookup query can return multiple TXT records (see the following

example). `EventEngine::DNSResolver` should return all of them to let

the caller (e.g. `event_engine_client_channel_resolver`) decide which

one they would use.

```
$ dig TXT wikipedia.org

; <<>> DiG 9.18.12-1+build1-Debian <<>> TXT wikipedia.org
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 49626
;; flags: qr rd ra; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 512
;; QUESTION SECTION:
;wikipedia.org. IN TXT

;; ANSWER SECTION:
wikipedia.org. 600 IN TXT "google-site-verification=AMHkgs-4ViEvIJf5znZle-BSE2EPNFqM1nDJGRyn2qk"
wikipedia.org. 600 IN TXT "yandex-verification: 35c08d23099dc863"
wikipedia.org. 600 IN TXT "v=spf1 include:wikimedia.org ~all"
```

Note that this change also deviates from the iomgr DNSResolver API,
which uses `std::string` as the result type.
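A caller that receives every TXT record might then select the one it cares about, along the lines of this sketch. `pick_txt_record` and the prefix-matching rule are hypothetical; they only illustrate the "return all, let the caller decide" shape and are not the resolver's actual selection logic.

```python
from typing import List, Optional


def pick_txt_record(records: List[str], prefix: str) -> Optional[str]:
    """Return the first TXT record that starts with prefix, or None."""
    for record in records:
        if record.startswith(prefix):
            return record
    return None


# The three records from the dig output above.
records = [
    "google-site-verification=AMHkgs-4ViEvIJf5znZle-BSE2EPNFqM1nDJGRyn2qk",
    "yandex-verification: 35c08d23099dc863",
    "v=spf1 include:wikimedia.org ~all",
]
spf = pick_txt_record(records, "v=spf1")
```

If the API returned a single string instead, the caller would have no way to reach the second or third record.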


---------

Co-authored-by: Sergii Tkachenko <hi@sergii.org>

Reverts grpc/grpc#32924. This breaks the build again, unfortunately.

From `test/core/event_engine/cf:cf_engine_test`:

```

error: module .../grpc/test/core/event_engine/cf:cf_engine_test does not depend on a module exporting 'grpc/support/port_platform.h'

```

@sampajano I recommend looking into CI tests to catch iOS problems

before merging. We can enable EventEngine experiments in the CI

generally once this PR lands, but this broken test is not one of those

experiments. A normal build should have caught this.

cc @HannahShiSFB


The job run time was creeping to the 2h timeout. Let's bump it to 3h.

Note that this is `master` branch, so it also includes the build time

every time we commit to grpc/grpc.

ref b/280784903

This PR tries to correct a confusing message. As far as I understand,
if the code reaches that point, it is not correct to say that setting
TCP_USER_TIMEOUT failed: the call actually succeeded (setsockopt
returned 0), but the resulting value differs from the previous option
value.

I haven't found the `gpr_log` description, so I hope the PR is correct.

---------

Signed-off-by: Joan Fontanals Martinez <joan.martinez@jina.ai>

### Description

Fix https://github.com/grpc/grpc/issues/24470.

Adds an example that demonstrates the following use cases:

* Generate RPC ID on client side and propagate to server.

* Context propagation from client to server.

* Context propagation between different server interceptors and the server handler.
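The propagation between interceptors and the handler can be illustrated without gRPC at all. The sketch below assumes the value is carried in a `contextvars.ContextVar`; `rpc_id_var`, `interceptor`, `handler`, and `serve_one` are hypothetical names and do not reproduce the example's actual interceptor classes.

```python
import asyncio
import contextvars

# Hypothetical context variable; "default" is used until a layer sets a value.
rpc_id_var = contextvars.ContextVar("rpc_id", default="default")


async def handler():
    # The handler sees whatever the interceptors stored before it ran.
    return f"handled {rpc_id_var.get()}"


async def interceptor(name, continuation):
    # Prefix the current value, then pass control to the next layer.
    rpc_id_var.set(f"{name}-{rpc_id_var.get()}")
    return await continuation()


async def serve_one():
    return await interceptor(
        "Interceptor1", lambda: interceptor("Interceptor2", handler)
    )


outcome = asyncio.run(serve_one())
```

Because all three coroutines run in the same task, they share one context, so each layer observes the value written by the layer before it.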

### Use:

1. Start server: `python3 -m async_greeter_server_with_interceptor`

2. Start client: `python3 -m async_greeter_client`

### Expected Logs:

* On client side:

```
Sending request with rpc id: 73bb98beff10c2dd7b9f2252a1e2039e
Greeter client received: Hello, you!
```

* On server side:

```
INFO:root:Starting server on [::]:50051
INFO:root:Interceptor1 called with rpc_id: default
INFO:root:Interceptor2 called with rpc_id: Interceptor1-default
INFO:root:Handle rpc with id Interceptor2-Interceptor1-73bb98beff10c2dd7b9f2252a1e2039e in server handler.
```


Reverts grpc/grpc#33002. Breaks internal builds:

`.../privacy_context:filters does not depend on a module exporting '.../src/core/lib/channel/context.h'`

Change call attributes to be stored in a `ChunkedVector` instead of

`std::map<>`, so that the storage can be allocated on the arena. This

means that we're now doing a linear search instead of a map lookup, but

the total number of attributes is expected to be low enough that that

should be okay.
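The lookup trade-off can be pictured with a small sketch. `CallAttributes` below is a hypothetical Python stand-in: it shows only the linear-scan behaviour, not `ChunkedVector` itself or arena allocation, which have no Python equivalent here.

```python
class CallAttributes:
    """Hypothetical attribute store backed by a flat list of pairs."""

    def __init__(self):
        self._items = []  # (key, value) pairs in insertion order

    def set(self, key, value):
        # Replace an existing entry if present, otherwise append.
        for i, (existing_key, _) in enumerate(self._items):
            if existing_key == key:
                self._items[i] = (key, value)
                return
        self._items.append((key, value))

    def get(self, key):
        # Linear search: cost grows with the number of attributes.
        for existing_key, value in self._items:
            if existing_key == key:
                return value
        return None


attrs = CallAttributes()
attrs.set("cluster", "cluster_a")
attrs.set("hash", 42)
attrs.set("cluster", "cluster_b")
```

With only a handful of entries per call, scanning the list costs a few comparisons, which is the assumption the change relies on.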

Also, we now hide the actual data structure inside of the

`ServiceConfigCallData` object, which required some changes to the

`ConfigSelector` API. Previously, the `ConfigSelector` would return a

`CallConfig` struct, and the client channel would then use the data in

that struct to populate the `ServiceConfigCallData`. This PR changes

that such that the client channel creates the `ServiceConfigCallData`

before invoking the `ConfigSelector`, and it passes the

`ServiceConfigCallData` into the `ConfigSelector` so that the

`ConfigSelector` can populate it directly.

The protection is added at `xds_http_rbac_filter.cc`, where we read the
new field. With the feature disabled, nothing in code such as
`xds_audit_logger_registry.cc` will be invoked.

Makes some awkward fixes to compression filter, call, connected channel

to hold the semantics we have upheld now in tests.

Once the fixes described here
https://github.com/grpc/grpc/blob/master/src/core/lib/channel/connected_channel.cc#L636
are in, this gets a lot less ad hoc, but that's likely going to happen
after promises land on both the client and server side.

We specifically need special handling for server side cancellation in

response to reads wrt the inproc transport - which doesn't track

cancellation thoroughly enough itself.


---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>


1. `GrpcAuthorizationEngine` creates the logger from the given config in

its ctor.

2. `Evaluate()` invokes audit logging when needed.

---------

Co-authored-by: rockspore <rockspore@users.noreply.github.com>

See `event_engine.h` for the contract change. All other changes are

cleanup.

I confirmed that both the Posix and Windows implementations comply with

this already.

On Windows, the `WindowsEventEngineListener` will only call

`on_shutdown` after all `SinglePortSocketListener`s have been destroyed,

which ensures that no `on_accept` callback will be executed, even if

there is still trailing overlapped activity on the listening socket.

On Posix, the `PosixEngineListenerImpl` will only call `on_shutdown`

after all `AsyncConnectionAcceptor`s have been destroyed, which ensures

`EventHandle::OrphanHandle` has been called. The `OrphanHandle` contract

indicates that all existing notify closures must have already run. The

implementation looks to comply, so if it does not, that's a bug.

3aae08d25e/src/core/lib/event_engine/posix_engine/event_poller.h (L48-L50)
