Fixes internal b/297030898.

This ought not to be necessary, but getting context from the promise-based
filter wrapper code at call destruction is unwieldy.
Hacking around it here and fixing it properly after the client rollout seems
wiser.

This turns on the `work_stealing` experiment everywhere, enabling the

work-stealing thread pool in Posix & Windows EventEngine

implementations. This only really has an effect when EventEngine is used

for I/O (currently flag-guarded).

* Before change:

* Test hangs [here](https://github.com/grpc/grpc/blob/master/src/python/grpcio/grpc/_cython/_cygrpc/aio/call.pyx.pxi#L250) (called from [here](3b23fe62ca/src/python/grpcio/grpc/aio/_call.py (L261))) waiting for status.

* After change, we'll manually set the status. We also print a traceback
like the following:

```

Traceback (most recent call last):
  File "/usr/local/google/home/xuanwn/.cache/bazel/_bazel_xuanwn/da3828576aa39e99a5c826cc2e2e22fb/sandbox/linux-sandbox/1576/execroot/com_github_grpc_grpc/bazel-out/k8-fastbuild/bin/src/python/grpcio_tests/tests_aio/unit/metadata_test.runfiles/com_github_grpc_grpc/src/python/grpcio/grpc/aio/_call.py", line 492, in _write
    await self._cython_call.send_serialized_message(serialized_request)
  File "src/python/grpcio/grpc/_cython/_cygrpc/aio/call.pyx.pxi", line 379, in send_serialized_message
  File "src/python/grpcio/grpc/_cython/_cygrpc/aio/callback_common.pyx.pxi", line 163, in _send_message
  File "src/python/grpcio/grpc/_cython/_cygrpc/aio/callback_common.pyx.pxi", line 160, in _cython.cygrpc._send_message
  File "src/python/grpcio/grpc/_cython/_cygrpc/aio/callback_common.pyx.pxi", line 106, in execute_batch
_cython.cygrpc.ExecuteBatchError: Failed grpc_call_start_batch: 11 with grpc_call_error value: 'GRPC_CALL_ERROR_INVALID_MESSAGE'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/local/google/home/xuanwn/.cache/bazel/_bazel_xuanwn/da3828576aa39e99a5c826cc2e2e22fb/sandbox/linux-sandbox/1576/execroot/com_github_grpc_grpc/bazel-out/k8-fastbuild/bin/src/python/grpcio_tests/tests_aio/unit/metadata_test.runfiles/com_github_grpc_grpc/src/python/grpcio_tests/tests_aio/unit/_test_base.py", line 31, in wrapper
    return loop.run_until_complete(f(*args, **kwargs))
  File "/usr/local/google/home/xuanwn/.pyenv/versions/3.10.9/lib/python3.10/asyncio/base_events.py", line 649, in run_until_complete
    return future.result()
  File "/usr/local/google/home/xuanwn/.cache/bazel/_bazel_xuanwn/da3828576aa39e99a5c826cc2e2e22fb/sandbox/linux-sandbox/1576/execroot/com_github_grpc_grpc/bazel-out/k8-fastbuild/bin/src/python/grpcio_tests/tests_aio/unit/metadata_test.runfiles/com_github_grpc_grpc/src/python/grpcio_tests/tests_aio/unit/metadata_test.py", line 310, in test_stream_unary
    await call.write(_REQUEST)
  File "/usr/local/google/home/xuanwn/.cache/bazel/_bazel_xuanwn/da3828576aa39e99a5c826cc2e2e22fb/sandbox/linux-sandbox/1576/execroot/com_github_grpc_grpc/bazel-out/k8-fastbuild/bin/src/python/grpcio_tests/tests_aio/unit/metadata_test.runfiles/com_github_grpc_grpc/src/python/grpcio/grpc/aio/_call.py", line 517, in write
    await self._write(request)
  File "/usr/local/google/home/xuanwn/.cache/bazel/_bazel_xuanwn/da3828576aa39e99a5c826cc2e2e22fb/sandbox/linux-sandbox/1576/execroot/com_github_grpc_grpc/bazel-out/k8-fastbuild/bin/src/python/grpcio_tests/tests_aio/unit/metadata_test.runfiles/com_github_grpc_grpc/src/python/grpcio/grpc/aio/_call.py", line 495, in _write
    await self._raise_for_status()
  File "/usr/local/google/home/xuanwn/.cache/bazel/_bazel_xuanwn/da3828576aa39e99a5c826cc2e2e22fb/sandbox/linux-sandbox/1576/execroot/com_github_grpc_grpc/bazel-out/k8-fastbuild/bin/src/python/grpcio_tests/tests_aio/unit/metadata_test.runfiles/com_github_grpc_grpc/src/python/grpcio/grpc/aio/_call.py", line 263, in _raise_for_status
    raise _create_rpc_error(
grpc.aio._call.AioRpcError: <AioRpcError of RPC that terminated with:
        status = StatusCode.INTERNAL
        details = "Internal error from Core"
        debug_error_string = "Failed grpc_call_start_batch: 11 with grpc_call_error value: 'GRPC_CALL_ERROR_INVALID_MESSAGE'"
>

```

<!--

If you know who should review your pull request, please assign it to

that

person, otherwise the pull request would get assigned randomly.

If your pull request is for a specific language, please add the

appropriate

lang label.

-->

This makes the resolver component test suite run on Windows RBE by
adding a flag to the test driver that differentiates between a local Bazel
run and a Bazel RBE run on Windows, since the two have different
RUNFILES behavior.

Local Bazel run succeeds:

```

C:\Users\yijiem\projects\grpc>bazel --output_base=C:\bazel2 test --dynamic_mode=off --verbose_failures --test_arg=--running_locally=true //test/cpp/naming:resolver_component_tests_runner_invoker

INFO: Analyzed target //test/cpp/naming:resolver_component_tests_runner_invoker (0 packages loaded, 0 targets configured).

INFO: Found 1 test target...

Target //test/cpp/naming:resolver_component_tests_runner_invoker up-to-date:

bazel-bin/test/cpp/naming/resolver_component_tests_runner_invoker.exe

INFO: Elapsed time: 196.080s, Critical Path: 193.21s

INFO: 2 processes: 1 internal, 1 local.

INFO: Build completed successfully, 2 total actions

//test/cpp/naming:resolver_component_tests_runner_invoker PASSED in 193.1s

Executed 1 out of 1 test: 1 test passes.

```

RBE run succeeds:

```

C:\Users\yijiem\projects\grpc>bazel --bazelrc=tools/remote_build/windows.bazelrc test --config=windows_opt --dynamic_mode=off --verbose_failures --host_linkopt=/NODEFAULTLIB:libcmt.lib --host_linkopt=/DEFAULTLIB:msvcrt.lib --nocache_test_results //test/cpp/naming:resolver_component_tests_runner_invoker

INFO: Invocation ID: d467f2e3-7da6-4bb5-8b9b-84f1181ebc60

WARNING: --remote_upload_local_results is set, but the remote cache does not support uploading action results or the current account is not authorized to write local results to the remote cache.

INFO: Streaming build results to: https://source.cloud.google.com/results/invocations/d467f2e3-7da6-4bb5-8b9b-84f1181ebc60

INFO: Analyzed target //test/cpp/naming:resolver_component_tests_runner_invoker (0 packages loaded, 133 targets configured).

INFO: Found 1 test target...

Target //test/cpp/naming:resolver_component_tests_runner_invoker up-to-date:

bazel-bin/test/cpp/naming/resolver_component_tests_runner_invoker.exe

INFO: Elapsed time: 41.627s, Critical Path: 39.42s

INFO: 2 processes: 1 internal, 1 remote.

//test/cpp/naming:resolver_component_tests_runner_invoker PASSED in 33.0s

Executed 1 out of 1 test: 1 test passes.

INFO: Streaming build results to: https://source.cloud.google.com/results/invocations/d467f2e3-7da6-4bb5-8b9b-84f1181ebc60

INFO: Build completed successfully, 2 total actions

```


This reverts commit fe1ba18dfc.

Reason: breaks import.


This helps developers run benchmark loadtests locally. See comments in

scenario_runner.py for usage.

---------

Co-authored-by: drfloob <drfloob@users.noreply.github.com>

Previously `black` wouldn't install, as it required a newer `packaging`
package.

This fixes `pip install -r requirements-dev.txt`. In addition, `black`
in the dev dependencies file is changed to `black[d]`, which bundles the
`blackd` binary (["black as a server"](https://black.readthedocs.io/en/stable/usage_and_configuration/black_as_a_server.html)).

The `work_stealing` experiment on its own is not very valuable, so let's

delete it and save CI resources. We have a benchmark for

`GRPC_EXPERIMENTS=event_engine_listener,work_stealing`, which is really

what we care about right now.

Fixes an issue where the active context was automatically picked up
as the context for `secondary_k8s_api_manager`.

This was introducing an error in the GAMMA Baseline PoC:

```

sys:1: ResourceWarning: unclosed <ssl.SSLSocket fd=4, family=AddressFamily.AF_INET, type=SocketKind.SOCK_STREAM, proto=0, laddr=('100.71.2.143', 56723), raddr=('35.199.174.232', 443)>

```

Here's how the secondary context incorrectly falls back to the
default context when `--secondary_kube_context` is not set:

```

k8s.py:142] Using kubernetes context "gke_grpc-testing_us-central1-a_psm-interop-security", active host: https://35.202.85.90

k8s.py:142] Using kubernetes context "None", active host: https://35.202.85.90

```

This WorkStealingThreadPool change improves the (lagging)

`cpp_protobuf_async_streaming_qps_unconstrained_secure` 32-core

benchmark.

Baseline OriginalThreadPool QPS: 830k

Previous average WorkStealingThreadPool QPS: 755k

New WorkStealingThreadPool average (2 runs) QPS: 850k

We enabled OpenSSL3 testing with #31256 and missed a failing test.
It wasn't running before, so this isn't a regression. Disabling it so
master doesn't fail while we figure out how to fix it.

- Add GitHub Action to conditionally run PSM Interop unit tests:
  - Only run when changes are detected in `tools/run_tests/xds_k8s_test_driver` or any of the proto files used by the driver
  - Only run against PRs and pushes to `master`, `v1.*.*` branches
  - Runs using `python3.9` and `python3.10`
  - Ready to be added to the list of required GitHub checks

- Add `tools/run_tests/xds_k8s_test_driver/tests/unit/__main__.py` test

loader that recursively discovers all unit tests in

`tools/run_tests/xds_k8s_test_driver/tests/unit`

- Add basic coverage for `XdsTestClient` and `XdsTestServer` to verify

the test loader picks up all folders

Related:

- First unit tests without automated CI added in #34097

The tests are skipped incorrectly because `config.server_lang` is
compared with the string value "java" instead of
`skips.Lang.JAVA`.

This has been broken since #26998.

```

xds_url_map_testcase.py:372] ----- Testing TestTimeoutInRouteRule -----

xds_url_map_testcase.py:373] Logs timezone: UTC

skips.py:121] Skipping TestConfig(client_lang='java', server_lang='java', version='v1.57.x')

[ SKIPPED ] setUpClass (timeout_test.TestTimeoutInRouteRule)

xds_url_map_testcase.py:372] ----- Testing TestTimeoutInApplication -----

xds_url_map_testcase.py:373] Logs timezone: UTC

skips.py:121] Skipping TestConfig(client_lang='java', server_lang='java', version='v1.57.x')

[ SKIPPED ] setUpClass (timeout_test.TestTimeoutInApplication)

```
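
The failure mode can be reproduced in isolation. The sketch below uses a hypothetical stand-in `Lang` enum (the real `skips.Lang` may be defined differently) and hypothetical helper names; it only shows why comparing an enum member with a plain string never matches.

```python
import enum


class Lang(enum.Enum):
    # Hypothetical stand-in for skips.Lang; the real members may differ.
    CPP = "cpp"
    JAVA = "java"


def is_supported_buggy(server_lang: Lang) -> bool:
    # Bug: a plain Enum member never compares equal to a str, even when
    # its value is that string, so this is False for every language.
    return server_lang == "java"


def is_supported_fixed(server_lang: Lang) -> bool:
    # Compare against the enum member instead.
    return server_lang == Lang.JAVA
```

With the buggy check, a Java server is reported as unsupported and the test is skipped, which matches the `SKIPPED` lines in the log above.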

Add example for TLS.


Some versions of macOS (as well as some `glibc`-based platforms) require
`__STDC_FORMAT_MACROS` to be defined prior to including `<cinttypes>` or
`<inttypes.h>`, otherwise the build fails with undeclared `PRIdMAX`,
`PRIdPTR`, etc.

See note here: https://en.cppreference.com/w/cpp/types/integer

label: (release notes: no)

This is to make sure upgrading the `packaging` module won't break our logic for
version-based test skipping.

This also fixes a small issue with the `dev-` prefix: it should only be
allowed on the left side of the comparison.

Context: the `packaging` module needs to be upgraded to be compatible with
`blackd`.
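
The `dev-` rule can be illustrated with a small, stdlib-only sketch. It does not use `packaging` and is not the driver's actual code: the function name `version_gte`, the `v1.57.x` parsing, and the choice to treat a `dev-` version as newer than any release are all assumptions made for the example.

```python
from typing import Tuple


def _parse(version: str) -> Tuple[int, ...]:
    """Parse a branch-style version such as 'v1.57.x' into (1, 57)."""
    parts = version.lstrip("v").split(".")
    return tuple(int(p) for p in parts if p.isdigit())


def version_gte(left: str, right: str) -> bool:
    """Return True if `left` is at least `right` (hypothetical helper).

    `left` is the version under test and may carry a 'dev-' prefix;
    `right` is a fixed release to compare against and may not.
    """
    if right.startswith("dev-"):
        raise ValueError("'dev-' is only allowed on the left side")
    if left.startswith("dev-"):
        # Assumption: a development build is newer than any release.
        return True
    return _parse(left) >= _parse(right)
```

Comparing parsed integer tuples rather than raw strings avoids ordering `v1.9.x` after `v1.10.x`.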

Local Bazel invocation succeeds:

```

C:\Users\yijiem\projects\grpc>bazel --output_base=C:\bazel2 test --dynamic_mode=off --verbose_failures //test/cpp/naming:resolver_component_tests_runner_invoker@poller=epoll1

INFO: Analyzed target //test/cpp/naming:resolver_component_tests_runner_invoker@poller=epoll1 (0 packages loaded, 0 targets configured).

INFO: Found 1 test target...

Target //test/cpp/naming:resolver_component_tests_runner_invoker@poller=epoll1 up-to-date:

bazel-bin/test/cpp/naming/resolver_component_tests_runner_invoker@poller=epoll1.exe

INFO: Elapsed time: 199.262s, Critical Path: 193.48s

INFO: 2 processes: 1 internal, 1 local.

INFO: Build completed successfully, 2 total actions

//test/cpp/naming:resolver_component_tests_runner_invoker@poller=epoll1 PASSED in 193.4s

Executed 1 out of 1 test: 1 test passes.

```

The local invocation of RBE failed with the linker error `LINK : error
LNK2001: unresolved external symbol mainCRTStartup`, but that is not
limited to this target:
https://gist.github.com/yijiem/2c6cbd9a31209a6de8fd711afbf2b479.


Update from gtcooke94:

This PR adds support for building gRPC and its tests with OpenSSL3. There were some
hiccups because the tests with OpenSSL haven't been built or exercised in a
few months, so they needed some work to fix.

Right now I expect all test files to pass except the following:

- h2_ssl_cert_test

- ssl_transport_security_utils_test

I confirmed locally that these tests fail with OpenSSL 1.1.1 as well,
so we are at least not introducing regressions. I've added compiler directives around these tests so they only build when using BoringSSL.

---------

Co-authored-by: Gregory Cooke <gregorycooke@google.com>

Co-authored-by: Esun Kim <veblush@google.com>

- add debug-only `WorkSerializer::IsRunningInWorkSerializer()` method

and use it in client_channel to verify that subchannels are destroyed in

the `WorkSerializer`

- note: this mechanism uses `std::thread::id`, so I had to exclude
work_serializer.cc from the core_banned_constructs check

- fix `WorkSerializer::Run()` to unref the callback before releasing

ownership of the `WorkSerializer`, so that any refs captured by the

`std::function<>` will be released before releasing ownership

- fix the WRR timer callback to hop into the `WorkSerializer` to release

its ref to the picker, since that transitively releases refs to

subchannels

- fix subchannel connectivity state notifications to unref the watcher

inside the `WorkSerializer`, since the watcher often transitively holds

refs to subchannels
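
The thread-id check and the unref ordering described above can be sketched roughly as follows. This is a simplified Python analogue, not the C++ `WorkSerializer`: the class layout and method names are assumptions, and Python reference dropping only approximates unreffing a `std::function<>`.

```python
import collections
import threading
from typing import Callable, Deque, Optional


class WorkSerializer:
    """Runs queued callbacks one at a time on whichever thread owns the queue."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._queue: Deque[Callable[[], None]] = collections.deque()
        self._running_thread: Optional[int] = None  # owner's thread id

    def run(self, callback: Callable[[], None]) -> None:
        with self._lock:
            self._queue.append(callback)
            if self._running_thread is not None:
                return  # the current owner will drain this callback
            self._running_thread = threading.get_ident()
        while True:
            with self._lock:
                if not self._queue:
                    # Ownership is released only after every callback
                    # reference held by this loop has been dropped.
                    self._running_thread = None
                    return
                callback = self._queue.popleft()
            callback()
            # Drop the callback (and anything it captured) while this
            # thread still owns the serializer.
            del callback

    def is_running_in_work_serializer(self) -> bool:
        """Debug-style check: is the caller the thread draining the queue?"""
        return self._running_thread == threading.get_ident()
```

Code that must only run inside the serializer, such as subchannel destruction in the description above, could assert `is_running_in_work_serializer()` in debug builds.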

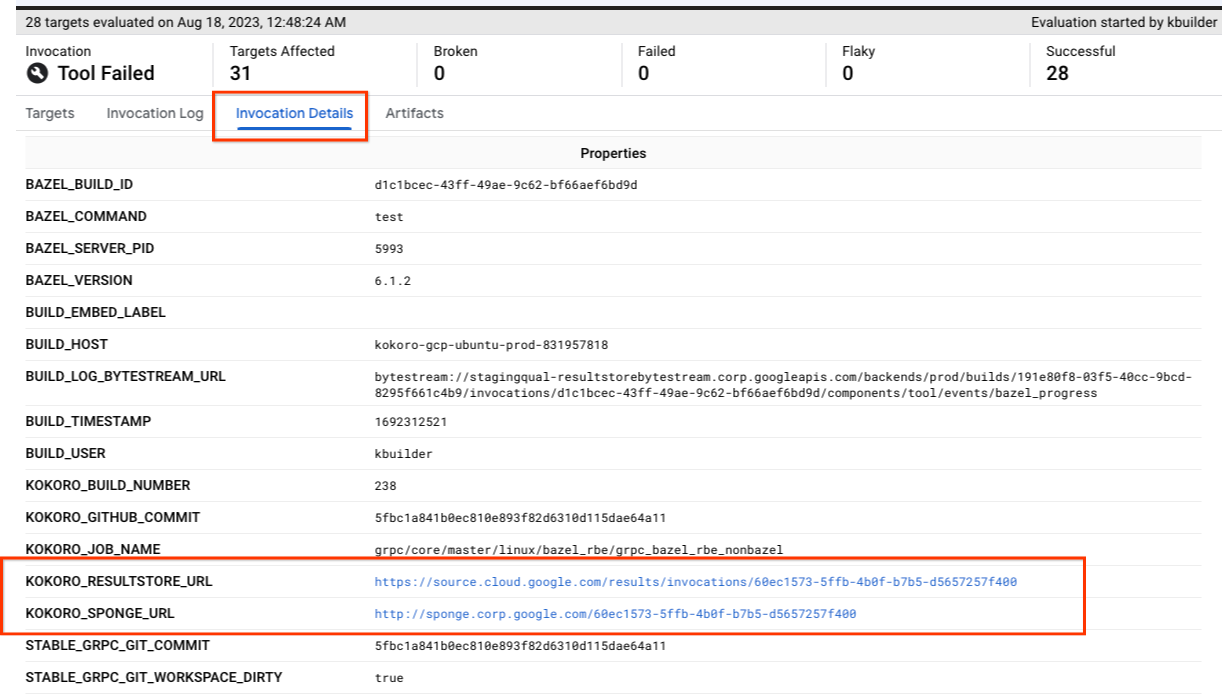

Basically run each of the subtests (buildtest, distribtest_cpp,

distribtest_python) as a separate bazel target.

- currently the bazel distribtest are the slowest targets in

grpc_bazel_rbe_nonbazel

- the shards are basically independent tests anyway

- when split into multiple targets, they each get a separate target log,
so it's easier to debug issues since there aren't multiple bazel invocations
in each log.

Currently the bazel invocations provide a link back to the original

kokoro jobs for resultstore and sponge UIs.

This adds another back link to Fusion UI, which has the advantage of

- being able to navigate to the kokoro job overview

- there is a button to trigger a new build (in case the job needs to be

re-run).

Could not reproduce the flake, but I've changed the test (which is very old) to use some
of our more modern helper functions that have saner timeouts.

Also re-add a `return` statement that was accidentally removed in

#33753, which I noticed while working on this. Its absence doesn't cause

a real problem, but it does cause us to needlessly trigger a duplicate

connection attempt or report a duplicate CONNECTING update in some

cases.

- Upgrade bazel

- Reduce the number of places where bazel version needs to be upgraded

in future.

- also make sure the list of bazel versions to test by bazelified tests

is loaded from supported_versions.txt (it was hardcoded before).

- ~~Try upgrading windows RBE build to bazel 6.3.2 as well.~~

The core idea:

- the source of truth for supported bazel versions is in

`bazel/supported_versions.txt`

- the first version listed in `bazel/supported_versions.txt` is
considered to be the "primary" bazel version and is going to be used in
most places throughout the repo.

- use templates to include the primary bazel version in testing
dockerfiles and in a newly introduced `.bazelversion` file (which gets
loaded by our existing `tools/bazel` wrapper).
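
The "first entry is primary" convention can be sketched as below. The sketch assumes the file holds one version per line; the helper names are hypothetical and this is not the repo's actual template code.

```python
from typing import List


def parse_supported_versions(text: str) -> List[str]:
    """Return the non-empty lines of a supported_versions.txt-style file."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def primary_version(text: str) -> str:
    """The first listed version is treated as the primary one."""
    versions = parse_supported_versions(text)
    if not versions:
        raise ValueError("no bazel versions listed")
    return versions[0]
```

Keeping a single ordered list means a version bump touches one file, and everything derived from it (dockerfiles, `.bazelversion`, the list of versions to test) is regenerated from that list.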

~~Supersedes https://github.com/grpc/grpc/pull/33880~~

Proposed alternative to https://github.com/grpc/grpc/pull/34024.

This version has a simpler, faster busy-count implementation based on a
sharded set of atomic counts: fast increment/decrement operations,
relatively slower summation of total counts (which needs to happen much
less frequently).
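
The trade-off can be illustrated with a small sketch. Python has no atomic integers, so this analogue uses one lock per shard in place of an atomic; the class name, shard count, and shard-selection rule are assumptions, not the C++ implementation.

```python
import threading


class ShardedCounter:
    """Counter split across shards so concurrent updates rarely contend."""

    def __init__(self, num_shards: int = 16) -> None:
        self._counts = [0] * num_shards
        self._locks = [threading.Lock() for _ in range(num_shards)]

    def _shard(self) -> int:
        # Assumption: pick a shard from the calling thread's id.
        return threading.get_ident() % len(self._counts)

    def increment(self) -> None:
        i = self._shard()
        with self._locks[i]:  # touches a single shard: the fast path
            self._counts[i] += 1

    def decrement(self) -> None:
        i = self._shard()
        with self._locks[i]:
            self._counts[i] -= 1

    def total(self) -> int:
        # Slow path: visits every shard. An individual shard may be
        # negative if increments and decrements came from different threads.
        total = 0
        for lock, idx in zip(self._locks, range(len(self._counts))):
            with lock:
                total += self._counts[idx]
        return total
```

Updates only ever touch one shard, while reading the total costs a pass over all shards, which is acceptable when the total is read far less often than it is updated.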

WRR is showing a very high CPU cost relative to previous solutions, and

it's unclear why this is.

Add two metrics that should help us see the shape of the subchannel sets

that are being passed to high cost systems in order to confirm/deny

theories.

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

- Remove no-longer-needed workaround for b/275571385 (and switch back to

using docker image from GAR)

- regenerate RBE linux toolchain for bazel 6.1.2 while at it.