Currently, we are not very consistent in what we assume the initial

state of an LB policy will be and whether or not we assume that it will

immediately report a new picker when it gets its initial address update;

different parts of our code make different assumptions. This PR

establishes the convention that LB policies will be assumed to start in

state CONNECTING and will *not* be assumed to report a new picker

immediately upon getting their initial address update, and we now assume

that convention everywhere consistently.
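
As a rough illustration of the convention, the sketch below models how a parent might track a child policy. `ChildPolicyTracker`, `Picker`, and the enum are hypothetical names invented for this example; they are not gRPC's real LB policy API.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified model; not gRPC's real LoadBalancingPolicy API.
enum class ConnectivityState { kIdle, kConnecting, kReady, kTransientFailure };

struct Picker {
  std::string description;
};

// Parent-side view of a child policy under the convention described above.
class ChildPolicyTracker {
 public:
  // Convention 1: a freshly created policy is assumed to be CONNECTING.
  ConnectivityState state() const { return state_; }

  // Convention 2: no picker is assumed to exist until the child reports one.
  const std::optional<Picker>& picker() const { return picker_; }

  // Delivering addresses does not change what the parent assumes.
  void OnAddressUpdate(std::vector<std::string> addresses) {
    addresses_ = std::move(addresses);
  }

  // Only an explicit report from the child changes the state and the picker.
  void OnChildReport(ConnectivityState state, Picker picker) {
    state_ = state;
    picker_ = std::move(picker);
  }

 private:
  ConnectivityState state_ = ConnectivityState::kConnecting;
  std::optional<Picker> picker_;
  std::vector<std::string> addresses_;
};
```

In this model, a parent that reads `picker()` right after `OnAddressUpdate()` sees an empty optional, so it has to wait for the child's first report before using it.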

This is a preparatory step for changing policies like round_robin to

delegate to pick_first, which I'm working on in #32692. As part of that

change, we need pick_first to not report a connectivity state until it

actually sees the connectivity state of the underlying subchannels, so

that round_robin knows when to swap over to a new child list without

reintroducing the problem fixed in #31939.

This fixes the following error:

```
test/core/security/grpc_authorization_engine_test.cc:88:32: error: unknown type name 'Json'; did you mean 'experimental::Json'?
  ParseAuditLoggerConfig(const Json&) override {
                               ^~~~
                               experimental::Json
```

This makes the JSON API visible as part of the C-core API, but in the

`experimental` namespace. It will be used as part of various

experimental APIs that we will be introducing in the near future, such

as the audit logging API.

The WireWriter implementation schedules actions to be run by `ExecCtx`. We
should flush pending actions before destructing
`end2end_testing::g_transaction_processor`, which needs to be alive to
handle the scheduled actions. Otherwise, we get a heap-use-after-free
error because the testing fixture
(`end2end_testing::g_transaction_processor`) is destructed before all
the scheduled actions are run.

This lowers the end2end binder transport test failure rate from 0.23% to
0.15%, according to an internal tool that runs the test 15000 times
under various configurations.
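
The teardown ordering described above can be sketched as follows. `ActionQueue` and `TransactionProcessor` are hypothetical stand-ins written for this example; they are not the real `ExecCtx` or the binder test fixture.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <queue>
#include <utility>

// Hypothetical stand-in for a queue of deferred actions (not the real ExecCtx).
class ActionQueue {
 public:
  void Schedule(std::function<void()> action) {
    pending_.push(std::move(action));
  }

  // Runs every pending action, including ones scheduled while flushing.
  void Flush() {
    while (!pending_.empty()) {
      std::function<void()> action = std::move(pending_.front());
      pending_.pop();
      action();
    }
  }

  bool empty() const { return pending_.empty(); }

 private:
  std::queue<std::function<void()>> pending_;
};

// Hypothetical stand-in for the test fixture that handles scheduled actions.
class TransactionProcessor {
 public:
  void Handle() { ++handled_; }
  int handled() const { return handled_; }

 private:
  int handled_ = 0;
};

// Tears the fixture down safely: flush first, destroy second.
// Returns how many actions the processor handled before it was destroyed.
int FlushThenDestroy(ActionQueue& queue,
                     std::unique_ptr<TransactionProcessor>& processor) {
  queue.Flush();  // Scheduled actions still see a live processor.
  const int handled = processor->handled();
  processor.reset();  // Safe: nothing pending can reference it any more.
  return handled;
}
```

Reversing the two steps in `FlushThenDestroy` would leave queued actions holding a dangling pointer, which is the use-after-free pattern the description refers to.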

This PR implements a work-stealing thread pool for use inside

EventEngine implementations. Because of historical risks here, I've

guarded the new implementation behind an experiment flag:

`GRPC_EXPERIMENTS=work_stealing`. Current default behavior is the

original thread pool implementation.
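
For readers unfamiliar with the technique, here is a minimal sketch of the general work-stealing idea: each worker prefers its own queue and takes work from another worker's queue only when its own is empty. `WorkQueue` and `NextTask` are hypothetical names for this example, and the PR's actual implementation may differ in every detail.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <mutex>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical sketch of the general technique, not the PR's implementation.
using Task = std::function<void()>;

class WorkQueue {
 public:
  void Push(Task task) {
    std::lock_guard<std::mutex> lock(mu_);
    tasks_.push_back(std::move(task));
  }

  // The owning worker takes its most recently added task.
  std::optional<Task> PopLocal() {
    std::lock_guard<std::mutex> lock(mu_);
    if (tasks_.empty()) return std::nullopt;
    Task task = std::move(tasks_.back());
    tasks_.pop_back();
    return task;
  }

  // A thief takes the oldest task from the opposite end.
  std::optional<Task> Steal() {
    std::lock_guard<std::mutex> lock(mu_);
    if (tasks_.empty()) return std::nullopt;
    Task task = std::move(tasks_.front());
    tasks_.pop_front();
    return task;
  }

 private:
  std::mutex mu_;
  std::deque<Task> tasks_;
};

// Finds work for worker `self`: its own queue first, then the other queues.
std::optional<Task> NextTask(std::vector<WorkQueue>& queues, std::size_t self) {
  if (auto task = queues[self].PopLocal()) return task;
  for (std::size_t i = 1; i < queues.size(); ++i) {
    const std::size_t victim = (self + i) % queues.size();
    if (auto task = queues[victim].Steal()) return task;
  }
  return std::nullopt;
}
```

Taking from opposite ends is a common design choice in work-stealing schedulers because it keeps the owner and the thieves mostly out of each other's way; a single mutex per queue is used here only to keep the sketch short.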

Benchmarks look very promising:

```
bazel test \
  --test_timeout=300 \
  --config=opt -c opt \
  --test_output=streamed \
  --test_arg='--benchmark_format=csv' \
  --test_arg='--benchmark_min_time=0.15' \
  --test_arg='--benchmark_filter=_FanOut' \
  --test_arg='--benchmark_repetitions=15' \
  --test_arg='--benchmark_report_aggregates_only=true' \
  test/cpp/microbenchmarks:bm_thread_pool
```

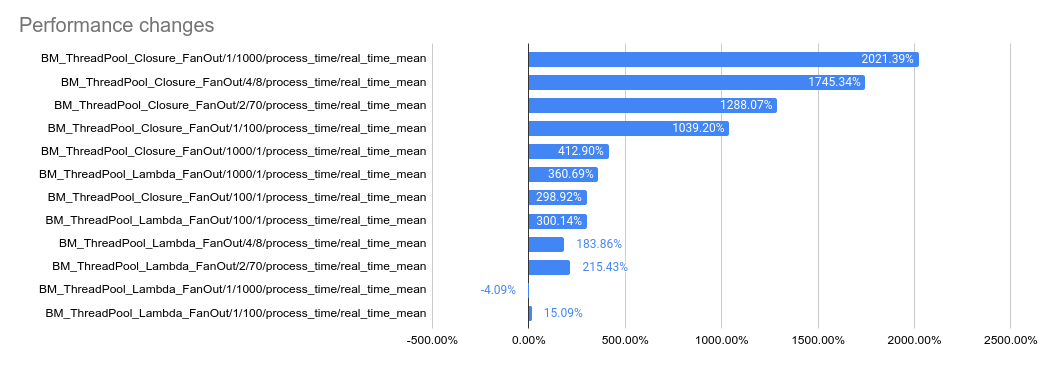

2023-05-04: `bm_thread_pool` benchmark results on my local machine (64

core ThreadRipper PRO 3995WX, 256GB memory), comparing this PR to

master:

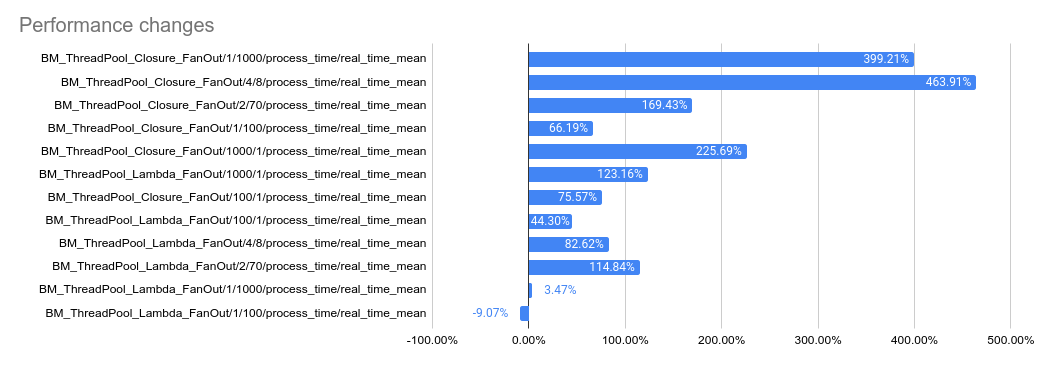

2023-05-04: `bm_thread_pool` benchmark results in the Linux RBE

environment (unsure of machine configuration, likely small), comparing

this PR to master.

---------

Co-authored-by: drfloob <drfloob@users.noreply.github.com>

One TXT lookup query can return multiple TXT records (see the following

example). `EventEngine::DNSResolver` should return all of them to let

the caller (e.g. `event_engine_client_channel_resolver`) decide which

one they would use.

```
$ dig TXT wikipedia.org

; <<>> DiG 9.18.12-1+build1-Debian <<>> TXT wikipedia.org
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 49626
;; flags: qr rd ra; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 512
;; QUESTION SECTION:
;wikipedia.org. IN TXT

;; ANSWER SECTION:
wikipedia.org. 600 IN TXT "google-site-verification=AMHkgs-4ViEvIJf5znZle-BSE2EPNFqM1nDJGRyn2qk"
wikipedia.org. 600 IN TXT "yandex-verification: 35c08d23099dc863"
wikipedia.org. 600 IN TXT "v=spf1 include:wikimedia.org ~all"
```

Note that this change also makes us deviate from iomgr's DNSResolver API,
which uses `std::string` as the result type.
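
To illustrate why returning every record is useful, the sketch below shows a caller picking one record out of the full list. `SelectTxtRecord` is a hypothetical helper written for this example, and the prefix it filters on is whatever the caller cares about; neither is part of the `EventEngine::DNSResolver` API.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <vector>

// Hypothetical caller-side helper: returns the payload of the first TXT record
// that starts with `prefix`, or std::nullopt if no record matches.
std::optional<std::string> SelectTxtRecord(
    const std::vector<std::string>& records, const std::string& prefix) {
  for (const std::string& record : records) {
    if (record.compare(0, prefix.size(), prefix) == 0) {
      return record.substr(prefix.size());
    }
  }
  return std::nullopt;
}
```

With the three records from the `dig` output above, a caller asking for the `v=spf1 ` prefix gets back `include:wikimedia.org ~all`, while a caller asking for a prefix that is absent gets an empty result instead of an arbitrary record.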

<!--

If you know who should review your pull request, please assign it to

that

person, otherwise the pull request would get assigned randomly.

If your pull request is for a specific language, please add the

appropriate

lang label.

-->

Reverts grpc/grpc#32924. This breaks the build again, unfortunately.

From `test/core/event_engine/cf:cf_engine_test`:

```

error: module .../grpc/test/core/event_engine/cf:cf_engine_test does not depend on a module exporting 'grpc/support/port_platform.h'

```

@sampajano I recommend looking into CI tests to catch iOS problems

before merging. We can enable EventEngine experiments in the CI

generally once this PR lands, but this broken test is not one of those

experiments. A normal build should have caught this.

cc @HannahShiSFB

Reverts grpc/grpc#33002. Breaks internal builds:

`.../privacy_context:filters does not depend on a module exporting '.../src/core/lib/channel/context.h'`

Change call attributes to be stored in a `ChunkedVector` instead of

`std::map<>`, so that the storage can be allocated on the arena. This

means that we're now doing a linear search instead of a map lookup, but

the total number of attributes is expected to be low enough that that

should be okay.

Also, we now hide the actual data structure inside of the

`ServiceConfigCallData` object, which required some changes to the

`ConfigSelector` API. Previously, the `ConfigSelector` would return a

`CallConfig` struct, and the client channel would then use the data in

that struct to populate the `ServiceConfigCallData`. This PR changes

that such that the client channel creates the `ServiceConfigCallData`

before invoking the `ConfigSelector`, and it passes the

`ServiceConfigCallData` into the `ConfigSelector` so that the

`ConfigSelector` can populate it directly.
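
A minimal sketch of the new shape, under loud assumptions: `std::vector` stands in for the arena-backed `ChunkedVector`, string keys stand in for whatever key type the real code uses, and the method names and signatures are simplified guesses rather than the actual API.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified version of the call data object. The storage is
// private, so callers never depend on the container type behind it.
class ServiceConfigCallData {
 public:
  // Overwrites an existing attribute or appends a new one.
  void SetCallAttribute(const std::string& name, std::string value) {
    for (auto& attribute : attributes_) {
      if (attribute.first == name) {
        attribute.second = std::move(value);
        return;
      }
    }
    attributes_.emplace_back(name, std::move(value));
  }

  // Linear search: acceptable while the number of attributes stays small.
  const std::string* GetCallAttribute(const std::string& name) const {
    for (const auto& attribute : attributes_) {
      if (attribute.first == name) return &attribute.second;
    }
    return nullptr;
  }

  std::size_t attribute_count() const { return attributes_.size(); }

 private:
  std::vector<std::pair<std::string, std::string>> attributes_;
};

// Hypothetical selector interface: it fills in a caller-owned object instead
// of returning a separate struct for the caller to copy from.
class ConfigSelector {
 public:
  virtual ~ConfigSelector() = default;
  virtual bool GetCallConfig(const std::string& path,
                             ServiceConfigCallData* call_data) = 0;
};

// Example selector that tags calls to one made-up method.
class ExampleSelector : public ConfigSelector {
 public:
  bool GetCallConfig(const std::string& path,
                     ServiceConfigCallData* call_data) override {
    if (path != "/pkg.Service/Method") return false;
    call_data->SetCallAttribute("cluster", "cluster_a");
    return true;
  }
};
```

The caller creates the `ServiceConfigCallData` first and hands a pointer to the selector, so there is no intermediate struct to copy out of and the storage can later be swapped without touching selector implementations.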

The protection is added in `xds_http_rbac_filter.cc`, where we read the
new field. With the feature disabled there, nothing in files such as
`xds_audit_logger_registry.cc` will be invoked.

Makes some awkward fixes to the compression filter, call, and connected
channel to preserve the semantics that the tests now uphold.

Once the fixes described here

https://github.com/grpc/grpc/blob/master/src/core/lib/channel/connected_channel.cc#L636

are in, this gets a lot less ad hoc, but that's likely to happen only
after promises land on both the client and server side.

We specifically need special handling for server-side cancellation in
response to reads with the inproc transport, which doesn't track
cancellation thoroughly enough itself.


---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>


1. `GrpcAuthorizationEngine` creates the logger from the given config in

its ctor.

2. `Evaluate()` invokes audit logging when needed.
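
The two steps above can be sketched as follows. All types here (`AuditConfig`, `AuditLogger`, `VectorLogger`, `AuthorizationEngine`) are hypothetical simplifications for illustration, and the authorization decision is passed in as a parameter instead of being computed from a policy.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <vector>

// Hypothetical, simplified types; not the real GrpcAuthorizationEngine API.
enum class AuditCondition { kNone, kOnDeny, kOnAllow, kOnDenyAndAllow };

class AuditLogger {
 public:
  virtual ~AuditLogger() = default;
  virtual void Log(const std::string& rpc_method, bool allowed) = 0;
};

// Example logger that appends entries to a caller-owned vector.
class VectorLogger : public AuditLogger {
 public:
  explicit VectorLogger(std::vector<std::string>* sink) : sink_(sink) {}
  void Log(const std::string& rpc_method, bool allowed) override {
    sink_->push_back(rpc_method + (allowed ? ":allow" : ":deny"));
  }

 private:
  std::vector<std::string>* sink_;
};

struct AuditConfig {
  AuditCondition condition = AuditCondition::kNone;
  std::function<std::unique_ptr<AuditLogger>()> logger_factory;
};

class AuthorizationEngine {
 public:
  // Step 1: the logger is created once, in the constructor, from the config.
  explicit AuthorizationEngine(const AuditConfig& config)
      : condition_(config.condition),
        logger_(config.logger_factory ? config.logger_factory() : nullptr) {}

  // Step 2: Evaluate() logs only when the decision matches the condition.
  // `allowed` stands in for the result of real policy matching.
  bool Evaluate(const std::string& rpc_method, bool allowed) {
    const bool log_allow = condition_ == AuditCondition::kOnAllow ||
                           condition_ == AuditCondition::kOnDenyAndAllow;
    const bool log_deny = condition_ == AuditCondition::kOnDeny ||
                          condition_ == AuditCondition::kOnDenyAndAllow;
    if (logger_ != nullptr && (allowed ? log_allow : log_deny)) {
      logger_->Log(rpc_method, allowed);
    }
    return allowed;
  }

 private:
  AuditCondition condition_;
  std::unique_ptr<AuditLogger> logger_;
};
```

With the condition set to `kOnDeny`, an allowed call produces no log entry and a denied call produces exactly one.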

---------

Co-authored-by: rockspore <rockspore@users.noreply.github.com>

Whilst the per-CPU counters probably help single-channel contention, we
think it's likely that they're a pessimization when taken fleetwide.


Add audit condition and audit logger config into `grpc_core::Rbac`.

Support translation of audit logging options from authz policy to it.

Audit logging options in an authz policy look like:

```json
{
  "audit_logging_options": {
    "audit_condition": "ON_DENY",
    "audit_loggers": [
      {
        "name": "logger",
        "config": {},
        "is_optional": false
      }
    ]
  }
}
```

which is consistent with what's in the xDS RBAC proto but a little

flattened.
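
As a sketch of what the translated form might look like, the structs below mirror the JSON fields shown above. The struct and function names are hypothetical (they are not the actual `grpc_core::Rbac` members), the set of accepted condition strings is assumed to match the xDS RBAC enum, and JSON parsing itself is left out.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical shapes mirroring the JSON fields; not the real Rbac members.
enum class AuditCondition { kNone, kOnDeny, kOnAllow, kOnDenyAndAllow };

struct AuditLoggerConfig {
  std::string name;
  std::string config_json;  // Raw "config" object, kept opaque here.
  bool is_optional = false;
};

struct AuditLoggingOptions {
  AuditCondition condition = AuditCondition::kNone;
  std::vector<AuditLoggerConfig> loggers;
};

// Maps the "audit_condition" string to the enum; unknown values are rejected.
// The accepted spellings are an assumption based on the xDS RBAC enum names.
std::optional<AuditCondition> ParseAuditCondition(const std::string& text) {
  if (text == "NONE") return AuditCondition::kNone;
  if (text == "ON_DENY") return AuditCondition::kOnDeny;
  if (text == "ON_ALLOW") return AuditCondition::kOnAllow;
  if (text == "ON_DENY_AND_ALLOW") return AuditCondition::kOnDenyAndAllow;
  return std::nullopt;
}

// Builds the options once the fields have been extracted by a JSON parser.
std::optional<AuditLoggingOptions> MakeOptions(
    const std::string& condition, std::vector<AuditLoggerConfig> loggers) {
  const std::optional<AuditCondition> parsed = ParseAuditCondition(condition);
  if (!parsed.has_value()) return std::nullopt;
  AuditLoggingOptions options;
  options.condition = *parsed;
  options.loggers = std::move(loggers);
  return options;
}
```

Rejecting unknown condition strings up front keeps a typo in the policy from silently disabling audit logging.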

---------

Co-authored-by: rockspore <rockspore@users.noreply.github.com>


Reverts grpc/grpc#32636

```
src/compiler/csharp_generator_helpers.h:25:7: error: no member named 'compiler' in namespace ...
src/compiler/csharp_generator_helpers.h:25:25: error: no member named 'csharp' in namespace 'compiler' ...
```

Added `base_namespace` experimental option to `grpc_csharp_plugin` as

this has been requested several times by

people not using `Grpc.Tools` to generate their code - see

https://github.com/grpc/grpc/issues/28663

Notes:

- it should not be used with `Grpc.Tools`. That has a different way of

handling duplicate proto file names in different directories. Using this

option will break those builds. It can only be used on the `protoc`

command line.

- it uses common code with the `base_namespace` option for C# in

`protoc`, which unfortunately has a slightly different name mangling

algorithm for converting proto file names to C# camel case names. This

only affects files with punctuation or numbers in the name. This should not matter unless you are expecting specific file names.

- See

https://protobuf.dev/reference/csharp/csharp-generated/#compiler_options

for an explanation of this option

Apply Obsolete attribute to deprecated services and methods in C#

generated code

Fix for https://github.com/grpc/grpc/issues/28597

- Deprecated support for enums and enum values is already fixed by

https://github.com/protocolbuffers/protobuf/pull/10520 but this is not

yet released. It is fixed in Protocol Buffers v22.0-rc1 but the gRPC

repo currently has 21.12 as the protocol buffers submodule.

- Deprecated support for messages and fields already exists in the

protocol buffers compiler.

The fix in this PR adds `Obsolete` attribute to classes and methods for

deprecated services and methods within services. e.g.

```proto
service Greeter {
  option deprecated=true; // service level deprecated

  // Sends a greeting
  rpc SayHello (HelloRequest) returns (HelloReply) {
    option deprecated=true; // method level deprecated
  }
}
```

I couldn't find any protocol buffers plugin tests to update. Tested

locally.

Audit logging APIs for both built-in loggers and third-party logger

implementations.

The C++ API uses `using` declarations that refer to the C-core APIs.

---------

Co-authored-by: rockspore <rockspore@users.noreply.github.com>


---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

Third-party loggers will be added in subsequent PRs once the logger

factory APIs are available to validate the configs here.

This registry is used in `xds_http_rbac_filter.cc` to generate service

config json.

The PR also creates a separate BUILD target for:

- chttp2 context list

- iomgr buffer_list

- iomgr internal errqueue

This would allow the context list to be included as standalone

dependencies for EventEngine implementations.

In order to help https://github.com/grpc/grpc/pull/32748, change the

test so that it tells us what the problem is in the logs.


As Protobuf is going to support Cord to reduce memory copies when
[de]serializing Cord fields, gRPC is going to leverage it. This
implementation is based on the internal one, but it's slightly modified
to use only the public APIs of Cord.

This test proves that `global_stats.IncrementHttp2MetadataSize(0)` works.


Initial bazel tests for C# protoc and grpc_protoc_plugin.

This initial test just generates code from the proto file and compares
the generated code against expected files.

I've put the tests in `test/csharp/codegen` as that is similar to where
the C++ tests are placed, but they could be moved to `src/csharp` if
that is a better place.

Further tests can be added once the initial framework for the tests is

agreed.

- Added `fuzzer_input.proto` and `NetworkInput` proto message

- Migrated client_fuzzer and server_fuzzer to proto fuzzer

- Migrated the existing corpus and verified that the code coverage (e.g.

chttp2) stays the same

We will probably need to cherry-pick this due to the number of files changed.


@sampajano


This bug occurred when the same xDS server was configured twice in the

same bootstrap config, once in an authority and again as the top-level

server. In that case, we were incorrectly failing to de-dup them and

were creating a separate channel for the LRS stream than the one that

already existed for the ADS stream. We fix this by canonicalizing the

server keys the same way in both cases.
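
The sketch below illustrates the general idea of keying a channel map by a canonical form. `XdsServer`, `CanonicalKey`, and `ChannelMap` are hypothetical names for this example, and the canonicalization shown (sorting and de-duplicating features) is an assumption; the real key format used by the xDS client is not described here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical, simplified description of an xDS server entry.
struct XdsServer {
  std::string server_uri;
  std::vector<std::string> server_features;
};

// Builds the same key for equivalent servers, regardless of which part of the
// bootstrap config they came from or how their features were ordered.
std::string CanonicalKey(XdsServer server) {
  std::sort(server.server_features.begin(), server.server_features.end());
  server.server_features.erase(
      std::unique(server.server_features.begin(), server.server_features.end()),
      server.server_features.end());
  std::string key = server.server_uri;
  for (const std::string& feature : server.server_features) {
    key += "|" + feature;
  }
  return key;
}

class ChannelMap {
 public:
  // Returns the id of the channel for `server`, creating a new one only if no
  // equivalent server has been seen before.
  int GetOrCreateChannel(const XdsServer& server) {
    auto [it, inserted] = channels_.emplace(CanonicalKey(server), next_id_);
    if (inserted) ++next_id_;
    return it->second;
  }

  std::size_t size() const { return channels_.size(); }

 private:
  std::map<std::string, int> channels_;
  int next_id_ = 0;
};
```

As long as every lookup goes through `CanonicalKey`, the ADS and LRS paths in this model end up sharing one channel for the same server.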

As a separate follow-up item, I will work on trying to find a better way

to key these maps that does not suffer from this kind of fragility.