Building out a new framing layer for chttp2.

The central idea here is to have the framing layer be solely responsible

for serialization of frames, and their deserialization - the framing

layer can reject frames that have invalid syntax - but the enacting of

what that frame means is left to a higher layer.
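
The split described above can be sketched as follows. This is a minimal Python illustration, not the chttp2 C++ code: `FrameHeader`, `serialize_header` and `parse_header` are hypothetical names, and the only thing taken as given is the standard 9-byte HTTP/2 frame header layout (24-bit length, 8-bit type, 8-bit flags, one reserved bit, 31-bit stream id). The parser rejects syntactically invalid input but takes no action on what the frame means.

```python
import struct
from dataclasses import dataclass

FRAME_HEADER_SIZE = 9  # bytes, per the HTTP/2 frame layout


@dataclass(frozen=True)
class FrameHeader:
    """Hypothetical value type: pure data, no behaviour attached."""
    length: int
    type: int
    flags: int
    stream_id: int


def serialize_header(header: FrameHeader) -> bytes:
    """Encode a header; refuses values that cannot be represented."""
    if not 0 <= header.length < (1 << 24):
        raise ValueError("length must fit in 24 bits")
    if not 0 <= header.stream_id < (1 << 31):
        raise ValueError("stream id must fit in 31 bits")
    # 24-bit big-endian length, then type, flags, and the stream id word.
    return struct.pack(">I", header.length)[1:] + struct.pack(
        ">BBI", header.type, header.flags, header.stream_id
    )


def parse_header(data: bytes) -> FrameHeader:
    """Decode a header; syntax errors raise, semantics are left to the caller."""
    if len(data) < FRAME_HEADER_SIZE:
        raise ValueError("truncated frame header")
    length = int.from_bytes(data[0:3], "big")
    frame_type, flags, stream_word = struct.unpack(">BBI", data[3:9])
    # The top bit of the stream word is reserved and ignored on receipt.
    return FrameHeader(length, frame_type, flags, stream_word & 0x7FFFFFFF)
```

A higher layer would then decide what to do with the returned `FrameHeader`; nothing in the parser mutates transport state.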

This class will become foundational for the promise conversion of chttp2

- by eliminating action from the parsing of frames we can reuse this

sensitive code.

Right now the new layer is inactive - there's a test that exercises it

relatively well, and not much more. In the next PRs I'll add an

experiment to enable using this layer or the existing code in the

writing and reading paths.

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

This is the initial implementation of the chaotic-good client transport

write path. There will be a follow-up PR to fulfill the read path.

<!--

If you know who should review your pull request, please assign it to

that

person, otherwise the pull request would get assigned randomly.

If your pull request is for a specific language, please add the

appropriate

lang label.

-->

Previously black wouldn't install, as it required newer `packaging`

package.

This fixes `pip install -r requirements-dev.txt`. In addition, `black`

in dev dependencies file is changed to `black[d]`, which bundles

`blackd` binary (["black as a

server"](https://black.readthedocs.io/en/stable/usage_and_configuration/black_as_a_server.html)).

The `work_stealing` experiment on its own is not very valuable, so let's

delete it and save CI resources. We have a benchmark for

`GRPC_EXPERIMENTS=event_engine_listener,work_stealing`, which is really

what we care about right now.

Fixes an issue where the automatically selected active context was picked up

as the context for `secondary_k8s_api_manager`.

This was introducing an error in GAMMA Baseline PoC

```

sys:1: ResourceWarning: unclosed <ssl.SSLSocket fd=4, family=AddressFamily.AF_INET, type=SocketKind.SOCK_STREAM, proto=0, laddr=('100.71.2.143', 56723), raddr=('35.199.174.232', 443)>

```

Here's how the secondary context incorrectly falls back to the

default context when `--secondary_kube_context` is not set:

```

k8s.py:142] Using kubernetes context "gke_grpc-testing_us-central1-a_psm-interop-security", active host: https://35.202.85.90

k8s.py:142] Using kubernetes context "None", active host: https://35.202.85.90

```

- Add Github Action to conditionally run PSM Interop unit tests:

- Only run when changes are detected in

`tools/run_tests/xds_k8s_test_driver` or any of the proto files used by

the driver

- Only run against PRs and pushes to `master`, `v1.*.*` branches

- Runs using `python3.9` and `python3.10`

- Ready to be added to the list of required GitHub checks

- Add `tools/run_tests/xds_k8s_test_driver/tests/unit/__main__.py` test

loader that recursively discovers all unit tests in

`tools/run_tests/xds_k8s_test_driver/tests/unit`

- Add basic coverage for `XdsTestClient` and `XdsTestServer` to verify

the test loader picks up all folders

Related:

- First unit tests without automated CI added in #34097

The tests are skipped incorrectly because `config.server_lang` is

incorrectly compared with the string value "java", instead of

`skips.Lang.JAVA`.

This has been broken since #26998.

```

xds_url_map_testcase.py:372] ----- Testing TestTimeoutInRouteRule -----

xds_url_map_testcase.py:373] Logs timezone: UTC

skips.py:121] Skipping TestConfig(client_lang='java', server_lang='java', version='v1.57.x')

[ SKIPPED ] setUpClass (timeout_test.TestTimeoutInRouteRule)

xds_url_map_testcase.py:372] ----- Testing TestTimeoutInApplication -----

xds_url_map_testcase.py:373] Logs timezone: UTC

skips.py:121] Skipping TestConfig(client_lang='java', server_lang='java', version='v1.57.x')

[ SKIPPED ] setUpClass (timeout_test.TestTimeoutInApplication)

```
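
The comparison bug can be reproduced in isolation. The sketch below uses a stand-in `Lang` enum (the real `skips.Lang` definition and the actual skip predicate are not shown here, so both helper functions are hypothetical): an enum member never compares equal to a plain string, so any check written against `"java"` silently takes the wrong branch.

```python
import enum


class Lang(enum.Enum):
    """Stand-in for skips.Lang; the members here are assumptions."""
    CPP = enum.auto()
    JAVA = enum.auto()


def is_java_buggy(server_lang: Lang) -> bool:
    # Bug: an enum member is never equal to a str, so this is always False.
    return server_lang == "java"


def is_java_fixed(server_lang: Lang) -> bool:
    # Fix: compare against the enum member itself.
    return server_lang == Lang.JAVA
```

With the buggy form, a condition such as "skip unless the server is Java" ends up skipping every configuration, including Java ones.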

This is to make sure upgrading the `packaging` module won't break our logic for

version-based test skipping.

This also fixes a small issue with `dev-` prefix - it should only be

allowed on the left side of the comparison.

Context: packaging module needs to be upgraded to be compatible with

`blackd`.

Update from gtcooke94:

This PR adds support to build gRPC and its tests with OpenSSL3. There were some

hiccups with tests as the tests with openssl haven't been built or exercised in a

few months, so they needed some work to fix.

Right now I expect all test files to pass except the following:

- h2_ssl_cert_test

- ssl_transport_security_utils_test

I confirmed locally that these tests fail with OpenSSL 1.1.1 as well,

so we are at least not introducing regressions. I've added compiler directives around these tests so they only build when using BoringSSL.

---------

Co-authored-by: Gregory Cooke <gregorycooke@google.com>

Co-authored-by: Esun Kim <veblush@google.com>

- add debug-only `WorkSerializer::IsRunningInWorkSerializer()` method

and use it in client_channel to verify that subchannels are destroyed in

the `WorkSerializer`

- note: this mechanism uses `std::thread::id`, so I had to exclude

work_serializer.cc from the core_banned_constructs check

- fix `WorkSerializer::Run()` to unref the callback before releasing

ownership of the `WorkSerializer`, so that any refs captured by the

`std::function<>` will be released before releasing ownership

- fix the WRR timer callback to hop into the `WorkSerializer` to release

its ref to the picker, since that transitively releases refs to

subchannels

- fix subchannel connectivity state notifications to unref the watcher

inside the `WorkSerializer`, since the watcher often transitively holds

refs to subchannels
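
The thread-id check and the "unref before releasing ownership" ordering from the list above can be sketched in Python. This is an analogue for illustration only, not the C++ `WorkSerializer`: the class below is hypothetical, uses `threading.get_ident()` in place of `std::thread::id`, and does not handle callbacks that raise.

```python
import threading
from collections import deque


class WorkSerializer:
    """Toy serializer: callbacks run one at a time, in FIFO order."""

    def __init__(self):
        self._lock = threading.Lock()
        self._queue = deque()
        self._running_thread = None  # id of the thread currently draining

    def run(self, callback):
        with self._lock:
            self._queue.append(callback)
            if self._running_thread is not None:
                return  # another caller (or an outer frame) is draining
            self._running_thread = threading.get_ident()
        self._drain()

    def _drain(self):
        while True:
            with self._lock:
                if not self._queue:
                    # Ownership is released only once nothing is left.
                    self._running_thread = None
                    return
                callback = self._queue.popleft()
            callback()
            # Drop the callback (and anything it captured) while we
            # still own the serializer, before ownership can be released.
            del callback

    def is_running_in_work_serializer(self):
        """Debug helper: True only on the thread that is draining."""
        return self._running_thread == threading.get_ident()
```

A destructor-style assertion can then call `is_running_in_work_serializer()` to verify that an object is being torn down from inside the serializer.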

Basically run each of the subtests (buildtest, distribtest_cpp,

distribtest_python) as a separate bazel target.

- currently the bazel distribtests are the slowest targets in

grpc_bazel_rbe_nonbazel

- the shards are basically independent tests anyway

- when split into multiple targets, they each get a separate target log

so it's easier to debug issues since there aren't multiple bazel invocations

in each log.

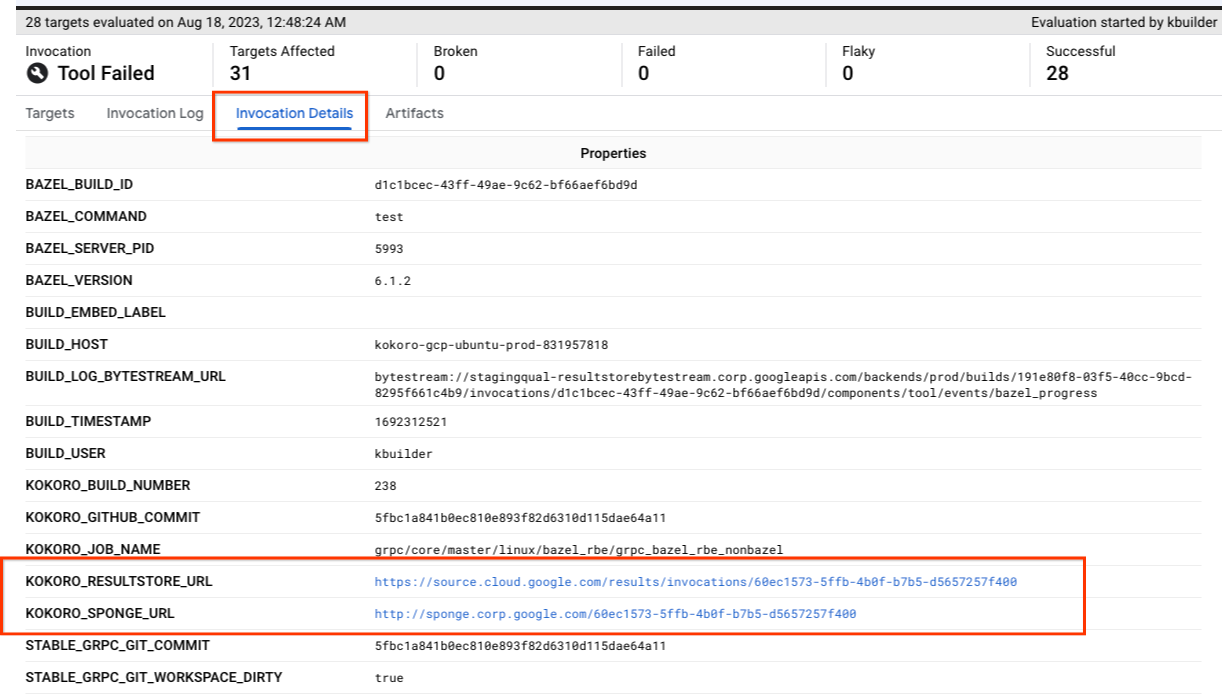

Currently the bazel invocations provide a link back to the original

kokoro jobs for resultstore and sponge UIs.

This adds another back link to Fusion UI, which has the advantage of

- being able to navigate to the kokoro job overview

- there is a button to trigger a new build (in case the job needs to be

re-run).

- Upgrade bazel

- Reduce the number of places where bazel version needs to be upgraded

in future.

- also make sure the list of bazel versions to test by bazelified tests

is loaded from supported_versions.txt (it was hardcoded before).

- ~~Try upgrading windows RBE build to bazel 6.3.2 as well.~~

The core idea:

- the source of truth for supported bazel versions is in

`bazel/supported_versions.txt`

- the first version listed in `bazel/supported_versions.txt` is

considered to be the "primary" bazel version and is going to be used in

most places throughout the repo.

- use templates to include the primary bazel version in testing

dockerfiles and in a newly introduced `.bazelversion` file (which gets

loaded by our existing `tools/bazel` wrapper).
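
The "first entry is primary" convention can be sketched as below. The file format (one version per line, blank lines ignored) and the function names are assumptions made for this sketch, and the version strings in the usage note are purely illustrative.

```python
def parse_supported_versions(text: str) -> list:
    """Return the non-empty lines of a supported_versions.txt-style file."""
    versions = [line.strip() for line in text.splitlines() if line.strip()]
    if not versions:
        raise ValueError("no supported versions listed")
    return versions


def primary_version(versions: list) -> str:
    """The first listed version is treated as the primary one."""
    return versions[0]
```

For example, given the illustrative contents `"6.3.2\n5.4.1\n"`, `primary_version(parse_supported_versions(...))` yields `"6.3.2"`, which a template could then write into `.bazelversion` and the testing dockerfiles.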

~~Supersedes https://github.com/grpc/grpc/pull/33880~~

Proposed alternative to https://github.com/grpc/grpc/pull/34024.

This version has a simpler, faster busy-count implementation based on a

sharded set of atomic counts: fast increment/decrement operations,

relatively slower summation of total counts (which need to happen much

less frequently).
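
The trade-off described above (cheap increments and decrements, a comparatively expensive sum) can be sketched like this. It is an illustration only: Python has no lock-free atomics, so each shard is guarded by its own lock, and the class name, shard count and shard-selection rule are all assumptions rather than the actual implementation.

```python
import threading


class ShardedBusyCounter:
    """Counter split across shards to reduce contention between threads."""

    def __init__(self, num_shards: int = 8):
        self._locks = [threading.Lock() for _ in range(num_shards)]
        self._counts = [0] * num_shards

    def _shard(self) -> int:
        # Pick a shard from the calling thread's id (an assumption).
        return threading.get_ident() % len(self._counts)

    def increment(self) -> None:
        i = self._shard()
        with self._locks[i]:
            self._counts[i] += 1

    def decrement(self) -> None:
        i = self._shard()
        with self._locks[i]:
            self._counts[i] -= 1

    def total(self) -> int:
        # Slow path: visits every shard. An individual shard may be
        # negative if the decrement ran on a different thread than the
        # increment; only the sum is meaningful.
        total = 0
        for lock, index in zip(self._locks, range(len(self._counts))):
            with lock:
                total += self._counts[index]
        return total
```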

WRR is showing a very high CPU cost relative to previous solutions, and

it's unclear why this is.

Add two metrics that should help us see the shape of the subchannel sets

that are being passed to high cost systems in order to confirm/deny

theories.

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

Needs https://github.com/grpc/grpc/pull/34035 to be merged first. With

a newer ccache available in the gcc12 test image, the build is now

faster so we can reenable the test.

This should get the benchmarks running again. The dotnet benchmark is

broken (unclear if it's still necessary), and the grpc-go benchmark

build currently fails. The go benchmark should be re-enabled when the

dockerfiles are fixed. The rest of the dotnet benchmark configuration /

artifacts should be deleted or fixed as well. @jtattermusch

Based on https://github.com/grpc/grpc/pull/34033

Bunch of cleanup and rebuilding many docker images from scratch

- consolidate the workaround for "dubious ownership" issue reported by

git. Other team members have run into this recently and used similar but

not identical workarounds so some cleanup is due.

- rebuilding many images increases the chance that we fix the "dubious

ownership" git issue early on rather than later on in the one-at-a-time

fashion in the future (and the former will prevent many team members from

wasting time on this weird issue).

- Newer version of ccache is needed for some portability tests to be

able to benefit from caching (e.g. the GCC 12 portability test to get

benefits of local disk caching) - this is a prerequisite for reenabling

the bazelified gcc12 portability test.

- upgrade node interop images to debian:11 (since debian jessie is long

past EOL).

- Extract build metadata for some external dependencies from bazel

build. This is achieved by letting extract_metadata_from_bazel_xml.py

analyze some external libraries and sources. The logic is basically the

same as for internal libraries, I only needed to teach

extract_metadata_from_bazel_xml.py which external libraries it is

allowed to analyze.

* currently, the list of source files is automatically determined for

`z`, `upb`, `re2` and `gtest` dependencies (at least for the case where

we're building in "embedded" mode - e.g. mostly native extensions for

python, php, ruby etc. - cmake has the ability to replace some of these

dependencies by actual cmake dependency.)

- Eliminate the need for manually written gen_build_yaml.py for some

dependencies.

- Make the info on target dependencies in build_autogenerated.yaml more

accurate and complete. Until now, there were some dependencies that

were allowed to show up in build_autogenerated.yaml and some that were

being skipped. This made generating the CMakeLists.txt and Makefile

quite confusing (since some dependencies are being explicitly mentioned

and some had to be assumed by the build system).

- Overhaul the Makefile

* the Makefile is currently only used internally (e.g. for ruby and PHP

builds)

* until now, the makefile wasn't really using the info about which

targets depend on what libraries, but it was effectively hardcoding the

dependency data (by magically "knowing" what is the list of all the

stuff that e.g. "grpc" depends on).

* After the overhaul, the Makefile.template now actually looks at the

library dependencies and uses them when generating the makefile. This

gives a more correct and easier to maintain makefile.

* since csharp is no longer on the master branch, remove all mentions of

"csharp" targets in the Makefile.

Other notable changes:

- make extract_metadata_from_bazel_xml.py capable of resolving workspace

bind() rules (so that it knows the real name of the target that is

referred to as e.g. `//external:xyz`)

TODO:

- [DONE] ~~pkgconfig C++ distribtest~~

- [DONE] ~~update third_party/README to reflect changes in how some deps

get updated now.~~

Planned followups:

- cleanup naming of some targets in build metadata and buildsystem

templates: libssl vs boringssl, ares vs cares etc.

- further cleanup of Makefile

- further cleanup of CMakeLists.txt

- remove the need for manually hardcoding extra metadata for targets in

build_autogenerated.yaml. Either add logic that determines the

properties of targets automatically, or use metadata from bazel BUILD.

Reintroduce https://github.com/grpc/grpc/pull/33959.

I added a fix for the python arm64 build (which is the reason why the

change has been reverted earlier).

Our current implementations of Join and TryJoin leverage some complicated

template stuff to work, which makes them hard to maintain. I've been

thinking about ways to simplify that for some time and had something

like this in mind - using a code generator that's at least a little more

understandable to code generate most of the complexity into a file that

is checkable.

Concurrently - I have a cool optimization in mind - but it requires that

we can move promises after polling, which is a contract change. I'm

going to work through the set of primitives we have in the coming weeks

and change that contract to enable the optimization.

---------

Co-authored-by: ctiller <ctiller@users.noreply.github.com>

The types `google::api::expr::v1alpha1` are available in

`"@com_google_googleapis//google/api/expr/v1alpha1:expr_proto"` and not

`"google_type_expr_upb"`

Disables the warning produced by kubernetes/client/rest.py calling the

deprecated `urllib3.response.HTTPResponse.getheaders`,

`urllib3.response.HTTPResponse.getheader` methods:

```

venv-test/lib/python3.9/site-packages/kubernetes/client/rest.py:44: DeprecationWarning: HTTPResponse.getheaders() is deprecated and will be removed in urllib3 v2.1.0. Instead access HTTPResponse.headers directly.

return self.urllib3_response.getheaders()

```

This issue was introduced by openapi-generator, and solved in `v6.4.0`. To

fix the issue properly, kubernetes/python folks need to regenerate the

library using newer openapi-generator. The most recent release `v27.2.0`

still used openapi-generator

[`v4.3.0`](https://github.com/kubernetes-client/python/blob/v27.2.0/kubernetes/.openapi-generator/VERSION).

Since they release twice a year, and given the two-major-version gap

in openapi-generator, the fix may take a while.

Created an issue in their repo:

https://github.com/kubernetes-client/python/issues/2101.
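
One way to silence just this warning is a targeted filter from the standard `warnings` module. The sketch below is an assumption about how such a filter could look, not the change made in this PR; the function name is hypothetical, and the regular expression is written against the message text quoted above.

```python
import warnings


def silence_urllib3_getheader_warnings() -> None:
    """Ignore the getheader()/getheaders() deprecation notices only."""
    warnings.filterwarnings(
        "ignore",
        # Matched against the start of the warning message.
        message=r"HTTPResponse\.getheaders?\(\) is deprecated",
        category=DeprecationWarning,
    )
```

Matching on the message keeps other `DeprecationWarning`s visible, so unrelated deprecations still surface in test output.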

Not adding CMake support yet

Addresses some issues of the initial triage hint PR:

https://github.com/grpc/grpc/pull/33898.

1. Print unhealthy backend name before the health info - previously it

was unclear which backend's health status was being dumped

2. Add missing `retry_err.add_note(note)` calls

3. Turn off the highlighter in triager hints, which isn't rendered

properly in the stack trace saved to junit.xml